Ruping Zou

Self-Adaptive Partial Domain Adaptation

Sep 18, 2021

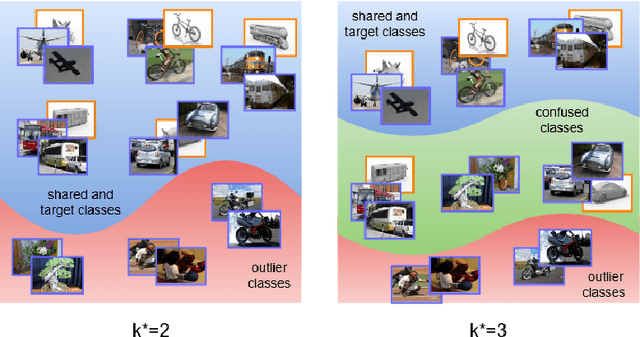

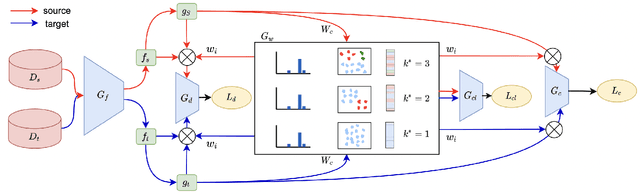

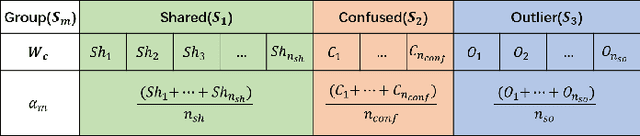

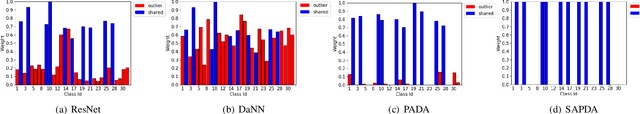

Abstract: Partial domain adaptation (PDA) addresses a more practical cross-domain learning problem in which the target label space is assumed to be a subset of the source label space. However, the mismatched label spaces cause significant negative transfer. One traditional solution uses soft weights to increase the weights of the shared source classes and reduce those of the source outlier classes, but it still learns features from the outliers, which leads to negative transfer. The other mainstream idea is to split the source domain into shared and outlier parts using hard binary weights, but this cannot correct shared and outlier classes that have become entangled. In this paper, we propose an end-to-end Self-Adaptive Partial Domain Adaptation (SAPDA) network. A class weight evaluation mechanism is introduced to dynamically self-rectify the weights of shared, outlier, and confused classes, so that samples with higher confidence receive larger weights. This also greatly reduces the negative transfer caused by the label space mismatch. Moreover, our strategy measures the transferability of samples in a broader sense, so our method also achieves competitive results on the unsupervised domain adaptation (DA) task. Extensive experiments on multiple benchmarks demonstrate the effectiveness of SAPDA.
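To make the two baseline weighting schemes mentioned in the abstract concrete, here is a minimal sketch. It is not the SAPDA mechanism, whose details the abstract does not give. It assumes that class weights are estimated by averaging a classifier's predicted probabilities over target samples, a common choice in PDA work. The function names, the max-normalization, and the 0.5 threshold are all hypothetical and used only for illustration.

```python
def soft_class_weights(target_probs):
    """Soft weights: mean predicted probability per source class over
    target samples, scaled so the largest weight is 1 (assumed scheme)."""
    n = len(target_probs)
    num_classes = len(target_probs[0])
    mean = [sum(row[c] for row in target_probs) / n for c in range(num_classes)]
    top = max(mean)
    return [m / top for m in mean]


def hard_class_weights(soft_weights, threshold=0.5):
    """Hard binary weights: 1.0 for classes treated as shared, 0.0 for
    classes treated as outliers. The threshold is an assumed value."""
    return [1.0 if w >= threshold else 0.0 for w in soft_weights]


# Toy predictions over 4 source classes; the target data only contains
# classes 0 and 1, so classes 2 and 3 play the role of source outliers.
target_probs = [
    [0.70, 0.20, 0.05, 0.05],
    [0.10, 0.80, 0.05, 0.05],
    [0.60, 0.30, 0.05, 0.05],
    [0.20, 0.70, 0.05, 0.05],
]

soft = soft_class_weights(target_probs)
hard = hard_class_weights(soft)
print(soft)
print(hard)
```

In this toy case the soft weights for the outlier classes are small but nonzero, so those classes still contribute to training. The hard weights remove them entirely, but a class placed on the wrong side of the threshold cannot be recovered. These are the two limitations the abstract attributes to the existing approaches.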
