Rongxiang Zhu

ArenaRL: Scaling RL for Open-Ended Agents via Tournament-based Relative Ranking

Jan 10, 2026

Abstract: Reinforcement learning has substantially improved the performance of LLM agents on tasks with verifiable outcomes, but it still struggles on open-ended agent tasks with vast solution spaces (e.g., complex travel planning). Due to the absence of objective ground-truth for these tasks, current RL algorithms largely rely on reward models that assign scalar scores to individual responses. We contend that such pointwise scoring suffers from an inherent discrimination collapse: the reward model struggles to distinguish subtle advantages among different trajectories, resulting in scores within a group being compressed into a narrow range. Consequently, the effective reward signal becomes dominated by noise from the reward model, leading to optimization stagnation. To address this, we propose ArenaRL, a reinforcement learning paradigm that shifts from pointwise scalar scoring to intra-group relative ranking. ArenaRL introduces a process-aware pairwise evaluation mechanism, employing multi-level rubrics to assign fine-grained relative scores to trajectories. Additionally, we construct an intra-group adversarial arena and devise a tournament-based ranking scheme to obtain stable advantage signals. Empirical results confirm that the built seeded single-elimination scheme achieves nearly equivalent advantage estimation accuracy to full pairwise comparisons with O(N^2) complexity, while operating with only O(N) complexity, striking an optimal balance between efficiency and precision. Furthermore, to address the lack of full-cycle benchmarks for open-ended agents, we build Open-Travel and Open-DeepResearch, two high-quality benchmarks featuring a comprehensive pipeline covering SFT, RL training, and multi-dimensional evaluation. Extensive experiments show that ArenaRL substantially outperforms standard RL baselines, enabling LLM agents to generate more robust solutions for complex real-world tasks.
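The complexity claim in the abstract can be illustrated with a minimal sketch of a seeded single-elimination bracket. This is not the authors' implementation: the function names, the top-versus-bottom seed pairing, the `beats` judge callback, and the use of "round of elimination" as the rank signal are all assumptions made for illustration. The one property that holds for any single-elimination bracket is that each comparison removes exactly one entrant, so a group of N trajectories needs N - 1 comparisons rather than N(N - 1)/2.

```python
from typing import Callable, Dict, List, Sequence, Tuple


def single_elimination(
    seeds: Sequence[float],
    beats: Callable[[int, int], bool],
) -> Tuple[Dict[int, int], int]:
    """Run a seeded single-elimination bracket over trajectory indices.

    seeds: hypothetical initial scores used only to order the bracket.
    beats: hypothetical pairwise judge; beats(a, b) is True if a wins.
    Returns (round in which each index was eliminated, comparison count).
    The champion is assigned the highest round number.
    """
    if not seeds:
        raise ValueError("need at least one trajectory")
    # Order entrants from highest to lowest seed.
    alive: List[int] = sorted(range(len(seeds)), key=lambda i: -seeds[i])
    eliminated_round: Dict[int, int] = {}
    comparisons = 0
    rnd = 0
    while len(alive) > 1:
        survivors: List[int] = []
        lo, hi = 0, len(alive) - 1
        while lo < hi:
            # Assumed pairing rule: top remaining seed vs bottom remaining seed.
            a, b = alive[lo], alive[hi]
            comparisons += 1
            winner, loser = (a, b) if beats(a, b) else (b, a)
            survivors.append(winner)
            eliminated_round[loser] = rnd
            lo += 1
            hi -= 1
        if lo == hi:
            survivors.append(alive[lo])  # odd entrant gets a bye
        alive = survivors
        rnd += 1
    eliminated_round[alive[0]] = rnd
    return eliminated_round, comparisons


def rank_advantages(eliminated_round: Dict[int, int]) -> List[float]:
    """Standardize 'rounds survived' into zero-mean advantages (an assumption)."""
    vals = [eliminated_round[i] for i in range(len(eliminated_round))]
    mean = sum(vals) / len(vals)
    std = (sum((v - mean) ** 2 for v in vals) / len(vals)) ** 0.5 or 1.0
    return [(v - mean) / std for v in vals]


# Toy usage: a noiseless judge that prefers the higher hidden quality.
quality = [0.3, 0.9, 0.1, 0.5, 0.7, 0.2, 0.8, 0.4]
rounds, n_cmp = single_elimination(quality, lambda a, b: quality[a] > quality[b])
advs = rank_advantages(rounds)
full_pairwise = len(quality) * (len(quality) - 1) // 2
```

In this toy run the bracket uses `n_cmp` comparisons against `full_pairwise` for the round-robin alternative; how closely the resulting advantages track full pairwise ranking under a noisy judge is the empirical question the abstract reports on.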

HopGAT: Hop-aware Supervision Graph Attention Networks for Sparsely Labeled Graphs

Apr 09, 2020

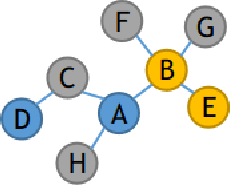

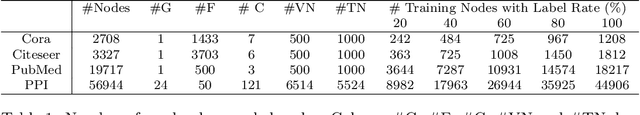

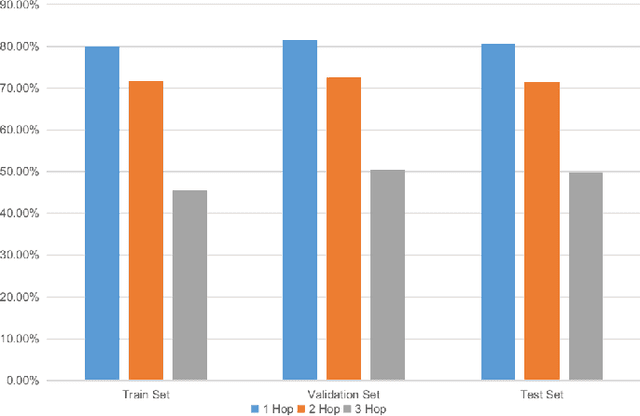

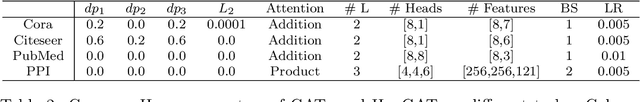

Abstract: Because labeling nodes is costly, classifying nodes in a sparsely labeled graph without sacrificing prediction accuracy deserves attention. The key question is how the algorithm can learn sufficient information from neighbors at different hop distances. This study first proposes a hop-aware attention supervision mechanism for the node classification task. A simulated annealing learning strategy is then adopted to balance two learning tasks, node classification and the hop-aware attention coefficients, along the training timeline. Experimental results show that the proposed Hop-aware Supervision Graph Attention Networks (HopGAT) model outperforms state-of-the-art models. In particular, on the protein-protein interaction network, performance on a 40% labeled graph drops by only 3.9 percentage points, from 98.5% to 94.6%, compared to the fully labeled graph. Extensive experiments also demonstrate the effectiveness of the supervised attention coefficients and the learning strategy.
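The idea of balancing two learning tasks along the training timeline can be sketched as a weighted sum whose auxiliary weight cools over epochs. The abstract does not give the schedule, so everything below is an assumption: the exponential form, the parameter names `w0` and `decay`, and the choice to decay the attention-supervision term rather than the classification term.

```python
import math


def attention_loss_weight(epoch: int, total_epochs: int,
                          w0: float = 1.0, decay: float = 5.0) -> float:
    """Hypothetical annealing schedule: weight starts at w0 and decays
    exponentially as training progresses (not taken from the paper)."""
    if total_epochs <= 0:
        raise ValueError("total_epochs must be positive")
    return w0 * math.exp(-decay * epoch / total_epochs)


def combined_loss(cls_loss: float, attn_loss: float,
                  epoch: int, total_epochs: int) -> float:
    """Node-classification loss plus the annealed attention-supervision loss."""
    return cls_loss + attention_loss_weight(epoch, total_epochs) * attn_loss


# Toy usage: the auxiliary term dominates early and fades late in training.
weights = [attention_loss_weight(e, 100) for e in (0, 25, 50, 100)]
```

Under this assumed schedule, early epochs emphasize matching the hop-aware attention targets, while later epochs are driven almost entirely by the classification objective.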
