Rongjun Tang

FedAds: A Benchmark for Privacy-Preserving CVR Estimation with Vertical Federated Learning

May 15, 2023

Abstract:Conversion rate (CVR) estimation aims to predict the probability of conversion event after a user has clicked an ad. Typically, online publisher has user browsing interests and click feedbacks, while demand-side advertising platform collects users' post-click behaviors such as dwell time and conversion decisions. To estimate CVR accurately and protect data privacy better, vertical federated learning (vFL) is a natural solution to combine two sides' advantages for training models, without exchanging raw data. Both CVR estimation and applied vFL algorithms have attracted increasing research attentions. However, standardized and systematical evaluations are missing: due to the lack of standardized datasets, existing studies adopt public datasets to simulate a vFL setting via hand-crafted feature partition, which brings challenges to fair comparison. We introduce FedAds, the first benchmark for CVR estimation with vFL, to facilitate standardized and systematical evaluations for vFL algorithms. It contains a large-scale real world dataset collected from Alibaba's advertising platform, as well as systematical evaluations for both effectiveness and privacy aspects of various vFL algorithms. Besides, we also explore to incorporate unaligned data in vFL to improve effectiveness, and develop perturbation operations to protect privacy well. We hope that future research work in vFL and CVR estimation benefits from the FedAds benchmark.

Data-free Backdoor Removal based on Channel Lipschitzness

Aug 05, 2022

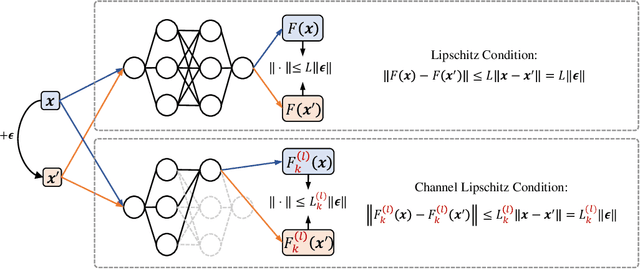

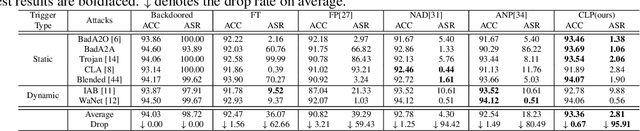

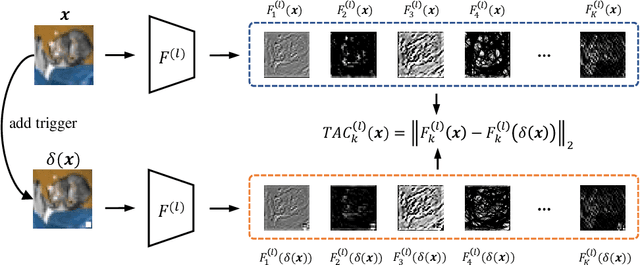

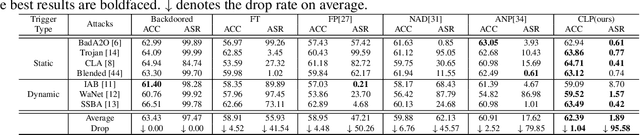

Abstract:Recent studies have shown that Deep Neural Networks (DNNs) are vulnerable to the backdoor attacks, which leads to malicious behaviors of DNNs when specific triggers are attached to the input images. It was further demonstrated that the infected DNNs possess a collection of channels, which are more sensitive to the backdoor triggers compared with normal channels. Pruning these channels was then shown to be effective in mitigating the backdoor behaviors. To locate those channels, it is natural to consider their Lipschitzness, which measures their sensitivity against worst-case perturbations on the inputs. In this work, we introduce a novel concept called Channel Lipschitz Constant (CLC), which is defined as the Lipschitz constant of the mapping from the input images to the output of each channel. Then we provide empirical evidences to show the strong correlation between an Upper bound of the CLC (UCLC) and the trigger-activated change on the channel activation. Since UCLC can be directly calculated from the weight matrices, we can detect the potential backdoor channels in a data-free manner, and do simple pruning on the infected DNN to repair the model. The proposed Channel Lipschitzness based Pruning (CLP) method is super fast, simple, data-free and robust to the choice of the pruning threshold. Extensive experiments are conducted to evaluate the efficiency and effectiveness of CLP, which achieves state-of-the-art results among the mainstream defense methods even without any data. Source codes are available at https://github.com/rkteddy/channel-Lipschitzness-based-pruning.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge