Robbert Reijnen

Graph-Supported Dynamic Algorithm Configuration for Multi-Objective Combinatorial Optimization

May 22, 2025

Abstract: Deep reinforcement learning (DRL) has been widely used for dynamic algorithm configuration, particularly in evolutionary computation, which benefits from the adaptive update of parameters during the algorithmic execution. However, applying DRL to algorithm configuration for multi-objective combinatorial optimization (MOCO) problems remains relatively unexplored. This paper presents a novel graph neural network (GNN) based DRL to configure multi-objective evolutionary algorithms. We model the dynamic algorithm configuration as a Markov decision process, representing the convergence of solutions in the objective space by a graph, with their embeddings learned by a GNN to enhance the state representation. Experiments on diverse MOCO challenges indicate that our method outperforms traditional and DRL-based algorithm configuration methods in terms of efficacy and adaptability. It also exhibits advantageous generalizability across objective types and problem sizes, and applicability to different evolutionary computation methods.

Graph Neural Networks for Job Shop Scheduling Problems: A Survey

Jun 20, 2024

Abstract: Job shop scheduling problems (JSSPs) represent a critical and challenging class of combinatorial optimization problems. Recent years have witnessed a rapid increase in the application of graph neural networks (GNNs) to solve JSSPs, albeit lacking a systematic survey of the relevant literature. This paper aims to thoroughly review prevailing GNN methods for different types of JSSPs and the closely related flow-shop scheduling problems (FSPs), especially those leveraging deep reinforcement learning (DRL). We begin by presenting the graph representations of various JSSPs, followed by an introduction to the most commonly used GNN architectures. We then review current GNN-based methods for each problem type, highlighting key technical elements such as graph representations, GNN architectures, GNN tasks, and training algorithms. Finally, we summarize and analyze the advantages and limitations of GNNs in solving JSSPs and provide potential future research opportunities. We hope this survey can motivate and inspire innovative approaches for more powerful GNN-based approaches in tackling JSSPs and other scheduling problems.

Job Shop Scheduling Benchmark: Environments and Instances for Learning and Non-learning Methods

Aug 24, 2023

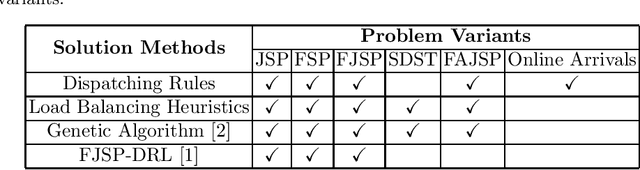

Abstract: We introduce an open-source GitHub repository containing comprehensive benchmarks for a wide range of machine scheduling problems, including Job Shop Scheduling (JSP), Flow Shop Scheduling (FSP), Flexible Job Shop Scheduling (FJSP), FJSP with Assembly constraints (FAJSP), FJSP with Sequence-Dependent Setup Times (FJSP-SDST), and the online FJSP (with online job arrivals). Our primary goal is to provide a centralized hub for researchers, practitioners, and enthusiasts interested in tackling machine scheduling challenges.

Operator Selection in Adaptive Large Neighborhood Search using Deep Reinforcement Learning

Nov 01, 2022

Abstract: Large Neighborhood Search (LNS) is a popular heuristic for solving combinatorial optimization problems. LNS iteratively explores the neighborhoods in solution spaces using destroy and repair operators. Determining the best operators for LNS to solve a problem at hand is a labor-intensive process. Hence, Adaptive Large Neighborhood Search (ALNS) has been proposed to adaptively select operators during the search process based on operator performances of the previous search iterations. Such an operator selection procedure is a heuristic, based on domain knowledge, which is ineffective with complex, large solution spaces. In this paper, we address the problem of selecting operators for each search iteration of ALNS as a sequential decision problem and propose a Deep Reinforcement Learning based method called Deep Reinforced Adaptive Large Neighborhood Search. As such, the proposed method aims to learn based on the state of the search which operation to select to obtain a high long-term reward, i.e., a good solution to the underlying optimization problem. The proposed method is evaluated on a time-dependent orienteering problem with stochastic weights and time windows. Results show that our approach effectively learns a strategy that adaptively selects operators for large neighborhood search, obtaining competitive results compared to a state-of-the-art machine learning approach while trained with much fewer observations on small-sized problem instances.
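
For readers unfamiliar with the heuristic operator selection that this abstract contrasts with, the following is a minimal sketch of a roulette-wheel selector of the kind commonly used in ALNS. It is not the DRL method proposed in the paper. The class name, operator names, `reaction` factor, and payoff values are all hypothetical and chosen only for illustration.

```python
import random


class RouletteWheelSelector:
    """Heuristic ALNS-style operator selection (illustrative, not the DRL method)."""

    def __init__(self, operators, reaction=0.2, seed=0):
        self.operators = list(operators)
        # Every operator starts with the same weight.
        self.weights = {op: 1.0 for op in self.operators}
        self.reaction = reaction  # hypothetical smoothing factor in [0, 1]
        self.rng = random.Random(seed)

    def select(self):
        # Sample an operator with probability proportional to its weight.
        ws = [self.weights[op] for op in self.operators]
        return self.rng.choices(self.operators, weights=ws, k=1)[0]

    def update(self, op, score):
        # Exponentially smooth the weight toward the score just observed.
        self.weights[op] = (1 - self.reaction) * self.weights[op] + self.reaction * score


def demo(iterations=200):
    selector = RouletteWheelSelector(["random_destroy", "worst_destroy"])
    # Hypothetical scores: pretend "worst_destroy" tends to help more.
    payoff = {"random_destroy": 0.5, "worst_destroy": 3.0}
    for _ in range(iterations):
        op = selector.select()
        selector.update(op, payoff[op])
    return selector.weights
```

Calling `demo()` returns the final weights; operators that scored well in earlier iterations end up being sampled more often, which is the performance-based adaptation the abstract refers to.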

Learning Adaptive Evolutionary Computation for Solving Multi-Objective Optimization Problems

Nov 01, 2022

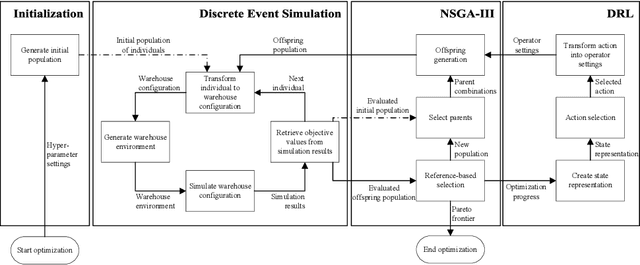

Abstract: Multi-objective evolutionary algorithms (MOEAs) are widely used to solve multi-objective optimization problems. The algorithms rely on setting appropriate parameters to find good solutions. However, this parameter tuning could be very computationally expensive in solving non-trivial (combinatorial) optimization problems. This paper proposes a framework that integrates MOEAs with adaptive parameter control using Deep Reinforcement Learning (DRL). The DRL policy is trained to adaptively set the values that dictate the intensity and probability of mutation for solutions during optimization. We test the proposed approach with a simple benchmark problem and a real-world, complex warehouse design and control problem. The experimental results demonstrate the advantages of our method in terms of solution quality and computation time to reach good solutions. In addition, we show the learned policy is transferable, i.e., the policy trained on a simple benchmark problem can be directly applied to solve the complex warehouse optimization problem, effectively, without the need for retraining.
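
As a rough illustration of where such a policy would plug into an evolutionary loop, the sketch below sets a mutation probability and a mutation intensity once per generation. It is an assumption-laden toy: `placeholder_policy` is a hand-written rule standing in for a trained DRL policy, the state contains only the fraction of the budget used, solutions are plain bit strings, and evaluation and selection are omitted. None of these details come from the paper.

```python
import random


def placeholder_policy(state):
    """Hypothetical stand-in for a trained DRL policy.

    Maps a state to (mutation_probability, mutation_intensity).
    """
    progress = state["progress"]
    probability = 0.9 - 0.6 * progress             # mutate less often late in the run
    intensity = max(1, round(4 * (1 - progress)))  # flip fewer bits late in the run
    return probability, intensity


def mutate(solution, probability, intensity, rng):
    # With the given probability, flip `intensity` distinct random bits.
    child = list(solution)
    if rng.random() < probability:
        for i in rng.sample(range(len(child)), k=min(intensity, len(child))):
            child[i] = 1 - child[i]
    return child


def run(generations=20, pop_size=6, n_bits=12, seed=0):
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    history = []
    for g in range(generations):
        # The policy is queried once per generation with the current state.
        state = {"progress": g / generations}
        probability, intensity = placeholder_policy(state)
        population = [mutate(s, probability, intensity, rng) for s in population]
        history.append((probability, intensity))
    return population, history
```

In a real setup the state would carry information about the search (for example, statistics of the current population), and the policy's outputs would be learned from a reward signal and not fixed by a schedule.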
