Riya Kumari

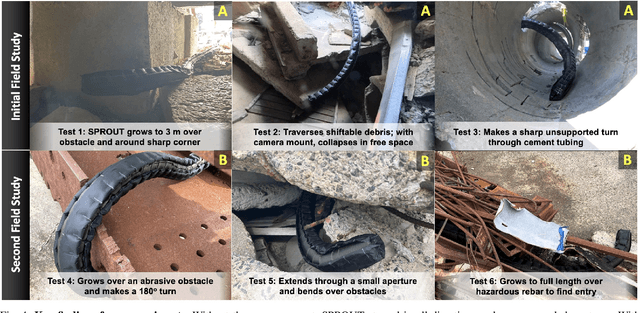

Field Insights for Portable Vine Robots in Urban Search and Rescue

Nov 10, 2024

Abstract: Soft, growing vine robots are well-suited for exploring cluttered, unknown environments, and are expected to perform well during structural collapse incidents caused by earthquakes, fires, explosions, and material flaws. Because vine robots grow from the tip, they can navigate rubble-filled passageways easily. State-of-the-art vine robots have been tested in archaeological and other field settings, but how well their capabilities translate to urban search and rescue (USAR) is not well understood. To this end, we present a set of experiments designed to test the limits of a vine robot system, the Soft Pathfinding Robotic Observation Unit (SPROUT), operating in an engineered collapsed structure. Our testing is driven by a taxonomy of difficulty derived from the challenges USAR crews face when navigating void spaces and their associated hazards. Initial experiments explore the viability of the vine robot form factor, both ideal and as implemented, as well as the control and sensorization of the system. A second set of experiments applies domain-specific design improvements to increase the portability and reliability of the system. SPROUT can grow through tight apertures, around corners, and into void spaces, but requires further development in sensorization to improve control and situational awareness.

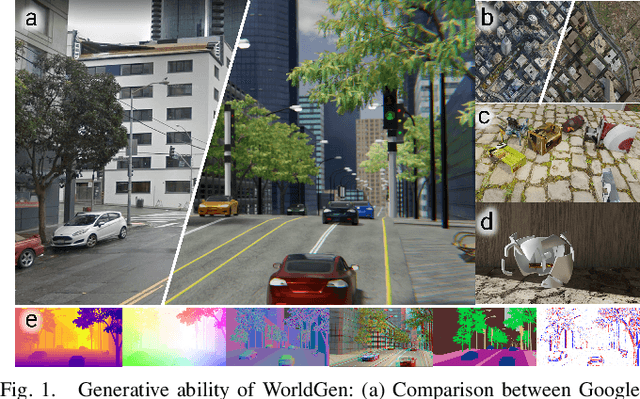

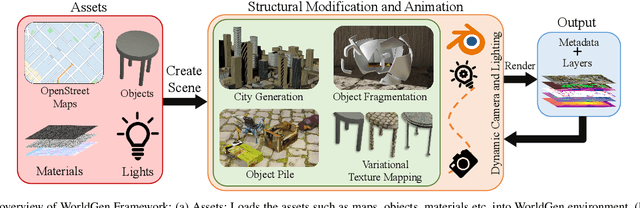

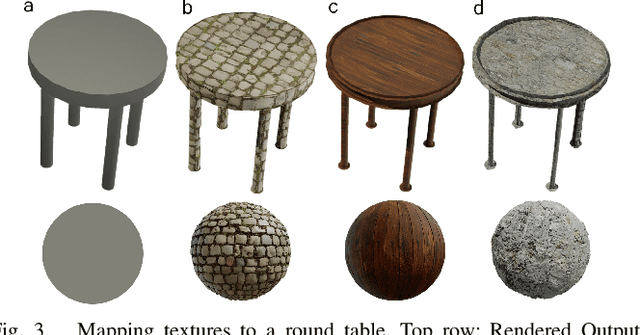

WorldGen: A Large Scale Generative Simulator

Oct 03, 2022

Abstract: In the era of deep learning, data is the critical factor determining the performance of neural network models. Generating large datasets faces difficulties with scalability, cost efficiency, and photorealism. To avoid expensive and strenuous dataset collection and annotation, researchers have turned to computer-generated datasets. However, a lack of photorealism and the limited amount of computer-generated data have constrained the accuracy of network predictions. To this end, we present WorldGen, an open-source framework that autonomously generates countless structured and unstructured photorealistic 3D scenes, such as city views, object collections, and object fragmentation, along with rich ground-truth annotations. As a generative model, WorldGen gives the user full access to and control over features such as texture, object structure, motion, and camera and lens properties, improving generalizability by reducing data bias in the network. We demonstrate the effectiveness of WorldGen with an evaluation on deep optical flow. We hope such a tool can open doors for future research in a wide range of robotics and computer vision domains by reducing the manual labor and cost of acquiring rich, high-quality data.