Ritu Yadav

PANGAEA: A Global and Inclusive Benchmark for Geospatial Foundation Models

Dec 05, 2024

Abstract: Geospatial Foundation Models (GFMs) have emerged as powerful tools for extracting representations from Earth observation data, but their evaluation remains inconsistent and narrow. Existing works often evaluate on suboptimal downstream datasets and tasks that are too easy or too narrow, limiting the usefulness of the evaluations for assessing the real-world applicability of GFMs. Additionally, there is a distinct lack of diversity in current evaluation protocols, which fail to account for the multiplicity of image resolutions, sensor types, and temporalities, further complicating the assessment of GFM performance. In particular, most existing benchmarks are geographically biased towards North America and Europe, calling into question the global applicability of GFMs. To overcome these challenges, we introduce PANGAEA, a standardized evaluation protocol that covers a diverse set of datasets, tasks, resolutions, sensor modalities, and temporalities. It establishes a robust and widely applicable benchmark for GFMs. We evaluate the most popular openly available GFMs on this benchmark and analyze their performance across several domains. In particular, we compare these models to supervised baselines (e.g., UNet and vanilla ViT), and assess their effectiveness when faced with limited labeled data. Our findings highlight the limitations of GFMs under different scenarios, showing that they do not consistently outperform supervised models. PANGAEA is designed to be highly extensible, allowing for the seamless inclusion of new datasets, models, and tasks in future research. By releasing the evaluation code and benchmark, we aim to enable other researchers to replicate our experiments and build upon our work, fostering a more principled evaluation protocol for large pre-trained geospatial models. The code is available at https://github.com/VMarsocci/pangaea-bench.

A CNN regression model to estimate buildings height maps using Sentinel-1 SAR and Sentinel-2 MSI time series

Jul 03, 2023

Abstract: Accurate estimation of building heights is essential for urban planning, infrastructure management, and environmental analysis. In this study, we propose a supervised Multimodal Building Height Regression Network (MBHR-Net) for estimating building heights at 10m spatial resolution using Sentinel-1 (S1) and Sentinel-2 (S2) satellite time series. S1 provides Synthetic Aperture Radar (SAR) data that offers valuable information on building structures, while S2 provides multispectral data that is sensitive to different land cover types, vegetation phenology, and building shadows. Our MBHR-Net aims to extract meaningful features from the S1 and S2 images to learn complex spatio-temporal relationships between image patterns and building heights. The model is trained and tested on 10 cities in the Netherlands. Root Mean Squared Error (RMSE), Intersection over Union (IoU), and R-squared (R2) score metrics are used to evaluate the performance of the model. The preliminary results (3.73m RMSE, 0.95 IoU, 0.61 R2) demonstrate the effectiveness of our deep learning model in accurately estimating building heights, showcasing its potential for urban planning, environmental impact analysis, and other related applications.
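
The three metrics named in this abstract can be illustrated with a minimal sketch. The abstract does not say how IoU is derived from a regressed height map, so the version below assumes it is computed on building footprints obtained by thresholding heights above a hypothetical `min_height`; the function names and toy values are illustrative and not taken from the paper.

```python
import math

def rmse(pred, true):
    """Root Mean Squared Error between predicted and reference heights."""
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, true)) / len(true))

def r2_score(pred, true):
    """Coefficient of determination: 1 - SS_res / SS_tot.
    Undefined (division by zero) if all reference heights are equal."""
    mean_true = sum(true) / len(true)
    ss_res = sum((t - p) ** 2 for p, t in zip(pred, true))
    ss_tot = sum((t - mean_true) ** 2 for t in true)
    return 1.0 - ss_res / ss_tot

def footprint_iou(pred, true, min_height=0.0):
    """IoU of building footprints, ASSUMING a pixel counts as 'building'
    when its height exceeds min_height (an assumption, not from the paper)."""
    pred_mask = [p > min_height for p in pred]
    true_mask = [t > min_height for t in true]
    inter = sum(p and t for p, t in zip(pred_mask, true_mask))
    union = sum(p or t for p, t in zip(pred_mask, true_mask))
    return inter / union if union else 1.0

# Toy flattened height maps in metres (made-up values for illustration).
true_heights = [0.0, 0.0, 3.0, 6.0]
pred_heights = [0.0, 1.0, 3.0, 4.0]

print(rmse(pred_heights, true_heights))
print(r2_score(pred_heights, true_heights))
print(footprint_iou(pred_heights, true_heights))
```

Because RMSE and R2 are computed on continuous heights while this IoU only looks at footprint overlap, a model can score a high IoU and a moderate R2 at the same time.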

Context-Aware Change Detection With Semi-Supervised Learning

Jun 15, 2023

Abstract: Change detection using Earth observation data plays a vital role in quantifying the impact of disasters in affected areas. While data sources like Sentinel-2 provide rich optical information, they are often hindered by cloud cover, limiting their usage in disaster scenarios. However, leveraging pre-disaster optical data can offer valuable contextual information about the area, such as land cover type, vegetation cover, and soil types, enabling a better understanding of the disaster's impact. In this study, we develop a model to assess the contribution of pre-disaster Sentinel-2 data in change detection tasks, focusing on disaster-affected areas. The proposed Context-Aware Change Detection Network (CACDN) utilizes a combination of pre-disaster Sentinel-2 data, pre- and post-disaster Sentinel-1 data, and ancillary Digital Elevation Model (DEM) data. The model is validated on flood and landslide detection and evaluated using three metrics: Area Under the Precision-Recall Curve (AUPRC), Intersection over Union (IoU), and mean IoU. The preliminary results show a significant improvement (4% AUPRC, 3-7% IoU, 3-6% mean IoU) in the model's change detection capabilities when pre-disaster optical data is incorporated, reflecting the effectiveness of using contextual information for accurate flood and landslide detection.

Unsupervised Flood Detection on SAR Time Series

Dec 07, 2022

Abstract: Human civilization has an increasingly powerful influence on the Earth system. Driven by climate change and land-use change, natural disasters such as flooding have been increasing in recent years. Earth observations are an invaluable source for assessing and mitigating negative impacts. Detecting changes from Earth observation data is one way to monitor the possible impact. Effective and reliable Change Detection (CD) methods can help in identifying the risk of disaster events at an early stage. In this work, we propose a novel unsupervised CD method on time series Synthetic Aperture Radar (SAR) data. Our proposed method is a probabilistic model trained with unsupervised learning techniques, reconstruction, and contrastive learning. The change map is generated with the help of the distribution difference between pre-incident and post-incident data. Our proposed CD model is evaluated on flood detection data. We verified the efficacy of our model on 8 different flood sites, including three recent flood events from Copernicus Emergency Management Services and six from the Sen1Floods11 dataset. Our proposed model achieved an average Intersection over Union (IoU) of 64.53% and an F1 score of 75.43%. The IoU score is approximately 6-27% and the F1 score approximately 7-22% better than those of the existing unsupervised and supervised CD methods compared. The results and extensive discussion presented in the study show the effectiveness of the proposed unsupervised CD method.

Building Change Detection using Multi-Temporal Airborne LiDAR Data

Apr 26, 2022

Abstract: Building change detection is essential for monitoring urbanization, disaster assessment, urban planning, and frequent map updating. 3D structure information from airborne light detection and ranging (LiDAR) is very effective for detecting urban changes. However, the 3D point cloud from airborne LiDAR (ALS) holds an enormous amount of unordered and irregularly sparse information. Handling such data is difficult and requires a large amount of memory for processing. Most of this information is not necessary when we are looking for a particular type of urban change. In this study, we propose an automatic method that reduces the 3D point clouds into a much smaller representation without losing the information required for detecting building changes. The method utilizes the Deep Learning (DL) model U-Net for segmenting the buildings from the background. The resulting segmentation maps are then processed further to detect changes, and the results are refined using morphological methods. For the change detection task, we used multi-temporal airborne LiDAR data acquired over Stockholm in 2017 and 2019. The changes in buildings are classified into four types: 'newly built', 'demolished', 'taller' and 'shorter'. The detected changes are visualized in one map for better interpretation.
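
The four change types listed in this abstract can be sketched as a simple per-cell rule. The abstract does not describe the actual decision logic, so the following is only an illustration under stated assumptions: each grid cell has a building/background label and a height for both epochs, and a hypothetical tolerance `tol` (in metres) separates real height changes from noise.

```python
def classify_change(is_building_t1, is_building_t2, height_t1, height_t2, tol=1.0):
    """Label one grid cell with a change type.
    `tol` is a hypothetical height tolerance in metres, not a value from the paper."""
    if not is_building_t1 and is_building_t2:
        return "newly built"
    if is_building_t1 and not is_building_t2:
        return "demolished"
    if is_building_t1 and is_building_t2:
        diff = height_t2 - height_t1
        if diff > tol:
            return "taller"
        if diff < -tol:
            return "shorter"
    return "unchanged"

def change_map(mask_t1, mask_t2, heights_t1, heights_t2, tol=1.0):
    """Apply the rule to every cell of two co-registered epochs (flattened lists)."""
    return [
        classify_change(b1, b2, h1, h2, tol)
        for b1, b2, h1, h2 in zip(mask_t1, mask_t2, heights_t1, heights_t2)
    ]

# Toy example with five cells (made-up values): earlier epoch vs. later epoch.
mask_2017 = [False, True, True, True, True]
mask_2019 = [True, False, True, True, True]
h_2017 = [0.0, 8.0, 10.0, 12.0, 9.0]
h_2019 = [6.0, 0.0, 15.0, 7.0, 9.3]

print(change_map(mask_2017, mask_2019, h_2017, h_2019))
```

In this sketch the segmentation masks decide whether a building exists, and the heights are only compared where both epochs contain a building.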

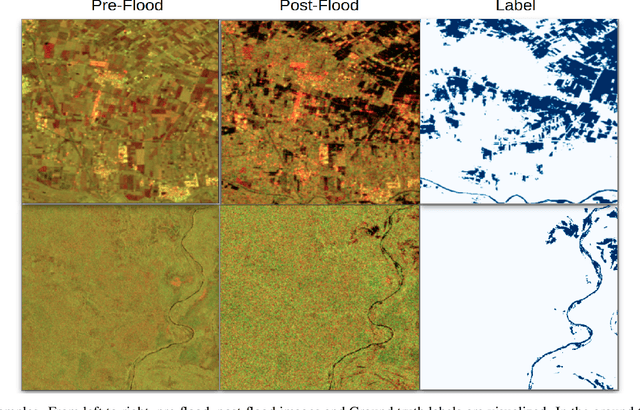

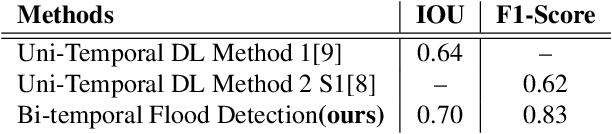

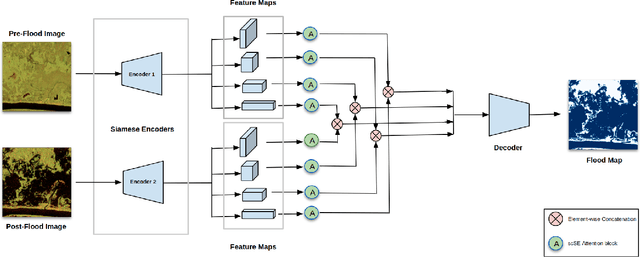

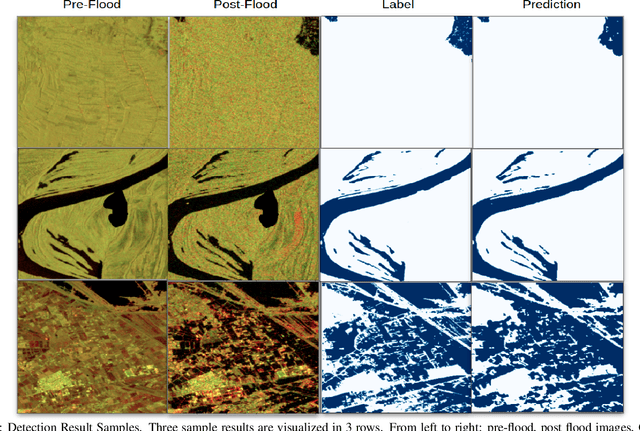

Attentive Dual Stream Siamese U-net for Flood Detection on Multi-temporal Sentinel-1 Data

Apr 20, 2022

Abstract: Due to climate and land-use change, natural disasters such as flooding have been increasing in recent years. Timely and reliable flood detection and mapping can help emergency response and disaster management. In this work, we propose a flood detection network using bi-temporal SAR acquisitions. The proposed segmentation network has an encoder-decoder architecture with two Siamese encoders for pre- and post-flood images. The network's feature maps are fused and enhanced using attention blocks to achieve more accurate detection of the flooded areas. Our proposed network is evaluated on the publicly available Sen1Floods11 benchmark dataset. The network outperformed the existing state-of-the-art (uni-temporal) flood detection method by 6% IoU. The experiments highlight that the combination of bi-temporal SAR data with an effective network architecture achieves more accurate flood detection than uni-temporal methods.

Radar+RGB Attentive Fusion for Robust Object Detection in Autonomous Vehicles

Aug 31, 2020

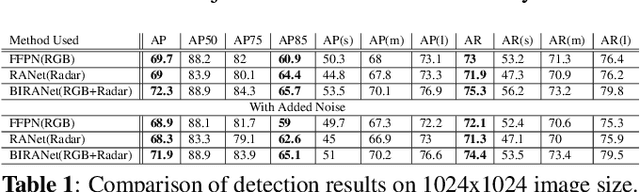

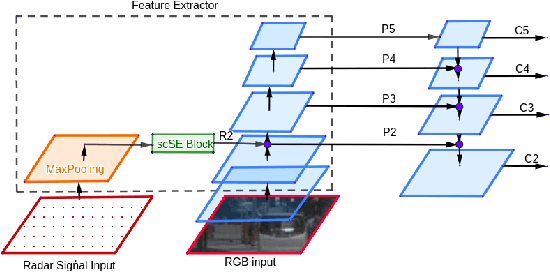

Abstract: This paper presents two variations of an architecture, referred to as RANet and BIRANet. The proposed architecture aims to use radar signal data along with RGB camera images to form a robust detection network that works efficiently, even in variable lighting and weather conditions such as rain, dust, and fog. First, radar information is fused in the feature extractor network. Second, radar points are used to generate guided anchors. Third, a method is proposed to improve region proposal network targets. BIRANet yields 72.3/75.3% average AP/AR on the NuScenes dataset, which is better than the performance of our base network, Faster-RCNN with Feature Pyramid Network (FFPN). RANet gives 69.6/71.9% average AP/AR on the same dataset, which is reasonably acceptable performance. Both BIRANet and RANet are also found to be robust to noise.

Light-Weighted CNN for Text Classification

Apr 16, 2020

Abstract: For management purposes, documents are sorted into specific categories, and most organizations do this manually. In today's automation era, manual effort on such a task is not justified, and many software products on the market aim to avoid it. However, efficiency and minimal resource consumption are the focal points on which these products compete. Automatic categorization of such documents into specified classes is therefore of great help. One categorization technique is text classification using a convolutional neural network (TextCNN). TextCNN uses filters of multiple sizes, as in the inception layer introduced in GoogLeNet. The network provides good accuracy but causes high memory consumption due to a large number of trainable parameters. As a solution to this problem, we introduce a new architecture based on separable convolution. The idea of separable convolution already exists in the field of image classification but has not yet been introduced to text classification tasks. With the help of this architecture, we can achieve a drastic reduction in trainable parameters.
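
To see why separable convolution reduces trainable parameters, the counts can be compared directly. The sketch below assumes the common depthwise-separable form (a per-channel depthwise filter followed by a 1x1 pointwise convolution) applied to 1D text convolutions; the abstract does not specify the exact layer design, and the embedding size, filter count, and kernel sizes are illustrative values, not the paper's configuration.

```python
def conv1d_params(kernel_size, in_channels, out_channels, bias=True):
    """Trainable parameters of a standard 1D convolution."""
    return kernel_size * in_channels * out_channels + (out_channels if bias else 0)

def separable_conv1d_params(kernel_size, in_channels, out_channels, bias=True):
    """Trainable parameters of a depthwise-separable 1D convolution:
    one depthwise filter per input channel, then a 1x1 pointwise convolution."""
    depthwise = kernel_size * in_channels + (in_channels if bias else 0)
    pointwise = in_channels * out_channels + (out_channels if bias else 0)
    return depthwise + pointwise

# Illustrative TextCNN-style setup (assumed values): 300-dim word embeddings,
# 100 filters for each of the kernel sizes 3, 4 and 5.
embedding_dim, num_filters, kernel_sizes = 300, 100, (3, 4, 5)

standard_total = sum(conv1d_params(k, embedding_dim, num_filters) for k in kernel_sizes)
separable_total = sum(
    separable_conv1d_params(k, embedding_dim, num_filters) for k in kernel_sizes
)

print(standard_total)   # 360300
print(separable_total)  # 94800
```

With these assumed sizes the convolutional layers shrink to roughly a quarter of their original parameter count, because the kernel size multiplies only the input channels in the depthwise step rather than the full input-output channel product.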
