Axel Vierling

Evaluating the Robustness of Off-Road Autonomous Driving Segmentation against Adversarial Attacks: A Dataset-Centric analysis

Feb 03, 2024

Abstract: This study investigates the vulnerability of semantic segmentation models to adversarial input perturbations in the domain of off-road autonomous driving. Despite good performance in generic conditions, state-of-the-art classifiers are often susceptible to even small perturbations, ultimately resulting in inaccurate predictions with high confidence. Prior research has focused on making models more robust by modifying the architecture and training with noisy input images, but has not explored the influence of datasets in adversarial attacks. Our study aims to address this gap by examining the impact of non-robust features in off-road datasets and comparing the effects of adversarial attacks on different segmentation network architectures. To enable this, a robust dataset consisting of only robust features is created, and the networks are trained on this robustified dataset. We present both qualitative and quantitative analysis of our findings, which have important implications for improving the robustness of machine learning models in off-road autonomous driving applications. Additionally, this work contributes to the safe navigation of the autonomous robot Unimog U5023 in rough, unstructured off-road environments by evaluating the robustness of segmentation outputs. The code is publicly available at https://github.com/rohtkumar/adversarial_attacks_on_segmentation
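To make the idea of a small adversarial perturbation concrete, here is a minimal sketch under stated assumptions. The abstract does not name the attack used, so the fast gradient sign method (FGSM) is assumed here purely for illustration, and it is applied to a toy logistic classifier rather than a segmentation network. The weights `w`, the input `x`, and the budget `eps` are hypothetical values.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x):
    """Probability of class 1 under a toy logistic classifier."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def fgsm(w, b, x, y, eps):
    """One FGSM step: move each input coordinate by eps in the
    direction that increases the binary cross-entropy loss."""
    p = predict(w, b, x)
    # For logistic regression, d(loss)/dx_i = (p - y) * w_i.
    grad = [(p - y) * wi for wi in w]
    sign = lambda g: (g > 0) - (g < 0)
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]

# Hypothetical model and input (not taken from the paper).
w = [2.0, -3.0, 1.5, -2.5]
b = 0.0
x = [0.1, -0.05, 0.1, -0.1]
y = 1          # true label
eps = 0.2      # maximum per-coordinate change

x_adv = fgsm(w, b, x, y, eps)
p_clean = predict(w, b, x)
p_adv = predict(w, b, x_adv)
print("clean prediction:", p_clean)
print("adversarial prediction:", p_adv)
```

In this toy setting, no coordinate moves by more than `eps`, yet the predicted class changes. This is the kind of failure the abstract describes, here shown on a much simpler model.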

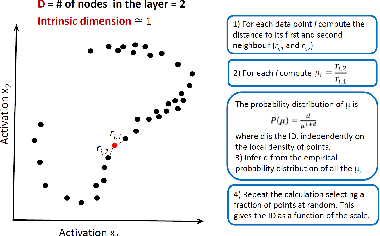

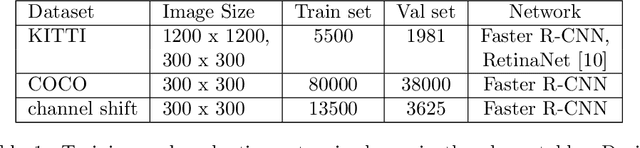

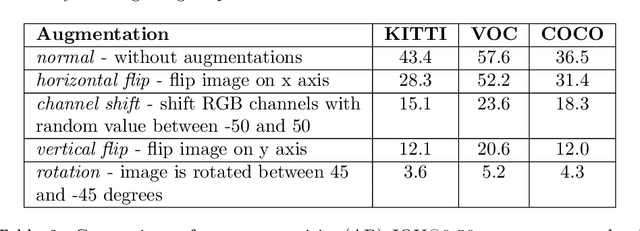

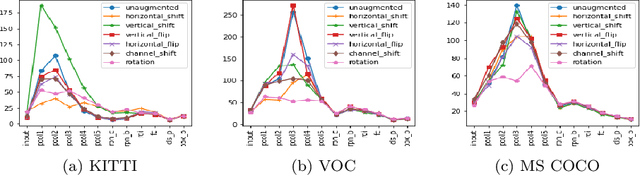

Dimensionality of datasets in object detection networks

Oct 13, 2022

Abstract: In recent years, convolutional neural networks (CNNs) have been used in a large number of computer vision tasks. One of them is object detection for autonomous driving. Although CNNs are widely used in many areas, what happens inside the network is still unexplained on many levels. Our goal is to determine the effect of intrinsic dimension (i.e., the minimum number of parameters required to represent the data) in different layers on the accuracy of an object detection network for augmented data sets. Our investigation determines that there is a difference between the representation of normal and augmented data during feature extraction.
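As a rough illustration of what intrinsic dimension means, the sketch below estimates it for points that lie on a 2-D plane embedded in 5-D space. The abstract does not state which estimator the authors use. The TwoNN maximum-likelihood estimator, which relies on the ratio of each point's second to first nearest-neighbour distance, is assumed here only as an example, and the `embed` function and sample size are hypothetical.

```python
import math
import random

def two_nn_dimension(points):
    """TwoNN maximum-likelihood estimate: N / sum(log(r2 / r1)),
    where r1 and r2 are each point's two smallest neighbour distances."""
    log_ratios = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        r1, r2 = dists[0], dists[1]
        log_ratios.append(math.log(r2 / r1))
    return len(points) / sum(log_ratios)

def embed(u, v):
    """Hypothetical linear embedding of a 2-D latent point into 5-D."""
    return (u, v, u + v, u - v, 0.5 * u + 2.0 * v)

rng = random.Random(0)  # fixed seed for repeatability
points = [embed(rng.random(), rng.random()) for _ in range(400)]

estimate = two_nn_dimension(points)
print("ambient dimension:", len(points[0]))
print("estimated intrinsic dimension:", estimate)
```

The points have five coordinates, but the estimate should land near two because only two parameters are needed to describe each point. Applied to the activations of a network layer, the same kind of estimate would indicate how many degrees of freedom that layer's representation actually uses.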

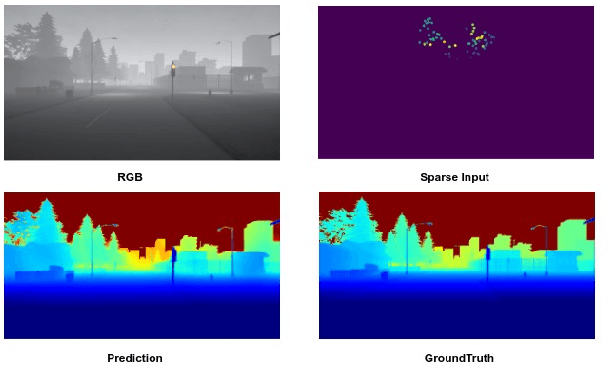

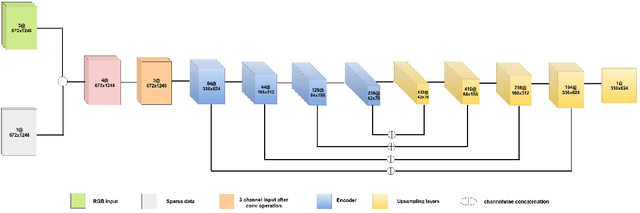

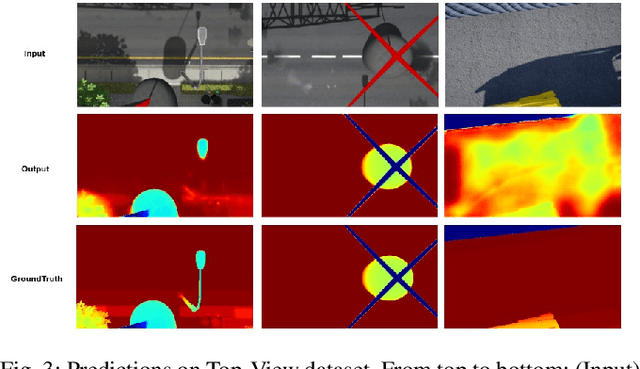

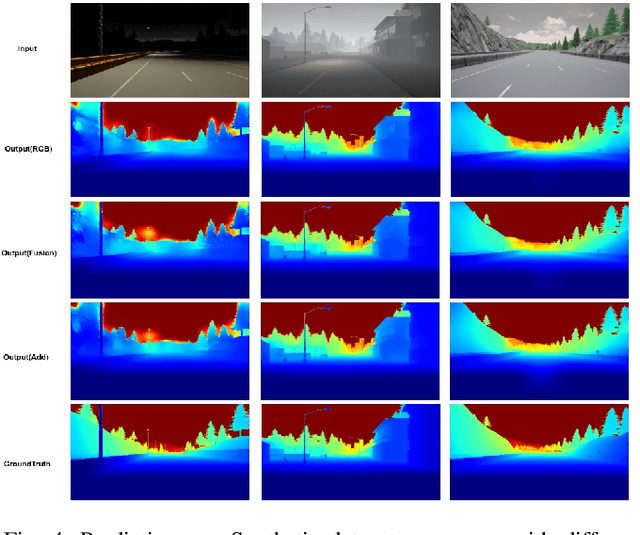

Multi-Modal Depth Estimation Using Convolutional Neural Networks

Dec 17, 2020

Abstract: This paper addresses the problem of dense depth prediction from sparse distance sensor data and a single camera image in challenging weather conditions. This work explores the significance of different sensor modalities such as camera, Radar, and Lidar for estimating depth by applying Deep Learning approaches. Although Lidar has higher depth-sensing abilities than Radar and has been integrated with camera images in many previous works, depth estimation using CNNs on the fusion of robust Radar distance data and camera images has not been explored much. In this work, a deep regression network is proposed that uses a transfer learning approach: an encoder, initialized from a high-performing pre-trained model, extracts dense features, and a decoder upsamples them and predicts the desired depth. The results are demonstrated on Nuscenes, KITTI, and a synthetic dataset created using the CARLA simulator. In addition, top-view zoom-camera images captured from a crane on a construction site are evaluated to estimate the distance from the ground of the crane boom carrying heavy loads, showing the usability of the approach in safety-critical applications.

Radar+RGB Attentive Fusion for Robust Object Detection in Autonomous Vehicles

Aug 31, 2020

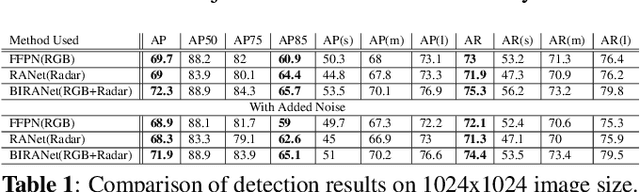

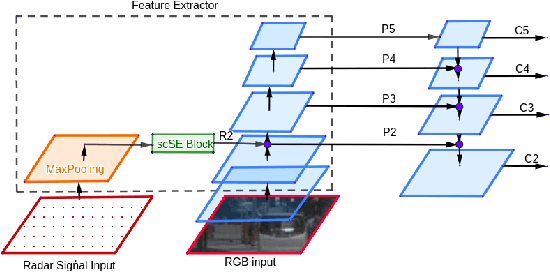

Abstract: This paper presents two variations of an architecture, referred to as RANet and BIRANet. The proposed architecture aims to use radar signal data along with RGB camera images to form a robust detection network that works efficiently even in variable lighting and weather conditions such as rain, dust, and fog. First, radar information is fused in the feature extractor network. Second, radar points are used to generate guided anchors. Third, a method is proposed to improve region proposal network targets. BIRANet yields 72.3/75.3% average AP/AR on the NuScenes dataset, which is better than the performance of our base network, Faster-RCNN with Feature Pyramid Network (FFPN). RANet gives 69.6/71.9% average AP/AR on the same dataset, which is a reasonably acceptable performance. Both BIRANet and RANet are also found to be robust to noise.
