Yifang Ban

TS-SatFire: A Multi-Task Satellite Image Time-Series Dataset for Wildfire Detection and Prediction

Dec 16, 2024

Abstract: Wildfire monitoring and prediction are essential for understanding wildfire behaviour. With extensive Earth observation data, these tasks can be integrated and enhanced through multi-task deep learning models. We present a comprehensive multi-temporal remote sensing dataset for active fire detection, daily wildfire monitoring, and next-day wildfire prediction. Covering wildfire events in the contiguous U.S. from January 2017 to October 2021, the dataset includes 3552 surface reflectance images and auxiliary data such as weather, topography, land cover, and fuel information, totalling 71 GB. The lifecycle of each wildfire is documented, with labels for active fires (AF) and burned areas (BA), supported by manual quality assurance of AF and BA test labels. The dataset supports three tasks: a) active fire detection, b) daily burned area mapping, and c) wildfire progression prediction. Detection tasks use pixel-wise classification of multi-spectral, multi-temporal images, while prediction tasks integrate satellite and auxiliary data to model fire dynamics. This dataset and its benchmarks provide a foundation for advancing wildfire research using deep learning.

RADARSAT Constellation Mission Compact Polarisation SAR Data for Burned Area Mapping with Deep Learning

Dec 16, 2024

Abstract: Monitoring wildfires has become increasingly critical due to the sharp rise in wildfire incidents in recent years. Optical satellites like Sentinel-2 and Landsat are extensively utilized for mapping burned areas. However, the effectiveness of optical sensors is compromised by clouds and smoke, which obstruct the detection of burned areas. Thus, satellites equipped with Synthetic Aperture Radar (SAR), such as dual-polarization Sentinel-1 and quad-polarization RADARSAT-1/-2 C-band SAR, which can penetrate clouds and smoke, are investigated for mapping burned areas. However, there is limited research on using compact polarisation (compact-pol) C-band RADARSAT Constellation Mission (RCM) SAR data for this purpose. This study aims to investigate the capacity of compact polarisation RCM data for burned area mapping through deep learning. Compact-pol m-chi decomposition and Compact-pol Radar Vegetation Index (CpRVI) are derived from the RCM Multi-look Complex product. A deep-learning-based processing pipeline incorporating ConvNet-based and Transformer-based models is applied for burned area mapping, with three different input settings: using only log-ratio dual-polarization intensity images, using only compact-pol decomposition plus CpRVI, and using all three data sources. The results demonstrate that compact-pol m-chi decomposition and CpRVI images significantly complement log-ratio images for burned area mapping. The best-performing Transformer-based model, UNETR, trained with log-ratio, m-chi decomposition, and CpRVI data, achieved an F1 Score of 0.718 and an IoU Score of 0.565, showing a notable improvement compared to the same model trained using only log-ratio images.
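
A log-ratio image of the kind this abstract mentions is commonly formed as the decibel-scale ratio of post-event to pre-event backscatter intensity. The following is a minimal sketch under that assumption; the function name `log_ratio`, the `eps` guard, and the sample values are hypothetical, and the paper's own preprocessing is not described in this listing.

```python
import math

def log_ratio(pre, post, eps=1e-6):
    """Per-pixel log-ratio in dB between two intensity images.

    `pre` and `post` are nested lists (rows of linear-scale intensities).
    `eps` guards against log(0) and division by zero; its value is an assumption.
    """
    return [
        [10.0 * math.log10((b + eps) / (a + eps)) for a, b in zip(row_pre, row_post)]
        for row_pre, row_post in zip(pre, post)
    ]

# Made-up 2x2 intensity images, for illustration only.
pre = [[0.10, 0.20], [0.05, 0.40]]
post = [[0.05, 0.20], [0.05, 0.10]]
lr = log_ratio(pre, post)
```

Unchanged pixels come out near 0 dB, while pixels whose backscatter dropped take negative values, so the sign and magnitude of the log-ratio carry the change signal.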

PANGAEA: A Global and Inclusive Benchmark for Geospatial Foundation Models

Dec 05, 2024

Abstract: Geospatial Foundation Models (GFMs) have emerged as powerful tools for extracting representations from Earth observation data, but their evaluation remains inconsistent and narrow. Existing works often evaluate on suboptimal downstream datasets and tasks that are too easy or too narrow, which limits how well the evaluations reflect the real-world applicability of GFMs. Additionally, current evaluation protocols lack diversity: they fail to account for the multiplicity of image resolutions, sensor types, and temporalities, which further complicates the assessment of GFM performance. In particular, most existing benchmarks are geographically biased towards North America and Europe, calling into question the global applicability of GFMs. To overcome these challenges, we introduce PANGAEA, a standardized evaluation protocol that covers a diverse set of datasets, tasks, resolutions, sensor modalities, and temporalities. It establishes a robust and widely applicable benchmark for GFMs. We evaluate the most popular GFMs openly available on this benchmark and analyze their performance across several domains. In particular, we compare these models to supervised baselines (e.g. UNet and vanilla ViT), and assess their effectiveness when faced with limited labeled data. Our findings highlight the limitations of GFMs under different scenarios, showing that they do not consistently outperform supervised models. PANGAEA is designed to be highly extensible, allowing for the seamless inclusion of new datasets, models, and tasks in future research. By releasing the evaluation code and benchmark, we aim to enable other researchers to replicate our experiments and build upon our work, fostering a more principled evaluation protocol for large pre-trained geospatial models. The code is available at https://github.com/VMarsocci/pangaea-bench.

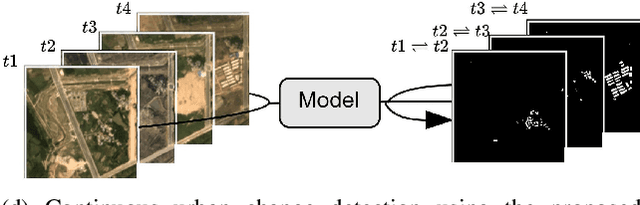

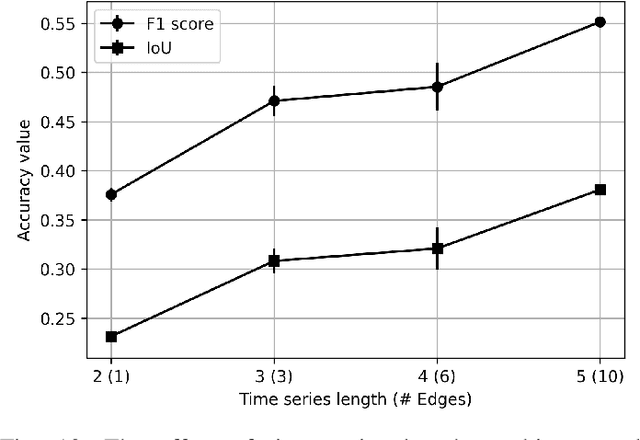

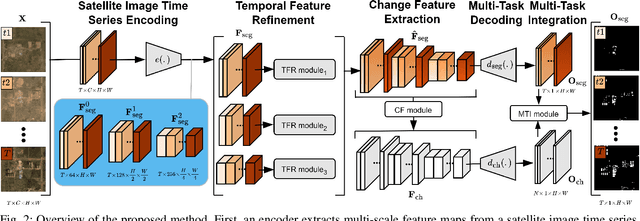

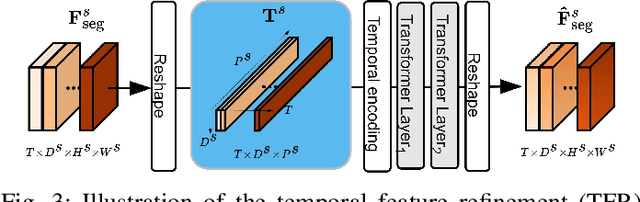

Continuous Urban Change Detection from Satellite Image Time Series with Temporal Feature Refinement and Multi-Task Integration

Jun 25, 2024

Abstract: Urbanization advances at unprecedented rates, resulting in negative effects on the environment and human well-being. Remote sensing has the potential to mitigate these effects by supporting sustainable development strategies with accurate information on urban growth. Deep learning-based methods have achieved promising urban change detection results from optical satellite image pairs using convolutional neural networks (ConvNets), transformers, and a multi-task learning setup. However, transformers have not been leveraged for urban change detection with multi-temporal data, i.e., >2 images, and multi-task learning methods lack integration approaches that combine change and segmentation outputs. To fill this research gap, we propose a continuous urban change detection method that identifies changes in each consecutive image pair of a satellite image time series. Specifically, we propose a temporal feature refinement (TFR) module that utilizes self-attention to improve ConvNet-based multi-temporal building representations. Furthermore, we propose a multi-task integration (MTI) module that utilizes Markov networks to find an optimal building map time series based on segmentation and dense change outputs. The proposed method effectively identifies urban changes based on high-resolution satellite image time series acquired by the PlanetScope constellation (F1 score 0.551) and Gaofen-2 (F1 score 0.440). Moreover, our experiments on two challenging datasets demonstrate the effectiveness of the proposed method compared to bi-temporal and multi-temporal urban change detection and segmentation methods.

A CNN regression model to estimate buildings height maps using Sentinel-1 SAR and Sentinel-2 MSI time series

Jul 03, 2023

Abstract: Accurate estimation of building heights is essential for urban planning, infrastructure management, and environmental analysis. In this study, we propose a supervised Multimodal Building Height Regression Network (MBHR-Net) for estimating building heights at 10 m spatial resolution using Sentinel-1 (S1) and Sentinel-2 (S2) satellite time series. S1 provides Synthetic Aperture Radar (SAR) data that offers valuable information on building structures, while S2 provides multispectral data that is sensitive to different land cover types, vegetation phenology, and building shadows. Our MBHR-Net aims to extract meaningful features from the S1 and S2 images to learn complex spatio-temporal relationships between image patterns and building heights. The model is trained and tested in 10 cities in the Netherlands. Root Mean Squared Error (RMSE), Intersection over Union (IoU), and R-squared (R2) score metrics are used to evaluate the performance of the model. The preliminary results (3.73 m RMSE, 0.95 IoU, 0.61 R2) demonstrate the effectiveness of our deep learning model in accurately estimating building heights, showcasing its potential for urban planning, environmental impact analysis, and other related applications.
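
For readers unfamiliar with the two regression metrics named in this abstract, the sketch below computes RMSE and R2 using their standard definitions. The function names and the sample heights are hypothetical, and the sketch does not reproduce the paper's evaluation protocol (for example, which pixels are included).

```python
import math

def rmse(y_true, y_pred):
    """Root mean squared error, in the same unit as the inputs (here metres)."""
    n = len(y_true)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n)

def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - residual sum of squares / total sum of squares."""
    mean_t = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot

# Made-up building heights in metres, for illustration only.
heights_true = [3.0, 6.0, 9.0, 12.0]
heights_pred = [4.0, 5.0, 10.0, 11.0]

height_rmse = rmse(heights_true, heights_pred)
height_r2 = r2_score(heights_true, heights_pred)
```

RMSE is expressed in metres, whereas R2 is unitless and measures how much of the height variance the predictions explain, which is why the two can tell different stories about the same model.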

Context-Aware Change Detection With Semi-Supervised Learning

Jun 15, 2023

Abstract: Change detection using Earth observation data plays a vital role in quantifying the impact of disasters in affected areas. While data sources like Sentinel-2 provide rich optical information, they are often hindered by cloud cover, limiting their usage in disaster scenarios. However, leveraging pre-disaster optical data can offer valuable contextual information about the area, such as land cover type, vegetation cover, and soil type, enabling a better understanding of the disaster's impact. In this study, we develop a model to assess the contribution of pre-disaster Sentinel-2 data in change detection tasks, focusing on disaster-affected areas. The proposed Context-Aware Change Detection Network (CACDN) utilizes a combination of pre-disaster Sentinel-2 data, pre- and post-disaster Sentinel-1 data, and ancillary Digital Elevation Model (DEM) data. The model is validated on flood and landslide detection and evaluated using three metrics: Area Under the Precision-Recall Curve (AUPRC), Intersection over Union (IoU), and mean IoU. The preliminary results show significant improvement (4% AUPRC, 3-7% IoU, 3-6% mean IoU) in the model's change detection capabilities when pre-disaster optical data are incorporated, reflecting the effectiveness of using contextual information for accurate flood and landslide detection.

Multi-Modal Deep Learning for Multi-Temporal Urban Mapping With a Partly Missing Optical Modality

Jun 01, 2023

Abstract: This paper proposes a novel multi-temporal urban mapping approach using multi-modal satellite data from the Sentinel-1 Synthetic Aperture Radar (SAR) and Sentinel-2 MultiSpectral Instrument (MSI) missions. In particular, it focuses on the problem of a partly missing optical modality due to clouds. The proposed model utilizes two networks to extract features from each modality separately. In addition, a reconstruction network is utilized to approximate the optical features based on the SAR data in case of a missing optical modality. Our experiments on a multi-temporal urban mapping dataset with Sentinel-1 SAR and Sentinel-2 MSI data demonstrate that the proposed method outperforms a multi-modal approach that uses zero values as a replacement for missing optical data, as well as a uni-modal SAR-based approach. Therefore, the proposed method is effective in exploiting multi-modal data, if available, but it also retains its effectiveness in case the optical modality is missing.

Investigating Imbalances Between SAR and Optical Utilization for Multi-Modal Urban Mapping

Apr 11, 2023

Abstract: Accurate urban maps provide essential information to support sustainable urban development. Recent urban mapping methods use multi-modal deep neural networks to fuse Synthetic Aperture Radar (SAR) and optical data. However, multi-modal networks may rely on just one modality due to the greedy nature of learning. In turn, the imbalanced utilization of modalities can negatively affect the generalization ability of a network. In this paper, we investigate the utilization of SAR and optical data for urban mapping. To that end, a dual-branch network architecture using intermediate fusion modules to share information between the uni-modal branches is utilized. A cut-off mechanism in the fusion modules enables the stopping of information flow between the branches, which is used to estimate the network's dependence on SAR and optical data. While our experiments on the SEN12 Global Urban Mapping dataset show that good performance can be achieved with conventional SAR-optical data fusion (F1 score = 0.682 ± 0.014), we also observed a clear under-utilization of optical data. Therefore, future work is required to investigate whether a more balanced utilization of SAR and optical data can lead to performance improvements.

Mapping Urban Population Growth from Sentinel-2 MSI and Census Data Using Deep Learning: A Case Study in Kigali, Rwanda

Mar 15, 2023

Abstract: To better understand current trends of urban population growth in Sub-Saharan Africa, high-quality spatiotemporal population estimates are necessary. While the joint use of remote sensing and deep learning has achieved promising results for population distribution estimation, most of the current work focuses on fine-scale spatial predictions derived from single-date census data, thereby neglecting temporal analyses. In this work, we focus on evaluating how deep learning change detection techniques can unravel temporal population dynamics at short intervals. Since Post-Classification Comparison (PCC) methods for change detection are known to propagate the error of the individual maps, we propose an end-to-end population growth mapping method. Specifically, a ResNet encoder, pretrained on a population mapping task with Sentinel-2 MSI data, was incorporated into a Siamese network. The Siamese network was trained at the census level to accurately predict population change. The effectiveness of the proposed method is demonstrated in Kigali, Rwanda, for the time period 2016-2020, using bi-temporal Sentinel-2 data. Compared to PCC, the Siamese network greatly reduced errors in population change predictions at the census level. These results show promise for future remote sensing-based population growth mapping endeavors.
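
The error propagation attributed to PCC in this abstract can be illustrated with a small sketch: when change is taken as the difference of two independently predicted maps, the error of the change estimate is the difference of the two map errors. All numbers below are invented for illustration and are not results from the paper; the helper name `change` is hypothetical.

```python
def change(pop_t1, pop_t2):
    """Per-unit population change between two dates."""
    return [later - earlier for earlier, later in zip(pop_t1, pop_t2)]

# Made-up numbers for two census units; not data from the paper.
true_2016, true_2020 = [100, 200], [120, 210]
pred_2016, pred_2020 = [90, 215], [130, 200]

true_change = change(true_2016, true_2020)  # reference change
pcc_change = change(pred_2016, pred_2020)   # PCC: difference of two predicted maps

# Errors of the individual maps and of the derived change estimate.
map_err_2016 = [p - t for p, t in zip(pred_2016, true_2016)]
map_err_2020 = [p - t for p, t in zip(pred_2020, true_2020)]
change_err = [c - t for c, t in zip(pcc_change, true_change)]
```

In this toy case each map is off by at most 15 people per unit, yet the change estimate is off by 20 and 25, because errors of opposite sign in the two maps add up in the difference.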

Unsupervised Flood Detection on SAR Time Series

Dec 07, 2022

Abstract: Human civilization has an increasingly powerful influence on the Earth system. Affected by climate change and land-use change, natural disasters such as flooding have been increasing in recent years. Earth observations are an invaluable source for assessing and mitigating negative impacts. Detecting changes from Earth observation data is one way to monitor the possible impact. Effective and reliable Change Detection (CD) methods can help in identifying the risk of disaster events at an early stage. In this work, we propose a novel unsupervised CD method on time series Synthetic Aperture Radar (SAR) data. Our proposed method is a probabilistic model trained with unsupervised learning techniques, reconstruction, and contrastive learning. The change map is generated with the help of the distribution difference between pre-incident and post-incident data. Our proposed CD model is evaluated on flood detection data. We verified the efficacy of our model on 8 different flood sites, including three recent flood events from Copernicus Emergency Management Services and six from the Sen1Floods11 dataset. Our proposed model achieved an average of 64.53% Intersection over Union (IoU) value and 75.43% F1 score. The IoU score is approximately 6-27% and the F1 score approximately 7-22% better than those of the compared existing unsupervised and supervised CD methods. The results and extensive discussion presented in the study show the effectiveness of the proposed unsupervised CD method.
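
IoU and F1, the two scores reported in several abstracts above, can both be derived from the same pixel counts. The sketch below uses the standard definitions on flattened binary masks; the function names, the sample masks, and the choice to return 0.0 when a mask pair has no positive pixels are assumptions, not details taken from any of the papers.

```python
def confusion_counts(truth, pred):
    """Count true positives, false positives, and false negatives in binary masks."""
    tp = fp = fn = 0
    for t, p in zip(truth, pred):
        if p and t:
            tp += 1
        elif p and not t:
            fp += 1
        elif t and not p:
            fn += 1
    return tp, fp, fn

def iou(truth, pred):
    """Intersection over Union: TP / (TP + FP + FN)."""
    tp, fp, fn = confusion_counts(truth, pred)
    denom = tp + fp + fn
    return tp / denom if denom else 0.0  # empty-mask convention is an assumption

def f1(truth, pred):
    """F1 score: 2 * TP / (2 * TP + FP + FN)."""
    tp, fp, fn = confusion_counts(truth, pred)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0  # empty-mask convention is an assumption

# Made-up flattened flood masks (1 = flooded), for illustration only.
truth = [1, 1, 1, 0, 0, 0, 1, 0]
pred = [1, 1, 0, 0, 1, 0, 1, 0]

mask_iou = iou(truth, pred)
mask_f1 = f1(truth, pred)
```

On a single mask pair the two scores are tied by F1 = 2 * IoU / (1 + IoU), so F1 is never lower than IoU; averages over several sites, as reported in the abstract, need not satisfy that identity exactly.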
