Rikky Muller

SPIRIT: Low Power Seizure Prediction using Unsupervised Online-Learning and Zoom Analog Frontends

Sep 07, 2024

Abstract: Early prediction of seizures and timely interventions are vital for improving patients' quality of life. While seizure prediction has been demonstrated in software-based implementations, timely warnings of upcoming seizures require prediction to run on an edge device to reduce latency. Ideally, such devices should also be low-power and track long-term drifts to minimize maintenance by the user. This work presents SPIRIT: Stochastic-gradient-descent-based Predictor with Integrated Retraining and In situ accuracy Tuning. SPIRIT is a complete system-on-a-chip (SoC) integrating an unsupervised online-learning seizure prediction classifier with eight Zoom Analog Frontends, each consuming 14.4 uW, occupying 0.057 mm2, and offering 90.5 dB dynamic range. SPIRIT achieves an average sensitivity of 97.5% and specificity of 96.2%, predicting seizures an average of 8.4 minutes before they occur. Through its online learning algorithm, prediction accuracy improves by up to 15% and prediction times extend by up to 7x, without any external intervention. Its classifier consumes 17.2 uW and occupies 0.14 mm2, the lowest reported for a prediction classifier by >134x in power and >5x in area. SPIRIT is also at least 5.6x more energy efficient than the state of the art.
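The sensitivity and specificity figures quoted above follow the standard definitions for a binary classifier: sensitivity = TP / (TP + FN) and specificity = TN / (TN + FP). The short Python sketch below computes both from paired label sequences. The abstract does not state whether SPIRIT is scored per seizure event or per analysis window, so the function simply counts whatever binary labels it receives; the 1/0 encoding and the example labels are assumptions for illustration.

```python
def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity) for binary labels.

    Assumed encoding: 1 = seizure/preictal, 0 = otherwise.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # seizures correctly flagged
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # seizures missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # quiet periods correctly passed
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    # Return 0.0 rather than dividing by zero when a class is absent.
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    return sensitivity, specificity


# Hypothetical labels: 4 positive and 5 negative windows, one miss and one false alarm.
sens, spec = sensitivity_specificity([1, 1, 1, 1, 0, 0, 0, 0, 0],
                                     [1, 1, 1, 0, 0, 0, 0, 0, 1])
# sens == 0.75, spec == 0.8
```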

Towards EMG-to-Speech with a Necklace Form Factor

Jul 31, 2024

Abstract: Electrodes for decoding speech from electromyography (EMG) are typically placed on the face, requiring adhesives that are inconvenient and irritate the skin with regular use. We explore a different device form factor, in which dry electrodes are placed around the neck instead. Multi-speaker voiced EMG classifiers for an 11-word vocabulary, trained on data recorded with this device, achieve 92.7% accuracy. Ablation studies reveal the importance of having more than two electrodes on the neck, and phonological analyses reveal similar classification confusions for the neck-only and neck-and-face form factors. Finally, speech-EMG correlation experiments demonstrate a linear relationship between many EMG spectrogram frequency bins and self-supervised speech representation dimensions.
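One simple way to quantify a linear relationship between EMG spectrogram frequency bins and speech representation dimensions is to compute a Pearson correlation for every (bin, dimension) pair. The Python sketch below assumes both signals have already been time-aligned and resampled to a common frame rate, and uses Pearson's r as an illustrative choice; the abstract does not specify the exact statistic or preprocessing used.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return 0.0  # undefined for a constant series; report no correlation
    return cov / math.sqrt(var_x * var_y)


def bin_dimension_correlations(emg_bins, speech_dims):
    """emg_bins[i]: time series of EMG spectrogram bin i (length T, assumed aligned).
    speech_dims[j]: time series of speech representation dimension j (length T).
    Returns r[i][j], the correlation between bin i and dimension j."""
    return [[pearson(b, d) for d in speech_dims] for b in emg_bins]
```

Bins with |r| close to 1 for some dimension are the ones that track that dimension approximately linearly; a full analysis would also account for lags and multiple comparisons, which this sketch ignores.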

A Wireless Ear EEG Drowsiness Monitor

Jan 11, 2024

Abstract: Wireless neural wearables can enable life-saving drowsiness, cognitive, and health monitoring for heavy machinery operators, pilots, and drivers. While existing systems use in-cabin sensors to alert operators before accidents, wearables may enable monitoring across many user environments. Current neural wearables are promising but limited by consumable electrodes and bulky, wired electronics. To improve the usability and scalability of neural wearables and to enable discreet use in daily and itinerant environments, this work showcases the end-to-end design of the first wireless, in-ear, dry-electrode drowsiness monitoring platform. The proposed platform integrates additive manufacturing processes for gold-plated dry electrodes, user-generic earpiece designs, wireless electronics, and low-complexity machine learning algorithms. To evaluate the platform, thirty-five hours of ExG data were recorded across nine subjects performing repetitive drowsiness-inducing tasks. The data were used to train three offline classifier models (logistic regression, support vector machine, and random forest), which were evaluated under three training regimes (user-specific, leave-one-trial-out, and leave-one-user-out). The support vector machine classifier achieved an average accuracy of 93.2% on users it had seen before and 93.3% on a never-before-seen user. These results demonstrate for the first time that dry, 3D-printed, user-generic electrodes can be used with wireless electronics to rapidly prototype wearable systems and achieve average accuracy (>90%) comparable to existing state-of-the-art in-ear and scalp ExG systems that use wet electrodes and wired benchtop electronics. Further, this work demonstrates the feasibility of using population-trained machine learning models in future wearable ear ExG applications focused on cognitive health and wellness tracking.
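The leave-one-trial-out and leave-one-user-out regimes are both instances of leave-one-group-out cross-validation: each fold holds out every sample belonging to one group (a trial or a user) and trains on the rest. A minimal Python sketch follows; the record fields `user`, `trial`, `x`, and `y` are hypothetical, and grouping trials by (user, trial) is one assumed reading of the leave-one-trial-out regime. scikit-learn provides the same kind of split as `sklearn.model_selection.LeaveOneGroupOut`.

```python
def leave_one_group_out(samples, group_of):
    """Yield (held_out_group, train, test) folds.

    samples: list of records (e.g. dicts with features and labels).
    group_of: function mapping a record to its group id (a user, or a trial).
    """
    groups = sorted({group_of(s) for s in samples})
    for held_out in groups:
        train = [s for s in samples if group_of(s) != held_out]
        test = [s for s in samples if group_of(s) == held_out]
        yield held_out, train, test


# Hypothetical records: two users, two trials each.
samples = [
    {"user": "u1", "trial": 1, "x": [0.1], "y": 0},
    {"user": "u1", "trial": 2, "x": [0.9], "y": 1},
    {"user": "u2", "trial": 1, "x": [0.2], "y": 0},
    {"user": "u2", "trial": 2, "x": [0.8], "y": 1},
]

# Leave-one-user-out: the held-out user never appears in the training fold.
for user, train, test in leave_one_group_out(samples, lambda s: s["user"]):
    assert all(s["user"] != user for s in train)

# Leave-one-trial-out (assumed grouping): each (user, trial) pair is held out once.
trial_folds = list(leave_one_group_out(samples, lambda s: (s["user"], s["trial"])))
```

The leave-one-user-out folds are what make the 93.3% "never-before-seen user" figure meaningful: no data from the test subject can leak into training.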

SOUL: An Energy-Efficient Unsupervised Online Learning Seizure Detection Classifier

Oct 01, 2021

Abstract: Implantable devices that record neural activity and detect seizures have been adopted to issue warnings or trigger neurostimulation to suppress epileptic seizures. Typical seizure detection systems rely on high-accuracy, offline-trained machine learning classifiers that require manual retraining when seizure patterns change over long periods of time. For an implantable seizure detection system, a low-power, at-the-edge, online learning algorithm can be employed to dynamically adapt to neural signal drifts, thereby maintaining high accuracy without external intervention. This work proposes SOUL: Stochastic-gradient-descent-based Online Unsupervised Logistic regression classifier. After an initial offline training phase, continuous online unsupervised classifier updates are applied in situ, which improves sensitivity in patients with drifting seizure features. SOUL was tested on two human electroencephalography (EEG) datasets: the CHB-MIT scalp EEG dataset and a long (>100 hours) NeuroVista intracranial EEG dataset. It achieved average sensitivities of 97.5% and 97.9% on the two datasets, respectively, at >95% specificity. On long-term data, sensitivity improved by up to 8.2% compared to a typical seizure detection classifier. SOUL was fabricated in TSMC's 28 nm process, occupies 0.1 mm2, and achieves an energy efficiency of 1.5 nJ/classification, at least 24x more efficient than the state of the art.
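The abstract describes SOUL as an offline-trained logistic regression whose weights continue to be updated in situ by stochastic gradient descent without labels. It does not give the exact unsupervised update rule, so the Python sketch below assumes one common form of self-training: the classifier's own thresholded decision serves as a pseudo-label for an SGD step on the logistic loss, and only confident decisions (controlled by a hypothetical `margin` parameter) trigger an update. The class name, learning rate, margin, and example features are illustrative, not values from the paper.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class OnlineLogReg:
    """Logistic regression trained offline, then adapted online without labels (illustrative)."""

    def __init__(self, n_features, lr=0.05):
        self.w = [0.0] * n_features
        self.b = 0.0
        self.lr = lr  # learning rate (assumed value)

    def prob(self, x):
        """Probability that feature vector x belongs to the seizure class."""
        return sigmoid(sum(wi * xi for wi, xi in zip(self.w, x)) + self.b)

    def sgd_step(self, x, y):
        """One SGD step on the log loss; its gradient w.r.t. w is (p - y) * x."""
        err = self.prob(x) - y
        self.w = [wi - self.lr * err * xi for wi, xi in zip(self.w, x)]
        self.b -= self.lr * err

    def fit_offline(self, X, Y, epochs=50, seed=0):
        """Initial supervised phase on labeled feature vectors."""
        rng = random.Random(seed)
        order = list(range(len(X)))
        for _ in range(epochs):
            rng.shuffle(order)
            for i in order:
                self.sgd_step(X[i], Y[i])

    def predict_and_adapt(self, x, margin=0.2):
        """Classify x, then self-train on the decision if it is confident.

        The pseudo-label rule and the confidence margin are assumptions of this
        sketch, not details taken from the SOUL paper.
        """
        p = self.prob(x)
        y_hat = 1 if p >= 0.5 else 0
        if abs(p - 0.5) >= margin:
            self.sgd_step(x, y_hat)
        return y_hat


# Hypothetical 2-D features: seizure windows near (2, 2), non-seizure near (-2, -2).
X = [[2, 2], [2.5, 1.5], [1.5, 2.5], [-2, -2], [-2.5, -1.5], [-1.5, -2.5]]
Y = [1, 1, 1, 0, 0, 0]
clf = OnlineLogReg(n_features=2)
clf.fit_offline(X, Y)

# Later, unlabeled windows arrive from a slowly drifting distribution.
for x in [[2.4, 2.6], [-1.6, -2.2], [2.8, 2.9]]:
    decision = clf.predict_and_adapt(x)
```

Gating updates on confidence is one way to limit the risk of the classifier reinforcing its own mistakes as features drift; it is a design assumption of this sketch rather than a documented feature of SOUL.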

Memory-Efficient, Limb Position-Aware Hand Gesture Recognition using Hyperdimensional Computing

Mar 09, 2021

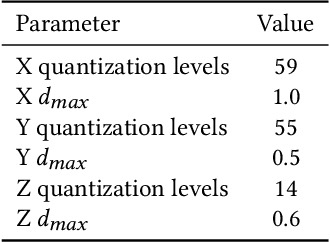

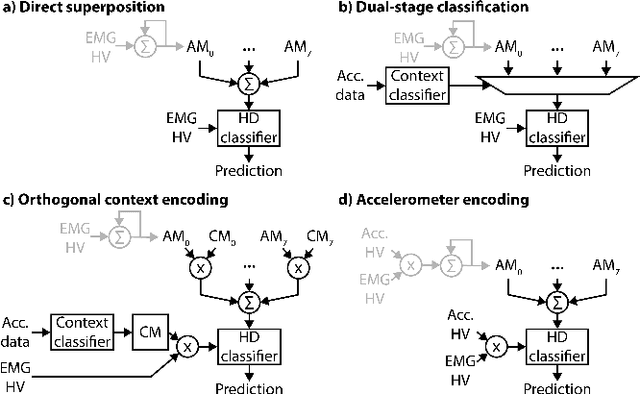

Abstract: Electromyogram (EMG) pattern recognition can be used to classify hand gestures and movements for human-machine interface and prosthetics applications, but it often faces reliability issues resulting from limb position changes. One method to address this is dual-stage classification, in which the limb position is first determined using additional sensors to select between multiple position-specific gesture classifiers. While improving performance, this also increases model complexity and memory footprint, making a dual-stage classifier difficult to implement in a wearable device with limited resources. In this paper, we present sensor fusion of accelerometer and EMG signals using a hyperdimensional computing model to emulate dual-stage classification in a memory-efficient way. We demonstrate two methods of encoding accelerometer features to act as keys for retrieval of position-specific parameters from multiple models stored in superposition. Through validation on a dataset of 13 gestures in 8 limb positions, we obtain a classification accuracy of up to 93.34%, an improvement of 17.79% over using a model trained solely on EMG. We achieve this while only marginally increasing memory footprint over a single limb position model, requiring 8x less memory than a traditional dual-stage classification architecture.
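Key-based retrieval from models stored in superposition can be illustrated with bipolar hypervectors. In this Python sketch, each limb position has a key hypervector (random here, standing in for the encoded accelerometer features), each position-specific gesture prototype is bound to its position key by element-wise multiplication, and the bound vectors for all positions are summed into a single hypervector per gesture. Because a bipolar key is its own inverse under element-wise multiplication, multiplying the stored vector by a position key approximately recovers that position's prototype, while the other positions contribute near-orthogonal noise. The dimensionality, the bipolar representation, and the binding and bundling operators are assumptions; the abstract does not specify them.

```python
import random

D = 4096  # hypervector dimensionality (assumed)


def random_hv(rng):
    """Random bipolar hypervector with entries in {-1, +1}."""
    return [rng.choice((-1, 1)) for _ in range(D)]


def bind(a, b):
    """Element-wise multiplication; for bipolar vectors bind(k, k) is all ones."""
    return [x * y for x, y in zip(a, b)]


def bundle(vectors):
    """Element-wise sum (superposition), kept as integers."""
    return [sum(column) for column in zip(*vectors)]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def build_superposed_model(keys, prototypes):
    """keys[p]: key for limb position p.
    prototypes[p][c]: gesture-c prototype trained at position p.
    Returns one hypervector per gesture holding every position in superposition."""
    n_classes = len(prototypes[0])
    return [bundle([bind(keys[p], prototypes[p][c]) for p in range(len(keys))])
            for c in range(n_classes)]


def classify(model, key, query):
    """Unbind with the position key, then return the most similar gesture index."""
    scores = [dot(bind(m, key), query) for m in model]
    return max(range(len(scores)), key=scores.__getitem__)


# Hypothetical setup mirroring the dataset size: 8 positions, 13 gestures.
rng = random.Random(0)
n_positions, n_gestures = 8, 13
keys = [random_hv(rng) for _ in range(n_positions)]
prototypes = [[random_hv(rng) for _ in range(n_gestures)] for _ in range(n_positions)]
model = build_superposed_model(keys, prototypes)

# A noisy query for gesture 5 at position 3 (about 20% of entries flipped).
noisy = [-v if rng.random() < 0.2 else v for v in prototypes[3][5]]
predicted = classify(model, keys[3], noisy)
```

The model stores one hypervector per gesture (plus one key per position) instead of one per gesture per position, which illustrates where the memory savings of superposition come from.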