Ana C. Arias

A Wireless Ear EEG Drowsiness Monitor

Jan 11, 2024

Abstract: Wireless neural wearables can enable life-saving drowsiness, cognitive, and health monitoring for heavy machinery operators, pilots, and drivers. While existing systems use in-cabin sensors to alert operators before accidents, wearables may enable monitoring across many user environments. Current neural wearables are promising but limited by consumable electrodes and bulky, wired electronics. To improve neural wearable usability and scalability, and to enable discreet use in daily and itinerant environments, this work showcases the end-to-end design of the first wireless, in-ear, dry-electrode drowsiness monitoring platform. The proposed platform integrates additive manufacturing processes for gold-plated dry electrodes, user-generic earpiece designs, wireless electronics, and low-complexity machine learning algorithms. To evaluate the platform, thirty-five hours of ExG data were recorded across nine subjects performing repetitive drowsiness-inducing tasks. The data were used to train three offline classifier models (logistic regression, support vector machine, and random forest), which were evaluated under three training regimes (user-specific, leave-one-trial-out, and leave-one-user-out). The support vector machine classifier achieved an average accuracy of 93.2% when evaluating users it had seen before and 93.3% when evaluating a never-before-seen user. These results demonstrate for the first time that dry, 3D-printed, user-generic electrodes can be used with wireless electronics to rapidly prototype wearable systems and achieve average accuracy (>90%) comparable to existing state-of-the-art in-ear and scalp ExG systems that use wet electrodes and wired, benchtop electronics. Further, this work demonstrates the feasibility of using population-trained machine learning models in future wearable ear ExG applications focused on cognitive health and wellness tracking.
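In a leave-one-user-out evaluation, each fold trains on every subject except one and tests on the held-out subject. The minimal Python sketch below shows how such folds can be generated. The record fields (`user`, `trial`, `window`), the three-trials-per-user layout, and the helper name `leave_one_group_out` are hypothetical, and treating a leave-one-trial-out fold as one held-out (user, trial) recording is an assumption about that regime rather than the paper's stated protocol. For array data, scikit-learn's `LeaveOneGroupOut` splitter performs the same grouping.

```python
def leave_one_group_out(samples, group_of):
    """Yield (held_out_group, train, test), holding out each group exactly once."""
    for group in sorted({group_of(s) for s in samples}):
        train = [s for s in samples if group_of(s) != group]
        test = [s for s in samples if group_of(s) == group]
        yield group, train, test

# Hypothetical records: 9 users (as in the study) x 3 trials x 2 feature windows.
samples = [
    {"user": u, "trial": t, "window": w}
    for u in range(9)
    for t in range(3)
    for w in range(2)
]

# Leave-one-user-out: the test user never appears in the training set.
louo = list(leave_one_group_out(samples, lambda s: s["user"]))
print(len(louo))  # 9 folds

# Assumed leave-one-trial-out: hold out one (user, trial) recording at a time.
loto = list(leave_one_group_out(samples, lambda s: (s["user"], s["trial"])))
print(len(loto))  # 27 folds
```

Grouping by user, rather than shuffling windows at random, keeps windows from the same person from landing on both sides of a split, which is what makes a never-before-seen-user accuracy meaningful.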

Adaptive EMG-based hand gesture recognition using hyperdimensional computing

Jan 02, 2019

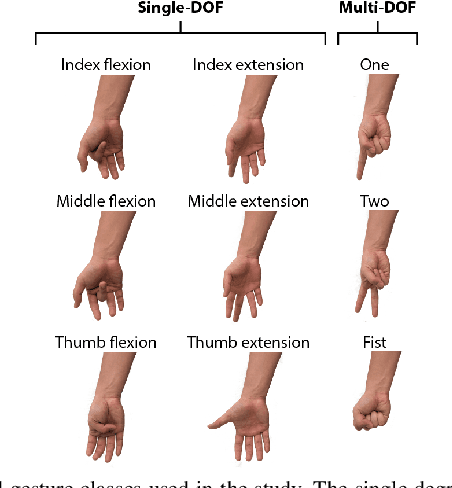

Abstract: Accurate recognition of hand gestures is crucial to the functionality of smart prosthetics and other modern human-computer interfaces. Many machine learning-based classifiers use electromyography (EMG) signals as input features, but they often misclassify gestures performed in different situational contexts (changing arm position, reapplication of electrodes, etc.) or with different effort levels due to changing signal properties. Here, we describe a learning and classification algorithm based on hyperdimensional (HD) computing that, unlike traditional machine learning algorithms, enables computationally efficient updates to incrementally incorporate new data and adapt to changing contexts. EMG signal encoding for both training and classification is performed using the same set of simple operations on 10,000-element random hypervectors, enabling updates on the fly. Through human experiments using a custom EMG acquisition system, we demonstrate 88.87% classification accuracy on 13 individual finger flexion and extension gestures. Using simple model updates, we preserve this accuracy with less than 5.48% degradation when expanding to 21 commonly used gestures or when subject to changing situational contexts. We also show that the same methods for updating models can be used to account for variations resulting from the effort level with which a gesture is performed.
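To make the "same set of simple operations" concrete, here is a minimal Python sketch of a generic HD classifier; it is not the encoder used in the paper. It assumes bipolar (+1/-1) hypervectors, binding as an elementwise product, bundling as an elementwise majority vote, and one feature value in [0, 1] per channel quantized into levels. The class `HDClassifier`, the independent random level vectors, and the gesture labels and feature values are all hypothetical. Because a class model is just a running sum of encoded samples, training and adaptation are the same `update` call, and adding a gesture never touches the other classes.

```python
import random

D = 10_000  # hypervector length, matching the 10,000 elements cited in the abstract

def random_hv(rng):
    """Random bipolar hypervector with elements drawn from {-1, +1}."""
    return [rng.choice((-1, 1)) for _ in range(D)]

def bind(a, b):
    """Binding: elementwise product; the result is dissimilar to both inputs."""
    return [x * y for x, y in zip(a, b)]

def bundle(vectors):
    """Bundling: elementwise majority vote (sign of the sum); ties go to +1."""
    return [1 if sum(col) >= 0 else -1 for col in zip(*vectors)]

def similarity(a, b):
    """Normalized dot product: 1.0 for identical vectors, near 0 for unrelated ones."""
    return sum(x * y for x, y in zip(a, b)) / len(a)

class HDClassifier:
    """Hypothetical HD classifier sketch; not the encoder used in the paper."""

    def __init__(self, n_channels, n_levels, seed=0):
        rng = random.Random(seed)
        self.n_levels = n_levels
        # Item memory: one random hypervector per EMG channel and per amplitude level.
        self.channel_hvs = [random_hv(rng) for _ in range(n_channels)]
        self.level_hvs = [random_hv(rng) for _ in range(n_levels)]
        self.accumulators = {}  # gesture label -> running elementwise sum

    def encode(self, features):
        """Encode one feature value in [0, 1] per channel into a single hypervector."""
        bound = []
        for ch, value in enumerate(features):
            level = min(int(value * self.n_levels), self.n_levels - 1)
            bound.append(bind(self.channel_hvs[ch], self.level_hvs[level]))
        return bundle(bound)

    def update(self, label, features):
        """Train or adapt incrementally: add one encoded sample to its class sum."""
        acc = self.accumulators.setdefault(label, [0] * D)
        for i, x in enumerate(self.encode(features)):
            acc[i] += x

    def predict(self, features):
        """Return the label whose bipolarized prototype is most similar to the query."""
        query = self.encode(features)

        def score(label):
            prototype = [1 if s >= 0 else -1 for s in self.accumulators[label]]
            return similarity(query, prototype)

        return max(self.accumulators, key=score)

clf = HDClassifier(n_channels=4, n_levels=8)
clf.update("fist", [0.9, 0.1, 0.8, 0.2])
clf.update("open", [0.1, 0.9, 0.2, 0.7])
print(clf.predict([0.88, 0.12, 0.79, 0.22]))  # fist
# Adding a new gesture later only touches its own accumulator; no retraining.
clf.update("point", [0.5, 0.5, 0.1, 0.9])
print(clf.predict([0.5, 0.5, 0.1, 0.9]))  # point
```

Independent random level vectors are a simplification; a real encoder would typically make neighboring amplitude levels similar so that small signal changes map to nearby hypervectors.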

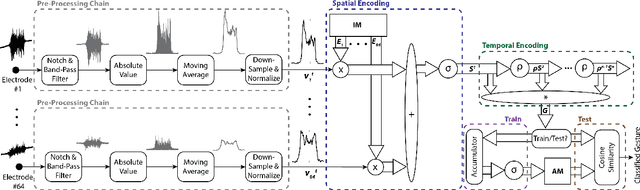

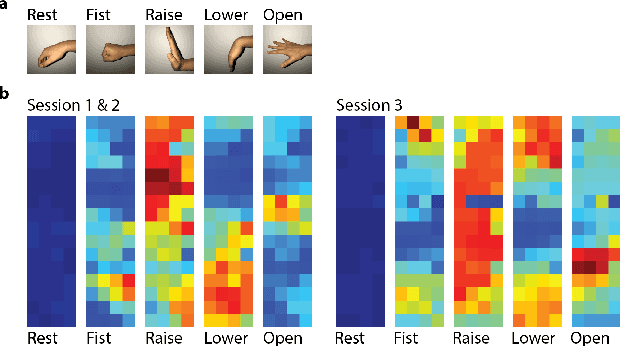

An EMG Gesture Recognition System with Flexible High-Density Sensors and Brain-Inspired High-Dimensional Classifier

Apr 05, 2018

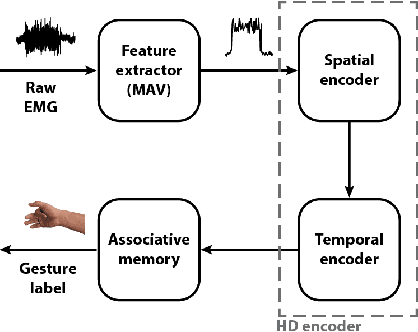

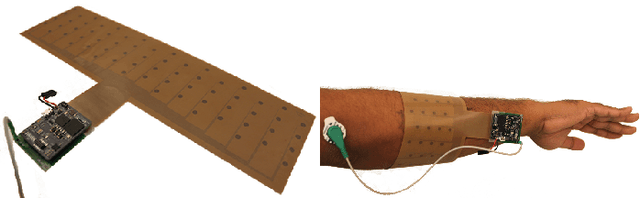

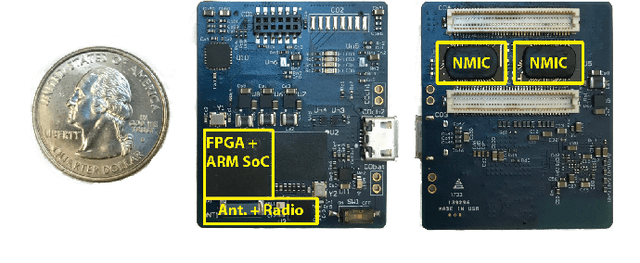

Abstract: EMG-based gesture recognition shows promise for human-machine interaction. Systems are often afflicted by signal and electrode variability, which degrades performance over time. We present an end-to-end system combating this variability using a large-area, high-density sensor array and a robust classification algorithm. EMG electrodes are fabricated on a flexible substrate and interfaced to a custom wireless device for 64-channel signal acquisition and streaming. We use brain-inspired high-dimensional (HD) computing for processing EMG features in one-shot learning. The HD algorithm is tolerant to noise and electrode misplacement and can quickly learn from few gestures without gradient descent or back-propagation. We achieve an average classification accuracy of 96.64% for five gestures, with only 7% degradation when training and testing across different days. Our system maintains this accuracy when trained with only three trials of gestures; it also achieves accuracy comparable to the state of the art when trained with one trial.
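The noise tolerance and one-shot learning mentioned above rest on a general property of high-dimensional random vectors: unrelated hypervectors are nearly orthogonal, so a stored prototype remains recognizable even after a large fraction of its elements is corrupted. The Python sketch below illustrates only that property. Random hypervectors stand in for encoded EMG trials, the gesture names are hypothetical, and flipping a random 30% of elements is an assumed, generic noise model rather than a model of electrode misplacement. "Training" is one-shot: a single example per gesture becomes that gesture's prototype, with no gradient descent.

```python
import random

D = 10_000  # hypervector dimensionality (assumed)

def random_hv(rng):
    """Random bipolar hypervector with elements drawn from {-1, +1}."""
    return [rng.choice((-1, 1)) for _ in range(D)]

def similarity(a, b):
    """Normalized dot product: 1.0 for identical vectors, near 0 for unrelated ones."""
    return sum(x * y for x, y in zip(a, b)) / D

def corrupt(hv, fraction, rng):
    """Flip the sign of round(fraction * D) randomly chosen elements."""
    noisy = list(hv)
    for i in rng.sample(range(D), round(fraction * D)):
        noisy[i] = -noisy[i]
    return noisy

rng = random.Random(42)
# One-shot "training": a single (stand-in) encoded trial per hypothetical gesture.
prototypes = {g: random_hv(rng) for g in ("fist", "open", "point", "pinch", "rest")}

# Query: the "pinch" prototype with 30% of its elements flipped.
query = corrupt(prototypes["pinch"], 0.30, rng)
scores = {g: similarity(query, p) for g, p in prototypes.items()}
prediction = max(scores, key=scores.get)
print(prediction, round(scores["pinch"], 2))  # pinch 0.4
```

Flipping 30% of the elements leaves a similarity of exactly 1 - 2(0.30) = 0.4 to the true prototype, while similarities to unrelated prototypes cluster around 0 with a standard deviation of about 1/sqrt(D) = 0.01, so the nearest-prototype decision stays correct by a wide margin.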