Ricardo Ribeiro

Learning Rhetorical Structure Theory-based descriptions of observed behaviour

Jun 24, 2022

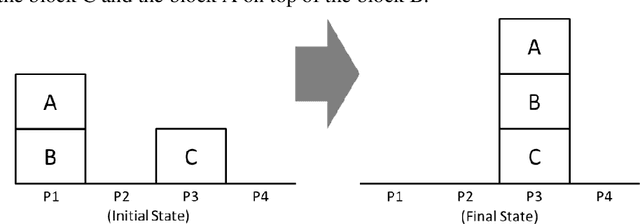

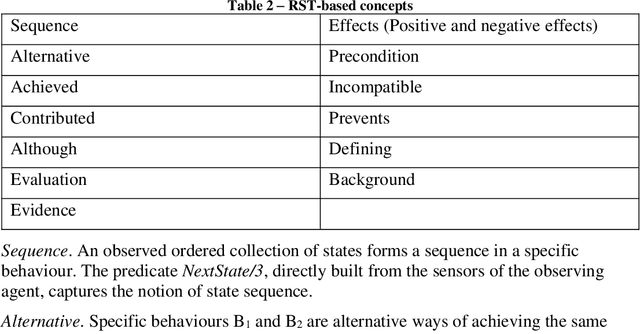

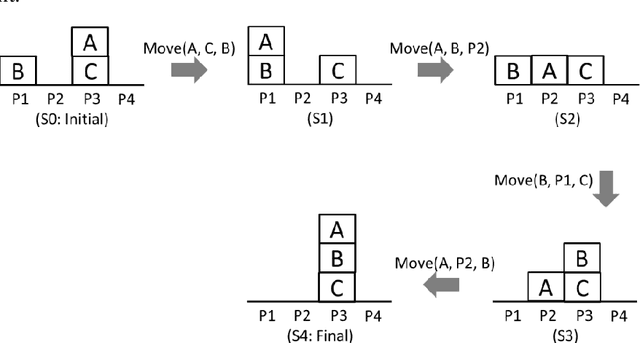

Abstract: In a previous paper, we proposed a set of concepts, axiom schemata and algorithms that can be used by agents to learn to describe their behaviour, goals, capabilities, and environment. The current paper proposes a new set of concepts, axiom schemata and algorithms that allow the agent to learn new descriptions of an observed behaviour (e.g., perplexing actions), of its actor (e.g., undesired propositions or actions), and of its environment (e.g., incompatible propositions). Each learned description (e.g., a certain action prevents another action from being performed in the future) is represented by a relationship between entities (either propositions or actions) and is learned by the agent, just by observation, using domain-independent axiom schemata and/or learning algorithms. The relations used by agents to represent the descriptions they learn were inspired by Rhetorical Structure Theory (RST). The main contribution of the paper is the relation family Although, inspired by the RST relation Concession. The accurate definition of the relations of the family Although involves a set of deontic concepts whose definition and corresponding algorithms are presented. The relations of the family Although, once extracted from the agent's observations, express surprise at the observed behaviour and, in certain circumstances, present a justification for it. The paper shows results of the presented proposals in a demonstration scenario, using implemented software.

Towards Learning Through Open-Domain Dialog

Feb 07, 2022

Abstract: The development of artificial agents able to learn through dialog without domain restrictions has the potential to allow machines to learn how to perform tasks in a similar manner to humans and change how we relate to them. However, research in this area is practically nonexistent. In this paper, we identify the modifications required for a dialog system to be able to learn from the dialog and propose generic approaches that can be used to implement those modifications. More specifically, we discuss how knowledge can be extracted from the dialog, used to update the agent's semantic network, and grounded in action and observation. This way, we hope to raise awareness for this subject, so that it can become a focus of research in the future.

Online Body Schema Adaptation through Cost-Sensitive Active Learning

Jan 26, 2021

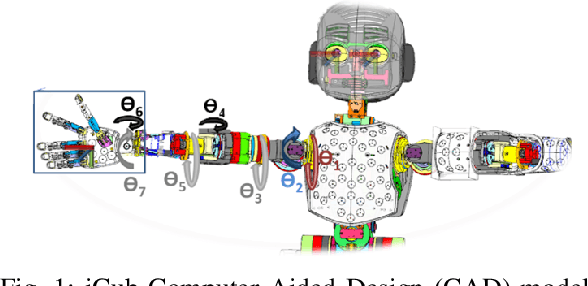

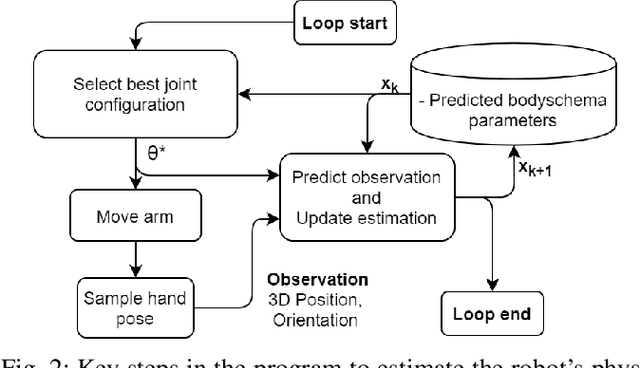

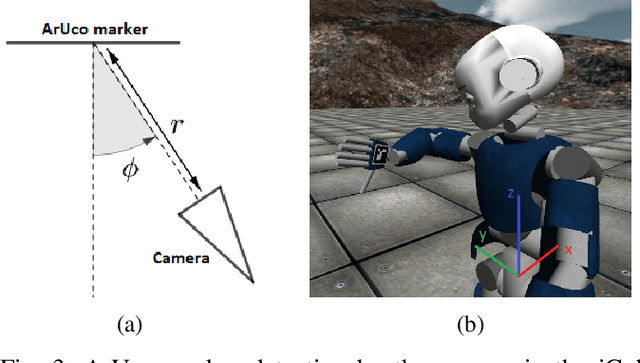

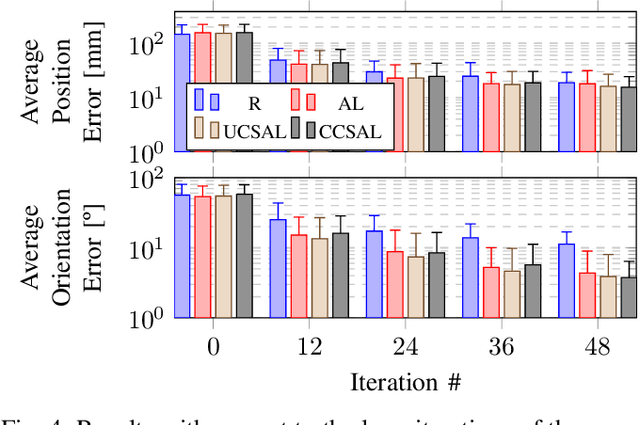

Abstract: Humanoid robots have complex bodies and kinematic chains with several Degrees-of-Freedom (DoF) which are difficult to model. Learning the parameters of a kinematic model can be achieved by observing the position of the robot links during prospective motions and minimising the prediction errors. This work proposes a movement-efficient approach for estimating online the body schema of a humanoid robot arm in the form of Denavit-Hartenberg (DH) parameters. A cost-sensitive active learning approach based on the A-Optimality criterion is used to select optimal joint configurations. The chosen joint configurations simultaneously minimise the error in the estimation of the body schema and minimise the movement between samples. This reduces energy consumption, along with mechanical fatigue and wear, while not compromising the learning accuracy. The work was implemented in a simulation environment, using the 7DoF arm of the iCub robot simulator. The hand pose is measured with a single camera via markers placed in the palm and back of the robot's hand. A non-parametric occlusion model is proposed to avoid choosing joint configurations where the markers are not visible, thus preventing worthless attempts. The results show cost-sensitive active learning has similar accuracy to the standard active learning approach, while reducing the executed movement by about half.

General-Purpose Communicative Function Recognition using a Hierarchical Network with Cascading Outputs and Maximum a Posteriori Path Estimation

Mar 07, 2020

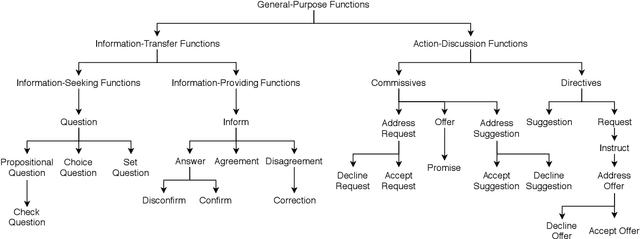

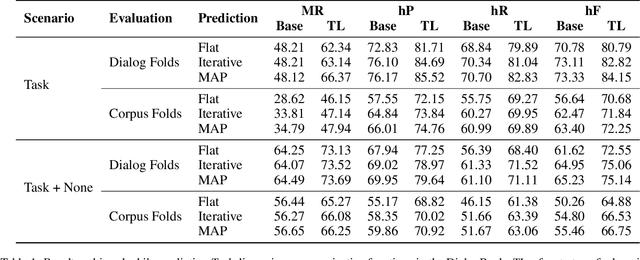

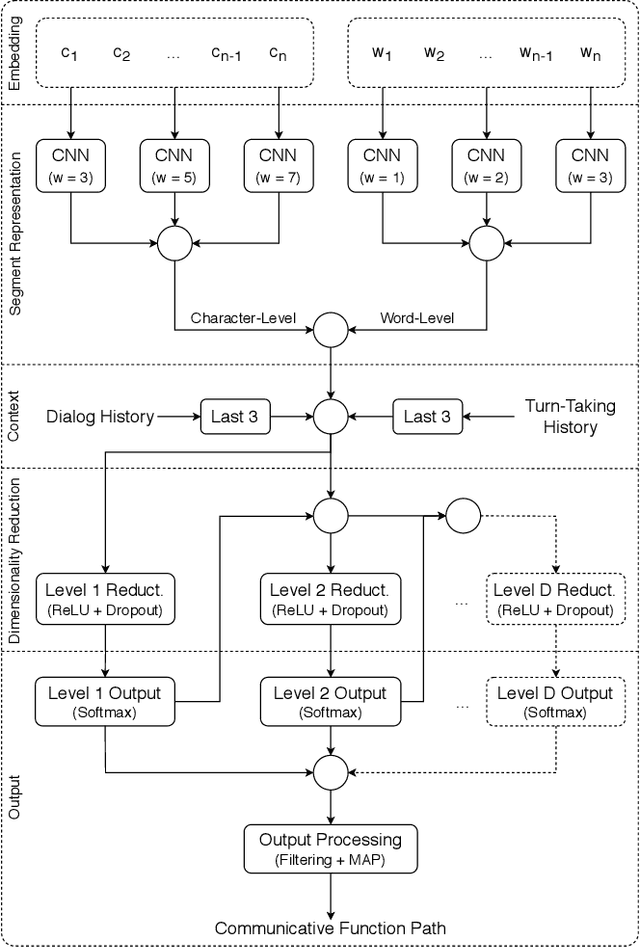

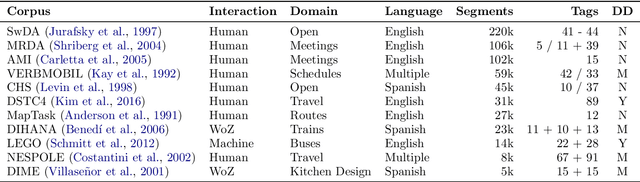

Abstract: ISO 24617-2, the standard for dialog act annotation, defines a hierarchically organized set of general-purpose communicative functions. The automatic recognition of these functions, although practically unexplored, is relevant for a dialog system, since they provide cues regarding the intention behind the segments and how they should be interpreted. In this paper, we explore the recognition of general-purpose communicative functions in the DialogBank, which is a reference set of dialogs annotated according to the standard. To do so, we adapt a state-of-the-art approach to flat dialog act recognition to deal with the hierarchical classification problem. More specifically, we propose the use of a hierarchical network with cascading outputs and maximum a posteriori path estimation to predict the communicative function at each level of the hierarchy, preserve the dependencies between the functions in the path, and decide at which level to stop. Furthermore, since the number of dialogs in the DialogBank is small, we rely both on additional dialogs annotated using mapping processes and on transfer learning to improve performance. The results of our experiments show that the hierarchical approach outperforms a flat one and that maximum a posteriori estimation outperforms an iterative prediction approach based on masking.
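The idea of picking a most probable path through a label hierarchy, including the decision of where to stop, can be illustrated with a small sketch. This is not the paper's network or estimator: the function `map_path`, the dictionary layout, the explicit per-node stop probability, and the toy labels are all assumptions made for illustration.

```python
def map_path(tree, probs, stop_prob):
    """Return the highest-scoring path in a label hierarchy (illustrative only).

    tree:      dict mapping a parent label (None for the root) to its children
    probs:     dict mapping a label to P(label | its parent was chosen)
    stop_prob: dict mapping an internal label to P(stop at that label);
               leaves always stop, and a missing internal entry also means "always stop"
    """
    best_path, best_score = (), 0.0
    stack = [((), None, 1.0)]  # (path so far, current node, probability mass so far)
    while stack:
        path, node, score = stack.pop()
        for child in tree.get(node, []):
            child_path = path + (child,)
            child_score = score * probs[child]
            has_children = bool(tree.get(child))
            p_stop = stop_prob.get(child, 1.0) if has_children else 1.0
            # Score of ending the path at this label.
            if child_score * p_stop > best_score:
                best_path, best_score = child_path, child_score * p_stop
            # Mass that continues to deeper levels.
            if has_children:
                stack.append((child_path, child, child_score * (1.0 - p_stop)))
    return best_path, best_score


# Toy hierarchy and made-up probabilities (hypothetical values).
tree = {None: ["inform", "question"],
        "question": ["set_question", "check_question"]}
probs = {"inform": 0.3, "question": 0.7,
         "set_question": 0.8, "check_question": 0.2}
stop_prob = {"question": 0.25}

path, score = map_path(tree, probs, stop_prob)
```

Scoring whole paths, rather than greedily taking the best label at each level, is what lets the choice at one level depend on what is available below it.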

Hierarchical Multi-Label Dialog Act Recognition on Spanish Data

Jul 29, 2019

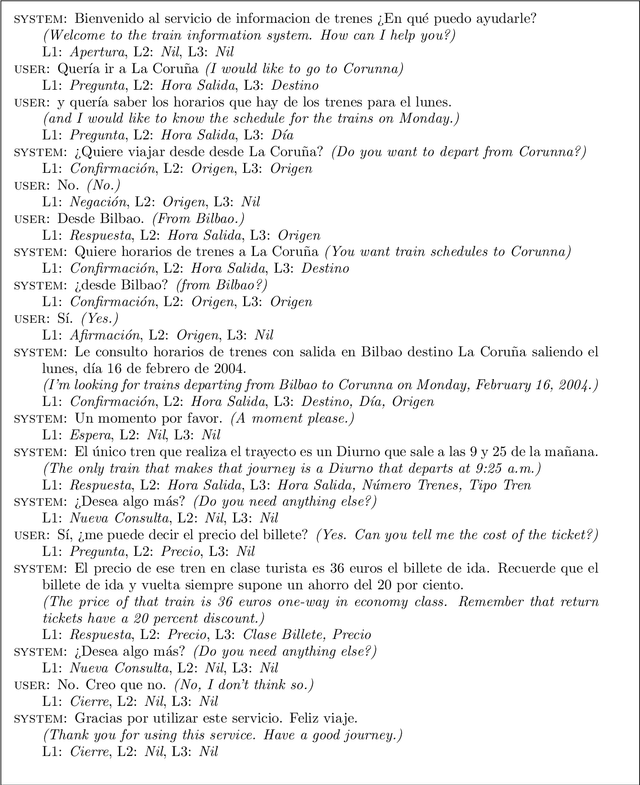

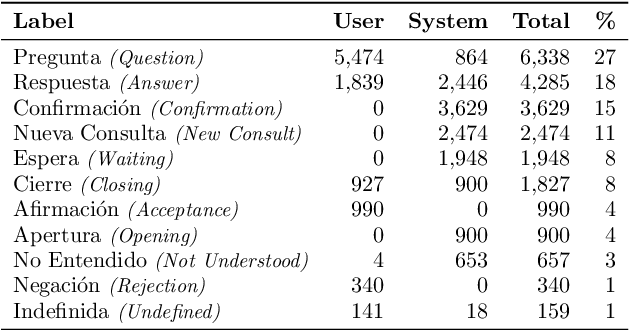

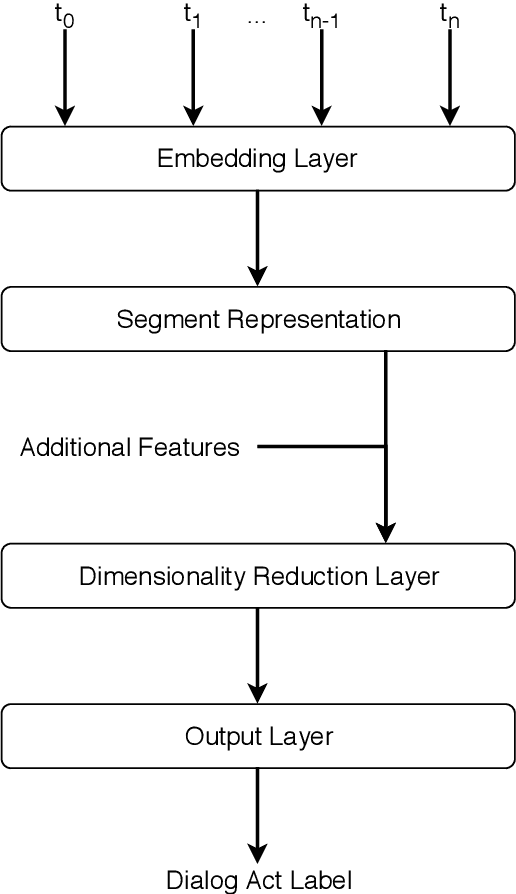

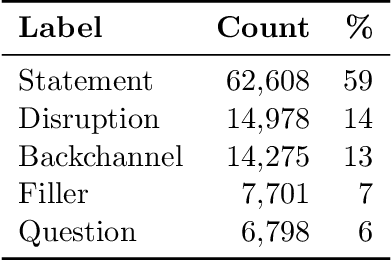

Abstract: Dialog acts reveal the intention behind the uttered words. Thus, their automatic recognition is important for a dialog system trying to understand its conversational partner. The study presented in this article approaches that task on the DIHANA corpus, whose three-level dialog act annotation scheme poses problems which have not been explored in recent studies. In addition to the hierarchical problem, the two lower levels pose multi-label classification problems. Furthermore, each level in the hierarchy refers to a different aspect concerning the intention of the speaker both in terms of the structure of the dialog and the task. Also, since its dialogs are in Spanish, it allows us to assess whether the state-of-the-art approaches on English data generalize to a different language. More specifically, we compare the performance of different segment representation approaches focusing on both sequences and patterns of words and assess the importance of the dialog history and the relations between the multiple levels of the hierarchy. Concerning the single-label classification problem posed by the top level, we show that the conclusions drawn on English data also hold on Spanish data. Furthermore, we show that the approaches can be adapted to multi-label scenarios. Finally, by hierarchically combining the best classifiers for each level, we achieve the best results reported for this corpus.

* 21 pages, 4 figures, 17 tables, translated version of the article published in Linguamática 11(1)

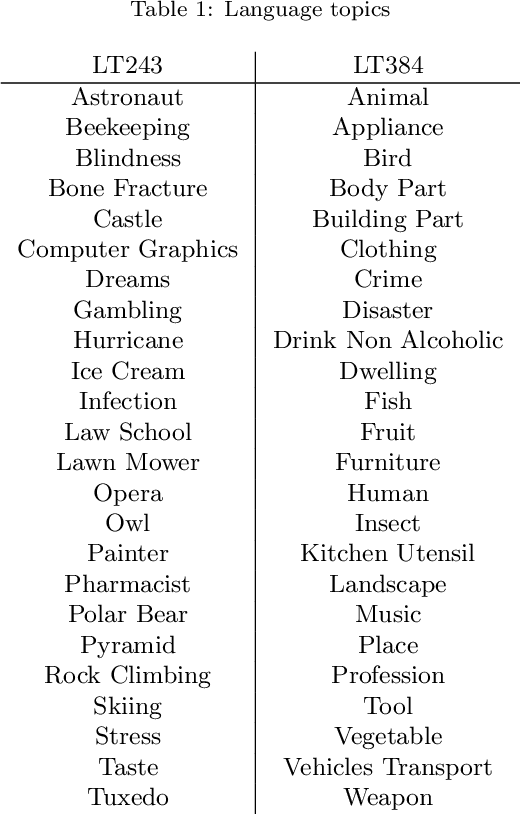

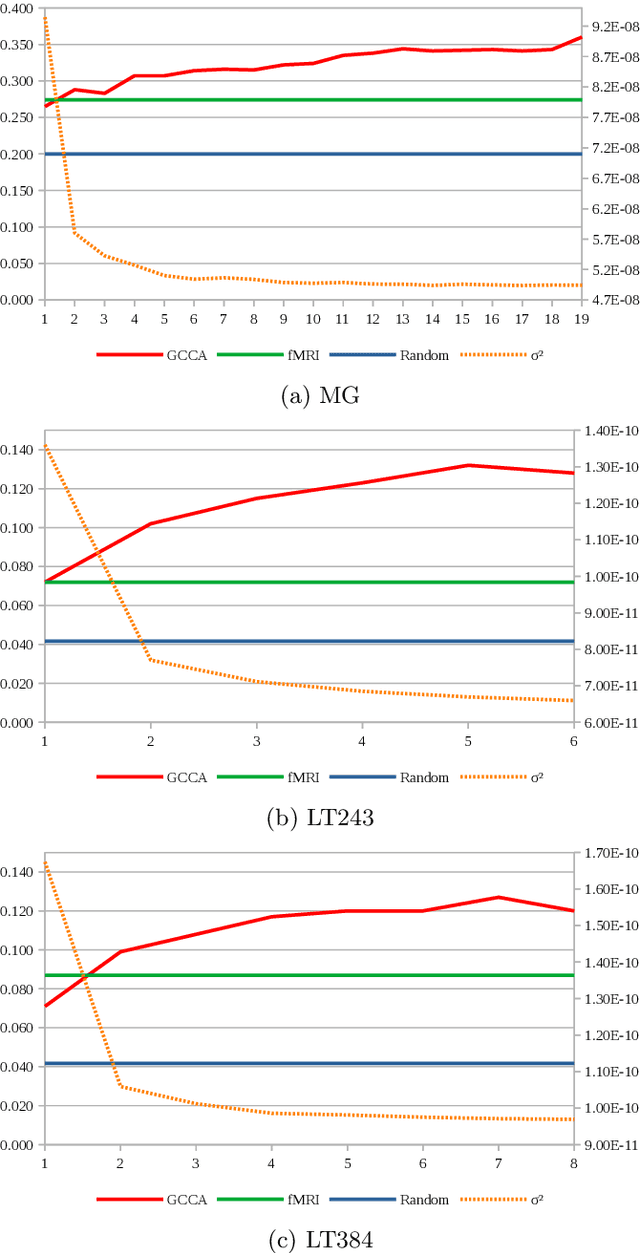

Low-dimensional Embodied Semantics for Music and Language

Jun 20, 2019

Abstract: Embodied cognition states that semantics is encoded in the brain as firing patterns of neural circuits, which are learned according to the statistical structure of human multimodal experience. However, each human brain is idiosyncratically biased, according to its subjective experience history, making this biological semantic machinery noisy with respect to the overall semantics inherent to media artifacts, such as music and language excerpts. We propose to represent shared semantics using low-dimensional vector embeddings by jointly modeling several brains from human subjects. We show these unsupervised efficient representations outperform the original high-dimensional fMRI voxel spaces in proxy music genre and language topic classification tasks. We further show that joint modeling of several subjects increases the semantic richness of the learned latent vector spaces.

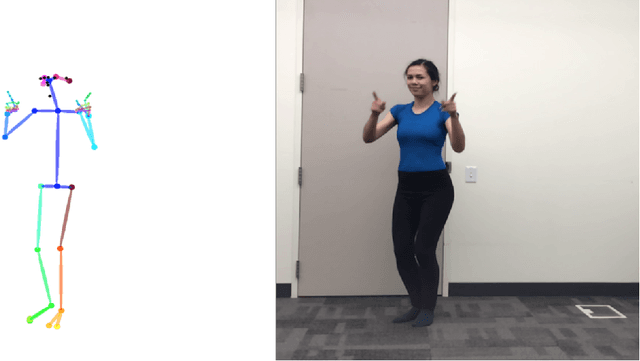

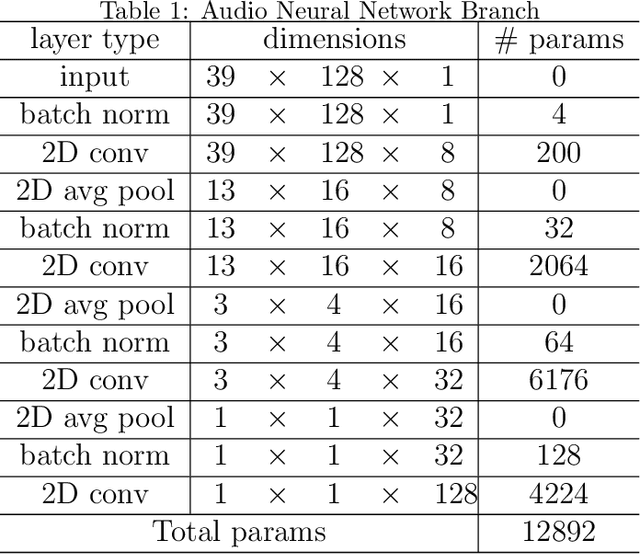

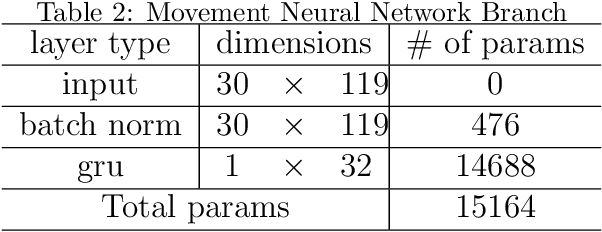

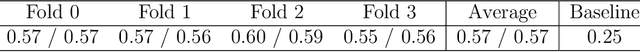

Learning Embodied Semantics via Music and Dance Semiotic Correlations

Mar 25, 2019

Abstract: Music semantics is embodied, in the sense that meaning is biologically mediated by and grounded in the human body and brain. This embodied cognition perspective also explains why music structures modulate kinetic and somatosensory perception. We leverage this aspect of cognition, by considering dance as a proxy for music perception, in a statistical computational model that learns semiotic correlations between music audio and dance video. We evaluate the ability of this model to effectively capture underlying semantics in a cross-modal retrieval task. Quantitative results, validated with statistical significance testing, strengthen the body of evidence for embodied cognition in music and show the model can recommend music audio for dance video queries and vice-versa.

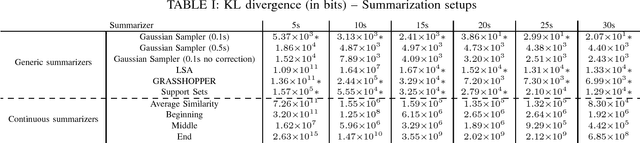

An Information-theoretic Approach to Machine-oriented Music Summarization

Sep 21, 2018

Abstract: Music summarization allows for higher efficiency in processing, storage, and sharing of datasets. Machine-oriented approaches, being agnostic to human consumption, optimize these aspects even further. Such summaries have already been successfully validated in some MIR tasks. We now generalize previous conclusions by evaluating the impact of generic summarization of music from a probabilistic perspective. We estimate Gaussian distributions for original and summarized songs and compute their relative entropy, in order to measure information loss incurred by summarization. Our results suggest that relative entropy is a good predictor of summarization performance in the context of tasks relying on a bag-of-features model. Based on this observation, we further propose a straightforward yet expressive summarizer, which minimizes relative entropy with respect to the original song, that objectively outperforms previous methods and is better suited to avoid potential copyright issues.
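The relative entropy between two Gaussians has a closed form, which a short sketch can make concrete. The version below assumes diagonal covariances and per-frame feature vectors given as plain lists; the function names, the variance floor, and the direction of the divergence (summary relative to original) are assumptions for illustration, not details taken from the paper.

```python
import math
from statistics import fmean, pvariance


def fit_diag_gaussian(frames, var_floor=1e-9):
    """Estimate per-dimension mean and variance from a list of feature vectors."""
    dims = list(zip(*frames))  # transpose: one tuple per feature dimension
    means = [fmean(d) for d in dims]
    # Floor the variance so constant dimensions do not cause division by zero.
    variances = [max(pvariance(d), var_floor) for d in dims]
    return means, variances


def kl_diag_gaussians(p, q):
    """KL(p || q) for two diagonal Gaussians given as (means, variances)."""
    (mu_p, var_p), (mu_q, var_q) = p, q
    return 0.5 * sum(
        vp / vq + (mq - mp) ** 2 / vq - 1.0 + math.log(vq / vp)
        for mp, vp, mq, vq in zip(mu_p, var_p, mu_q, var_q)
    )


# Hypothetical 2-dimensional features for a "song" and a "summary" of it.
original = fit_diag_gaussian([[0.0, 1.0], [2.0, 3.0], [4.0, 5.0]])
summary = fit_diag_gaussian([[0.0, 1.0], [4.0, 5.0]])
information_loss = kl_diag_gaussians(summary, original)
```

A summarizer in this spirit would search for the subset of frames whose fitted Gaussian gives the smallest divergence from the Gaussian of the full song.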

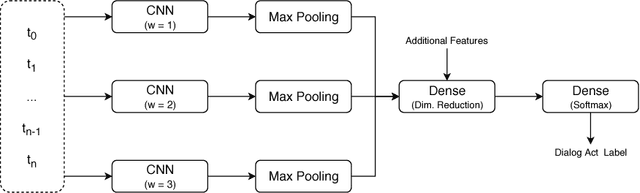

A Study on Dialog Act Recognition using Character-Level Tokenization

Jul 23, 2018

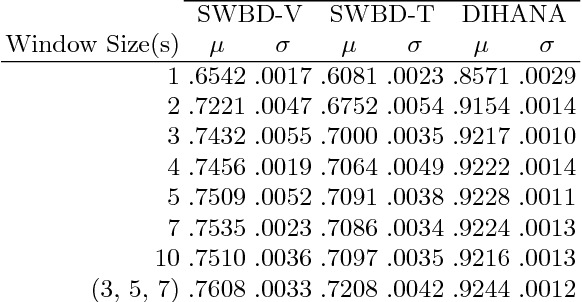

Abstract: Dialog act recognition is an important step for dialog systems since it reveals the intention behind the uttered words. Most approaches to the task use word-level tokenization. In contrast, this paper explores the use of character-level tokenization. This is relevant since there is information at the sub-word level that is related to the function of the words and, thus, their intention. We also explore the use of different context windows around each token, which are able to capture important elements, such as affixes. Furthermore, we assess the importance of punctuation and capitalization. We performed experiments on both the Switchboard Dialog Act Corpus and the DIHANA Corpus. In both cases, the experiments not only show that character-level tokenization leads to better performance than the typical word-level approaches, but also that both approaches are able to capture complementary information. Thus, the best results are achieved by combining tokenization at both levels.
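As a rough illustration of character-level tokenization with context windows, the sketch below splits a segment into characters, optionally dropping capitalization or punctuation, and then slides a fixed-width window over the tokens. The helper names and flags are hypothetical; in the paper the windows are part of a neural model, which this sketch does not attempt to reproduce.

```python
import string


def char_tokens(segment, keep_case=True, keep_punct=True):
    """Split a segment into character tokens, optionally normalizing it first."""
    if not keep_case:
        segment = segment.lower()
    if not keep_punct:
        segment = "".join(c for c in segment if c not in string.punctuation)
    return list(segment)


def windows(tokens, width):
    """Return every run of `width` consecutive tokens, joined into a string."""
    return ["".join(tokens[i:i + width]) for i in range(len(tokens) - width + 1)]


tokens = char_tokens("Is it?", keep_case=False, keep_punct=False)
trigrams = windows(char_tokens("going"), 3)  # the last window is the suffix "ing"
```

Windows of width three already isolate affixes such as "ing", which is the kind of sub-word cue the abstract refers to.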

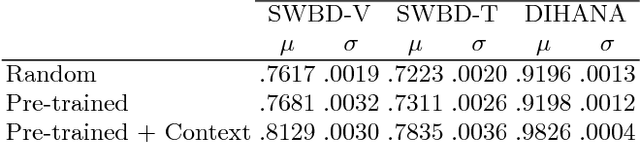

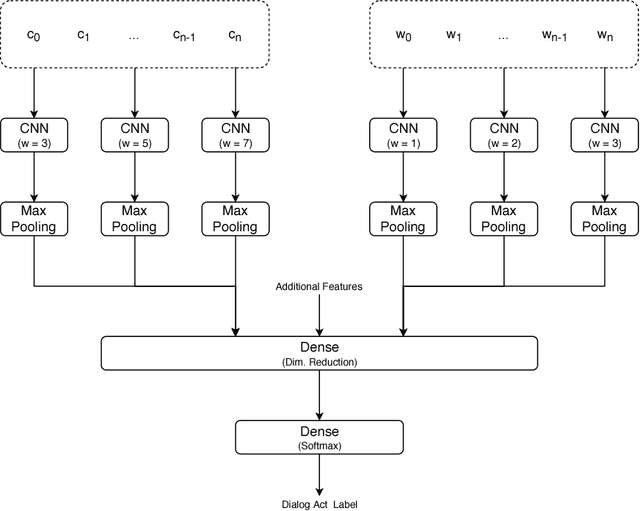

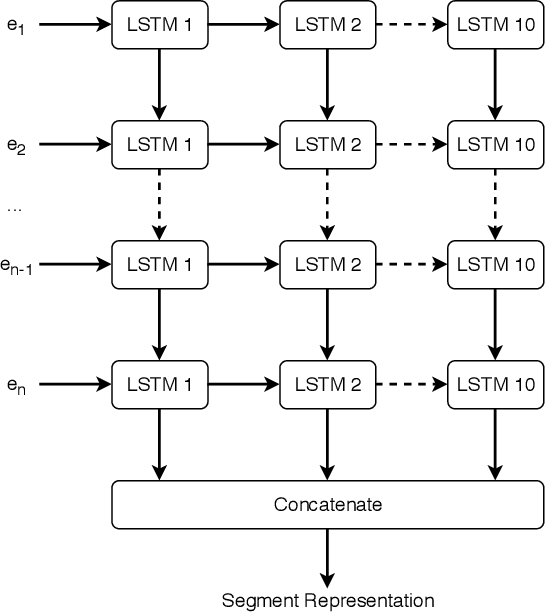

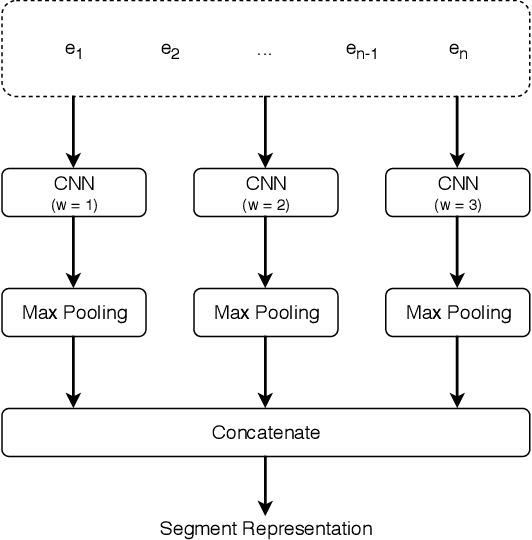

Deep Dialog Act Recognition using Multiple Token, Segment, and Context Information Representations

Jul 23, 2018

Abstract: A dialog act is a representation of an intention transmitted in the form of words. In this sense, when someone wants to transmit some intention, it is revealed both in the selected words and in how they are combined to form a structured segment. Furthermore, the intentions of a speaker depend not only on her intrinsic motivation, but also on the history of the dialog and the expectation she has of its future. In this article we explore multiple representation approaches to capture cues for intention at different levels. Recent approaches to automatic dialog act recognition use Word2Vec embeddings for word representation. However, these are not able to capture segment structure information or morphological traits related to intention. Thus, we also explore the use of dependency-based word embeddings, as well as character-level tokenization. To generate the segment representation, the top performing approaches to the task use either RNNs that are able to capture information concerning the sequentiality of the tokens or CNNs that are able to capture token patterns that reveal function. However, both aspects are important and should be captured together. Thus, we also explore the use of an RCNN. Finally, context information concerning turn-taking, as well as that provided by the surrounding segments, has proved important in previous studies. However, the representation approaches used for the latter in those studies are not appropriate to capture sequentiality, which is one of the most important characteristics of the segments in a dialog. Thus, we explore the use of approaches able to capture that information. By combining the best approaches for each aspect, we achieve results that surpass the previous state-of-the-art in a dialog system context and are similar to human-level in an annotation context on the Switchboard Dialog Act Corpus, which is the most explored corpus for the task.
