Renfu Lu

Advancement and Field Evaluation of a Dual-arm Apple Harvesting Robot

Jun 06, 2025

Abstract:Apples are among the most widely consumed fruits worldwide. Currently, apple harvesting fully relies on manual labor, which is costly, drudging, and hazardous to workers. Hence, robotic harvesting has attracted increasing attention in recent years. However, existing systems still fall short in terms of performance, effectiveness, and reliability for complex orchard environments. In this work, we present the development and evaluation of a dual-arm harvesting robot. The system integrates a ToF camera, two 4DOF robotic arms, a centralized vacuum system, and a post-harvest handling module. During harvesting, suction force is dynamically assigned to either arm via the vacuum system, enabling efficient apple detachment while reducing power consumption and noise. Compared to our previous design, we incorporated a platform movement mechanism that enables both in-out and up-down adjustments, enhancing the robot's dexterity and adaptability to varying canopy structures. On the algorithmic side, we developed a robust apple localization pipeline that combines a foundation-model-based detector, segmentation, and clustering-based depth estimation, which improves performance in orchards. Additionally, pressure sensors were integrated into the system, and a novel dual-arm coordination strategy was introduced to respond to harvest failures based on sensor feedback, further improving picking efficiency. Field demos were conducted in two commercial orchards in MI, USA, with different canopy structures. The system achieved success rates of 0.807 and 0.797, with an average picking cycle time of 5.97s. The proposed strategy reduced harvest time by 28% compared to a single-arm baseline. The dual-arm harvesting robot enhances the reliability and efficiency of apple picking. With further advancements, the system holds strong potential for autonomous operation and commercialization for the apple industry.

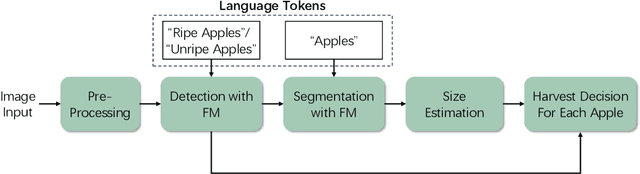

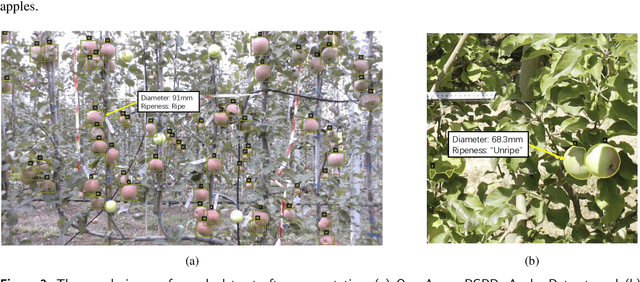

Foundation Model-Based Apple Ripeness and Size Estimation for Selective Harvesting

Feb 03, 2025

Abstract:Harvesting is a critical task in the tree fruit industry, demanding extensive manual labor and substantial costs, and exposing workers to potential hazards. Recent advances in automated harvesting offer a promising solution by enabling efficient, cost-effective, and ergonomic fruit picking within tight harvesting windows. However, existing harvesting technologies often indiscriminately harvest all visible and accessible fruits, including those that are unripe or undersized. This study introduces a novel foundation model-based framework for efficient apple ripeness and size estimation. Specifically, we curated two public RGBD-based Fuji apple image datasets, integrating expanded annotations for ripeness ("Ripe" vs. "Unripe") based on fruit color and image capture dates. The resulting comprehensive dataset, Fuji-Ripeness-Size Dataset, includes 4,027 images and 16,257 annotated apples with ripeness and size labels. Using Grounding-DINO, a language-model-based object detector, we achieved robust apple detection and ripeness classification, outperforming other state-of-the-art models. Additionally, we developed and evaluated six size estimation algorithms, selecting the one with the lowest error and variation for optimal performance. The Fuji-Ripeness-Size Dataset and the apple detection and size estimation algorithms are made publicly available, which provides valuable benchmarks for future studies in automated and selective harvesting.
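The abstract above does not describe its six size estimation algorithms, so the following is only a minimal sketch of one common way to turn an RGB-D detection into a physical size: the pinhole camera relation between pixel width, depth, and focal length. The function name, parameters, and numbers are hypothetical and are not taken from the paper.

```python
def estimate_diameter_mm(bbox_width_px: float, depth_mm: float, focal_length_px: float) -> float:
    """Approximate fruit diameter from a bounding box using the pinhole model.

    Assumption (not from the paper): the box width spans the fruit's diameter
    and depth_mm is the distance from the camera to the fruit.
    """
    if bbox_width_px < 0 or depth_mm <= 0 or focal_length_px <= 0:
        raise ValueError("width must be non-negative; depth and focal length must be positive")
    # Similar triangles: real_width / depth = pixel_width / focal_length
    return bbox_width_px * depth_mm / focal_length_px


if __name__ == "__main__":
    # Illustrative values only: a 60 px wide box at 1 m with a 600 px focal length.
    print(estimate_diameter_mm(60, 1000, 600))
```

A bounding box tends to overestimate the diameter when the fruit is partly merged with a neighbor and underestimate it when the fruit is occluded, which is one reason several estimators might be compared.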

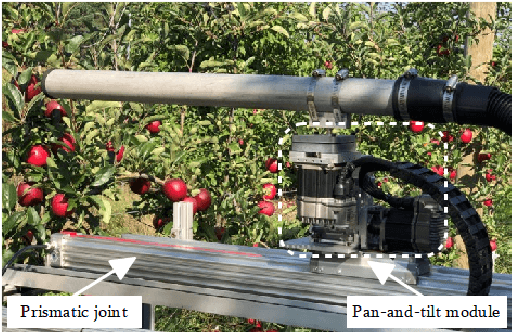

High-Precision Fruit Localization Using Active Laser-Camera Scanning: Robust Laser Line Extraction for 2D-3D Transformation

Nov 15, 2023

Abstract:Recent advancements in deep learning-based approaches have led to remarkable progress in fruit detection, enabling robust fruit identification in complex environments. However, much less progress has been made on fruit 3D localization, which is equally crucial for robotic harvesting. Complex fruit shape/orientation, fruit clustering, varying lighting conditions, and occlusions by leaves and branches have greatly restricted existing sensors from achieving accurate fruit localization in the natural orchard environment. In this paper, we report on the design of a novel localization technique, called Active Laser-Camera Scanning (ALACS), to achieve accurate and robust fruit 3D localization. The ALACS hardware setup comprises a red line laser, an RGB color camera, a linear motion slide, and an external RGB-D camera. Leveraging the principles of dynamic-targeting laser-triangulation, ALACS enables precise transformation of the projected 2D laser line from the surface of apples to the 3D positions. To facilitate laser pattern acquisitions, a Laser Line Extraction (LLE) method is proposed for robust and high-precision feature extraction on apples. Comprehensive evaluations of LLE demonstrated its ability to extract precise patterns under variable lighting and occlusion conditions. The ALACS system achieved average apple localization accuracies of 6.9-11.2 mm at distances ranging from 1.0 m to 1.6 m, compared to 21.5 mm by a commercial RealSense RGB-D camera, in an indoor experiment. Orchard evaluations demonstrated that ALACS has achieved a 95% fruit detachment rate versus a 71% rate by the RealSense camera. By overcoming the challenges of apple 3D localization, this research contributes to the advancement of robotic fruit harvesting technology.

Active Laser-Camera Scanning for High-Precision Fruit Localization in Robotic Harvesting: System Design and Calibration

Nov 04, 2023

Abstract:Robust and effective fruit detection and localization is essential for robotic harvesting systems. While extensive research efforts have been devoted to improving fruit detection, less emphasis has been placed on the fruit localization aspect, which is a crucial yet challenging task due to limited depth accuracy from existing sensor measurements in the natural orchard environment with variable lighting conditions and foliage/branch occlusions. In this paper, we present the system design and calibration of an Active LAser-Camera Scanner (ALACS), a novel perception module for robust and high-precision fruit localization. The hardware of ALACS mainly consists of a red line laser, an RGB camera, and a linear motion slide, which are seamlessly integrated into an active scanning scheme where a dynamic-targeting laser-triangulation principle is employed. A high-fidelity extrinsic model is developed to pair the laser illumination and the RGB camera, enabling precise depth computation when the target is captured by both sensors. A random sample consensus-based robust calibration scheme is then designed to calibrate the model parameters based on collected data. Comprehensive evaluations are conducted to validate the system model and calibration scheme. The results show that the proposed calibration method can detect and remove data outliers to achieve robust parameter computation, and the calibrated ALACS system is able to achieve high-precision localization with millimeter-level accuracy.
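The random sample consensus (RANSAC) idea mentioned in this abstract can be illustrated with a generic sketch. The code below fits a 2D line rather than the ALACS laser-camera extrinsic model, which the abstract does not specify, so every function name, parameter, and data point here is a hypothetical stand-in chosen only to show how outliers are detected and excluded before the final parameter fit.

```python
import random


def fit_line(p, q):
    """Return (slope, intercept) of the line through two points, or None if vertical."""
    (x1, y1), (x2, y2) = p, q
    if x1 == x2:
        return None
    slope = (y2 - y1) / (x2 - x1)
    return slope, y1 - slope * x1


def ransac_line(points, n_iters=200, tol=0.5, seed=0):
    """Fit y = slope * x + intercept while ignoring outliers.

    Repeatedly fits a candidate line to two random points, keeps the candidate
    with the most points within `tol` of it, then refits by least squares on
    those inliers only.
    """
    rng = random.Random(seed)
    best_inliers = []
    for _ in range(n_iters):
        model = fit_line(*rng.sample(points, 2))
        if model is None:
            continue
        slope, intercept = model
        inliers = [(x, y) for x, y in points
                   if abs(y - (slope * x + intercept)) <= tol]
        if len(inliers) > len(best_inliers):
            best_inliers = inliers
    if len(best_inliers) < 2:
        raise ValueError("no usable model found")
    # Least-squares refit on the consensus set.
    n = len(best_inliers)
    mean_x = sum(x for x, _ in best_inliers) / n
    mean_y = sum(y for _, y in best_inliers) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in best_inliers)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in best_inliers)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x, best_inliers


if __name__ == "__main__":
    # Synthetic data: ten points on y = 2x + 1 plus two gross outliers.
    data = [(float(x), 2.0 * x + 1.0) for x in range(10)] + [(3.0, 40.0), (7.0, -30.0)]
    slope, intercept, inliers = ransac_line(data)
    print(slope, intercept, len(inliers))
```

The same pattern (sample a minimal set, score by consensus, refit on inliers) carries over to models with more parameters; only the minimal-sample fit and the residual function change.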

O2RNet: Occluder-Occludee Relational Network for Robust Apple Detection in Clustered Orchard Environments

Mar 08, 2023

Abstract:Automated apple harvesting has attracted significant research interest in recent years due to its potential to revolutionize the apple industry, addressing the issues of shortage and high costs in labor. One key technology to fully enable efficient automated harvesting is accurate and robust apple detection, which is challenging due to complex orchard environments that involve varying lighting conditions and foliage/branch occlusions. Furthermore, clustered apples are common in the orchard, which brings additional challenges as the clustered apples may be identified as one apple. This will cause issues in localization for subsequent robotic operations. In this paper, we present the development of a novel deep learning-based apple detection framework, Occluder-Occludee Relational Network (O2RNet), for robust detection of apples in such clustered environments. This network exploits the occluder-occludee relationship modeling head by introducing a feature expansion structure to enable the combination of layered traditional detectors to split clustered apples and foliage occlusions. More specifically, we collect a comprehensive apple orchard image dataset under different lighting conditions (overcast, front lighting, and back lighting) with frequent apple occlusions. We then develop a novel occlusion-aware network for apple detection, in which a feature expansion structure is incorporated into the convolutional neural networks to extract additional features generated by the original network for occluded apples. Comprehensive evaluations are performed, which show that the developed O2RNet outperforms state-of-the-art models with a higher accuracy of 94% and a higher F1-score of 0.88 on apple detection.

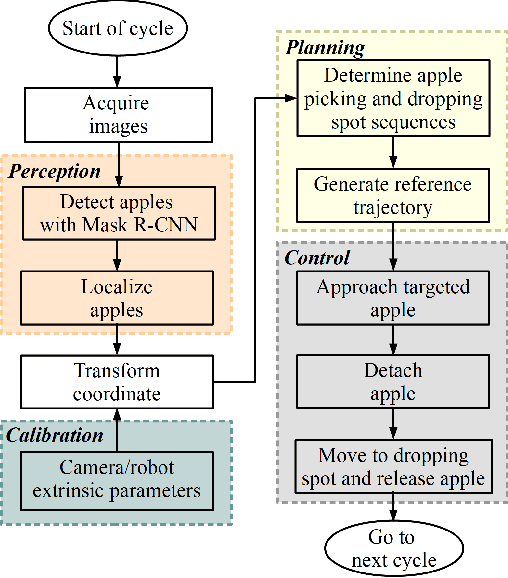

Algorithm Design and Integration for a Robotic Apple Harvesting System

Mar 01, 2022

Abstract:Due to labor shortage and rising labor cost for the apple industry, there is an urgent need for the development of robotic systems to efficiently and autonomously harvest apples. In this paper, we present a system overview and algorithm design of our recently developed robotic apple harvester prototype. Our robotic system is enabled by the close integration of several core modules, including calibration, visual perception, planning, and control. This paper covers the main methods and advancements in robust extrinsic parameter calibration, deep learning-based multi-view fruit detection and localization, unified picking and dropping planning, and dexterous manipulation control. Indoor and field experiments were conducted to evaluate the performance of the developed system, which achieved an average picking rate of 3.6 seconds per apple. This is a significant improvement over other reported apple harvesting robots with a picking rate in the range of 7-10 seconds per apple. The current prototype shows promising performance towards further development of efficient and automated apple harvesting technology. Finally, limitations of the current system and future work are discussed.

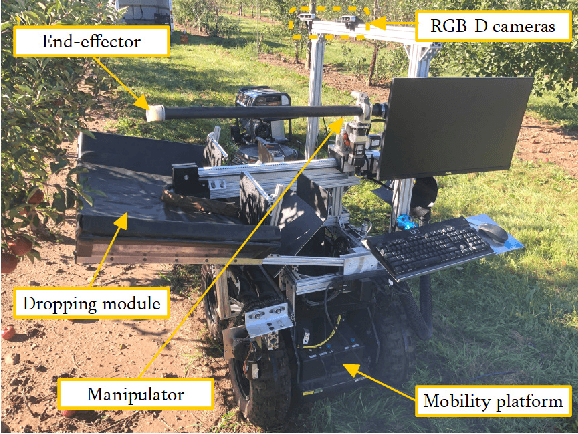

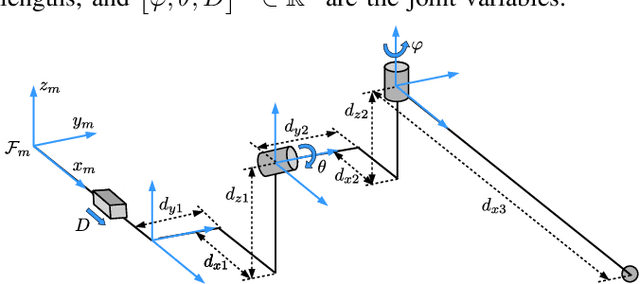

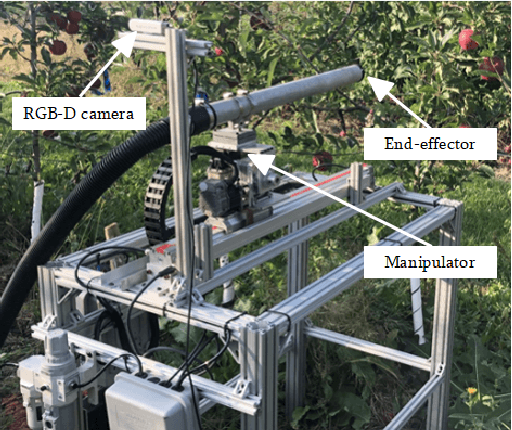

System Design and Control of an Apple Harvesting Robot

Oct 21, 2020

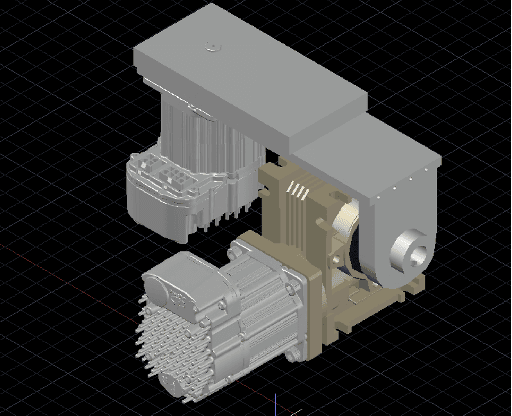

Abstract:There is a growing need for robotic apple harvesting due to decreasing availability and rising cost in labor. Towards the goal of developing a viable robotic system for apple harvesting, this paper presents synergistic mechatronic design and motion control of a robotic apple harvesting prototype, which lays a critical foundation for future advancements. Specifically, we develop a deep learning-based fruit detection and localization system using an RGB-D camera. A three degree-of-freedom manipulator is then designed with a hybrid pneumatic/motor actuation mechanism to achieve fast and dexterous movements. A vacuum-based end-effector is used for apple detaching. These three components are integrated into a robotic apple harvesting prototype with simplicity, compactness, and robustness. Moreover, a nonlinear velocity-based control scheme is developed for the manipulator to achieve accurate and agile motion control. Test experiments are conducted to demonstrate the performance of the developed apple harvesting robot.

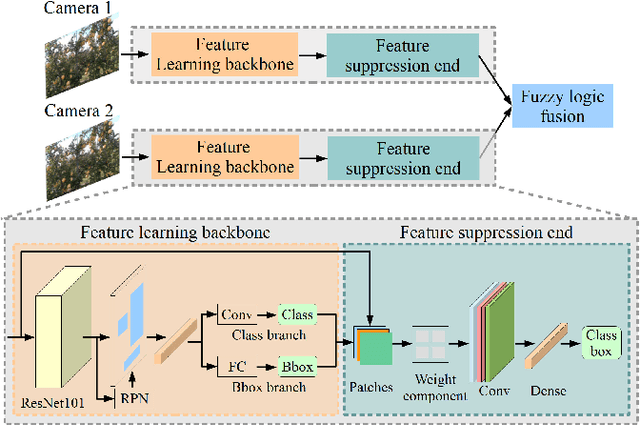

DeepApple: Deep Learning-based Apple Detection using a Suppression Mask R-CNN

Oct 19, 2020

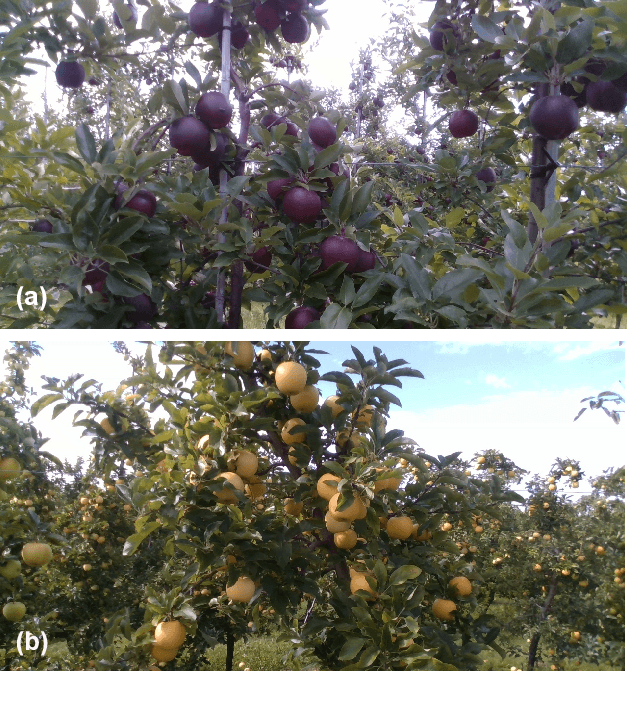

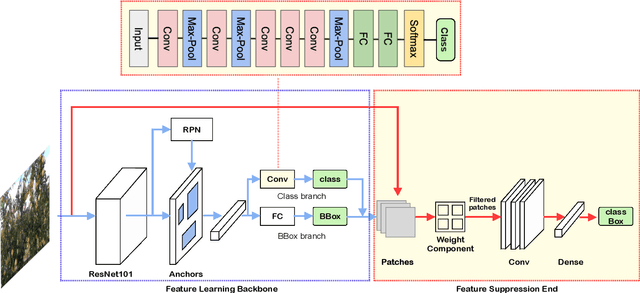

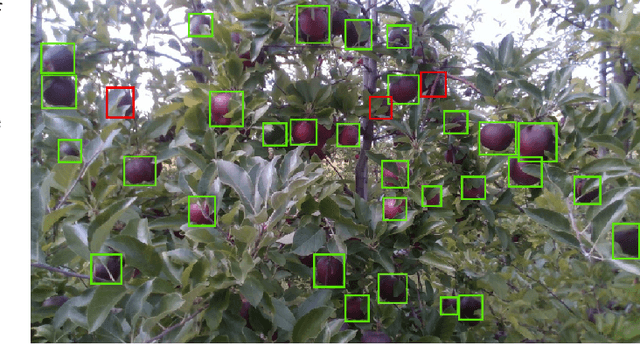

Abstract:Robotic apple harvesting has received much research attention in the past few years due to growing shortage and rising cost in labor. One key enabling technology towards automated harvesting is accurate and robust apple detection, which poses great challenges as a result of the complex orchard environment that involves varying lighting conditions and foliage/branch occlusions. This letter reports on the development of a novel deep learning-based apple detection framework named DeepApple. Specifically, we first collect a comprehensive apple orchard dataset for 'Gala' and 'Blondee' apples, using a color camera, under different lighting conditions (sunny vs. overcast and front lighting vs. back lighting). We then develop a novel suppression Mask R-CNN for apple detection, in which a suppression branch is added to the standard Mask R-CNN to suppress non-apple features generated by the original network. Comprehensive evaluations are performed, which show that the developed suppression Mask R-CNN network outperforms state-of-the-art models with a higher F1-score of 0.905 and a detection time of 0.25 second per frame on a standard desktop computer.
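For readers unfamiliar with the F1-score reported above, here is a minimal sketch of how it is computed from detection counts. The counts in the example are made up for illustration and are not the paper's results; the function name is likewise hypothetical.

```python
def f1_score(true_positives: int, false_positives: int, false_negatives: int) -> float:
    """F1 is the harmonic mean of precision and recall.

    Equivalent closed form: 2*TP / (2*TP + FP + FN).
    """
    denominator = 2 * true_positives + false_positives + false_negatives
    if denominator == 0:
        raise ValueError("F1 is undefined when there are no detections and no ground-truth objects")
    return 2 * true_positives / denominator


if __name__ == "__main__":
    # Illustrative counts only: 90 correct detections, 10 false alarms, 10 missed apples.
    print(f1_score(90, 10, 10))
```

Because F1 penalizes both false alarms and misses, it is a common single-number summary for detectors in cluttered scenes such as orchards.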
