René Schuster

SAILS: Segment Anything with Incrementally Learned Semantics for Task-Invariant and Training-Free Continual Learning

Feb 16, 2026

Abstract:Continual learning remains constrained by the need for repeated retraining, high computational costs, and the persistent challenge of forgetting. These factors significantly limit the applicability of continual learning in real-world settings, as iterative model updates require significant computational resources and inherently exacerbate forgetting. We present SAILS -- Segment Anything with Incrementally Learned Semantics, a training-free framework for Class-Incremental Semantic Segmentation (CISS) that sidesteps these challenges entirely. SAILS leverages foundation models to decouple CISS into two stages: zero-shot region extraction using the Segment Anything Model (SAM), followed by semantic association through prototypes in a fixed feature space. SAILS incorporates selective intra-class clustering, resulting in multiple prototypes per class to better model intra-class variability. Our results demonstrate that, despite requiring no incremental training, SAILS typically surpasses the performance of existing training-based approaches on standard CISS datasets, particularly in long and challenging task sequences where forgetting tends to be most severe. By avoiding parameter updates, SAILS completely eliminates forgetting and maintains consistent, task-invariant performance. Furthermore, SAILS exhibits positive backward transfer, where the introduction of new classes can enhance performance on previous classes.
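The second stage described in the SAILS abstract, semantic association through multiple prototypes per class in a fixed feature space, can be illustrated with a toy nearest-prototype lookup. This is a minimal sketch and not the authors' implementation: the `PrototypeBank` class, the use of cosine similarity, and the two-dimensional feature vectors are all assumptions made for illustration, and region extraction with SAM is omitted entirely.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (assumed metric)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class PrototypeBank:
    """Hypothetical store of several prototype vectors per class."""

    def __init__(self):
        self.prototypes = {}  # class name -> list of prototype vectors

    def add_class(self, name, prototypes):
        # A new class only adds entries; stored prototypes are never modified,
        # so no parameters are updated when the label set grows.
        self.prototypes[name] = [list(p) for p in prototypes]

    def classify(self, feature):
        # Assign the label of the single most similar prototype.
        best_name, best_sim = None, -2.0
        for name, protos in self.prototypes.items():
            for proto in protos:
                sim = cosine(feature, proto)
                if sim > best_sim:
                    best_name, best_sim = name, sim
        return best_name


bank = PrototypeBank()
bank.add_class("car", [[1.0, 0.0], [0.9, 0.3]])  # two prototypes for one class
bank.add_class("tree", [[0.0, 1.0]])
label_before = bank.classify([0.8, 0.2])

bank.add_class("bus", [[-1.0, 0.1]])  # incremental step: a new class arrives
label_after = bank.classify([0.8, 0.2])
```

In this toy setup the region feature `[0.8, 0.2]` is labeled `"car"` both before and after `"bus"` is added, because the earlier prototypes are left untouched.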

Domain-Incremental Semantic Segmentation for Autonomous Driving under Adverse Driving Conditions

Jan 09, 2025

Abstract:Semantic segmentation for autonomous driving is an even more challenging task when faced with adverse driving conditions. Standard models trained on data recorded under ideal conditions show degraded performance in unfavorable weather or illumination conditions. Fine-tuning on the new task or condition would overwrite the previously learned information, resulting in catastrophic forgetting. Adapting to the new conditions through traditional domain adaptation methods improves the performance on the target domain at the expense of the source domain. Addressing these issues, we propose an architecture-based domain-incremental learning approach called Progressive Semantic Segmentation (PSS). PSS is a task-agnostic, dynamically growing collection of domain-specific segmentation models. The task of inferring the domain and subsequently selecting the appropriate module for segmentation is carried out using a collection of convolutional autoencoders. We extensively evaluate our proposed approach using several datasets at varying levels of granularity in the categorization of adverse driving conditions. Furthermore, we demonstrate the generalization of the proposed approach to similar and unseen domains.
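The domain-inference step in the PSS abstract can be pictured as routing each input to one of several domain-specific modules. The abstract does not state the selection rule; the sketch below assumes the common choice of picking the autoencoder with the lowest reconstruction error. The function names, the toy stand-in "autoencoders", and the three-value inputs are hypothetical and only illustrate the routing idea.

```python
def mse(a, b):
    """Mean squared error between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def make_toy_autoencoder(center):
    """Hypothetical stand-in for a trained convolutional autoencoder.

    It pulls the input halfway toward a domain "center", so inputs that
    resemble the domain are reconstructed with a small error.
    """
    def reconstruct(x):
        return [c + 0.5 * (v - c) for v, c in zip(x, center)]
    return reconstruct


def select_domain(x, autoencoders):
    # Assumed rule: the domain whose autoencoder reconstructs x best wins.
    errors = {name: mse(x, ae(x)) for name, ae in autoencoders.items()}
    return min(errors, key=errors.get)


# One autoencoder per known domain; the collection can grow over time.
autoencoders = {
    "clear": make_toy_autoencoder([0.8, 0.8, 0.8]),
    "night": make_toy_autoencoder([0.1, 0.1, 0.1]),
}

dark_input = [0.2, 0.1, 0.15]
bright_input = [0.7, 0.9, 0.8]
chosen_dark = select_domain(dark_input, autoencoders)
chosen_bright = select_domain(bright_input, autoencoders)

# Adding a domain only adds a module; existing ones are left unchanged.
autoencoders["fog"] = make_toy_autoencoder([0.5, 0.5, 0.5])
chosen_grey = select_domain([0.5, 0.45, 0.55], autoencoders)
```

Under this assumption, the selected domain name would then index the matching segmentation model, and earlier modules keep their behavior because nothing in them is retrained.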

Modality-Incremental Learning with Disjoint Relevance Mapping Networks for Image-based Semantic Segmentation

Nov 26, 2024

Abstract:In autonomous driving, environment perception has significantly advanced with the utilization of deep learning techniques for diverse sensors such as cameras, depth sensors, or infrared sensors. The diversity in the sensor stack increases the safety and contributes to robustness against adverse weather and lighting conditions. However, the variance in data acquired from different sensors poses challenges. In the context of continual learning (CL), incremental learning is especially challenging for considerably large domain shifts, e.g. different sensor modalities. This amplifies the problem of catastrophic forgetting. To address this issue, we formulate the concept of modality-incremental learning and examine its necessity, by contrasting it with existing incremental learning paradigms. We propose the use of a modified Relevance Mapping Network (RMN) to incrementally learn new modalities while preserving performance on previously learned modalities, in which relevance maps are disjoint. Experimental results demonstrate that the prevention of shared connections in this approach helps alleviate the problem of forgetting within the constraints of a strict continual learning framework.

AnonyNoise: Anonymizing Event Data with Smart Noise to Outsmart Re-Identification and Preserve Privacy

Nov 25, 2024

Abstract:The increasing capabilities of deep neural networks for re-identification, combined with the rise in public surveillance in recent years, pose a substantial threat to individual privacy. Event cameras were initially considered a promising solution since their output is sparse and therefore difficult for humans to interpret. However, recent advances in deep learning prove that neural networks are able to reconstruct high-quality grayscale images and re-identify individuals using data from event cameras. In our paper, we contribute a crucial ethical discussion on data privacy and present the first event anonymization pipeline to prevent re-identification not only by humans but also by neural networks. Our method effectively introduces learnable data-dependent noise to cover personally identifiable information in raw event data, reducing attackers' re-identification capabilities by up to 60%, while maintaining substantial information for performing downstream tasks. Moreover, our anonymization generalizes well on unseen data and is robust against image reconstruction and inversion attacks. Code: https://github.com/dfki-av/AnonyNoise

ShapeAug++: More Realistic Shape Augmentation for Event Data

Sep 17, 2024

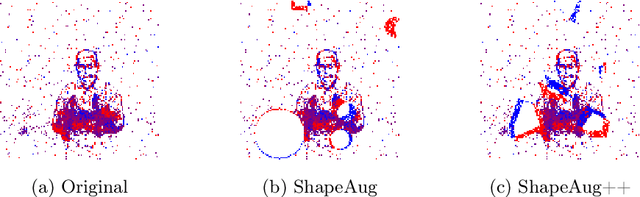

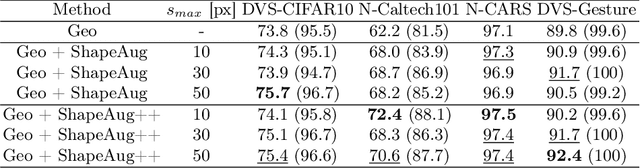

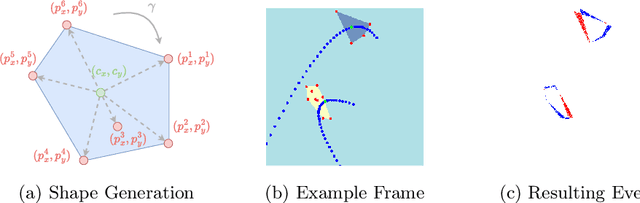

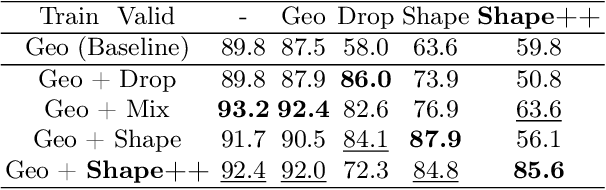

Abstract:The novel Dynamic Vision Sensors (DVSs) have recently gained a great deal of attention, as they are superior to RGB cameras in terms of latency, dynamic range and energy consumption. This is of particular interest for autonomous applications since event cameras are able to alleviate motion blur and allow for night vision. One challenge in real-world autonomous settings is occlusion, where foreground objects hinder the view of traffic participants in the background. The ShapeAug method addresses this problem by using simulated events resulting from objects moving on linear paths for event data augmentation. However, the shapes and movements lack complexity, making the simulation fail to resemble the behavior of objects in the real world. Therefore, in this paper, we propose ShapeAug++, an extended version of ShapeAug which involves randomly generated polygons as well as curved movements. We show the superiority of our method on multiple DVS classification datasets, improving the top-1 accuracy by up to 3.7% compared to ShapeAug.

CLEO: Continual Learning of Evolving Ontologies

Jul 11, 2024

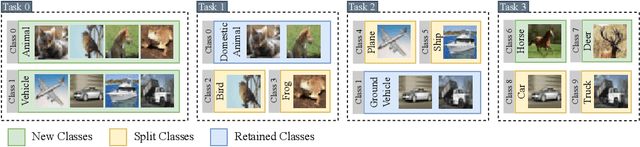

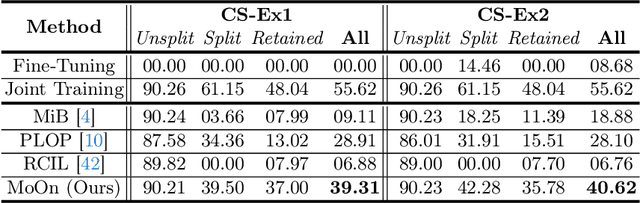

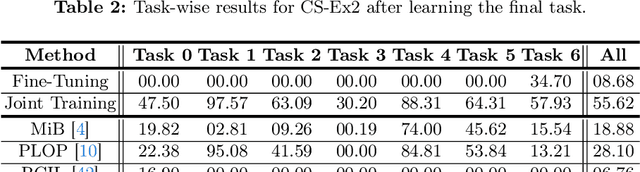

Abstract:Continual learning (CL) addresses the problem of catastrophic forgetting in neural networks, which occurs when a trained model overwrites previously learned information when presented with a new task. CL aims to instill the lifelong learning characteristic of humans in intelligent systems, making them capable of learning continuously while retaining what was already learned. Current CL problems involve either learning new domains (domain-incremental) or new and previously unseen classes (class-incremental). However, general learning processes are not limited to learning new information but also include the refinement of existing information. In this paper, we define CLEO - Continual Learning of Evolving Ontologies, as a new incremental learning setting under CL to tackle evolving classes. CLEO is motivated by the need for intelligent systems to adapt to real-world ontologies that change over time, such as those in autonomous driving. We use Cityscapes, PASCAL VOC, and Mapillary Vistas to define the task settings and demonstrate the applicability of CLEO. We highlight the shortcomings of existing CIL methods in adapting to CLEO and propose a baseline solution, called Modelling Ontologies (MoOn). CLEO is a promising new approach to CL that addresses the challenge of evolving ontologies in real-world applications. MoOn surpasses previous CL approaches in the context of CLEO.

EgoFlowNet: Non-Rigid Scene Flow from Point Clouds with Ego-Motion Support

Jul 03, 2024

Abstract:Recent weakly-supervised methods for scene flow estimation from LiDAR point clouds are limited to explicit reasoning at the object level. These methods perform multiple iterative optimizations for each rigid object, which makes them sensitive to the robustness of the clustering. In this paper, we propose our EgoFlowNet - a point-level scene flow estimation network trained in a weakly-supervised manner and without object-based abstraction. Our approach predicts a binary segmentation mask that implicitly drives two parallel branches for ego-motion and scene flow. Unlike previous methods, we provide both branches with all input points and carefully integrate the binary mask into the feature extraction and losses. We also use a shared cost volume with local refinement that is updated at multiple scales without explicit clustering or rigidity assumptions. On realistic KITTI scenes, we show that our EgoFlowNet performs better than state-of-the-art methods in the presence of ground surface points.

ShapeAug: Occlusion Augmentation for Event Camera Data

Jan 04, 2024

Abstract:Recently, Dynamic Vision Sensors (DVSs) sparked a lot of interest due to their inherent advantages over conventional RGB cameras. These advantages include low latency, a high dynamic range and low energy consumption. Nevertheless, the processing of DVS data using Deep Learning (DL) methods remains a challenge, particularly since the availability of event training data is still limited. This leads to a need for event data augmentation techniques in order to improve accuracy as well as to avoid over-fitting on the training data. Another challenge, especially in real-world automotive applications, is occlusion, meaning one object hinders the view of the object behind it. In this paper, we present a novel event data augmentation approach, which addresses this problem by introducing synthetic events for randomly moving objects in a scene. We test our method on multiple DVS classification datasets, resulting in a relative improvement of up to 6.5% in top-1 accuracy. Moreover, we apply our augmentation technique to the real-world Gen1 Automotive Event Dataset for object detection, where we especially improve the detection of pedestrians by up to 5%.
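The idea of introducing synthetic events for a moving object can be illustrated by rendering a shape at consecutive positions and emitting an event wherever a pixel changes. This is a rough sketch rather than the ShapeAug procedure itself: the square shape, the linear path, the `(t, x, y, polarity)` event layout, and the convention that the object is brighter than the background are all assumptions made for illustration, and merging the result with real recorded events is not shown.

```python
def render_square(width, height, x0, y0, size):
    """Binary frame with a filled square whose top-left corner is (x0, y0)."""
    return [
        [1 if x0 <= x < x0 + size and y0 <= y < y0 + size else 0
         for x in range(width)]
        for y in range(height)
    ]


def simulate_events(width, height, start, velocity, size, steps):
    """Emit (t, x, y, polarity) tuples for a square moving on a linear path.

    Polarity is +1 where a pixel becomes covered by the (assumed brighter)
    object and -1 where the object uncovers a pixel again.
    """
    events = []
    prev = render_square(width, height, start[0], start[1], size)
    for t in range(1, steps + 1):
        x0 = start[0] + velocity[0] * t
        y0 = start[1] + velocity[1] * t
        cur = render_square(width, height, x0, y0, size)
        for y in range(height):
            for x in range(width):
                diff = cur[y][x] - prev[y][x]
                if diff != 0:
                    events.append((t, x, y, diff))
        prev = cur
    return events


# A 2x2 square moving one pixel to the right per step on an 8x8 sensor.
events = simulate_events(8, 8, start=(0, 2), velocity=(1, 0), size=2, steps=3)
first_step = {e for e in events if e[0] == 1}
```

Only the leading and trailing edges of the square produce events, which mirrors how an event camera responds to brightness changes rather than to static content.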

Learned Fusion: 3D Object Detection using Calibration-Free Transformer Feature Fusion

Dec 14, 2023

Abstract:The state of the art in 3D object detection using sensor fusion heavily relies on calibration quality, which is difficult to maintain in large-scale deployment outside a lab environment. We present the first calibration-free approach for 3D object detection, thus eliminating the need for complex and costly calibration procedures. Our approach uses transformers to map the features between multiple views of different sensors at multiple abstraction levels. In an extensive evaluation for object detection, we not only show that our approach outperforms single-modal setups by 14.1% in BEV mAP, but also that the transformer indeed learns the mapping. By showing that calibration is not necessary for sensor fusion, we hope to motivate other researchers to follow the direction of calibration-free fusion. Additionally, the resulting approaches have substantial resilience against rotation and translation changes.

JPPF: Multi-task Fusion for Consistent Panoptic-Part Segmentation

Nov 30, 2023

Abstract:Part-aware panoptic segmentation is a problem of computer vision that aims to provide a semantic understanding of the scene at multiple levels of granularity. More precisely, semantic areas, object instances, and semantic parts are predicted simultaneously. In this paper, we present our Joint Panoptic Part Fusion (JPPF) that combines the three individual segmentations effectively to obtain a panoptic-part segmentation. Two aspects are of utmost importance for this: First, a unified model for the three problems is desired that allows for mutually improved and consistent representation learning. Second, the combination must be balanced so that it gives equal importance to all individual results during fusion. Our proposed JPPF is parameter-free and dynamically balances its input. The method is evaluated and compared on the Cityscapes Panoptic Parts (CPP) and Pascal Panoptic Parts (PPP) datasets in terms of PartPQ and Part-Whole Quality (PWQ). In extensive experiments, we verify the importance of our fair fusion, highlight its most significant impact for areas that can be further segmented into parts, and demonstrate the generalization capabilities of our design without fine-tuning on 5 additional datasets.
