Ramy Battrawy

EgoFlowNet: Non-Rigid Scene Flow from Point Clouds with Ego-Motion Support

Jul 03, 2024

Abstract: Recent weakly-supervised methods for scene flow estimation from LiDAR point clouds are limited to explicit reasoning at the object level. These methods perform multiple iterative optimizations for each rigid object, which makes them sensitive to the robustness of the clustering. In this paper, we propose EgoFlowNet, a point-level scene flow estimation network trained in a weakly-supervised manner and without object-based abstraction. Our approach predicts a binary segmentation mask that implicitly drives two parallel branches for ego-motion and scene flow. Unlike previous methods, we provide both branches with all input points and carefully integrate the binary mask into the feature extraction and losses. We also use a shared cost volume with local refinement that is updated at multiple scales without explicit clustering or rigidity assumptions. On realistic KITTI scenes, we show that EgoFlowNet performs better than state-of-the-art methods in the presence of ground surface points.

RMS-FlowNet: Efficient and Robust Multi-Scale Scene Flow Estimation for Large-Scale Point Clouds

Apr 01, 2022

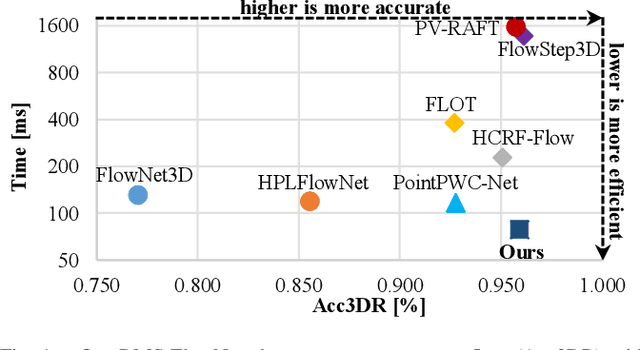

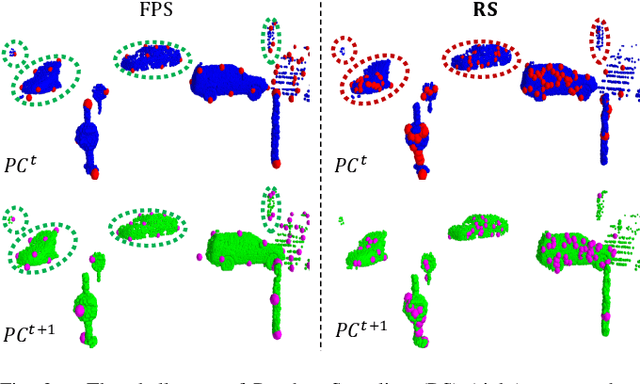

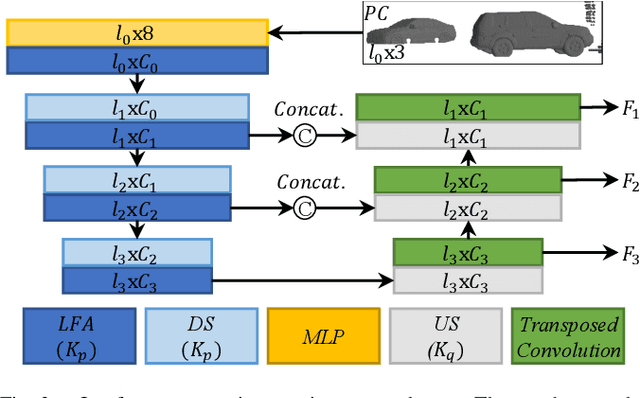

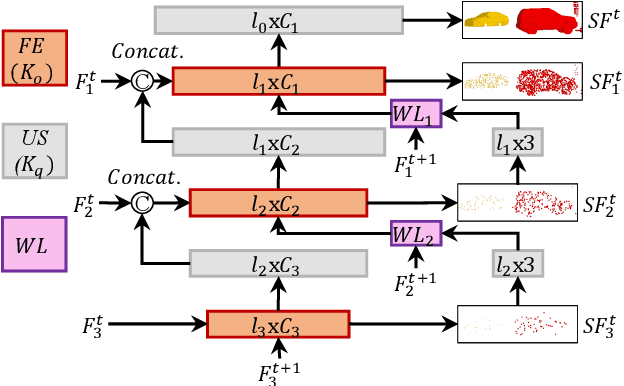

Abstract: The proposed RMS-FlowNet is a novel end-to-end learning-based architecture for accurate and efficient scene flow estimation that can operate on point clouds of high density. For hierarchical scene flow estimation, existing methods depend on either expensive Farthest-Point-Sampling (FPS) or structure-based scaling, both of which limit their ability to handle a large number of points. Unlike these methods, we base our fully supervised architecture on Random-Sampling (RS) for multi-scale scene flow prediction. To this end, we propose a novel flow embedding design that can predict more robust scene flow in conjunction with RS. While exhibiting high accuracy, RMS-FlowNet provides faster prediction than state-of-the-art methods and works efficiently on consecutive dense point clouds of more than 250K points at once. Our comprehensive experiments verify the accuracy of RMS-FlowNet on the established FlyingThings3D data set with different point cloud densities and validate our design choices. Additionally, we show that our model generalizes competitively to the real-world scenes of the KITTI data set without fine-tuning.
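To make the cost difference between the two sampling strategies named in this abstract concrete, here is a minimal pure-Python sketch. It is not the RMS-FlowNet implementation; the function names `random_sampling` and `farthest_point_sampling` are hypothetical, and points are assumed to be plain coordinate tuples. Random sampling does no distance computation at all, whereas greedy FPS updates a distance for every point at each of the k steps.

```python
import math
import random


def random_sampling(points, k, seed=0):
    """Return indices of k distinct points chosen uniformly at random.

    No distances are computed, so the cost does not grow with n * k.
    """
    rng = random.Random(seed)
    return rng.sample(range(len(points)), k)


def farthest_point_sampling(points, k, start=0):
    """Greedy FPS: repeatedly pick the point farthest from the chosen set.

    Every step scans all n points, so the total cost is O(n * k).
    """
    n = len(points)
    # min_dist[i] = distance from point i to its nearest already-chosen point
    min_dist = [math.inf] * n
    chosen = [start]
    for _ in range(k - 1):
        last = points[chosen[-1]]
        for i, p in enumerate(points):
            d = math.dist(p, last)
            if d < min_dist[i]:
                min_dist[i] = d
        # The next sample is the point with the largest nearest-chosen distance.
        chosen.append(max(range(n), key=min_dist.__getitem__))
    return chosen


if __name__ == "__main__":
    cloud = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
    print(farthest_point_sampling(cloud, 3))
    print(random_sampling(cloud, 2))
```

FPS gives more even spatial coverage, which is why hierarchical networks often use it. The abstract's point is that this coverage becomes expensive on dense clouds, so a flow embedding that tolerates the irregularity of random sampling is needed instead.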

DeepLiDARFlow: A Deep Learning Architecture For Scene Flow Estimation Using Monocular Camera and Sparse LiDAR

Aug 18, 2020

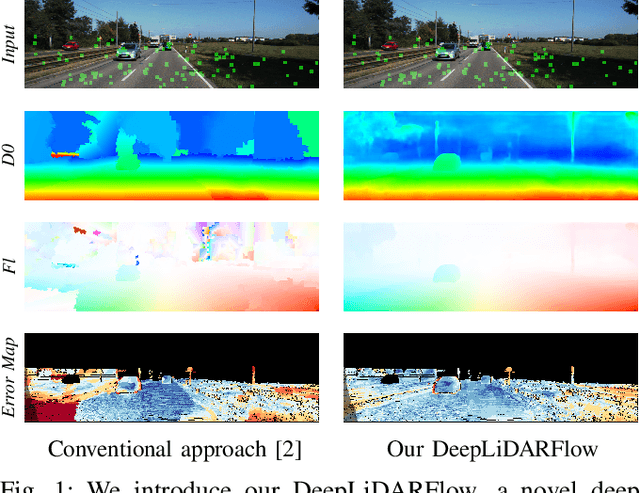

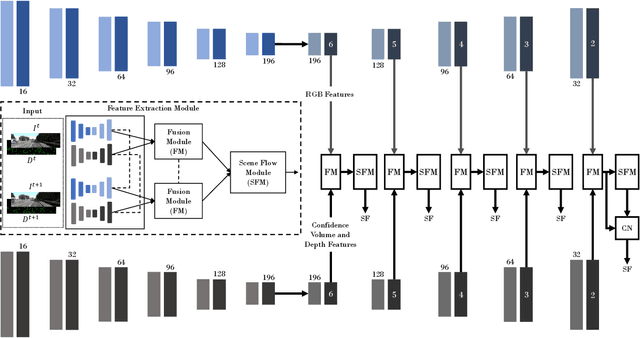

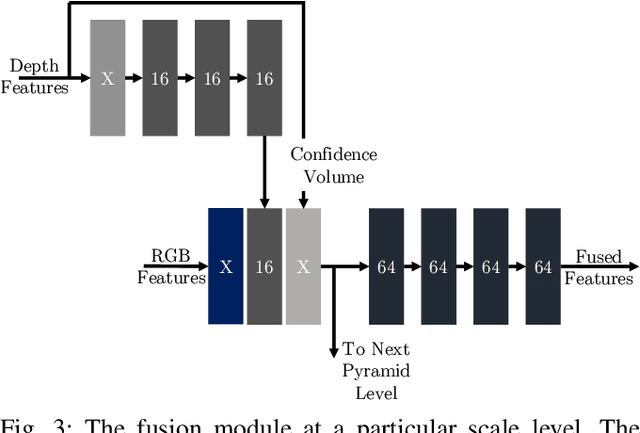

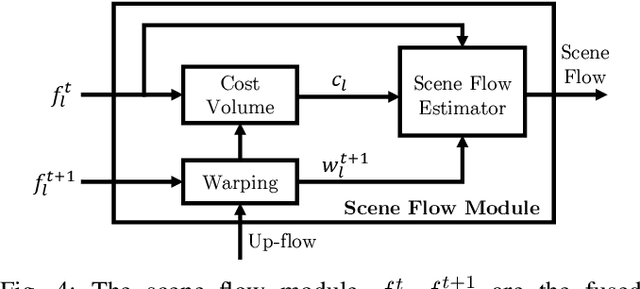

Abstract: Scene flow is the dense 3D reconstruction of motion and geometry of a scene. Most state-of-the-art methods use a pair of stereo images as input for full scene reconstruction. These methods depend heavily on the quality of the RGB images and perform poorly in regions with reflective objects, shadows, ill-conditioned lighting, and so on. LiDAR measurements are much less sensitive to these conditions, but LiDAR features are in general unsuitable for matching tasks due to their sparse nature. Hence, using both LiDAR and RGB can potentially overcome the individual disadvantages of each sensor by mutual improvement and yield robust features that improve the matching process. In this paper, we present DeepLiDARFlow, a novel deep learning architecture that fuses high-level RGB and LiDAR features at multiple scales in a monocular setup to predict dense scene flow. Its performance is much better in the critical regions where image-only and LiDAR-only methods are inaccurate. We verify DeepLiDARFlow on the established data sets KITTI and FlyingThings3D and show strong robustness compared to several state-of-the-art methods that use other input modalities. The code of our paper is available at https://github.com/dfki-av/DeepLiDARFlow.

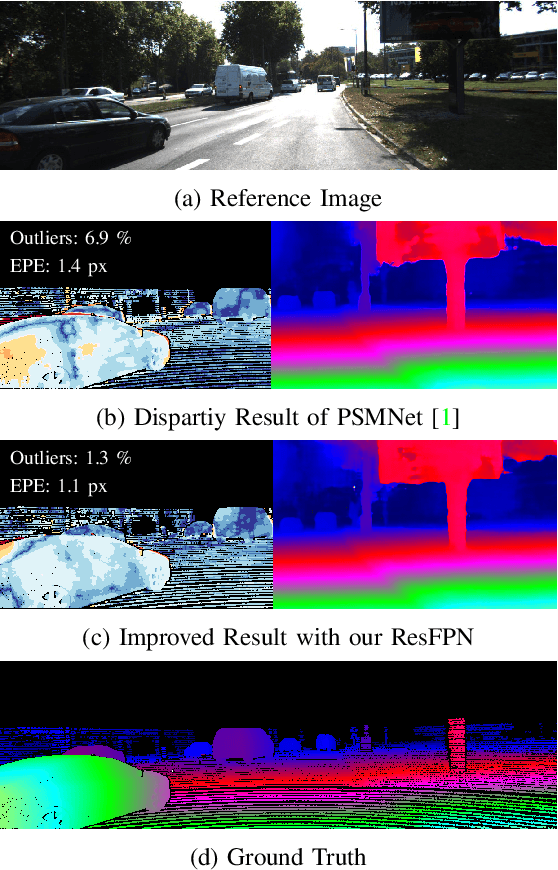

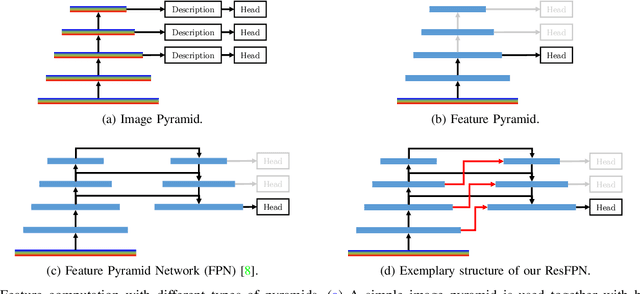

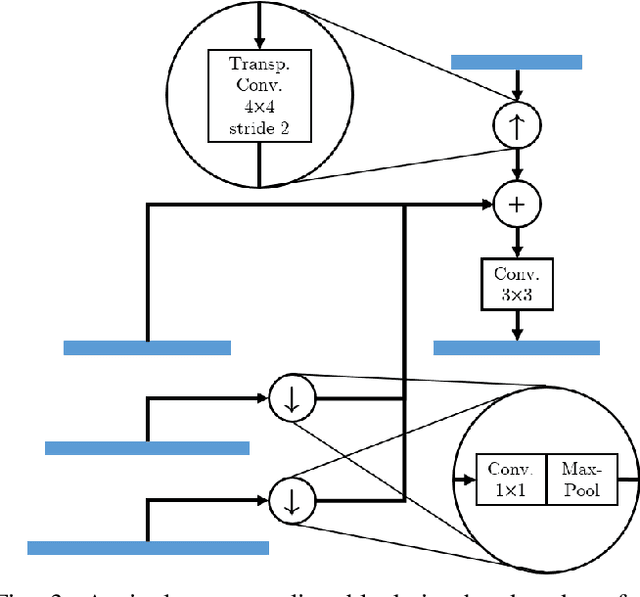

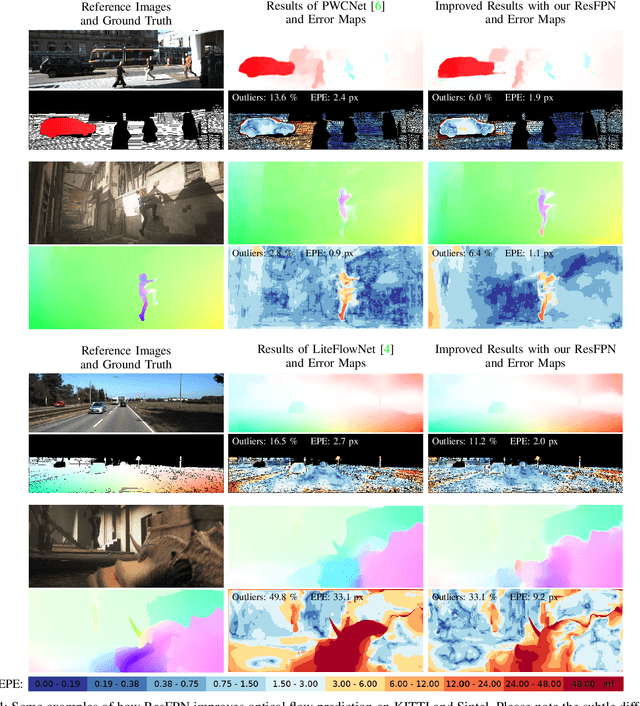

ResFPN: Residual Skip Connections in Multi-Resolution Feature Pyramid Networks for Accurate Dense Pixel Matching

Jun 22, 2020

Abstract: Dense pixel matching is required for many computer vision algorithms such as disparity, optical flow, or scene flow estimation. Feature Pyramid Networks (FPN) have proven to be a suitable feature extractor for CNN-based dense matching tasks. FPN generates well-localized and semantically strong features at multiple scales. However, the generic FPN does not reach its full potential because of its reasonable but limited localization accuracy. Thus, we present ResFPN -- a multi-resolution feature pyramid network with multiple residual skip connections, where at any scale, we leverage the information from higher-resolution maps for stronger and better-localized features. In our ablation study, we demonstrate the effectiveness of our novel architecture with clearly higher accuracy than FPN. In addition, we verify the superior accuracy of ResFPN in many different pixel matching applications on established datasets like KITTI, Sintel, and FlyingThings3D.

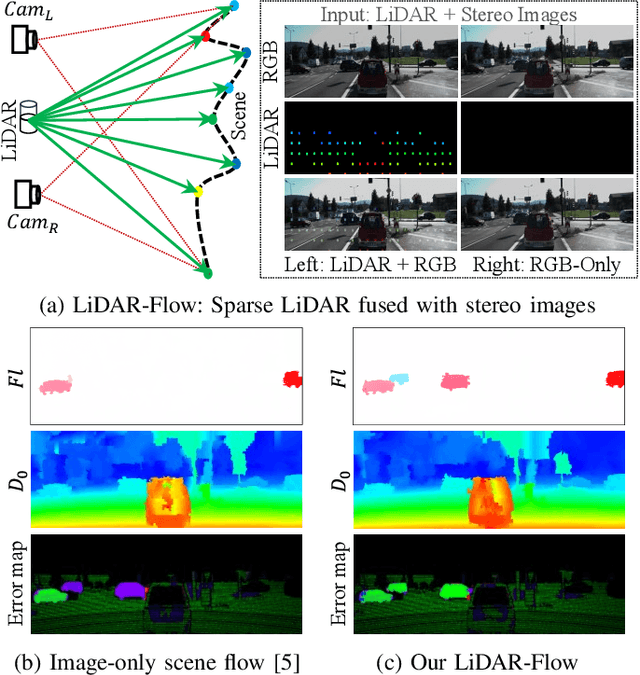

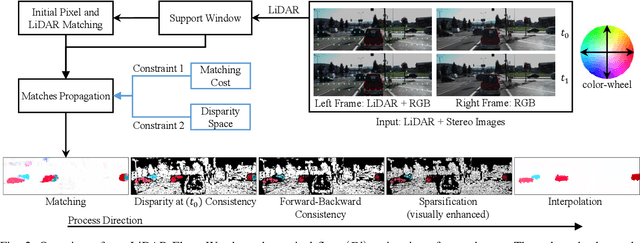

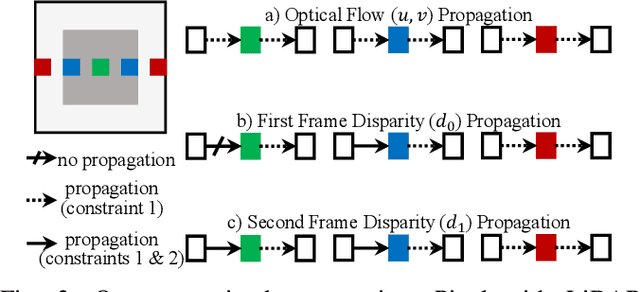

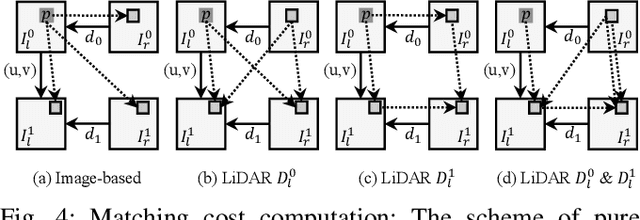

LiDAR-Flow: Dense Scene Flow Estimation from Sparse LiDAR and Stereo Images

Oct 31, 2019

Abstract: We propose a new approach called LiDAR-Flow to robustly estimate dense scene flow by fusing sparse LiDAR measurements with stereo images. We take advantage of the high accuracy of LiDAR to resolve the lack of information in some regions of stereo images caused by textureless objects, shadows, ill-conditioned lighting, and more. Additionally, this fusion can overcome the difficulty of matching unstructured 3D points between LiDAR-only scans. Our LiDAR-Flow approach consists of three main steps, each of which exploits LiDAR measurements. First, we build strong seeds from LiDAR to enhance the robustness of matches between stereo images. The imagery part seeks the motion matches and increases the density of the scene flow estimate. Then, a consistency check employs LiDAR seeds to remove possible mismatches. Finally, LiDAR measurements constrain the edge-preserving interpolation method to fill the remaining gaps. In our evaluation, we investigate the individual processing steps of our LiDAR-Flow approach and demonstrate its superior performance compared to an image-only approach.
