Georg Schneider

CLEO: Continual Learning of Evolving Ontologies

Jul 11, 2024

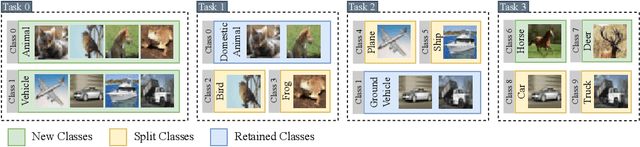

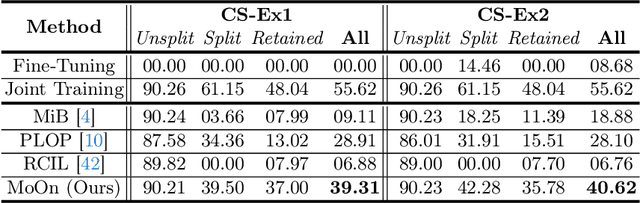

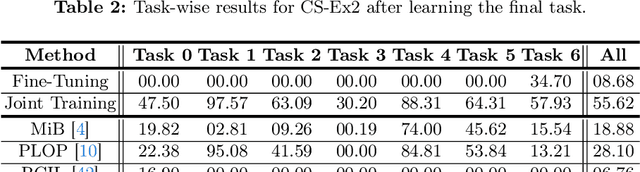

Abstract: Continual learning (CL) addresses the problem of catastrophic forgetting in neural networks, which occurs when a trained model overwrites previously learned information when presented with a new task. CL aims to instill the lifelong learning characteristic of humans in intelligent systems, making them capable of learning continuously while retaining what was already learned. Current CL problems involve either learning new domains (domain-incremental) or new and previously unseen classes (class-incremental). However, general learning processes are not limited to learning new information; they also involve refining existing information. In this paper, we define CLEO - Continual Learning of Evolving Ontologies, as a new incremental learning setting under CL to tackle evolving classes. CLEO is motivated by the need for intelligent systems to adapt to real-world ontologies that change over time, such as those in autonomous driving. We use Cityscapes, PASCAL VOC, and Mapillary Vistas to define the task settings and demonstrate the applicability of CLEO. We highlight the shortcomings of existing class-incremental learning (CIL) methods in adapting to CLEO and propose a baseline solution, called Modelling Ontologies (MoOn). CLEO is a promising new approach to CL that addresses the challenge of evolving ontologies in real-world applications. MoOn surpasses previous CL approaches in the context of CLEO.
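To make the idea of an evolving ontology concrete, the following is a minimal sketch of a single refinement step in which coarse classes are split into finer ones. The class names, the `REFINEMENTS` table, and both helper functions are hypothetical illustrations; they are not taken from the paper and do not represent MoOn.

```python
from typing import Dict, List

# Hypothetical refinement step: each coarse class from the earlier ontology
# is split into finer classes in the later ontology.
REFINEMENTS: Dict[str, List[str]] = {
    "vehicle": ["car", "truck", "bus"],
    "person": ["pedestrian", "rider"],
}


def build_parent_map(refinements: Dict[str, List[str]]) -> Dict[str, str]:
    """Invert the refinement table so each fine class points to its coarse parent."""
    parent_of: Dict[str, str] = {}
    for parent, children in refinements.items():
        for child in children:
            parent_of[child] = parent
    return parent_of


def to_old_ontology(label: str, parent_of: Dict[str, str]) -> str:
    """Map a label from the refined ontology back to the earlier one.

    Classes that were never refined (e.g. "road") map to themselves.
    """
    return parent_of.get(label, label)


PARENT_OF = build_parent_map(REFINEMENTS)
new_predictions = ["car", "rider", "road", "bus"]
old_view = [to_old_ontology(p, PARENT_OF) for p in new_predictions]
print(old_view)
```

Mapping refined predictions back to their coarse parents is one simple way to check that a model trained on the later ontology still agrees with the earlier one.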

Towards Audit Requirements for AI-based Systems in Mobility Applications

Feb 27, 2023

Abstract: Various mobility applications, such as advanced driver assistance systems, increasingly utilize artificial intelligence (AI) based functionalities. Typically, deep neural networks (DNNs) are used, as these provide the best performance on the challenging perception, prediction or planning tasks that occur in real driving environments. However, current regulations like UNECE R 155 or ISO 26262 do not consider AI-related aspects and are only applied to traditional algorithm-based systems. The absence of AI-specific standards or norms hinders practical application and can harm user trust. Hence, it is important to extend existing standardization for security and safety to consider AI-specific challenges and requirements. To take a step towards suitable regulation, we propose 50 technical requirements or best practices that extend existing regulations and address the concrete needs of DNN-based systems. We show the applicability, usefulness and meaningfulness of the proposed requirements by performing an exemplary audit of a DNN-based traffic sign recognition system using three of the proposed requirements.

Physical Adversarial Attacks on Deep Neural Networks for Traffic Sign Recognition: A Feasibility Study

Feb 27, 2023

Abstract: Deep Neural Networks (DNNs) are increasingly applied in the real world in safety-critical applications like advanced driver assistance systems. One example of such a use case is traffic sign recognition. At the same time, it is known that current DNNs can be fooled by adversarial attacks, which raises safety concerns if those attacks can be applied under realistic conditions. In this work, we apply different black-box attack methods to generate perturbations that are applied in the physical environment and can be used to fool systems under different environmental conditions. To the best of our knowledge, we are the first to combine a general framework for physical attacks with different black-box attack methods and study the impact of the different methods on the success rate of the attack under the same setting. We show that reliable physical adversarial attacks can be performed with different methods and that it is also possible to reduce the perceptibility of the resulting perturbations. The findings highlight the need for viable defenses of a DNN even in the black-box case, but at the same time form the basis for securing a DNN with methods like adversarial training, which utilizes adversarial attacks to augment the original training data.

Online Black-Box Confidence Estimation of Deep Neural Networks

Feb 27, 2023

Abstract: Autonomous driving (AD) and advanced driver assistance systems (ADAS) increasingly utilize deep neural networks (DNNs) for improved perception or planning. Nevertheless, DNNs are quite brittle when the data distribution during inference deviates from the data distribution during training. This represents a challenge when deploying in partly unknown environments, as in the case of ADAS. At the same time, the standard confidence of DNNs remains high even if the classification reliability decreases. This is problematic since downstream motion control algorithms treat the apparently confident prediction as reliable even though it might be considerably wrong. To mitigate this problem, real-time capable confidence estimation is required that better aligns with the actual reliability of the DNN classification. Additionally, there is a need for black-box confidence estimation to enable the homogeneous inclusion of externally developed components into an entire system. In this work, we explore this use case and introduce the neighborhood confidence (NHC), which estimates the confidence of an arbitrary DNN for classification. The metric can be used for black-box systems since only the top-1 class output is required; it does not need access to the gradients, the training dataset or a hold-out validation dataset. Evaluation on different data distributions, including small in-domain distribution shifts, out-of-domain data or adversarial attacks, shows that the NHC performs better than or on par with a comparable method for online white-box confidence estimation in low data regimes, which is required for real-time capable AD/ADAS.
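The abstract does not spell out how the NHC is computed, so the following is only a rough sketch of the general idea of a black-box confidence score that needs nothing but top-1 outputs. It assumes that confidence is approximated by how often randomly perturbed copies of an input receive the same top-1 label; the function `neighborhood_agreement`, its parameters, and the `toy_classifier` stand-in are all hypothetical and should not be read as the paper's definition.

```python
import random
from typing import Callable, Sequence


def neighborhood_agreement(
    predict_top1: Callable[[Sequence[float]], int],
    x: Sequence[float],
    num_neighbors: int = 32,
    noise_scale: float = 0.05,
    seed: int = 0,
) -> float:
    """Fraction of perturbed neighbors whose top-1 label matches that of x.

    Only the top-1 class of the model is queried; no gradients, logits,
    or training data are used (assumed setup, not the paper's exact metric).
    """
    rng = random.Random(seed)
    reference = predict_top1(x)
    agree = 0
    for _ in range(num_neighbors):
        # Sample a neighbor by adding Gaussian noise to every input feature.
        neighbor = [v + rng.gauss(0.0, noise_scale) for v in x]
        if predict_top1(neighbor) == reference:
            agree += 1
    return agree / num_neighbors


def toy_classifier(x: Sequence[float]) -> int:
    """Hypothetical black-box model: class 1 if the features sum to a positive value."""
    return int(sum(x) > 0.0)


# An input far from the decision boundary versus one lying directly on it.
conf_far = neighborhood_agreement(toy_classifier, [1.0, 1.0])
conf_near = neighborhood_agreement(toy_classifier, [0.0, 0.0])
print(conf_far, conf_near)
```

In this toy setting, the input far from the decision boundary keeps its label under every perturbation, while the input on the boundary flips for a share of its neighbors and therefore scores lower.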

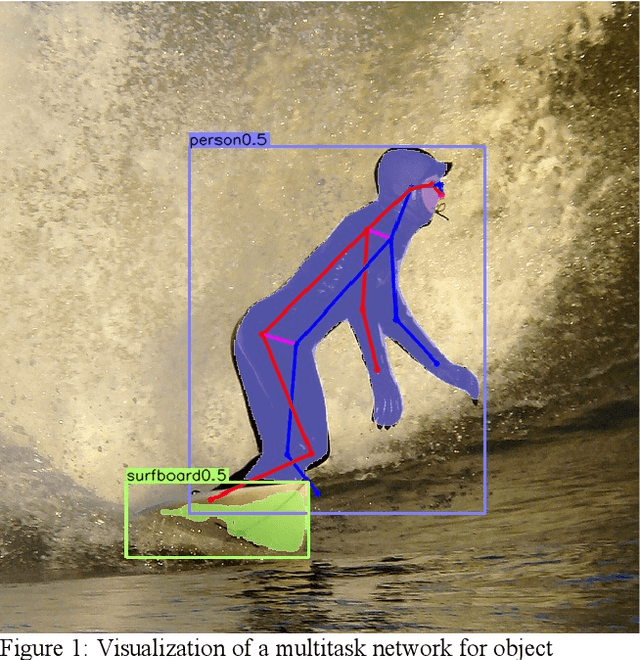

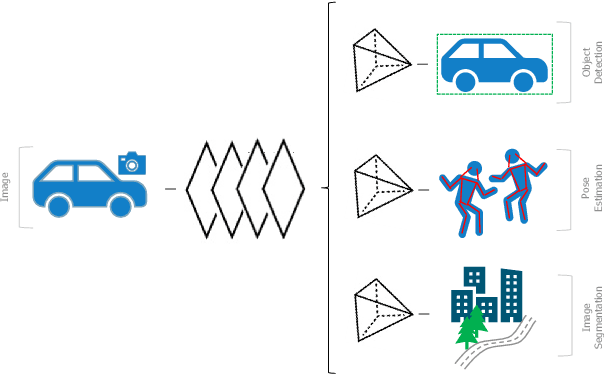

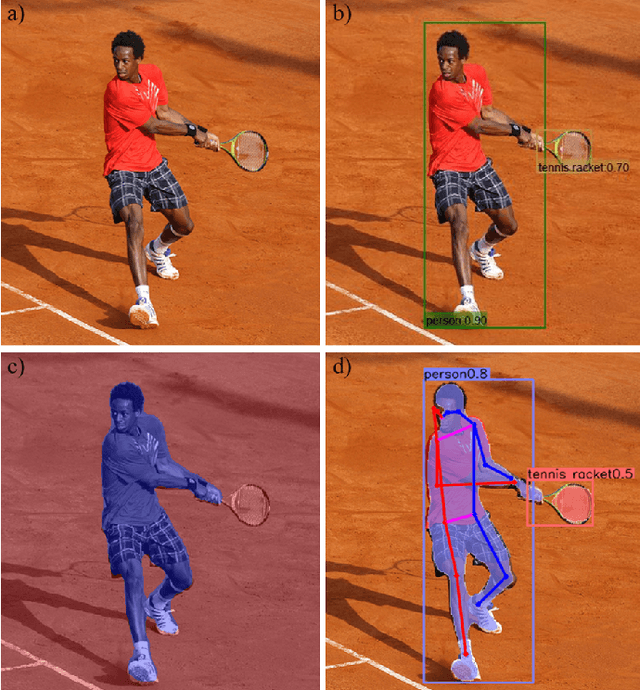

MultiTask-CenterNet (MCN): Efficient and Diverse Multitask Learning using an Anchor Free Approach

Sep 10, 2021

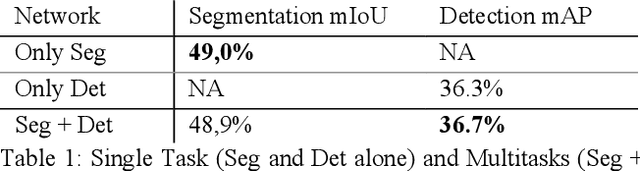

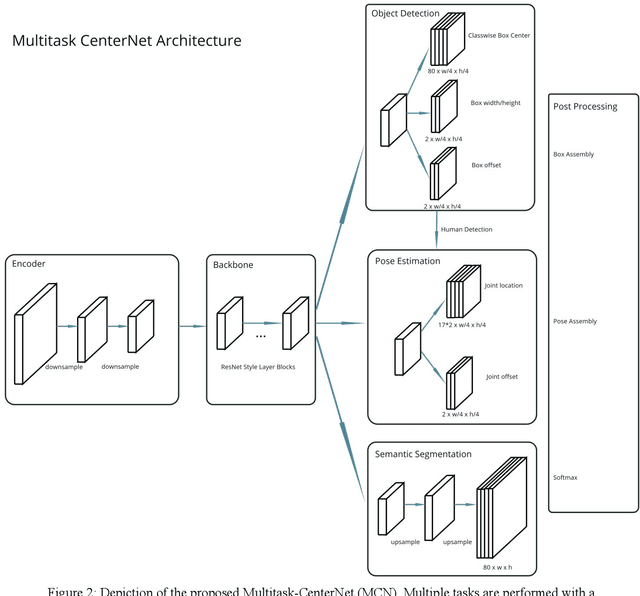

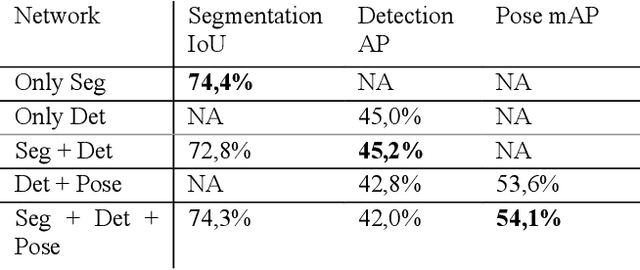

Abstract: Multitask learning is a common approach in machine learning that allows multiple objectives to be trained with a shared architecture. It has been shown that training multiple tasks together saves inference time and compute resources, while the objectives' performance remains at a similar or even higher level. However, perception-related multitask networks typically cover only closely related tasks, such as object detection, instance and semantic segmentation or depth estimation. Multitask networks with diverse tasks, and the effects of those tasks on one another with respect to efficiency, are not well studied. In this paper, we augment the CenterNet anchor-free approach for training multiple diverse perception-related tasks together, including object detection and semantic segmentation as well as human pose estimation. We refer to this DNN as Multitask-CenterNet (MCN). Additionally, we study different MCN settings for efficiency. The MCN can perform several tasks at once while maintaining, and in some cases even exceeding, the performance values of its corresponding single-task networks. More importantly, the MCN architecture decreases inference time and reduces network size when compared to a composition of single-task networks.

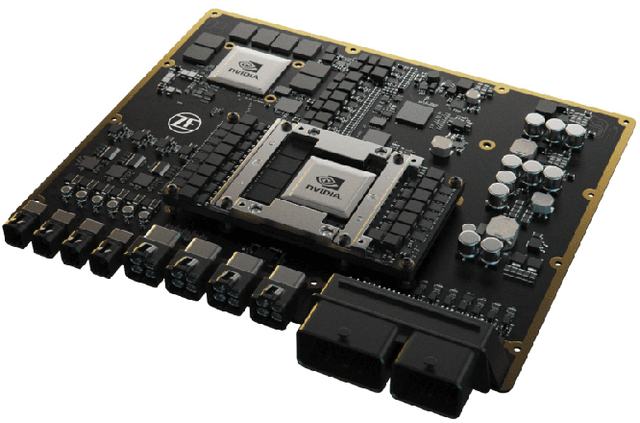

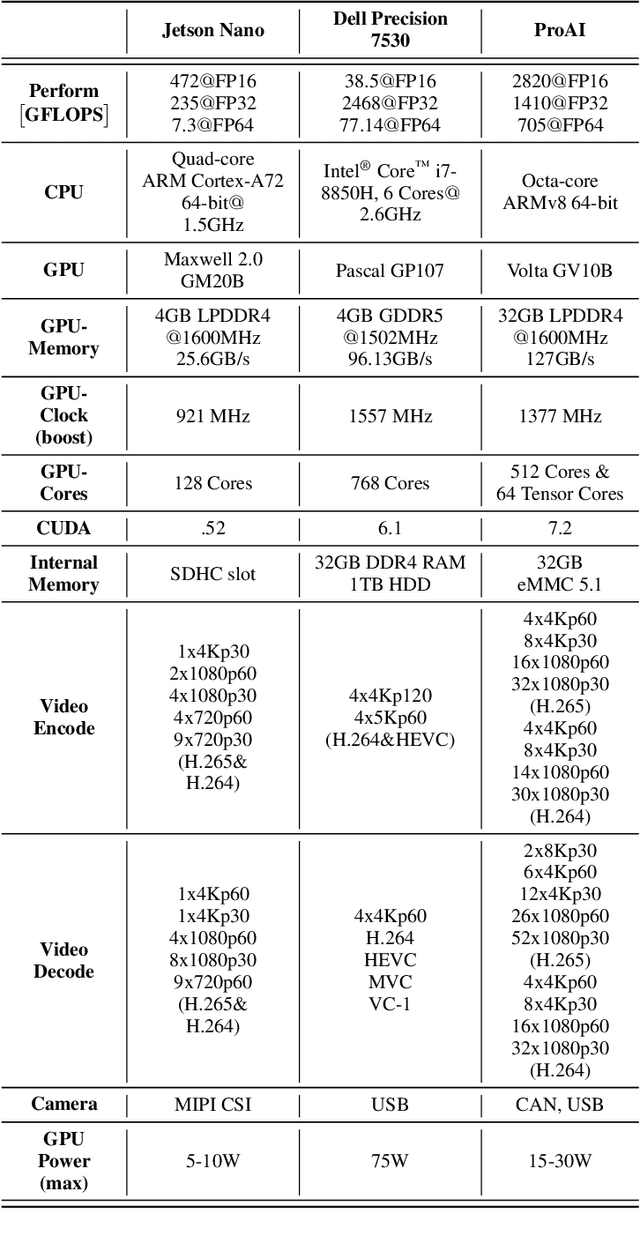

ProAI: An Efficient Embedded AI Hardware for Automotive Applications -- a Benchmark Study

Sep 09, 2021

Abstract: Development in the field of Single Board Computers (SBCs) has been increasing for several years. They provide a good balance between computing performance and power consumption, which is usually required for mobile platforms, such as in-vehicle applications for Advanced Driver Assistance Systems (ADAS) and Autonomous Driving (AD). However, there is an ever-increasing need for more powerful and efficient SBCs that can run power-intensive Deep Neural Networks (DNNs) in real time and can also satisfy necessary functional safety requirements such as Automotive Safety Integrity Level (ASIL). ProAI is being developed by ZF mainly to run powerful and efficient applications such as multitask DNNs, and on top of that it also has the required safety certification for AD. In this work, we compare and discuss state-of-the-art SBCs on the basis of a power-intensive multitask DNN architecture called Multitask-CenterNet with respect to performance measures such as FPS and power efficiency. As an automotive supercomputer, ProAI delivers an excellent combination of performance and efficiency, managing nearly twice as many FPS per watt as a modern workstation laptop and almost four times as many as the Jetson Nano. Furthermore, the CPU and GPU utilization during the benchmark showed that there is still power in reserve on the ProAI for further and more complex tasks.
