Rajini Makam

Development of Domain-Invariant Visual Enhancement and Restoration (DIVER) Approach for Underwater Images

Jan 30, 2026

Abstract:Underwater images suffer severe degradation due to wavelength-dependent attenuation, scattering, and illumination non-uniformity that vary across water types and depths. We propose an unsupervised Domain-Invariant Visual Enhancement and Restoration (DIVER) framework that integrates empirical correction with physics-guided modeling for robust underwater image enhancement. DIVER first applies either IlluminateNet for adaptive luminance enhancement or a Spectral Equalization Filter for spectral normalization. An Adaptive Optical Correction Module then refines hue and contrast using channel-adaptive filtering, while Hydro-OpticNet employs physics-constrained learning to compensate for backscatter and wavelength-dependent attenuation. The parameters of IlluminateNet and Hydro-OpticNet are optimized via unsupervised learning using a composite loss function. DIVER is evaluated on eight diverse datasets covering shallow, deep, and highly turbid environments, including both naturally low-light and artificially illuminated scenes, using reference and non-reference metrics. While state-of-the-art methods such as WaterNet, UDNet, and Phaseformer perform reasonably in shallow water, their performance degrades in deep, unevenly illuminated, or artificially lit conditions. In contrast, DIVER consistently achieves best or near-best performance across all datasets, demonstrating strong domain-invariant capability. DIVER yields at least a 9% improvement over SOTA methods in UCIQE. On the low-light SeaThru dataset, where color-palette references enable direct evaluation of color restoration, DIVER achieves at least a 4.9% reduction in GPMAE compared to existing methods. Beyond visual quality, DIVER also improves robotic perception by enhancing ORB-based keypoint repeatability and matching performance, confirming its robustness across diverse underwater environments.
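To make the GPMAE figure more concrete, the following is a minimal sketch of a grayscale-patch angular error, assuming the common definition in which each gray patch of a color chart is compared against the achromatic axis (1, 1, 1) in RGB space. The function names and the patch values are hypothetical, and the exact patch selection and color space used in the paper are not stated in the abstract.

```python
import math

def angular_error_deg(rgb):
    """Angle in degrees between an RGB triplet and the achromatic axis (1, 1, 1)."""
    r, g, b = rgb
    norm = math.sqrt(r * r + g * g + b * b)
    if norm == 0.0:
        raise ValueError("a black patch has no defined chromatic direction")
    cos_angle = (r + g + b) / (norm * math.sqrt(3.0))
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))

def gpmae(patch_means):
    """Mean angular error over the mean RGB values of the gray patches."""
    errors = [angular_error_deg(p) for p in patch_means]
    return sum(errors) / len(errors)

# Hypothetical patch means: a blue-green cast before enhancement, near-neutral after.
raw_patches = [(0.10, 0.30, 0.35), (0.20, 0.45, 0.50)]
enhanced_patches = [(0.30, 0.31, 0.32), (0.48, 0.50, 0.50)]
print(gpmae(raw_patches), gpmae(enhanced_patches))
```

A perfectly neutral patch gives an error of zero, so a lower value indicates that gray patches were restored closer to true gray.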

Performance Analysis of Spatial and Temporal Learning Networks in the Presence of DVL Noise

Mar 07, 2025

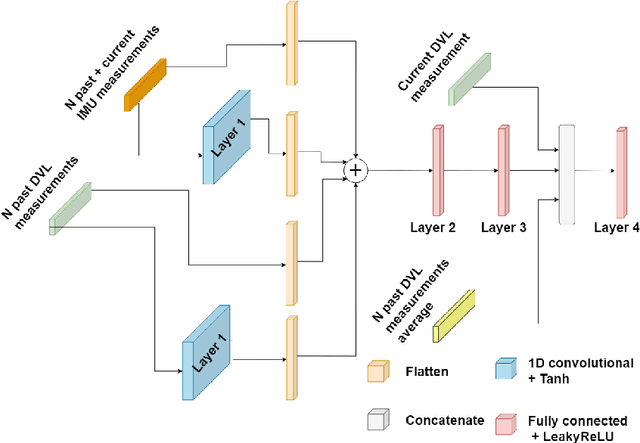

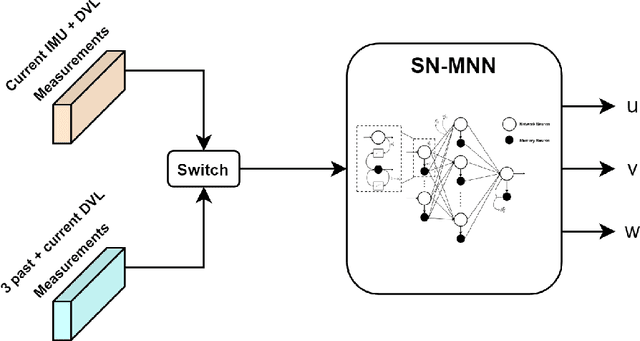

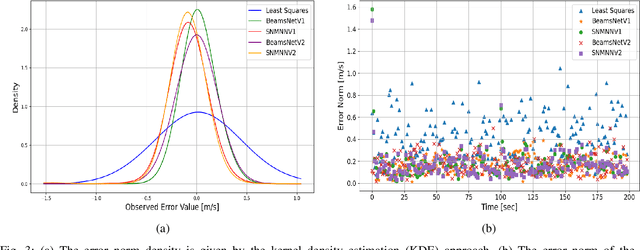

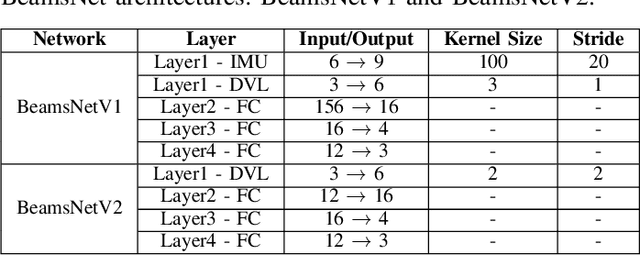

Abstract:Navigation is a critical aspect of autonomous underwater vehicles (AUVs) operating in complex underwater environments. Since global navigation satellite system (GNSS) signals are unavailable underwater, navigation relies on inertial sensing, which tends to accumulate errors over time. To mitigate this, the Doppler velocity log (DVL) plays a crucial role in determining navigation accuracy. In this paper, we compare two neural network models: an adapted version of BeamsNet, based on a one-dimensional convolutional neural network, and a Spectrally Normalized Memory Neural Network (SNMNN). The former focuses on extracting spatial features, while the latter leverages memory and temporal features to provide more accurate velocity estimates while handling biased and noisy DVL data. The proposed approaches were trained and tested on real AUV data collected in the Mediterranean Sea. Both models are evaluated in terms of accuracy and estimation certainty and are benchmarked against the least squares (LS) method, the current model-based approach. The results show that the neural network models achieve over a 50% improvement in RMSE for the estimation of the AUV velocity, with a smaller standard deviation.
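As a rough illustration of the least squares baseline mentioned above, the sketch below estimates a 3D body velocity from four per-beam DVL velocities. The beam geometry (four beams in an "x" layout, tilted 20 degrees from the vertical axis), the noise level, and the function names are assumptions made for this example and are not taken from the paper.

```python
import numpy as np

def beam_matrix(tilt_deg=20.0):
    """Stack unit direction vectors of four beams in an assumed 'x' layout.

    tilt_deg is the assumed angle between each beam and the vertical axis.
    """
    tilt = np.deg2rad(tilt_deg)
    yaws = np.deg2rad(45.0 + 90.0 * np.arange(4))
    return np.column_stack([
        np.cos(yaws) * np.sin(tilt),
        np.sin(yaws) * np.sin(tilt),
        np.full(4, np.cos(tilt)),
    ])

def ls_velocity(beam_velocities, H):
    """Least-squares estimate of the body velocity v from y = H v + noise."""
    v_hat, *_ = np.linalg.lstsq(H, beam_velocities, rcond=None)
    return v_hat

rng = np.random.default_rng(0)
H = beam_matrix()
v_true = np.array([1.0, 0.2, -0.1])                 # hypothetical velocity in m/s
y_clean = H @ v_true                                # ideal per-beam velocities
y_noisy = y_clean + rng.normal(0.0, 0.02, size=4)   # hypothetical noise level
print(ls_velocity(y_clean, H), ls_velocity(y_noisy, H))
```

With noise-free beams the estimate recovers the true velocity exactly; with noisy or biased beams the error passes straight through the linear solve, which is the gap the learned models aim to close.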

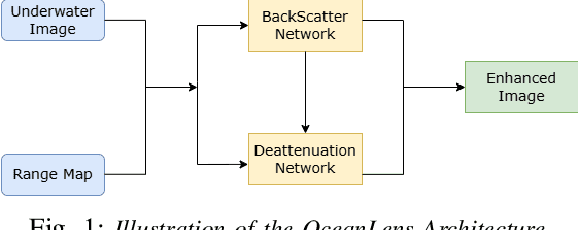

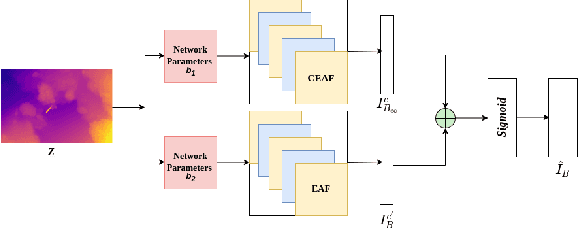

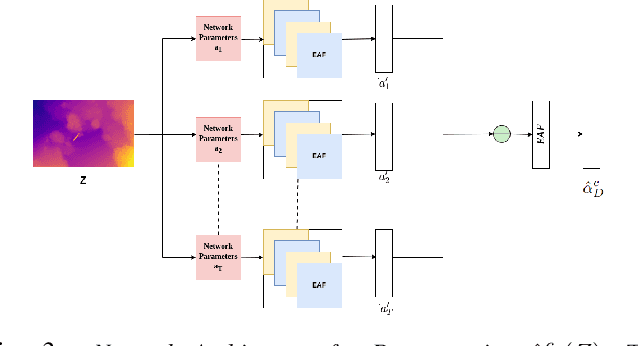

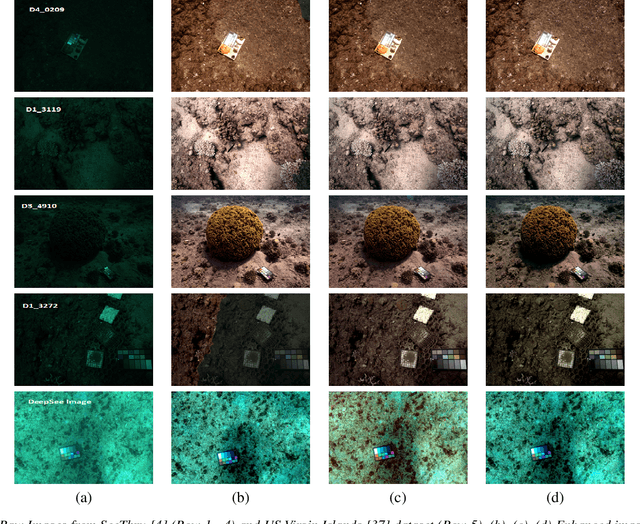

OceanLens: An Adaptive Backscatter and Edge Correction using Deep Learning Model for Enhanced Underwater Imaging

Nov 20, 2024

Abstract:Underwater environments pose significant challenges due to the selective absorption and scattering of light by water, which affects image clarity, contrast, and color fidelity. To overcome these challenges, we introduce OceanLens, a method that models underwater image physics, encompassing both backscatter and attenuation, using neural networks. Our model incorporates adaptive backscatter and edge correction losses, specifically Sobel and LoG losses, to manage image variance and luminance, resulting in clearer and more accurate outputs. Additionally, we demonstrate the relevance of pre-trained monocular depth estimation models for generating underwater depth maps. Our evaluation compares the performance of various loss functions against state-of-the-art methods using the SeeThru dataset, revealing significant improvements. Specifically, we observe an average 65% reduction in Grayscale Patch Mean Angular Error (GPMAE) and a 60% increase in the Underwater Image Quality Metric (UIQM) compared to the SeeThru and DeepSeeColor methods. The results improve further when additional convolutional layers are added to OceanLens, as these capture subtle image details more effectively. This architecture is validated on the UIEB dataset, with model performance assessed using Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity Index Measure (SSIM) metrics. OceanLens with multiple convolutional layers achieves an improvement of 12-15% in SSIM.
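The backscatter-plus-attenuation physics referred to above is often written as a direct signal that decays with range plus a backscatter term that saturates with range. The sketch below assumes that widely used form with fixed, hypothetical per-channel coefficients; OceanLens estimates such quantities with neural networks, so this illustrates only the model structure, not the paper's method.

```python
import numpy as np

def degrade(J, depth, beta_d, beta_b, b_inf):
    """Forward model: attenuated scene radiance plus range-dependent backscatter."""
    z = depth[..., None]                         # (H, W, 1) broadcasts over RGB
    direct = J * np.exp(-beta_d * z)
    backscatter = b_inf * (1.0 - np.exp(-beta_b * z))
    return direct + backscatter

def restore(I, depth, beta_d, beta_b, b_inf):
    """Invert the forward model when range and coefficients are known."""
    z = depth[..., None]
    backscatter = b_inf * (1.0 - np.exp(-beta_b * z))
    return (I - backscatter) * np.exp(beta_d * z)

rng = np.random.default_rng(0)
J = rng.uniform(0.0, 1.0, size=(4, 4, 3))        # hypothetical clean image
depth = rng.uniform(1.0, 5.0, size=(4, 4))       # hypothetical range map in metres
beta_d = np.array([0.40, 0.12, 0.08])            # assumed attenuation (R, G, B)
beta_b = np.array([0.35, 0.15, 0.10])            # assumed backscatter coefficients
b_inf = np.array([0.05, 0.30, 0.40])             # assumed veiling light (R, G, B)

I = degrade(J, depth, beta_d, beta_b, b_inf)
J_rec = restore(I, depth, beta_d, beta_b, b_inf)
print(np.abs(J_rec - J).max())
```

The inversion needs a per-pixel range map, which is why depth estimation, here from pre-trained monocular models, matters for this family of methods.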

EROAS: 3D Efficient Reactive Obstacle Avoidance System for Autonomous Underwater Vehicles using 2.5D Forward-Looking Sonar

Nov 08, 2024

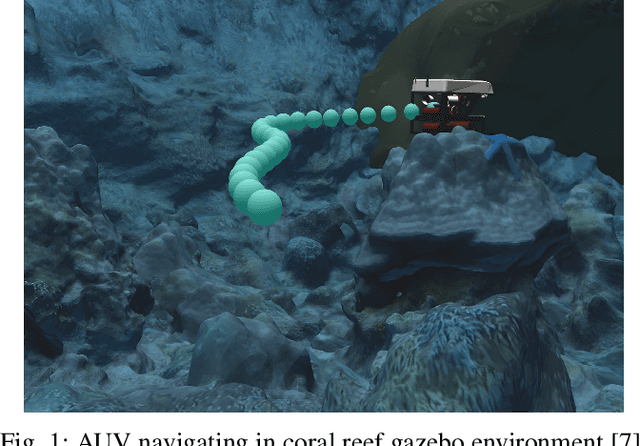

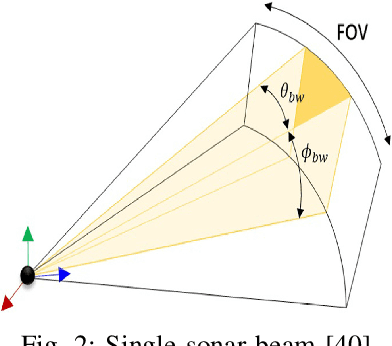

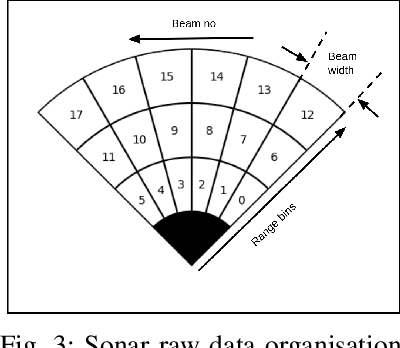

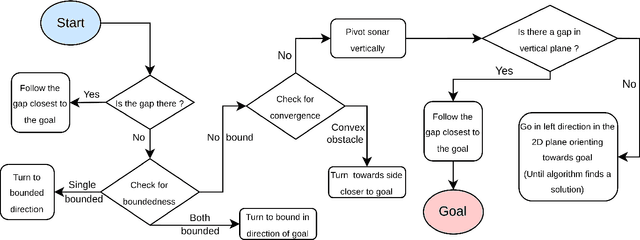

Abstract:Autonomous Underwater Vehicles (AUVs) have advanced considerably in a short period of time. While advances in sonar and camera technology, combined with deep learning, greatly aid obstacle detection and path planning, achieving the right balance between computational resources, precision, and safety remains a challenge. Finding optimal solutions for real-time navigation in cluttered environments becomes pivotal, as systems have to process large amounts of data efficiently. In this work, we propose a novel obstacle avoidance method for navigating 3D underwater environments. This approach utilizes a standard multibeam forward-looking sonar to detect and map obstacles in a 3D environment. Instead of using computationally expensive 3D sensors, we pivot the 2D sonar to obtain 3D heuristic data, effectively transforming the sensor into a 2.5D sonar for real-time 3D navigation decisions. This approach enhances obstacle detection and navigation by combining the simplicity of 2D sonar with the depth perception typically associated with 3D systems. We further incorporate a Control Barrier Function (CBF) as a filter to ensure the safety of the AUV. The effectiveness of this algorithm was tested on a six degrees of freedom (DOF) rover in various simulation scenarios. The results demonstrate that the system successfully avoids obstacles and navigates toward predefined goals, showcasing its capability to manage complex underwater environments with precision. This paper highlights the potential of 2.5D sonar for improving AUV navigation and offers insights into future enhancements and applications of this technology in underwater autonomous systems. Code: https://github.com/AIRLabIISc/EROAS
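The idea of a CBF acting as a safety filter can be sketched for a much simpler system than the six-DOF vehicle in the paper. The example below assumes single-integrator dynamics (the command is the velocity), a single spherical obstacle, and a barrier h(x) = |x - c|^2 - r^2; with one constraint, the usual quadratic program has a closed-form solution. All names and numbers are hypothetical.

```python
def cbf_filter(x, u_nom, obstacle, radius, alpha=1.0):
    """Minimally modify u_nom so that dh/dt + alpha * h >= 0.

    h(x) = |x - c|^2 - r^2 is positive outside the obstacle sphere.
    """
    diff = [xi - ci for xi, ci in zip(x, obstacle)]
    h = sum(d * d for d in diff) - radius ** 2
    grad = [2.0 * d for d in diff]               # gradient of h with respect to x
    # Single-integrator assumption: x_dot = u, so dh/dt = grad . u
    slack = sum(g * u for g, u in zip(grad, u_nom)) + alpha * h
    if slack >= 0.0:
        return list(u_nom)                       # nominal command is already safe
    grad_sq = sum(g * g for g in grad)
    if grad_sq == 0.0:
        raise ValueError("state coincides with the obstacle centre")
    # Closed-form one-constraint QP: project u_nom onto the safe half-space.
    return [u - slack * g / grad_sq for u, g in zip(u_nom, grad)]

# Vehicle at the origin heading straight at an obstacle of radius 1 centred at x = 2.
u_safe = cbf_filter((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0), 1.0)
# Same state, but the nominal command points away from the obstacle.
u_free = cbf_filter((0.0, 0.0, 0.0), (-1.0, 0.0, 0.0), (2.0, 0.0, 0.0), 1.0)
print(u_safe, u_free)
```

The filter leaves the planner's command untouched whenever it is already safe and otherwise applies the smallest correction that satisfies the barrier condition, which is what makes it usable as a final layer behind a reactive planner.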

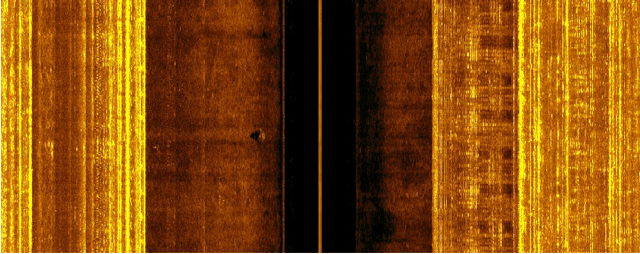

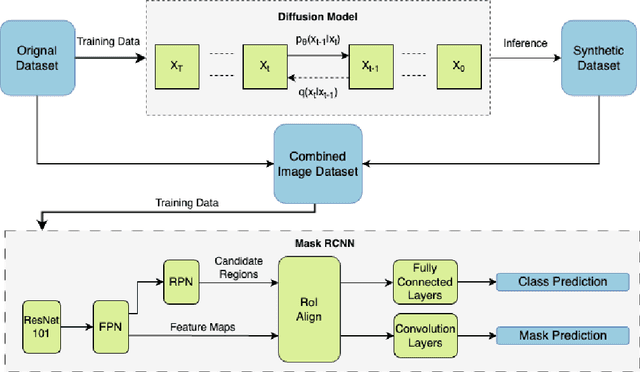

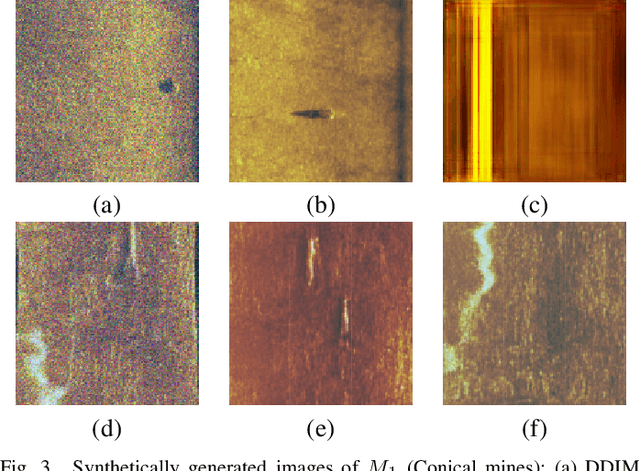

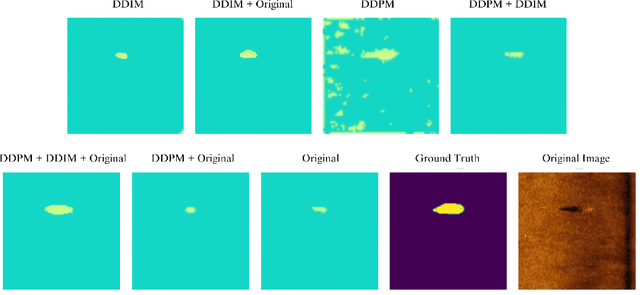

Syn2Real Domain Generalization for Underwater Mine-like Object Detection Using Side-Scan Sonar

Oct 16, 2024

Abstract:Underwater mine detection with deep learning suffers from limitations due to the scarcity of real-world data. This scarcity leads to overfitting, where models perform well on training data but poorly on unseen data. This paper proposes a Syn2Real (Synthetic to Real) domain generalization approach using diffusion models to address this challenge. We demonstrate that synthetic data generated with noise by DDPM and DDIM models, even if not perfectly realistic, can effectively augment real-world samples for training. The residual noise in the final sampled images improves the model's ability to generalize to real-world data with inherent noise and high variation. The baseline Mask-RCNN model, when trained on a combination of synthetic and original training datasets, exhibited an increase of approximately 60% in Average Precision (AP) compared to training solely on the original training data. This significant improvement highlights the potential of Syn2Real domain generalization for underwater mine detection tasks.
