Sharanya Patil

Development of Domain-Invariant Visual Enhancement and Restoration (DIVER) Approach for Underwater Images

Jan 30, 2026

Abstract: Underwater images suffer severe degradation due to wavelength-dependent attenuation, scattering, and illumination non-uniformity that vary across water types and depths. We propose an unsupervised Domain-Invariant Visual Enhancement and Restoration (DIVER) framework that integrates empirical correction with physics-guided modeling for robust underwater image enhancement. DIVER first applies either IlluminateNet for adaptive luminance enhancement or a Spectral Equalization Filter for spectral normalization. An Adaptive Optical Correction Module then refines hue and contrast using channel-adaptive filtering, while Hydro-OpticNet employs physics-constrained learning to compensate for backscatter and wavelength-dependent attenuation. The parameters of IlluminateNet and Hydro-OpticNet are optimized via unsupervised learning using a composite loss function. DIVER is evaluated on eight diverse datasets covering shallow, deep, and highly turbid environments, including both naturally low-light and artificially illuminated scenes, using reference and non-reference metrics. While state-of-the-art methods such as WaterNet, UDNet, and Phaseformer perform reasonably in shallow water, their performance degrades in deep, unevenly illuminated, or artificially lit conditions. In contrast, DIVER consistently achieves best or near-best performance across all datasets, demonstrating strong domain-invariant capability. DIVER yields at least a 9% improvement over SOTA methods in UCIQE. On the low-light SeaThru dataset, where color-palette references enable direct evaluation of color restoration, DIVER achieves at least a 4.9% reduction in GPMAE compared to existing methods. Beyond visual quality, DIVER also improves robotic perception by enhancing ORB-based keypoint repeatability and matching performance, confirming its robustness across diverse underwater environments.
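The GPMAE figure reported on SeaThru can be made concrete with a small sketch. It assumes GPMAE (Grayscale Patch Mean Angular Error, as expanded in the OceanLens abstract) is the mean angle between each restored gray patch's average RGB value and the achromatic axis (1, 1, 1), which is how gray-patch angular error is commonly computed. The abstract does not spell out the formula, so the function names and the patch values below are illustrative assumptions, not the authors' evaluation code.

```python
import math

def angular_error_deg(rgb):
    """Angle in degrees between an RGB triplet and the achromatic axis (1, 1, 1)."""
    r, g, b = rgb
    norm = math.sqrt(r * r + g * g + b * b)
    if norm == 0.0:
        raise ValueError("angular error is undefined for a black patch")
    cos_angle = (r + g + b) / (norm * math.sqrt(3.0))
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, cos_angle))
    return math.degrees(math.acos(cos_angle))

def gpmae(gray_patch_means):
    """Mean angular error over the mean RGB values of the gray patches (assumed definition)."""
    errors = [angular_error_deg(patch) for patch in gray_patch_means]
    return sum(errors) / len(errors)

# Hypothetical mean RGB values of gray chart patches, before and after restoration.
raw_patches = [(0.20, 0.45, 0.50), (0.10, 0.30, 0.35)]
restored_patches = [(0.40, 0.42, 0.41), (0.25, 0.26, 0.25)]
print(gpmae(raw_patches), gpmae(restored_patches))
```

A perfectly neutral patch scores 0 degrees, so a lower GPMAE indicates that the gray patches came out closer to true gray after restoration.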

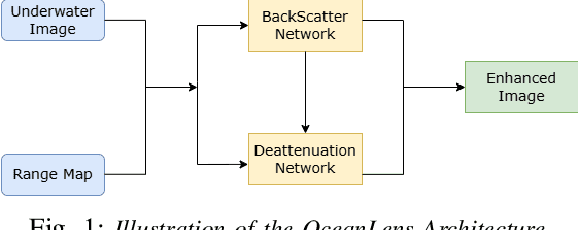

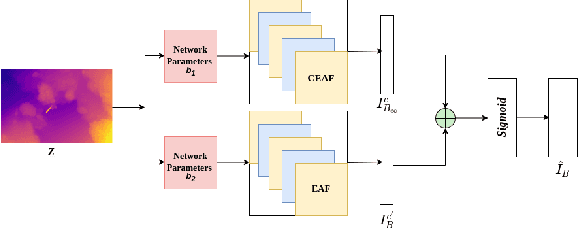

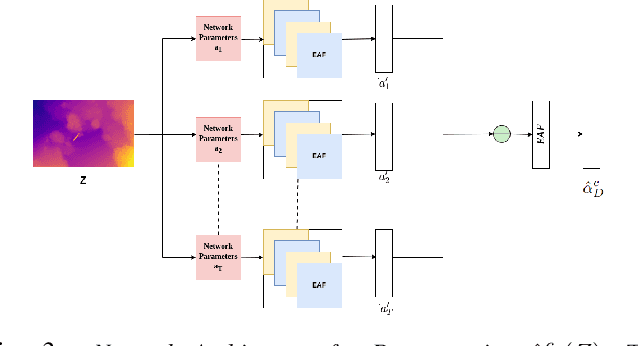

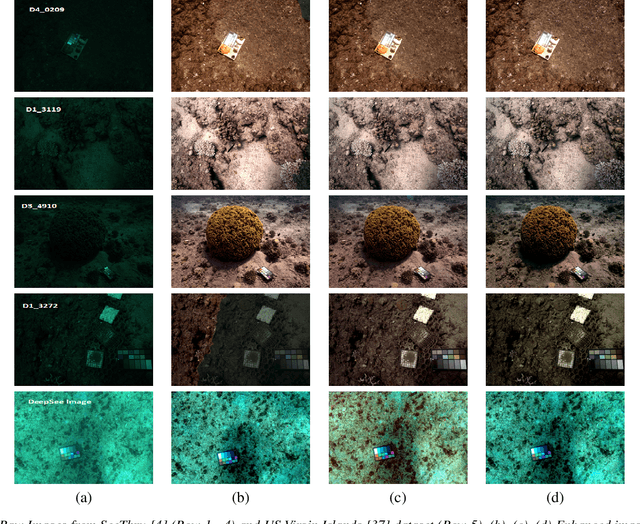

OceanLens: An Adaptive Backscatter and Edge Correction using Deep Learning Model for Enhanced Underwater Imaging

Nov 20, 2024

Abstract: Underwater environments pose significant challenges due to the selective absorption and scattering of light by water, which affects image clarity, contrast, and color fidelity. To overcome these challenges, we introduce OceanLens, a method that uses neural networks to model underwater image physics, encompassing both backscatter and attenuation. Our model incorporates adaptive backscatter and edge correction losses, specifically Sobel and LoG losses, to manage image variance and luminance, resulting in clearer and more accurate outputs. Additionally, we demonstrate the relevance of pre-trained monocular depth estimation models for generating underwater depth maps. Our evaluation compares the performance of various loss functions against state-of-the-art methods using the SeeThru dataset, revealing significant improvements. Specifically, we observe an average 65% reduction in Grayscale Patch Mean Angular Error (GPMAE) and a 60% increase in the Underwater Image Quality Metric (UIQM) compared to the SeeThru and DeepSeeColor methods. The results improve further when additional convolution layers are added to OceanLens, which capture subtle image details more effectively. This architecture is validated on the UIEB dataset, with model performance assessed using Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity Index Measure (SSIM) metrics. OceanLens with multiple convolutional layers achieves an improvement of up to 12-15% in SSIM.
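To illustrate what modeling "both backscatter and attenuation" involves, here is a minimal per-pixel sketch of a widely used underwater image formation model: observed = scene * exp(-beta_d * z) + b_inf * (1 - exp(-beta_b * z)), where z is the range to the scene. The abstract does not give OceanLens's exact formulation or how its networks estimate these terms, so the function names and all coefficient values below are assumptions chosen for illustration.

```python
import math

def degrade(scene, z, beta_d, beta_b, b_inf):
    """Forward model for one RGB pixel: attenuated direct signal plus backscatter."""
    return [
        s * math.exp(-bd * z) + bi * (1.0 - math.exp(-bb * z))
        for s, bd, bb, bi in zip(scene, beta_d, beta_b, b_inf)
    ]

def restore(observed, z, beta_d, beta_b, b_inf):
    """Invert the model: subtract backscatter, then undo attenuation."""
    return [
        (o - bi * (1.0 - math.exp(-bb * z))) * math.exp(bd * z)
        for o, bd, bb, bi in zip(observed, beta_d, beta_b, b_inf)
    ]

# Illustrative per-channel (R, G, B) coefficients; red attenuates fastest in water.
beta_d = (0.60, 0.20, 0.15)   # attenuation coefficients (1/m), assumed values
beta_b = (0.40, 0.25, 0.20)   # backscatter coefficients (1/m), assumed values
b_inf = (0.05, 0.30, 0.40)    # veiling light at infinite range, assumed values

scene = [0.8, 0.6, 0.4]       # true scene radiance for one pixel
z = 3.0                       # range in metres, e.g. from a monocular depth estimate

observed = degrade(scene, z, beta_d, beta_b, b_inf)
recovered = restore(observed, z, beta_d, beta_b, b_inf)
print(observed, recovered)
```

In this sketch the coefficients are known, so the inversion is exact. In practice they are unknown and vary with water type and depth, which is the part a learned model has to estimate from the image and its depth map.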
