Raj Palleti

Learning production functions for supply chains with graph neural networks

Jul 26, 2024

Abstract: The global economy relies on the flow of goods over supply chain networks, with nodes as firms and edges as transactions between firms. While we may observe these external transactions, they are governed by unseen production functions, which determine how firms internally transform the input products they receive into output products that they sell. In this setting, it can be extremely valuable to infer these production functions, to better understand and improve supply chains, and to forecast future transactions more accurately. However, existing graph neural networks (GNNs) cannot capture these hidden relationships between nodes' inputs and outputs. Here, we introduce a new class of models for this setting, by combining temporal GNNs with a novel inventory module, which learns production functions via attention weights and a special loss function. We evaluate our models extensively on real supply chains data, along with data generated from our new open-source simulator, SupplySim. Our models successfully infer production functions, with a 6-50% improvement over baselines, and forecast future transactions on real and synthetic data, outperforming baselines by 11-62%.

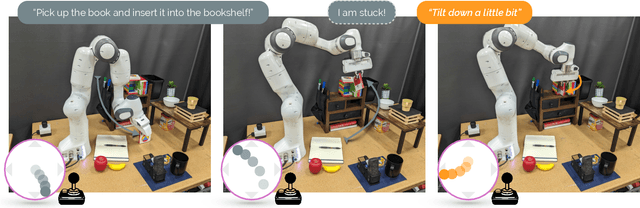

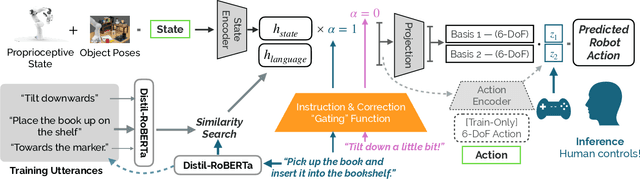

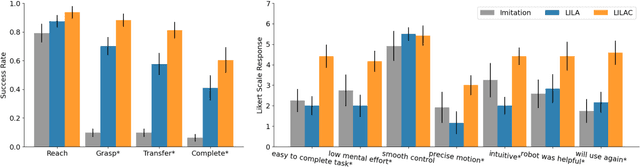

"No, to the Right" -- Online Language Corrections for Robotic Manipulation via Shared Autonomy

Jan 06, 2023

Abstract: Systems for language-guided human-robot interaction must satisfy two key desiderata for broad adoption: adaptivity and learning efficiency. Unfortunately, existing instruction-following agents cannot adapt, lacking the ability to incorporate online natural language supervision, and even if they could, require hundreds of demonstrations to learn even simple policies. In this work, we address these problems by presenting Language-Informed Latent Actions with Corrections (LILAC), a framework for incorporating and adapting to natural language corrections - "to the right," or "no, towards the book" - online, during execution. We explore rich manipulation domains within a shared autonomy paradigm. Instead of discrete turn-taking between a human and robot, LILAC splits agency between the human and robot: language is an input to a learned model that produces a meaningful, low-dimensional control space that the human can use to guide the robot. Each real-time correction refines the human's control space, enabling precise, extended behaviors - with the added benefit of requiring only a handful of demonstrations to learn. We evaluate our approach via a user study where users work with a Franka Emika Panda manipulator to complete complex manipulation tasks. Compared to existing learned baselines covering both open-loop instruction following and single-turn shared autonomy, we show that our corrections-aware approach obtains higher task completion rates, and is subjectively preferred by users because of its reliability, precision, and ease of use.
