Rahul Peddi

A Decision Tree-based Monitoring and Recovery Framework for Autonomous Robots with Decision Uncertainties

Aug 02, 2023

Abstract: Autonomous mobile robots (AMRs) operating in the real world often need to make critical decisions that directly impact their own safety and the safety of their surroundings. Learning-based approaches for decision making have gained popularity in recent years, since decisions can be made very quickly and with reasonable levels of accuracy for many applications. These approaches, however, typically return only one decision, and if the learner is poorly trained or observations are noisy, the decision may be incorrect. This problem is further exacerbated when the robot is making decisions about its own failures, such as faulty actuators or sensors and external disturbances, where a wrong decision can immediately cause damage to the robot. In this paper, we consider this very case study: a robot dealing with such failures must quickly assess uncertainties and make safe decisions. We propose an uncertainty-aware, learning-based failure detection and recovery approach, in which we leverage Decision Tree theory along with Model Predictive Control to detect and explain which failure is compromising the system, assess uncertainties associated with the failure, and lastly, find and validate corrective controls to recover the system. Our approach is validated with simulations and real experiments on a faulty unmanned ground vehicle (UGV) navigation case study, demonstrating recovery to safety under uncertainties.

Autonomous Ground Navigation in Highly Constrained Spaces: Lessons learned from The BARN Challenge at ICRA 2022

Aug 22, 2022

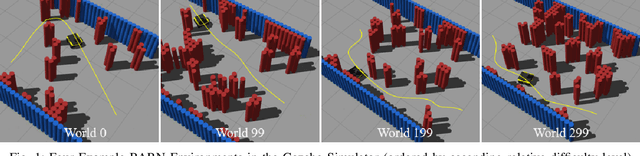

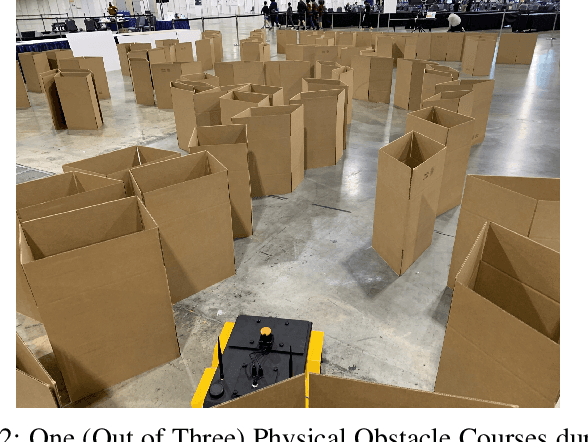

Abstract: The BARN (Benchmark Autonomous Robot Navigation) Challenge took place at the 2022 IEEE International Conference on Robotics and Automation (ICRA 2022) in Philadelphia, PA. The aim of the challenge was to evaluate state-of-the-art autonomous ground navigation systems for moving robots through highly constrained environments in a safe and efficient manner. Specifically, the task was to navigate a standardized, differential-drive ground robot from a predefined start location to a goal location as quickly as possible without colliding with any obstacles, both in simulation and in the real world. Five teams from all over the world participated in the qualifying simulation competition, three of which were invited to compete with each other at a set of physical obstacle courses at the conference center in Philadelphia. The competition results suggest that autonomous ground navigation in highly constrained spaces, despite seeming simple even to experienced roboticists, is actually far from being a solved problem. In this article, we discuss the challenge, the approaches used by the top three teams, and lessons learned to direct future research.

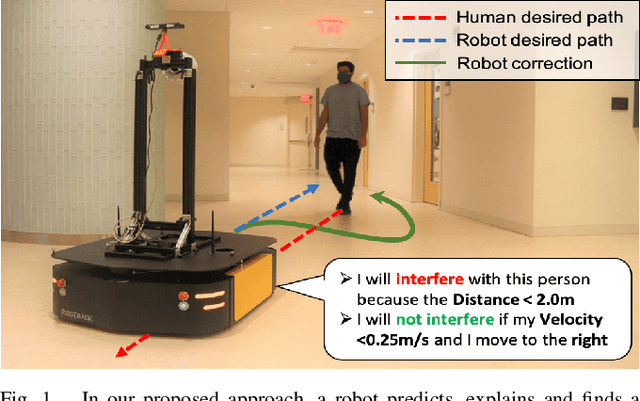

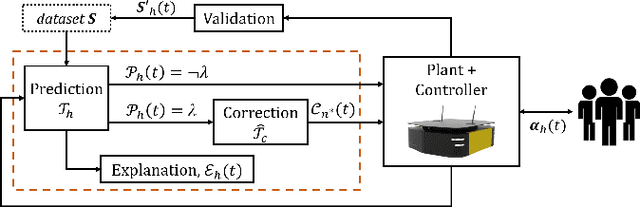

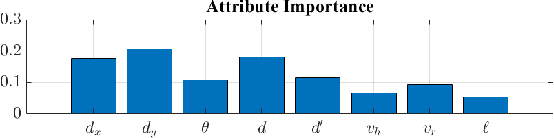

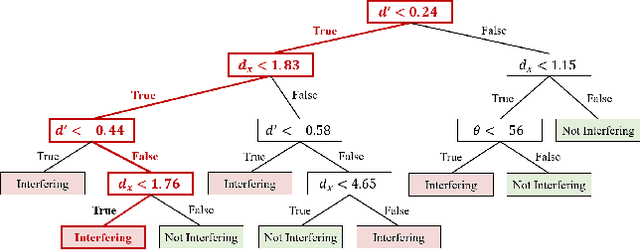

Interpretable Run-Time Prediction and Planning in Co-Robotic Environments

Sep 08, 2021

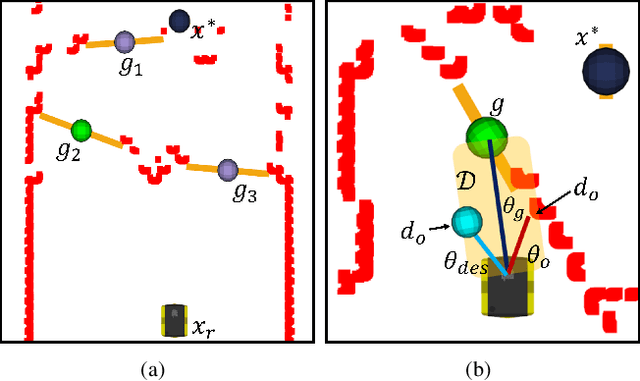

Abstract: Mobile robots are traditionally developed to be reactive and avoid collisions with surrounding humans, often moving in unnatural ways without following social protocols, forcing people to behave very differently from human-human interaction rules. Humans, on the other hand, are seamlessly able to understand why they may interfere with surrounding humans and change their behavior based on their reasoning, resulting in smooth, intuitive avoidance behaviors. In this paper, we propose an approach for a mobile robot to avoid interfering with the desired paths of surrounding humans. We leverage a library of previously observed trajectories to design a decision-tree-based interpretable monitor that: i) predicts whether the robot is interfering with surrounding humans, ii) explains what behaviors are causing either prediction, and iii) plans corrective behaviors if interference is predicted. We also propose a validation scheme to improve the predictive model at run-time. The proposed approach is validated with simulations and experiments involving an unmanned ground vehicle (UGV) performing go-to-goal operations in the presence of humans, demonstrating non-interfering behaviors and run-time learning.
