Nicola Bezzo

University of Virginia

A Schwarz-Christoffel Mapping-based Framework for Sim-to-Real Transfer in Autonomous Robot Operations

Mar 20, 2025

Abstract:Despite the remarkable acceleration of robotic development through advanced simulation technology, robotic applications are often subject to performance reductions in real-world deployment due to the inherent discrepancy between simulation and reality, often referred to as the "sim-to-real gap". This gap arises from factors like model inaccuracies, environmental variations, and unexpected disturbances. Similarly, model discrepancies caused by system degradation over time or minor changes in the system's configuration also hinder the effectiveness of the developed methodologies. Effectively closing these gaps is critical and remains an open challenge. This work proposes a lightweight conformal mapping framework to transfer control and planning policies from an expert teacher to a degraded less capable learner. The method leverages Schwarz-Christoffel Mapping (SCM) to geometrically map teacher control inputs into the learner's command space, ensuring maneuver consistency. To demonstrate its generality, the framework is applied to two representative types of control and planning methods in a path-tracking task: 1) a discretized motion primitives command transfer and 2) a continuous Model Predictive Control (MPC)-based command transfer. The proposed framework is validated through extensive simulations and real-world experiments, demonstrating its effectiveness in reducing the sim-to-real gap by closely transferring teacher commands to the learner robot.

Soft Actor-Critic-based Control Barrier Adaptation for Robust Autonomous Navigation in Unknown Environments

Mar 11, 2025

Abstract:Motion planning failures during autonomous navigation often occur when safety constraints are either too conservative, leading to deadlocks, or too liberal, resulting in collisions. To improve robustness, a robot must dynamically adapt its safety constraints to ensure it reaches its goal while balancing safety and performance measures. To this end, we propose a Soft Actor-Critic (SAC)-based policy for adapting Control Barrier Function (CBF) constraint parameters at runtime, ensuring safe yet non-conservative motion. The proposed approach is designed for a general high-level motion planner, low-level controller, and target system model, and is trained in simulation only. Through extensive simulations and physical experiments, we demonstrate that our framework effectively adapts CBF constraints, enabling the robot to reach its final goal without compromising safety.

Implicit Coordination using Active Epistemic Inference

Jan 07, 2025

Abstract:A Multi-robot system (MRS) provides significant advantages for intricate tasks such as environmental monitoring, underwater inspections, and space missions. However, addressing potential communication failures or the lack of communication infrastructure in these fields remains a challenge. A significant portion of MRS research presumes that the system can maintain communication with proximity constraints, but this approach does not solve situations where communication is either non-existent, unreliable, or poses a security risk. Some approaches tackle this issue using predictions about other robots while not communicating, but these methods generally only permit agents to utilize first-order reasoning, which involves reasoning based purely on their own observations. In contrast, to deal with this problem, our proposed framework utilizes Theory of Mind (ToM), employing higher-order reasoning by shifting a robot's perspective to reason about a belief of others' observations. Our approach has two main phases: i) an efficient runtime plan adaptation using active inference to signal intentions and reason about a robot's own belief and the beliefs of others in the system, and ii) a hierarchical epistemic planning framework to iteratively reason about the current MRS mission state. The proposed framework outperforms greedy and first-order reasoning approaches and is validated using simulations and experiments with heterogeneous robotic systems.

Take Your Best Shot: Sampling-Based Next-Best-View Planning for Autonomous Photography & Inspection

Mar 08, 2024

Abstract:Autonomous mobile robots (AMRs) equipped with high-quality cameras have revolutionized the field of inspections by providing efficient and cost-effective means of conducting surveys. The use of autonomous inspection is becoming more widespread in a variety of contexts, yet it is still challenging to acquire the best inspection information autonomously. In situations where objects may block a robot's view, it is necessary to use reasoning to determine the optimal points for collecting data. Although researchers have explored cloud-based applications to store inspection data, these applications may not operate optimally under network constraints, and parsing these datasets can be manually intensive. Instead, there is an emerging requirement for AMRs to autonomously capture the most informative views efficiently. To address this challenge, we present an autonomous Next-Best-View (NBV) framework that maximizes the inspection information while reducing the number of pictures needed during operations. The framework consists of a formalized evaluation metric using ray-tracing and Gaussian process interpolation to estimate information reward based on the current understanding of the partially-known environment. A derivative-free optimization (DFO) method is used to sample candidate views in the environment and identify the NBV point. The proposed approach's effectiveness is shown by comparing it with existing methods and further validated through simulations and experiments with various vehicles.

Robust Online Epistemic Replanning of Multi-Robot Missions

Mar 01, 2024

Abstract:As Multi-Robot Systems (MRS) become more affordable and computing capabilities grow, they provide significant advantages for complex applications such as environmental monitoring, underwater inspections, or space exploration. However, accounting for potential communication loss or the unavailability of communication infrastructures in these application domains remains an open problem. Much of the applicable MRS research assumes that the system can sustain communication through proximity regulations and formation control or by devising a framework for separating and adhering to a predetermined plan for extended periods of disconnection. The latter technique enables an MRS to be more efficient, but breakdowns and environmental uncertainties can have a domino effect throughout the system, particularly when the mission goal is intricate or time-sensitive. To deal with this problem, our proposed framework has two main phases: i) a centralized planner to allocate mission tasks by rewarding intermittent rendezvous between robots to mitigate the effects of the unforeseen events during mission execution, and ii) a decentralized replanning scheme leveraging epistemic planning to formalize belief propagation and a Monte Carlo tree search for policy optimization given distributed rational belief updates. The proposed framework outperforms a baseline heuristic and is validated using simulations and experiments with aerial vehicles.

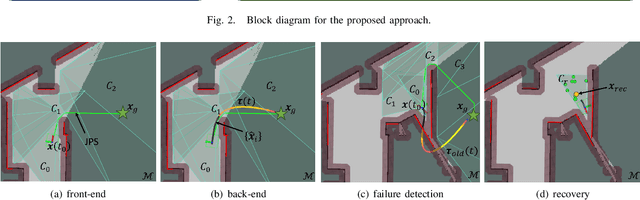

A GP-based Robust Motion Planning Framework for Agile Autonomous Robot Navigation and Recovery in Unknown Environments

Feb 02, 2024

Abstract:For autonomous mobile robots, uncertainties in the environment and system model can lead to failure in the motion planning pipeline, resulting in potential collisions. In order to achieve a high level of robust autonomy, these robots should be able to proactively predict and recover from such failures. To this end, we propose a Gaussian Process (GP) based model for proactively detecting the risk of future motion planning failure. When this risk exceeds a certain threshold, a recovery behavior is triggered that leverages the same GP model to find a safe state from which the robot may continue towards the goal. The proposed approach is trained in simulation only and can generalize to real world environments on different robotic platforms. Simulations and physical experiments demonstrate that our framework is capable of both predicting planner failures and recovering the robot to states where planner success is likely, all while producing agile motion.

Epistemic Planning for Heterogeneous Robotic Systems

Aug 03, 2023

Abstract:In applications such as search and rescue or disaster relief, heterogeneous multi-robot systems (MRS) can provide significant advantages for complex objectives that require a suite of capabilities. However, within these application spaces, communication is often unreliable, causing inefficiencies or outright failures to arise in most MRS algorithms. Many researchers tackle this problem by requiring all robots to either maintain communication using proximity constraints or assuming that all robots will execute a predetermined plan over long periods of disconnection. The latter method allows for higher levels of efficiency in an MRS, but failures and environmental uncertainties can have cascading effects across the system, especially when a mission objective is complex or time-sensitive. To solve this, we propose an epistemic planning framework that allows robots to reason about the system state, leverage heterogeneous system makeups, and optimize information dissemination to disconnected neighbors. Dynamic epistemic logic formalizes the propagation of belief states, and epistemic task allocation and gossip are accomplished via a mixed integer program using the belief states for utility predictions and planning. The proposed framework is validated using simulations and experiments with heterogeneous vehicles.

A Decision Tree-based Monitoring and Recovery Framework for Autonomous Robots with Decision Uncertainties

Aug 02, 2023

Abstract:Autonomous mobile robots (AMR) operating in the real world often need to make critical decisions that directly impact their own safety and the safety of their surroundings. Learning-based approaches for decision making have gained popularity in recent years, since decisions can be made very quickly and with reasonable levels of accuracy for many applications. These approaches, however, typically return only one decision, and if the learner is poorly trained or observations are noisy, the decision may be incorrect. This problem is further exacerbated when the robot is making decisions about its own failures, such as faulty actuators or sensors and external disturbances, when a wrong decision can immediately cause damage to the robot. In this paper, we consider this very case study: a robot dealing with such failures must quickly assess uncertainties and make safe decisions. We propose an uncertainty-aware learning-based failure detection and recovery approach, in which we leverage Decision Tree theory along with Model Predictive Control to detect and explain which failure is compromising the system, assess uncertainties associated with the failure, and lastly, find and validate corrective controls to recover the system. Our approach is validated with simulations and real experiments on a faulty unmanned ground vehicle (UGV) navigation case study, demonstrating recovery to safety under uncertainties.

A Model Predictive Path Integral Method for Fast, Proactive, and Uncertainty-Aware UAV Planning in Cluttered Environments

Aug 02, 2023

Abstract:Current motion planning approaches for autonomous mobile robots often assume that the low level controller of the system is able to track the planned motion with very high accuracy. In practice, however, tracking error can be affected by many factors, and could lead to potential collisions when the robot must traverse a cluttered environment. To address this problem, this paper proposes a novel receding-horizon motion planning approach based on Model Predictive Path Integral (MPPI) control theory -- a flexible sampling-based control technique that requires minimal assumptions on vehicle dynamics and cost functions. This flexibility is leveraged to propose a motion planning framework that also considers a data-informed risk function. Using the MPPI algorithm as a motion planner also reduces the number of samples required by the algorithm, relaxing the hardware requirements for implementation. The proposed approach is validated through trajectory generation for a quadrotor unmanned aerial vehicle (UAV), where fast motion increases trajectory tracking error and can lead to collisions with nearby obstacles. Simulations and hardware experiments demonstrate that the MPPI motion planner proactively adapts to the obstacles that the UAV must negotiate, slowing down when near obstacles and moving quickly when away from obstacles, resulting in a complete reduction of collisions while still producing lively motion.

Epistemic Prediction and Planning with Implicit Coordination for Multi-Robot Teams in Communication Restricted Environments

Feb 21, 2023

Abstract:In communication-restricted environments, a multi-robot system can be deployed to either: i) maintain constant communication but potentially sacrifice operational efficiency due to proximity constraints, or ii) allow disconnections to increase environmental coverage efficiency, which raises challenges on how, when, and where to reconnect (the rendezvous problem). In this work we tackle the latter problem and note that most state-of-the-art methods assume that robots will be able to execute a predetermined plan; however, system failures and changes in environmental conditions can cause the robots to deviate from the plan, with cascading effects across the multi-robot system. This paper proposes a coordinated epistemic prediction and planning framework to achieve consensus without communicating for exploration and coverage, task discovery and completion, and rendezvous applications. Dynamic epistemic logic is the principal component implemented to allow robots to propagate belief states and empathize with other agents. Propagation of belief states and subsequent coverage of the environment is achieved via a frontier-based method within an artificial physics-based framework. The proposed framework is validated with both simulations and experiments with unmanned ground vehicles in various cluttered environments.
