Raghavendra Selvan

CoDeQ: End-to-End Joint Model Compression with Dead-Zone Quantizer for High-Sparsity and Low-Precision Networks

Dec 15, 2025

Abstract: While joint pruning--quantization is theoretically superior to sequential application, current joint methods rely on auxiliary procedures outside the training loop for finding compression parameters. This reliance adds engineering complexity and hyperparameter tuning, while also lacking a direct data-driven gradient signal, which might result in sub-optimal compression. In this paper, we introduce CoDeQ, a simple, fully differentiable method for joint pruning--quantization. Our approach builds on a key observation: the dead-zone of a scalar quantizer is equivalent to magnitude pruning, and can be used to induce sparsity directly within the quantization operator. Concretely, we parameterize the dead-zone width and learn it via backpropagation, alongside the quantization parameters. This design provides explicit control of sparsity, regularized by a single global hyperparameter, while decoupling sparsity selection from bit-width selection. The result is a method for Compression with Dead-zone Quantizer (CoDeQ) that supports both fixed-precision and mixed-precision quantization (controlled by an optional second hyperparameter). It simultaneously determines the sparsity pattern and quantization parameters in a single end-to-end optimization. Consequently, CoDeQ does not require any auxiliary procedures, making the method architecture-agnostic and straightforward to implement. On ImageNet with ResNet-18, CoDeQ reduces bit operations to ~5% while maintaining close to full-precision accuracy in both fixed and mixed-precision regimes.
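
The key observation in the abstract above, that a quantizer's dead-zone acts as magnitude pruning, can be illustrated with a minimal NumPy sketch. This is not the CoDeQ implementation: the function names `dead_zone_quantize` and `sparsity`, the fixed `step` and `dead_zone` values, and the uniform rounding rule are assumptions made for illustration, and the learned parameters and backpropagation described in the abstract are left out.

```python
import numpy as np


def dead_zone_quantize(w, step, dead_zone):
    """Uniform scalar quantizer with an explicit dead-zone (illustrative only).

    Weights with |w| <= dead_zone are mapped to exactly 0, which is the same
    decision magnitude pruning makes. All other weights are rounded to the
    nearest multiple of `step`.
    """
    w = np.asarray(w, dtype=np.float64)
    keep = np.abs(w) > dead_zone          # magnitude-pruning mask
    q = np.round(w / step) * step         # plain uniform quantization
    return np.where(keep, q, 0.0)


def sparsity(q):
    """Fraction of entries that are exactly zero."""
    return float(np.mean(np.asarray(q) == 0.0))


# Hypothetical weights: widening the dead-zone raises sparsity
# without changing the quantization step.
weights = np.array([-0.9, -0.3, -0.05, 0.02, 0.2, 0.7])
narrow = dead_zone_quantize(weights, step=0.25, dead_zone=0.1)
wide = dead_zone_quantize(weights, step=0.25, dead_zone=0.25)
print(sparsity(narrow), sparsity(wide))
```

In this sketch the dead-zone width and the step size are separate arguments, which mirrors the abstract's point that sparsity selection is decoupled from bit-width selection.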

The HCI GenAI CO2ST Calculator: A Tool for Calculating the Carbon Footprint of Generative AI Use in Human-Computer Interaction Research

Apr 01, 2025

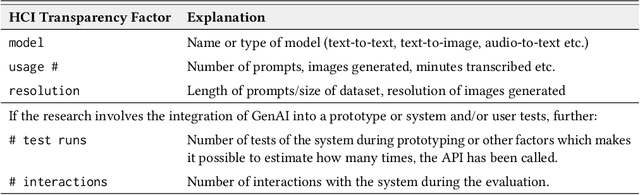

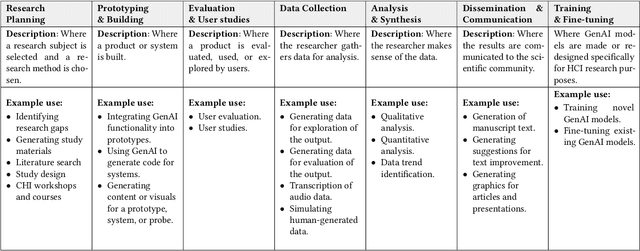

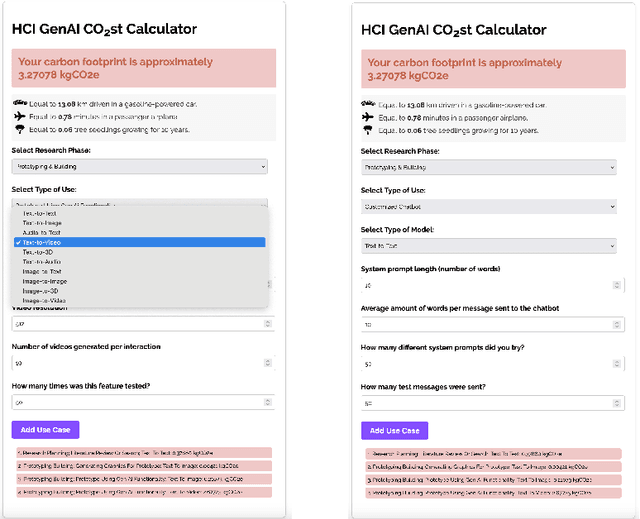

Abstract: Increased usage of generative AI (GenAI) in Human-Computer Interaction (HCI) research induces a climate impact from carbon emissions due to energy consumption of the hardware used to develop and run GenAI models and systems. The exact energy usage and subsequent carbon emissions are difficult to estimate in HCI research because HCI researchers most often use cloud-based services where the hardware and its energy consumption are hidden from plain view. The HCI GenAI CO2ST Calculator is a tool designed specifically for the HCI research pipeline, to help researchers estimate the energy consumption and carbon footprint of using generative AI in their research, either a priori (allowing for mitigation strategies or experimental redesign) or post hoc (allowing for transparent documentation of carbon footprint in written reports of the research).

Climate And Resource Awareness is Imperative to Achieving Sustainable AI (and Preventing a Global AI Arms Race)

Feb 27, 2025

Abstract: Sustainability encompasses three key facets: economic, environmental, and social. However, the nascent discourse that is emerging on sustainable artificial intelligence (AI) has predominantly focused on the environmental sustainability of AI, often neglecting the economic and social aspects. Achieving truly sustainable AI necessitates addressing the tension between its climate awareness and its social sustainability, which hinges on equitable access to AI development resources. The concept of resource awareness advocates for broader access to the infrastructure required to develop AI, fostering equity in AI innovation. Yet, this push for improving accessibility often overlooks the environmental costs of expanding such resource usage. In this position paper, we argue that reconciling climate and resource awareness is essential to realizing the full potential of sustainable AI. We use the framework of base-superstructure to analyze how the material conditions are influencing the current AI discourse. We also introduce the Climate and Resource Aware Machine Learning (CARAML) framework to address this conflict and propose actionable recommendations spanning individual, community, industry, government, and global levels to achieve sustainable AI.

deCIFer: Crystal Structure Prediction from Powder Diffraction Data using Autoregressive Language Models

Feb 04, 2025

Abstract: Novel materials drive progress across applications from energy storage to electronics. Automated characterization of material structures with machine learning methods offers a promising strategy for accelerating this key step in material design. In this work, we introduce an autoregressive language model that performs crystal structure prediction (CSP) from powder diffraction data. The presented model, deCIFer, generates crystal structures in the widely used Crystallographic Information File (CIF) format and can be conditioned on powder X-ray diffraction (PXRD) data. Unlike earlier works that primarily rely on high-level descriptors like composition, deCIFer performs CSP from diffraction data. We train deCIFer on nearly 2.3M unique crystal structures and validate on diverse sets of PXRD patterns for characterizing challenging inorganic crystal systems. Qualitative and quantitative assessments using the residual weighted profile and Wasserstein distance show that deCIFer produces structures that more accurately match the target diffraction data when conditioned, compared to the unconditioned case. Notably, deCIFer can achieve a 94% match rate on unseen data. deCIFer bridges experimental diffraction data with computational CSP, lending itself as a powerful tool for crystal structure characterization and accelerating materials discovery.

When Can Memorization Improve Fairness?

Dec 12, 2024

Abstract: We study to what extent additive fairness metrics (statistical parity, equal opportunity and equalized odds) can be influenced in a multi-class classification problem by memorizing a subset of the population. We give explicit expressions for the bias resulting from memorization in terms of the label and group membership distribution of the memorized dataset and the classifier bias on the unmemorized dataset. We also characterize the memorized datasets that eliminate the bias for all three metrics considered. Finally, we provide upper and lower bounds on the total probability mass in the memorized dataset that is necessary for the complete elimination of these biases.
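
As a rough illustration of one of the metrics named above, the sketch below computes a per-class statistical parity gap between two groups. It uses a common textbook form of the metric, not necessarily the exact definition in the paper; the function name `statistical_parity_gap`, the two-group encoding (0 and 1), and the toy predictions are all assumptions.

```python
import numpy as np


def statistical_parity_gap(y_pred, group, label):
    """Difference in the rate of predicting `label` between group 0 and group 1.

    A value of 0 means both groups receive `label` at the same rate.
    Assumes `group` contains only the values 0 and 1 and that both occur.
    """
    y_pred = np.asarray(y_pred)
    group = np.asarray(group)
    rate_0 = np.mean(y_pred[group == 0] == label)
    rate_1 = np.mean(y_pred[group == 1] == label)
    return float(rate_0 - rate_1)


# Hypothetical three-class predictions for eight individuals in two groups.
y_pred = [0, 1, 1, 2, 1, 0, 2, 2]
group = [0, 0, 0, 0, 1, 1, 1, 1]
for label in (0, 1, 2):
    print(label, statistical_parity_gap(y_pred, group, label))
```

Changing the predictions on a chosen subset of individuals, as memorization does, shifts these per-class rates and therefore the gap.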

BMRS: Bayesian Model Reduction for Structured Pruning

Jun 03, 2024

Abstract: Modern neural networks are often massively overparameterized, leading to high compute costs during training and at inference. One effective method to improve both the compute and energy efficiency of neural networks while maintaining good performance is structured pruning, where full network structures (e.g. neurons or convolutional filters) that have limited impact on the model output are removed. In this work, we propose Bayesian Model Reduction for Structured pruning (BMRS), a fully end-to-end Bayesian method of structured pruning. BMRS is based on two recent methods: Bayesian structured pruning with multiplicative noise, and Bayesian model reduction (BMR), a method which allows efficient comparison of Bayesian models under a change in prior. We present two realizations of BMRS derived from different priors which yield different structured pruning characteristics: 1) BMRS_N with the truncated log-normal prior, which offers reliable compression rates and accuracy without the need for tuning any thresholds, and 2) BMRS_U with the truncated log-uniform prior, which can achieve more aggressive compression based on the boundaries of truncation. Overall, we find that BMRS offers a theoretically grounded approach to structured pruning of neural networks yielding both high compression rates and accuracy. Experiments on multiple datasets and neural networks of varying complexity showed that the two BMRS methods offer a competitive performance-efficiency trade-off compared to other pruning methods.

Equity through Access: A Case for Small-scale Deep Learning

Mar 19, 2024

Abstract: The recent advances in deep learning (DL) have been accelerated by access to large-scale data and compute. These large-scale resources have been used to train progressively larger models which are resource-intensive in terms of compute, data, energy, and carbon emissions. These costs are becoming a new type of entry barrier to researchers and practitioners with limited access to resources at such scale, particularly in the Global South. In this work, we take a comprehensive look at the landscape of existing DL models for vision tasks and demonstrate their usefulness in settings where resources are limited. To account for the resource consumption of DL models, we introduce a novel measure to estimate the performance per resource unit, which we call the PePR score. Using a diverse family of 131 unique DL architectures (spanning 1M to 130M trainable parameters) and three medical image datasets, we capture trends about the performance-resource trade-offs. In applications like medical image analysis, we argue that small-scale, specialized models are better than striving for large-scale models. Furthermore, we show that using pretrained models can significantly reduce the computational resources and data required. We hope this work will encourage the community to focus on improving AI equity by developing methods and models with smaller resource footprints.

Adversarial Fine-tuning of Compressed Neural Networks for Joint Improvement of Robustness and Efficiency

Mar 14, 2024

Abstract: As deep learning (DL) models are increasingly being integrated into our everyday lives, ensuring their safety by making them robust against adversarial attacks has become increasingly critical. DL models have been found to be susceptible to adversarial attacks, which can be achieved by introducing small, targeted perturbations to disrupt the input data. Adversarial training has been presented as a mitigation strategy which can result in more robust models. This adversarial robustness comes with additional computational costs required to design adversarial attacks during training. The two objectives -- adversarial robustness and computational efficiency -- then appear to be in conflict with each other. In this work, we explore the effects of two different model compression methods -- structured weight pruning and quantization -- on adversarial robustness. We specifically explore the effects of fine-tuning on compressed models, and present the trade-off between standard fine-tuning and adversarial fine-tuning. Our results show that compression does not inherently lead to loss in model robustness and that adversarial fine-tuning of a compressed model can yield large improvements in the robustness of models. We present experiments on two benchmark datasets showing that adversarial fine-tuning of compressed models can achieve robustness performance comparable to adversarially trained models, while also improving computational efficiency.

CHILI: Chemically-Informed Large-scale Inorganic Nanomaterials Dataset for Advancing Graph Machine Learning

Feb 21, 2024

Abstract: Advances in graph machine learning (ML) have been driven by applications in chemistry, as graphs have remained the most expressive representations of molecules. While early graph ML methods focused primarily on small organic molecules, recently, the scope of graph ML has expanded to include inorganic materials. Modelling the periodicity and symmetry of inorganic crystalline materials poses unique challenges, which existing graph ML methods are unable to address. Moving to inorganic nanomaterials increases complexity, as the number of nodes within each graph can span a broad range ($10$ to $10^5$). The bulk of existing graph ML focuses on characterising molecules and materials by predicting target properties with graphs as input. However, the most exciting applications of graph ML will be in their generative capabilities, which are currently not on par with other domains such as images or text. We invite the graph ML community to address these open challenges by presenting two new chemically-informed large-scale inorganic (CHILI) nanomaterials datasets: a medium-scale dataset (with overall >6M nodes, >49M edges) of mono-metallic oxide nanomaterials generated from 12 selected crystal types (CHILI-3K) and a large-scale dataset (with overall >183M nodes, >1.2B edges) of nanomaterials generated from experimentally determined crystal structures (CHILI-100K). We define 11 property prediction tasks and 6 structure prediction tasks, which are of special interest for nanomaterial research. We benchmark the performance of a wide array of baseline methods and use these benchmarking results to highlight areas which need future work. To the best of our knowledge, CHILI-3K and CHILI-100K are the first open-source nanomaterial datasets of this scale -- both on the individual graph level and of the dataset as a whole -- and the only nanomaterials datasets with high structural and elemental diversity.

Is Adversarial Training with Compressed Datasets Effective?

Feb 08, 2024

Abstract: Dataset Condensation (DC) refers to the recent class of dataset compression methods that generate a smaller, synthetic dataset from a larger dataset. This synthetic dataset retains the essential information of the original dataset, enabling models trained on it to achieve performance levels comparable to those trained on the full dataset. Most current DC methods have mainly been concerned with achieving high test performance with a limited data budget, and have not directly addressed the question of adversarial robustness. In this work, we investigate the impact of adversarial robustness on models trained with compressed datasets. We show that the compressed datasets obtained from DC methods are not effective in transferring adversarial robustness to models. As a solution to improve dataset compression efficiency and adversarial robustness simultaneously, we propose a novel robustness-aware dataset compression method based on finding the Minimal Finite Covering (MFC) of the dataset. The proposed method is (1) obtained by one-time computation and is applicable for any model, (2) more effective than DC methods when applying adversarial training over MFC, and (3) provably robust by minimizing the generalized adversarial loss. Additionally, empirical evaluation on three datasets shows that the proposed method is able to achieve a better robustness-performance trade-off compared to DC methods such as distribution matching.
