Rafid Umayer Murshed

Department of Electrical and Electronic Engineering, Bangladesh University of Engineering and Technology, Dhaka, Bangladesh

MetaFAP: Meta-Learning for Frequency Agnostic Prediction of Metasurface Properties

Mar 19, 2025

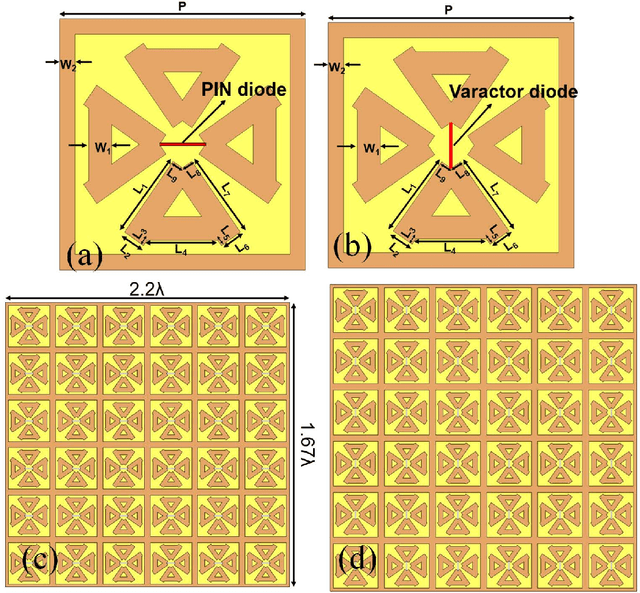

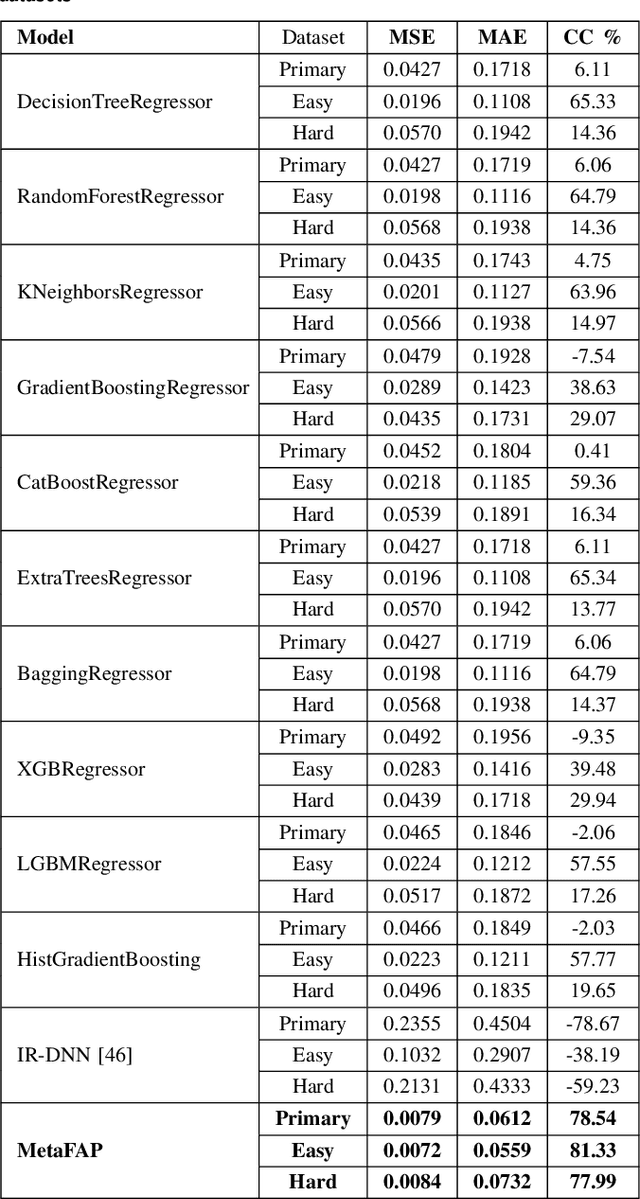

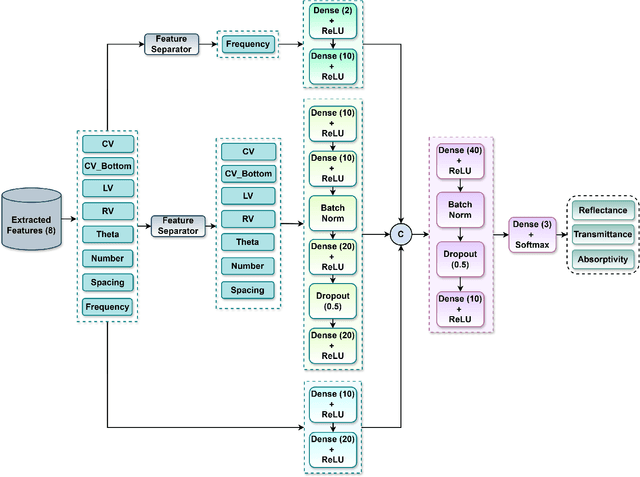

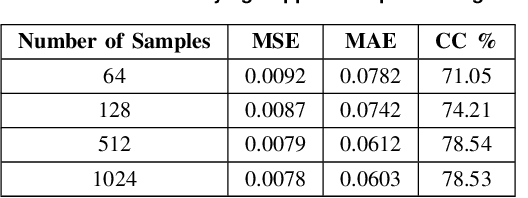

Abstract: Metasurfaces, and in particular reconfigurable intelligent surfaces (RIS), are revolutionizing wireless communications by dynamically controlling electromagnetic waves. Recent wireless communication advancements necessitate broadband and multi-band RIS capable of supporting dynamic spectrum access and carrier aggregation from sub-6 GHz to mmWave and THz bands. The inherent frequency dependence of meta-atom resonances degrades performance as operating conditions change, making real-time, frequency-agnostic metasurface property prediction crucial for practical deployment. Yet, accurately predicting metasurface behavior across different frequencies remains challenging. Traditional simulations struggle with complexity, while standard deep learning models often overfit or generalize poorly. To address this, we introduce MetaFAP (Meta-Learning for Frequency-Agnostic Prediction), a novel framework built on the meta-learning paradigm for predicting metasurface properties. By training on diverse frequency tasks, MetaFAP learns broadly applicable patterns. This allows it to adapt quickly to new spectral conditions with minimal data, solving key limitations of existing methods. Experimental evaluations demonstrate that MetaFAP reduces prediction errors by an order of magnitude in MSE and MAE while maintaining high Pearson correlations. Remarkably, it achieves inference in less than a millisecond, bypassing the computational bottlenecks of traditional simulations, which take minutes per unit cell and scale poorly with array size. These improvements enable real-time RIS optimization in dynamic environments and support scalable, frequency-agnostic designs. MetaFAP thus bridges the gap between intelligent electromagnetic systems and practical deployment, offering a critical tool for next-generation wireless networks.
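The abstract reports results in terms of MSE, MAE, and Pearson correlation. As a reading aid, the sketch below shows how these three metrics are commonly computed for a predicted versus reference response. The function name `regression_metrics` and the toy arrays are assumptions made for illustration; they are not taken from the paper and say nothing about its data or evaluation code.

```python
import numpy as np

def regression_metrics(y_true, y_pred):
    """Return (MSE, MAE, Pearson r) for two equal-length arrays."""
    y_true = np.asarray(y_true, dtype=float).ravel()
    y_pred = np.asarray(y_pred, dtype=float).ravel()
    err = y_pred - y_true
    mse = float(np.mean(err ** 2))       # mean squared error
    mae = float(np.mean(np.abs(err)))    # mean absolute error
    # Pearson correlation is the off-diagonal entry of the 2x2 correlation matrix.
    pearson = float(np.corrcoef(y_true, y_pred)[0, 1])
    return mse, mae, pearson

# Hypothetical toy values, not results from the paper.
y_true = np.array([0.0, 1.0, 2.0, 3.0])
y_pred = np.array([0.1, 0.9, 2.1, 2.9])
mse, mae, r = regression_metrics(y_true, y_pred)
print(f"MSE={mse:.4f}  MAE={mae:.4f}  Pearson r={r:.4f}")
```

MSE and MAE measure error magnitude, while the Pearson coefficient measures how well the predicted curve tracks the shape of the reference, which is why the abstract reports both kinds of metric.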

A Fast Effective Greedy Approach for MU-MIMO Beam Selection in mm-Wave and THz Communications

Jan 26, 2024

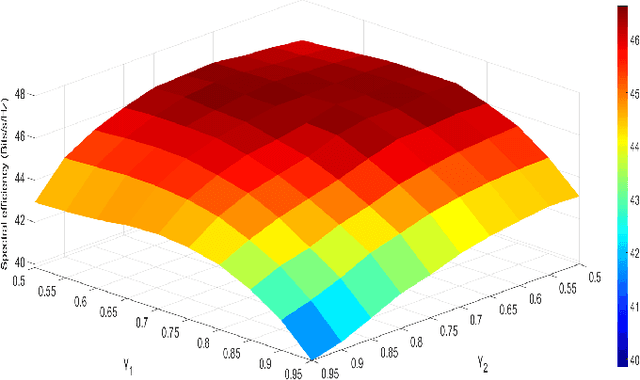

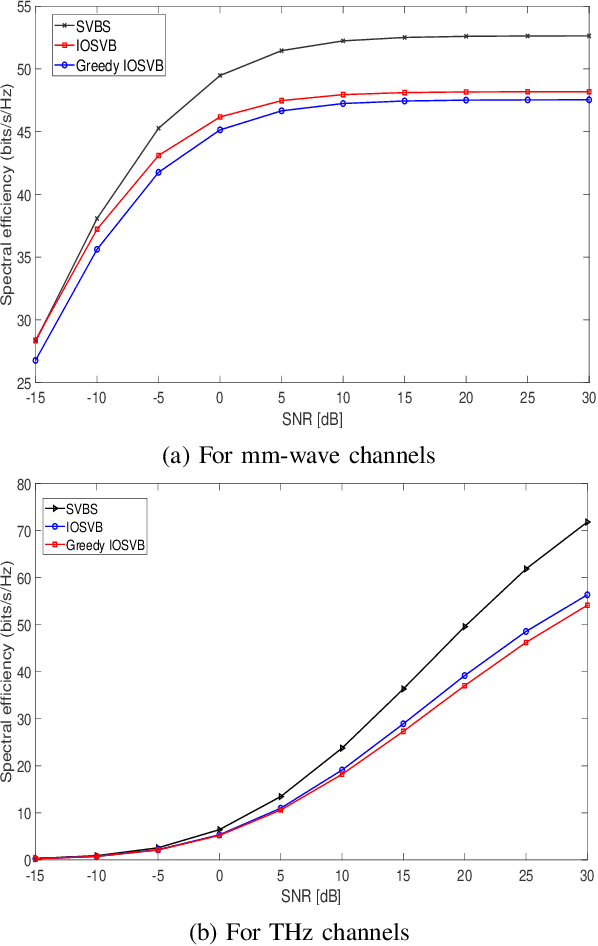

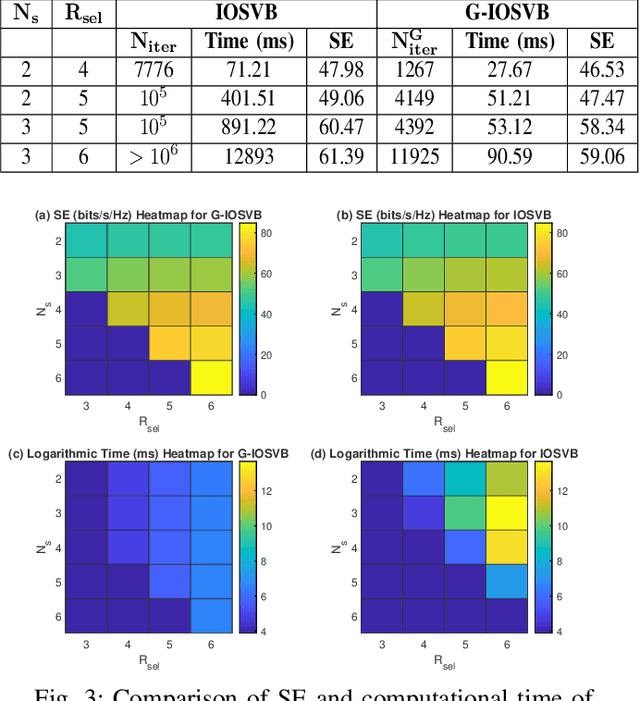

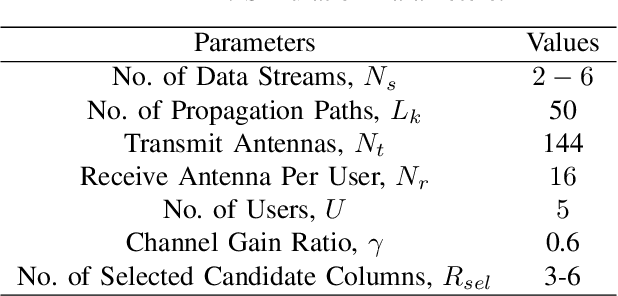

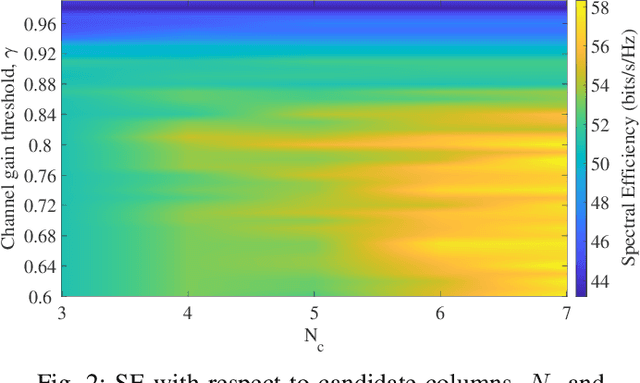

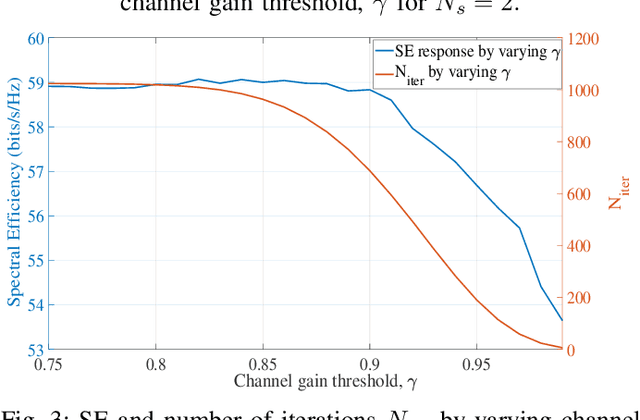

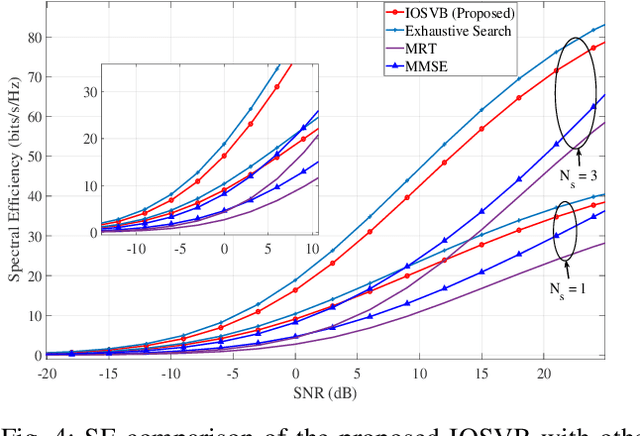

Abstract: This paper addresses the beam-selection challenges in Multi-User Multiple Input Multiple Output (MU-MIMO) beamforming for mm-wave and THz channels, focusing on both spectral efficiency (SE) and computational efficiency. We introduce a novel approach, the Greedy Interference-Optimized Singular Vector Beam-selection (G-IOSVB) algorithm, which balances high SE against low computational complexity. We compare G-IOSVB against the traditional IOSVB and the exhaustive Singular-Vector Beamspace Search (SVBS) algorithms. The findings reveal that while SVBS achieves the highest SE, it incurs significant computational costs, approximately 162 seconds per channel realization. In contrast, G-IOSVB aligns closely with IOSVB in SE performance yet is markedly more computationally efficient. Heatmaps illustrate this efficiency, highlighting G-IOSVB's reduced computation time without sacrificing SE. We also present the mathematical formulation of G-IOSVB, demonstrating its theoretical and practical advantages through rigorous expressions and detailed algorithmic analysis. The numerical results illustrate that G-IOSVB is an efficient, practical solution for MU-MIMO systems, making it a promising candidate for high-speed, high-efficiency wireless communication networks.
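To make the trade-off between exhaustive and greedy beam selection concrete, here is a minimal sketch of one possible greedy rule. It is not the paper's G-IOSVB algorithm, whose details are not given in the abstract. The assumptions are: each user offers a small set of unit-norm candidate beams, the first user keeps its first candidate, and every later user picks the candidate with the smallest summed squared projection onto the beams already chosen. The name `greedy_select` is hypothetical.

```python
import numpy as np

def greedy_select(candidates):
    """Greedy beam selection sketch (illustrative, not the paper's G-IOSVB).

    candidates: list with one (n_tx, r) array per user; columns are unit-norm
    candidate beams. Returns the chosen column index for each user.
    """
    chosen_idx = [0]                      # assumption: user 0 keeps its first candidate
    chosen = [candidates[0][:, 0]]
    for cand in candidates[1:]:
        selected = np.stack(chosen, axis=1)             # (n_tx, k) beams fixed so far
        proj = np.abs(selected.conj().T @ cand) ** 2    # (k, r) squared projections
        cost = proj.sum(axis=0)                         # interference proxy per candidate
        j = int(np.argmin(cost))
        chosen_idx.append(j)
        chosen.append(cand[:, j])
    return chosen_idx

# Toy example with standard basis vectors in a 4-antenna space (hypothetical).
e = np.eye(4)
users = [e[:, [0, 1]], e[:, [0, 2]], e[:, [2, 3]]]
picks = greedy_select(users)
print(picks)
```

With K users and r candidates each, an exhaustive search over all combinations evaluates r^K selections, whereas this greedy pass evaluates only r candidates for each of the remaining K-1 users. That gap is the kind of saving the abstract attributes to a greedy strategy.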

Self-supervised Contrastive Learning for 6G UM-MIMO THz Communications: Improving Robustness Under Imperfect CSI

Jan 21, 2024

Abstract: This paper investigates the potential of contrastive learning in 6G ultra-massive multiple-input multiple-output (UM-MIMO) communication systems, specifically focusing on hybrid beamforming under imperfect channel state information (CSI) conditions at THz frequencies. UM-MIMO systems are promising for future 6G wireless communication networks due to their high spectral efficiency and capacity. The accuracy of CSI significantly influences the performance of UM-MIMO systems. However, acquiring perfect CSI is challenging due to various practical constraints such as channel estimation errors, feedback delays, and hardware imperfections. To address this issue, we propose a novel self-supervised contrastive learning-based approach for hybrid beamforming, which is robust against imperfect CSI. We demonstrate the power of contrastive learning to tackle the challenges posed by imperfect CSI and show that our proposed method results in improved system performance in terms of achievable rate compared to traditional methods.
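The abstract does not state which contrastive objective is used. As a generic illustration of the idea, the sketch below implements the widely used InfoNCE loss in NumPy: row i of `z_a` and row i of `z_b` are treated as a positive pair (for instance, embeddings of a clean and a perturbed CSI sample, which is an assumption made here), and all other rows act as negatives. The function name `info_nce` and the temperature value are hypothetical choices.

```python
import numpy as np

def info_nce(z_a, z_b, temperature=0.1):
    """InfoNCE loss sketch: row i of z_a and row i of z_b form a positive pair."""
    z_a = z_a / np.linalg.norm(z_a, axis=1, keepdims=True)   # L2-normalize embeddings
    z_b = z_b / np.linalg.norm(z_b, axis=1, keepdims=True)
    logits = z_a @ z_b.T / temperature                       # cosine similarities, scaled
    logits = logits - logits.max(axis=1, keepdims=True)      # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_prob)))                # positives sit on the diagonal

# Toy embeddings (hypothetical): matched pairs versus deliberately mismatched pairs.
z = np.eye(4)
loss_aligned = info_nce(z, z)
loss_shuffled = info_nce(z, np.roll(z, 1, axis=0))
print(loss_aligned, loss_shuffled)
```

The loss is small when each embedding is closest to its own pair and large when pairs are mismatched, which is the property that pushes representations of the same underlying channel together despite perturbations.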

Beyond Traditional Beamforming: Singular Vector Projection Techniques for MU-MIMO Interference Management

Nov 07, 2023

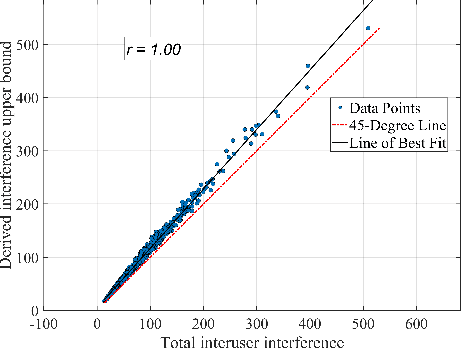

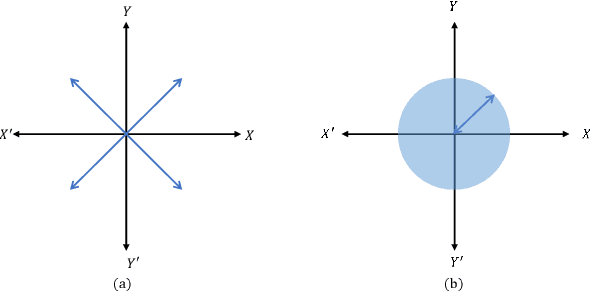

Abstract: This paper introduces low-complexity beamforming algorithms for multi-user multiple-input multiple-output (MU-MIMO) systems to minimize inter-user interference and enhance spectral efficiency (SE). A Singular-Vector Beamspace Search (SVBS) algorithm is initially presented, wherein all the singular vectors are assessed to determine the most effective beamforming scheme. We then establish a mathematical proof demonstrating that the total inter-user interference of an MU-MIMO beamforming system can be efficiently calculated from the mutual projections of orthonormal singular vectors. Capitalizing on this, we present an Interference-optimized Singular Vector Beamforming (IOSVB) algorithm for optimal singular vector selection. To further reduce the computational burden, we propose a Dimensionality-reduced IOSVB (DR-IOSVB) algorithm that integrates principal component analysis (PCA). The numerical results demonstrate the superiority of the SVBS algorithm over the existing algorithms, with the IOSVB offering near-identical SE and the DR-IOSVB balancing performance and computational efficiency. This work establishes a new benchmark for high-performance and low-complexity beamforming in MU-MIMO wireless communication systems.
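The abstract states that total inter-user interference can be computed from mutual projections of singular vectors, without giving the expression. The sketch below shows one plausible proxy under stated assumptions: each user's beam is the dominant right singular vector of its channel matrix, and the interference proxy is the sum of |v_i^H v_j|^2 over all ordered pairs of distinct users. The function name `total_mutual_projection`, the channel dimensions, and the random channels are hypothetical and do not reproduce the paper's derivation.

```python
import numpy as np

def total_mutual_projection(beams):
    """Sum of |v_i^H v_j|^2 over ordered pairs i != j (columns are unit-norm beams)."""
    gram = beams.conj().T @ beams                 # pairwise inner products
    off_diag = gram - np.diag(np.diag(gram))      # drop self-projections
    return float(np.sum(np.abs(off_diag) ** 2))

# Hypothetical random channels: 3 users, 4 receive and 8 transmit antennas each.
rng = np.random.default_rng(0)
channels = [rng.standard_normal((4, 8)) + 1j * rng.standard_normal((4, 8))
            for _ in range(3)]
# np.linalg.svd returns V^H; the conjugate of its first row is the dominant
# right singular vector.
beams = np.stack([np.linalg.svd(H)[2][0].conj() for H in channels], axis=1)
print(total_mutual_projection(beams))
```

Because the proxy needs only a small Gram matrix of the selected vectors, comparing candidate selections is far cheaper than evaluating the full SE expression for each one.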

Real-time Seismic Intensity Prediction using Self-supervised Contrastive GNN for Earthquake Early Warning

Jun 25, 2023

Abstract: Seismic intensity prediction in a geographical area from early or initial seismic waves received by a few seismic stations is a critical component of an effective Earthquake Early Warning (EEW) system. State-of-the-art deep learning-based techniques for this task suffer from limited prediction accuracy and, more importantly, require input waveforms of a large time window from a handful of seismic stations, which is not practical for EEW systems. To overcome these limitations, we propose a novel deep learning approach, Seismic Contrastive Graph Neural Network (SC-GNN), for highly accurate seismic intensity prediction using a small portion of initial seismic waveforms received by a few seismic stations. The SC-GNN comprises two key components: (i) a graph neural network (GNN) to propagate spatiotemporal information through the nodes of a graph-like structure of seismic station distribution and wave propagation, and (ii) a self-supervised contrastive learning component to train the model with larger time windows and make predictions using shorter initial waveforms. The efficacy of our proposed model is thoroughly evaluated through experiments on three real-world seismic datasets, showing superior performance over existing state-of-the-art techniques. In particular, the SC-GNN model demonstrates a substantial reduction in mean squared error (MSE) and the lowest standard deviation of the error, indicating its robustness, reliability, and a strong positive relationship between predicted and actual values. More importantly, the model maintains superior performance even with 5s input waveforms, making it particularly efficient for EEW systems.

Automated Level Crossing System: A Computer Vision Based Approach with Raspberry Pi Microcontroller

Dec 08, 2022

Abstract: In a rapidly flourishing country like Bangladesh, accidents in unmanned level crossings are increasing daily. This study presents a deep learning-based approach for automating level crossing junctions, ensuring maximum safety. Here, we develop a fully automated technique using computer vision on a microcontroller that aims to reduce, and ultimately eliminate, level-crossing deaths and accidents. A Raspberry Pi microcontroller detects impending trains using computer vision on live video, and the intersection is closed until the incoming train passes unimpeded. Live video activity recognition and object detection algorithms scan the junction 24/7. Self-regulating microcontrollers control the entire process. When persistent unauthorized activity is identified, authorities, such as police and fire brigade, are notified via automated messages and notifications. The microcontroller evaluates live rail-track data and arrival and departure times to anticipate ETAs, train position, velocity, and track problems to avoid head-on collisions. This proposed scheme reduces level crossing accidents and fatalities at a lower cost than current market solutions.

Index Terms: Deep Learning, Microcontroller, Object Detection, Railway Crossing, Raspberry Pi

MIBINET: Real-time Proctoring of Cardiovascular Inter-Beat-Intervals using a Multifaceted CNN from mm-Wave Ballistocardiography Signal

Nov 14, 2022

Abstract: The recent pandemic has refocused the medical world's attention on the diagnostic techniques associated with cardiovascular disease. Heart rate provides a real-time snapshot of cardiovascular health. A more precise heart rate reading provides a better understanding of cardiac muscle activity. Although many existing diagnostic techniques are approaching the limits of perfection, there remains potential for further development. In this paper, we propose MIBINET, a convolutional neural network for real-time proctoring of heart rate via inter-beat-interval (IBI) from millimeter wave (mm-wave) radar ballistocardiography signals. This network can be used in hospitals, homes, and passenger vehicles due to its lightweight and contactless properties. It employs classical signal processing prior to feeding the data into the network. Although MIBINET is primarily designed to work on mm-wave signals, it is found to be equally effective on signals of various modalities such as PCG, ECG, and PPG. Extensive experimental results and a thorough comparison with the current state-of-the-art on mm-wave signals demonstrate the viability and versatility of the proposed methodology.

Keywords: Cardiovascular disease, contactless measurement, heart rate, IBI, mm-wave radar, neural network

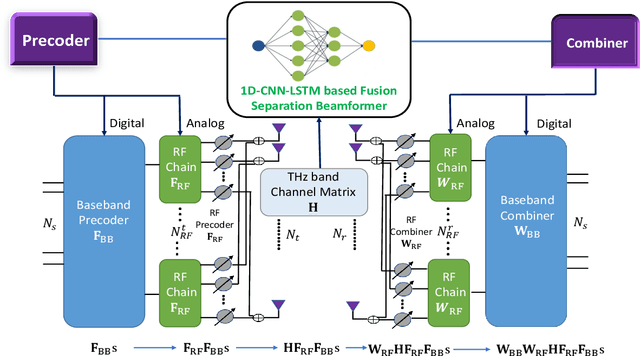

A CNN-LSTM-based Fusion Separation Deep Neural Network for 6G Ultra-Massive MIMO Hybrid Beamforming

Sep 26, 2022

Abstract: In sixth-generation (6G) cellular networks, hybrid beamforming is a real-time optimization problem that is becoming progressively more challenging. Although numerical computation-based iterative methods such as the minimum mean square error (MMSE) and the alternative manifold-optimization (Alt-Min) can already attain near-optimal performance, their computational cost renders them unsuitable for real-time applications. However, recent studies have demonstrated that machine learning techniques like deep neural networks (DNN) can learn the mapping done by those algorithms between channel state information (CSI) and near-optimal resource allocation, and then approximate this mapping in near real-time. In light of this, we investigate various DNN architectures for beamforming challenges in the terahertz (THz) band for ultra-massive multiple-input multiple-output (UM-MIMO) and explore their contextual mathematical modeling. Specifically, we design a sophisticated 1D convolutional neural network and long short-term memory (1D CNN-LSTM) based fusion-separation scheme, which can approach the performance of the Alt-Min algorithm in terms of spectral efficiency (SE) while using significantly less computational effort. Simulation results indicate that the proposed system can attain almost the same level of SE as that of the numerical iterative algorithms, while incurring a substantial reduction in computational cost. Our DNN-based approach also exhibits exceptional adaptability to diverse network setups and high scalability. Although the current model only addresses the fully connected hybrid architecture, our approach can also be expanded to address a variety of other network topologies.

Index Terms: 6G, CNN, Hybrid Beamforming, LSTM, UM-MIMO
