Abu Horaira Hridhon

A CNN-LSTM-based Fusion Separation Deep Neural Network for 6G Ultra-Massive MIMO Hybrid Beamforming

Sep 26, 2022

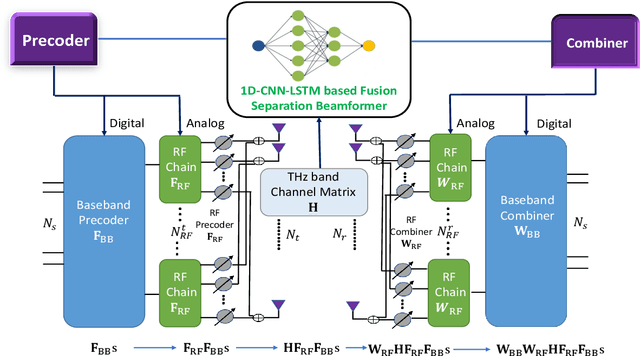

Abstract: In sixth-generation (6G) cellular networks, hybrid beamforming is a real-time optimization problem that is becoming progressively more challenging. Although iterative numerical methods such as minimum mean square error (MMSE) and alternating manifold optimization (Alt-Min) can already attain near-optimal performance, their computational cost renders them unsuitable for real-time applications. Recent studies, however, have demonstrated that machine learning techniques such as deep neural networks (DNNs) can learn the mapping these algorithms perform between channel state information (CSI) and near-optimal resource allocation, and then approximate this mapping in near real time. In light of this, we investigate various DNN architectures for beamforming in the terahertz (THz) band for ultra-massive multiple-input multiple-output (UM-MIMO) systems and explore their mathematical modeling. Specifically, we design a fusion-separation scheme based on a 1D convolutional neural network and long short-term memory (1D CNN-LSTM), which approaches the performance of the Alt-Min algorithm in terms of spectral efficiency (SE) while requiring significantly less computational effort. Simulation results indicate that the proposed system attains almost the same SE as the iterative numerical algorithms at a substantially lower computational cost. Our DNN-based approach also adapts well to diverse network setups and scales well. Although the current model addresses only the fully connected hybrid architecture, the approach can be extended to a variety of other network topologies.

INDEX TERMS: 6G, CNN, Hybrid Beamforming, LSTM, UM-MIMO
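For readers unfamiliar with the SE metric referred to in the abstract, the following is a sketch of the textbook single-user hybrid beamforming formulation, not necessarily the exact system model used in this paper. All symbols are assumptions introduced here for illustration: $\mathbf{H}$ is the channel matrix, $\mathbf{F}_{\mathrm{RF}}$ and $\mathbf{F}_{\mathrm{BB}}$ are the analog and digital precoders, $N_s$ is the number of data streams, $N_t$ the number of transmit antennas, $\rho$ the transmit power, $\sigma^2$ the noise variance, and $\mathbf{F}_{\mathrm{opt}}$ the unconstrained fully digital precoder.

$$
\mathrm{SE} = \log_2 \det\!\left( \mathbf{I} + \frac{\rho}{N_s \sigma^2}\, \mathbf{H}\, \mathbf{F}_{\mathrm{RF}} \mathbf{F}_{\mathrm{BB}} \mathbf{F}_{\mathrm{BB}}^{H} \mathbf{F}_{\mathrm{RF}}^{H} \mathbf{H}^{H} \right)
$$

$$
\min_{\mathbf{F}_{\mathrm{RF}},\, \mathbf{F}_{\mathrm{BB}}} \left\| \mathbf{F}_{\mathrm{opt}} - \mathbf{F}_{\mathrm{RF}} \mathbf{F}_{\mathrm{BB}} \right\|_F
\quad \text{s.t.} \quad \left| [\mathbf{F}_{\mathrm{RF}}]_{i,j} \right| = \frac{1}{\sqrt{N_t}}, \qquad \left\| \mathbf{F}_{\mathrm{RF}} \mathbf{F}_{\mathrm{BB}} \right\|_F^2 = N_s
$$

Under these assumptions, an iterative solver alternates between updating $\mathbf{F}_{\mathrm{RF}}$ and $\mathbf{F}_{\mathrm{BB}}$ for each channel realization, whereas a trained DNN would map the CSI to the two precoders in a single forward pass.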
