Qingwei Liu

Trajectory Entropy: Modeling Game State Stability from Multimodality Trajectory Prediction

Jun 06, 2025

Abstract: Complex interactions among agents present a significant challenge for autonomous driving in real-world scenarios. Recently, a promising approach has emerged that formulates the interactions of agents as a level-k game framework. It effectively decouples agent policies by hierarchical game levels. However, this framework ignores both the varying driving complexities among agents and the dynamic changes in agent states across game levels, instead treating them uniformly. Consequently, redundant and error-prone computations are introduced into this framework. To tackle this issue, this paper proposes a metric, termed Trajectory Entropy, to reveal the game status of agents within the level-k game framework. The key insight stems from recognizing the inherent relationship between agent policy uncertainty and the associated driving complexity. Specifically, Trajectory Entropy extracts statistical signals representing uncertainty from the multimodal trajectory prediction results of agents in the game. Then, the signal-to-noise ratio of this signal is used to quantify the game status of agents. Based on the proposed Trajectory Entropy, we refine the current level-k game framework through a simple gating mechanism, significantly improving overall accuracy while reducing computational costs. Our method is evaluated on the Waymo and nuPlan datasets on trajectory prediction, open-loop planning, and closed-loop planning tasks. The results demonstrate the state-of-the-art performance of our method, with precision improved by up to 19.89% for prediction and up to 16.48% for planning.
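The abstract does not give the formula for Trajectory Entropy, so the following is only a rough illustration of the general idea of reading uncertainty off a multimodal prediction and gating on it. It assumes each agent's predictor outputs one probability per trajectory mode, uses plain Shannon entropy in place of the paper's signal-to-noise-ratio formulation, and the function names and the 0.5 threshold are hypothetical.

```python
import math


def mode_entropy(probs):
    """Shannon entropy (in nats) of a set of trajectory-mode probabilities."""
    total = sum(probs)
    if total <= 0:
        raise ValueError("mode probabilities must sum to a positive value")
    normalized = [p / total for p in probs]
    # Modes with zero probability contribute nothing to the entropy.
    return -sum(p * math.log(p) for p in normalized if p > 0)


def normalized_entropy(probs):
    """Entropy scaled to [0, 1] by the maximum possible value log(K)."""
    k = len(probs)
    if k < 2:
        return 0.0
    return mode_entropy(probs) / math.log(k)


def needs_further_reasoning(probs, threshold=0.5):
    """Hypothetical gate: keep refining an agent only while it is uncertain.

    The threshold is an illustrative assumption, not a value from the paper.
    """
    return normalized_entropy(probs) > threshold
```

Under these assumptions, an agent whose predicted modes are nearly uniform would pass the gate and be processed at the next game level, while an agent with one dominant mode would be skipped.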

Unmixing based PAN guided fusion network for hyperspectral imagery

Jan 27, 2022

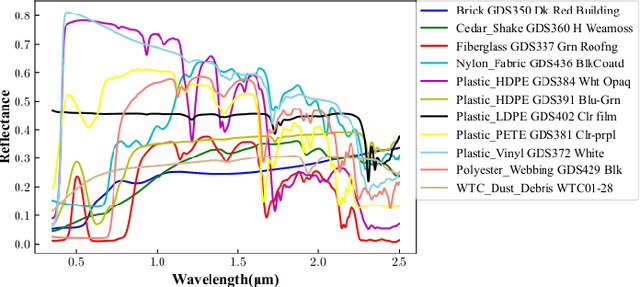

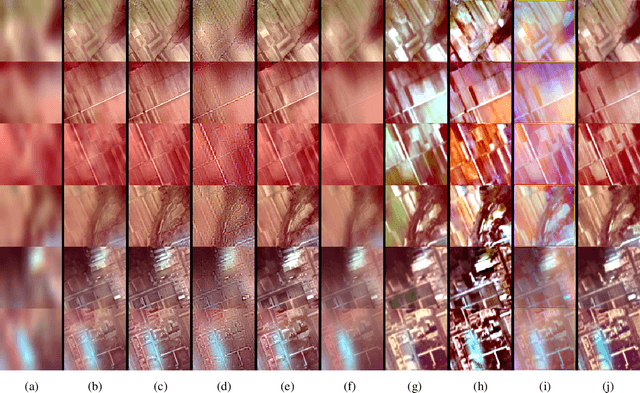

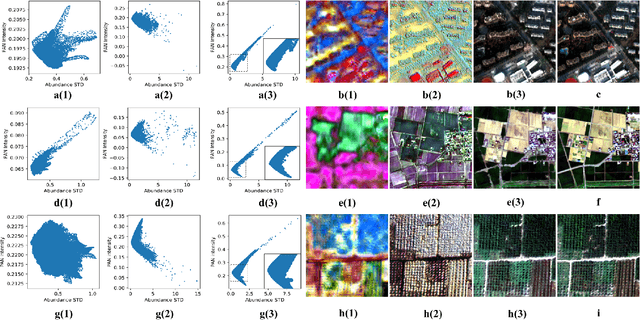

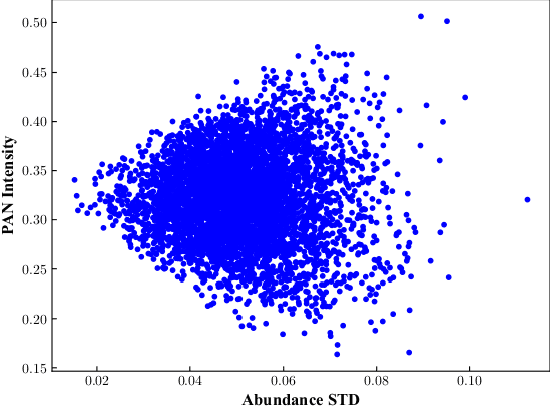

Abstract: The hyperspectral image (HSI) has been widely used in many applications due to its rich spectral information. However, the limitations of imaging sensors reduce its spatial resolution, which causes detail loss. One solution is to fuse the low-spatial-resolution hyperspectral image (LR-HSI) with the panchromatic image (PAN), whose characteristics are complementary, to obtain the high-resolution hyperspectral image (HR-HSI). Most existing fusion methods focus only on small fusion ratios such as 4 or 6, which might be impractical for HSI and PAN image pairs with large ratios. Moreover, the ill-posedness of restoring detail information in an HSI with hundreds of bands from a PAN image with only one band has not been solved effectively, especially under large fusion ratios. Therefore, a lightweight unmixing-based PAN-guided fusion network (Pgnet) is proposed to mitigate this ill-posedness and improve the fusion performance significantly. Note that the fusion process of the proposed network operates in the projected low-dimensional abundance subspace with an extremely large fusion ratio of 16. Furthermore, based on the linear and nonlinear relationships between the PAN intensity and abundance, an interpretable PAN detail inject network (PDIN) is designed to inject the PAN details into the abundance feature efficiently. Comprehensive experiments on simulated and real datasets demonstrate the superiority and generality of our method over several state-of-the-art (SOTA) methods, both qualitatively and quantitatively. (The code, in PyTorch and Paddle versions, and the dataset are available at https://github.com/rs-lsl/Pgnet.) (This is an improved version of the publication in TGRS, with a modification in the deduction of the PDIN block.)

WGCN: Graph Convolutional Networks with Weighted Structural Features

Apr 29, 2021

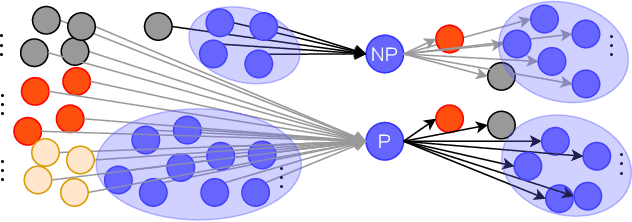

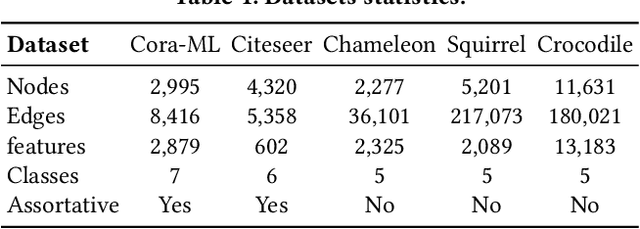

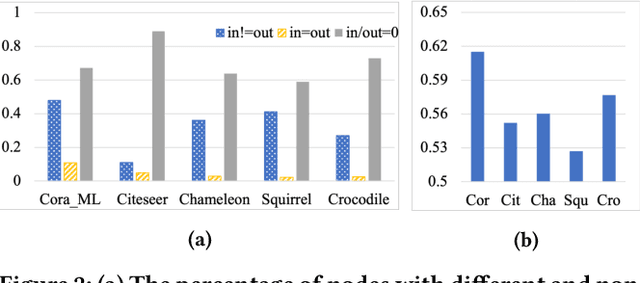

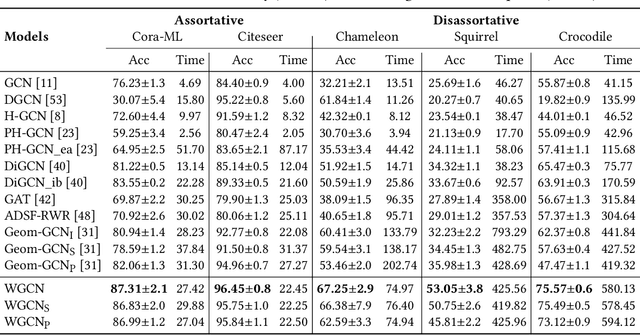

Abstract: Graph structural information such as topologies or connectivities provides valuable guidance for graph convolutional networks (GCNs) to learn nodes' representations. Existing GCN models that capture nodes' structural information either weight in- and out-neighbors equally or differentiate in- and out-neighbors globally, without considering nodes' local topologies. We observe that in- and out-neighbors contribute differently for nodes with different local topologies. To explore the directional structural information for different nodes, we propose a GCN model with weighted structural features, named WGCN. WGCN first captures nodes' structural fingerprints via a direction- and degree-aware Random Walk with Restart algorithm, where the walk is guided by both edge direction and nodes' in- and out-degrees. Then, the interactions between nodes' structural fingerprints are used as the weighted node structural features. To further capture nodes' high-order dependencies and graph geometry, WGCN embeds graphs into a latent space to obtain nodes' latent neighbors and geometrical relationships. Based on nodes' geometrical relationships in the latent space, WGCN differentiates latent, in-, and out-neighbors with an attention-based geometrical aggregation. Experiments on transductive node classification tasks show that WGCN consistently outperforms the baseline models, by up to 17.07% in terms of accuracy, on five benchmark datasets.
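For readers unfamiliar with Random Walk with Restart, a minimal sketch of the plain algorithm on a directed graph follows. It is not WGCN's direction- and degree-aware variant: the walker here simply picks an out-neighbor uniformly at random, and the function name, the `restart` probability of 0.15, and the handling of nodes without out-edges (the walker returns to the seed) are illustrative assumptions.

```python
def random_walk_with_restart(adj, seed, restart=0.15, iters=200, tol=1e-12):
    """Plain Random Walk with Restart scores relative to `seed`.

    `adj` maps every node to a list of its out-neighbors; each neighbor
    must itself appear as a key. Returns a dict of visiting probabilities.
    """
    nodes = list(adj)
    scores = {v: 0.0 for v in nodes}
    scores[seed] = 1.0
    for _ in range(iters):
        nxt = {v: 0.0 for v in nodes}
        for u in nodes:
            # Probability mass that keeps walking instead of restarting.
            mass = (1.0 - restart) * scores[u]
            outs = adj[u]
            if outs:
                share = mass / len(outs)
                for v in outs:
                    nxt[v] += share
            else:
                # Assumption: a walker stuck at a sink jumps back to the seed.
                nxt[seed] += mass
        # With probability `restart` the walker teleports to the seed.
        nxt[seed] += restart
        delta = sum(abs(nxt[v] - scores[v]) for v in nodes)
        scores = nxt
        if delta < tol:
            break
    return scores


# Small directed example: a -> b -> c -> a, plus d -> a.
example_graph = {"a": ["b"], "b": ["c"], "c": ["a"], "d": ["a"]}
example_scores = random_walk_with_restart(example_graph, seed="a")
```

The resulting score vector for a seed node plays the role of a structural fingerprint in this simplified setting: nodes that are close to the seed along outgoing edges receive more probability mass than distant or unreachable ones.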
