Shuangliang Li

Efficiently improving key weather variables forecasting by performing the guided iterative prediction in latent space

Jul 27, 2024

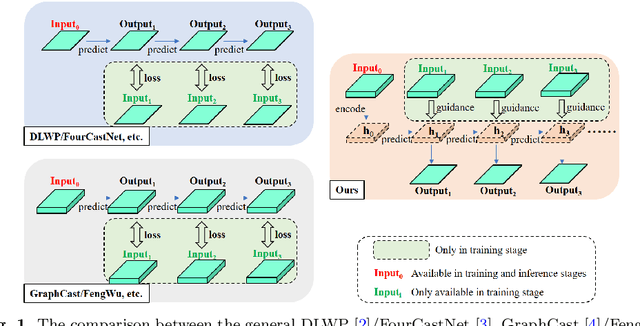

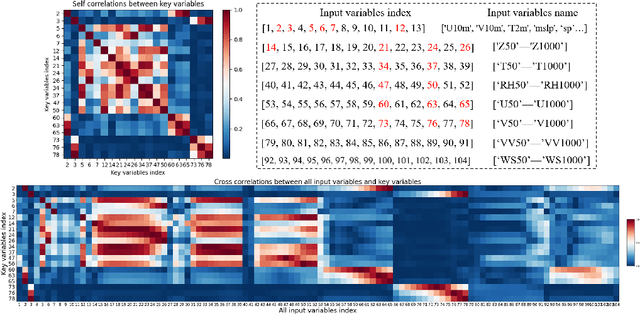

Abstract: Weather forecasting, which is of great practical significance, amounts to learning the evolutionary patterns of key upper-air and surface variables. Recently, deep learning-based methods have been increasingly applied to weather forecasting because of their powerful feature learning capabilities. However, prediction methods that iterate in the original variable space struggle to use a large number of weather variables effectively and efficiently. We therefore propose an 'encoding-prediction-decoding' prediction network. The network can efficiently exploit additional input variables that are related to the key variables: it adaptively extracts low-dimensional latent features related to the key variables from a much larger set of input atmospheric variables and performs the iterative prediction on those features. We also construct a loss function that guides the iteration of the latent features using multiple atmospheric variables at the corresponding lead times. The latent features obtained through iterative prediction are then decoded to give the predicted values of the key variables at multiple lead times. In addition, we improve the HTA algorithm in \cite{bi2023accurate} by inputting more time steps, which strengthens the temporal correlation between the prediction results and the input variables. Both qualitative and quantitative prediction results on the ERA5 dataset validate the superiority of our method over other methods. (The code will be available at https://github.com/rs-lsl/Kvp-lsi)
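The 'encoding-prediction-decoding' idea in this abstract can be illustrated with a minimal sketch. Everything below is an assumption made for illustration and not the authors' implementation: the encoder, latent predictor, and decoder are plain linear maps (hypothetical names `enc`, `step`, `dec`), and the guidance term is assumed to compare each predicted latent with the encoding of the true variables at the same lead time.

```python
# Minimal sketch of iterative prediction in a latent space.
# Assumption: encoder, predictor, and decoder are linear maps stored as
# lists of rows; the real network would use learned nonlinear modules.

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]

def mse(a, b):
    """Mean squared error between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def rollout(x0, enc, step, dec, n_steps):
    """Encode once, iterate in latent space, decode at every lead time."""
    z = matvec(enc, x0)            # many input variables -> small latent
    latents, preds = [], []
    for _ in range(n_steps):
        z = matvec(step, z)        # one lead time forward, in latent space
        latents.append(z)
        preds.append(matvec(dec, z))   # latent -> key variables
    return latents, preds

def guided_loss(latents, preds, future_inputs, future_keys, enc, weight=0.5):
    """Key-variable error plus an assumed latent guidance term.

    The guidance term pulls each predicted latent toward the encoding of
    the true input variables at the same lead time (hypothetical form).
    """
    total = 0.0
    for z, y, x_t, k_t in zip(latents, preds, future_inputs, future_keys):
        total += mse(y, k_t) + weight * mse(z, matvec(enc, x_t))
    return total / len(latents)
```

For example, with four input variables, a two-dimensional latent, and one key variable, `rollout([1, 2, 3, 4], enc, step, dec, 2)` never touches the four-dimensional input space after the initial encoding, which is where the efficiency argument in the abstract comes from.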

Real HSI-MSI-PAN image dataset for the hyperspectral/multi-spectral/panchromatic image fusion and super-resolution fields

Jul 02, 2024

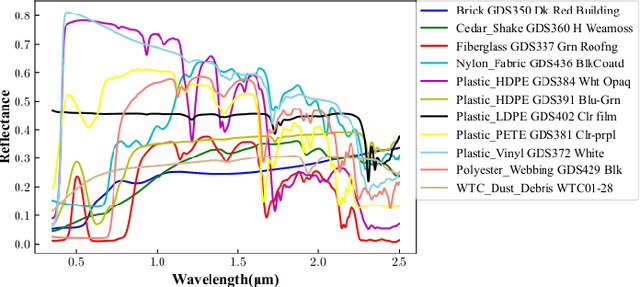

Abstract: Most hyperspectral image (HSI) fusion experiments currently rely on simulated datasets to compare different fusion methods. However, most of the spectral response functions and spatial downsampling functions used to create these simulated datasets are not entirely accurate, so the spatial and spectral features of the generated images deviate from those of real images. This reduces the credibility of fusion algorithms, makes comparisons between algorithms unfair, and hinders the development of the field of hyperspectral image fusion. We therefore release a real HSI/MSI/PAN image dataset to promote the development of the field. The three images are spatially registered, so fusion can be performed between the HSI and MSI, the HSI and PAN image, the MSI and PAN image, or among all three. The dataset is available at https://aistudio.baidu.com/datasetdetail/281612. The related data processing code is available at https://github.com/rs-lsl/CSSNet.
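To make concrete what a simulated fusion dataset involves, the sketch below degrades a reference cube in the two ways the abstract mentions. It is only an illustration under stated assumptions: the spatial degradation is a plain block average and the spectral response function is a hand-written weight matrix (both hypothetical choices), which is exactly the kind of simplification that can deviate from a real sensor.

```python
# Sketch of simulating fusion inputs from a reference HSI cube.
# Assumption: cube[b][i][j] is band b at pixel (i, j), stored as nested lists.

def spatial_downsample(cube, ratio):
    """Block-average every band by `ratio` (a simple box filter)."""
    out = []
    for band in cube:
        h, w = len(band), len(band[0])
        rows = []
        for i in range(0, h - h % ratio, ratio):
            row = []
            for j in range(0, w - w % ratio, ratio):
                block = [band[i + di][j + dj]
                         for di in range(ratio) for dj in range(ratio)]
                row.append(sum(block) / len(block))
            rows.append(row)
        out.append(rows)
    return out

def apply_srf(cube, srf):
    """Mix HSI bands into fewer bands; srf[m][b] weights band b in output m."""
    h, w = len(cube[0]), len(cube[0][0])
    return [[[sum(wt * cube[b][i][j] for b, wt in enumerate(weights))
              for j in range(w)]
             for i in range(h)]
            for weights in srf]
```

With a single row in `srf`, `apply_srf` yields a one-band, PAN-like image; with several rows it yields an MSI-like image. A real, registered HSI/MSI/PAN dataset removes the need to guess either function.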

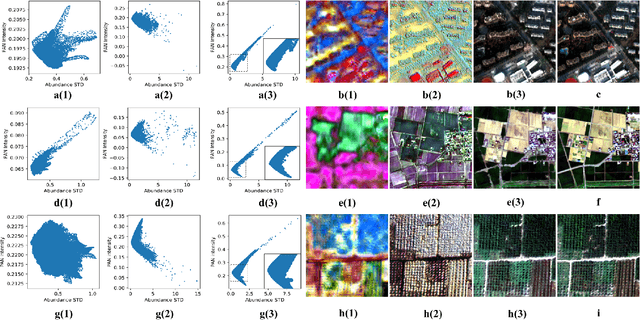

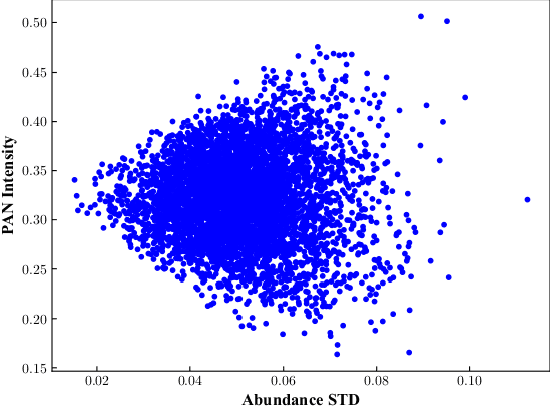

Unmixing based PAN guided fusion network for hyperspectral imagery

Jan 27, 2022

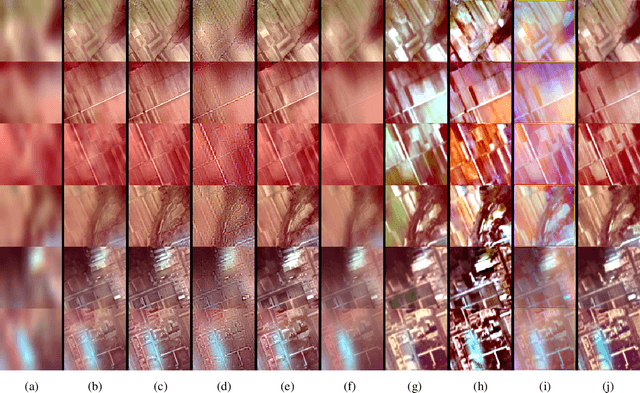

Abstract: The hyperspectral image (HSI) has been widely used in many applications because of its rich spectral information. However, the limitations of imaging sensors reduce its spatial resolution, which causes a loss of detail. One solution is to fuse the low spatial resolution hyperspectral image (LR-HSI) with the panchromatic image (PAN), whose characteristics are complementary, to obtain the high-resolution hyperspectral image (HR-HSI). Most existing fusion methods focus only on small fusion ratios such as 4 or 6, which might be impractical for HSI and PAN image pairs with large ratios. Moreover, the ill-posedness of restoring detail information in an HSI with hundreds of bands from a PAN image with only one band has not been solved effectively, especially under large fusion ratios. Therefore, a lightweight unmixing-based pan-guided fusion network (Pgnet) is proposed to mitigate this ill-posedness and improve the fusion performance significantly. Note that the fusion process of the proposed network takes place in the projected low-dimensional abundance subspace, with an extremely large fusion ratio of 16. Furthermore, based on the linear and nonlinear relationships between the PAN intensity and the abundance, an interpretable PAN detail inject network (PDIN) is designed to inject the PAN details into the abundance features efficiently. Comprehensive experiments on simulated and real datasets demonstrate, qualitatively and quantitatively, the superiority and generality of our method over several state-of-the-art (SOTA) methods. (The code, in PyTorch and Paddle versions, and the dataset are available at https://github.com/rs-lsl/Pgnet.) (This is an improved version of the publication in TGRS, with a modification to the derivation of the PDIN block.)
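The idea of fusing in an abundance subspace can be sketched with the linear mixing model, in which each pixel spectrum is a weighted sum of a few endmember spectra. The code below is a simplified illustration and not the Pgnet or PDIN implementation: the nearest-neighbour upsampling, the fixed scalar `gain`, and the low-pass PAN input `pan_low` are all assumptions standing in for the learned components.

```python
# Sketch of PAN detail injection in abundance space (linear mixing model).
# Assumption: abundances[k][i][j] is the fraction of endmember k at pixel
# (i, j), and endmembers[k][b] is the spectrum of endmember k at band b.

def upsample_nearest(img, ratio):
    """Nearest-neighbour upsampling of a 2D image by an integer ratio."""
    return [[img[i // ratio][j // ratio]
             for j in range(len(img[0]) * ratio)]
            for i in range(len(img) * ratio)]

def inject_detail(abund_up, pan, pan_low, gain):
    """Add scaled PAN high-frequency detail to one upsampled abundance map."""
    return [[a + gain * (p - pl) for a, p, pl in zip(ra, rp, rpl)]
            for ra, rp, rpl in zip(abund_up, pan, pan_low)]

def reconstruct(abundances, endmembers):
    """Rebuild cube[b][i][j] as the abundance-weighted sum of endmembers."""
    n_end, n_bands = len(endmembers), len(endmembers[0])
    h, w = len(abundances[0]), len(abundances[0][0])
    return [[[sum(abundances[k][i][j] * endmembers[k][b]
                  for k in range(n_end))
              for j in range(w)]
             for i in range(h)]
            for b in range(n_bands)]
```

Because only a handful of abundance maps are sharpened instead of hundreds of spectral bands, the single-band PAN image has to constrain far fewer unknowns, which is the intuition behind working in the abundance subspace at large fusion ratios.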
