Qince Li

Bridging the Arithmetic Gap: The Cognitive Complexity Benchmark and Financial-PoT for Robust Financial Reasoning

Jan 29, 2026

Abstract:While Large Language Models excel at semantic tasks, they face a critical bottleneck in financial quantitative reasoning, frequently suffering from "Arithmetic Hallucinations" and a systemic failure mode we term "Cognitive Collapse". To strictly quantify this phenomenon, we introduce the Cognitive Complexity Benchmark (CCB), a robust evaluation framework grounded in a dataset constructed from 95 real-world Chinese A-share annual reports. Unlike traditional datasets, the CCB stratifies financial queries into a three-dimensional taxonomy, Data Source, Mapping Difficulty, and Result Unit, enabling the precise diagnosis of reasoning degradation in high-cognitive-load scenarios. To address these failures, we propose the Iterative Dual-Phase Financial-PoT framework. This neuro-symbolic architecture enforces a strict architectural decoupling: it first isolates semantic variable extraction and logic formulation, then offloads computation to an iterative, self-correcting Python sandbox to ensure deterministic execution. Evaluation on the CCB demonstrates that while standard Chain-of-Thought falters on complex tasks, our approach offers superior robustness, elevating the Qwen3-235B model's average accuracy from 59.7% to 67.3% and achieving gains of up to 10-fold in high-complexity reasoning tasks. These findings suggest that architectural decoupling is a critical enabling factor for improving reliability in financial reasoning tasks, providing a transferable architectural insight for precision-critical domains that require tight alignment between semantic understanding and quantitative computation.
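The decoupling described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `evaluate_formula`, the variable names, and the example figures are all hypothetical, and the iterative self-correction loop is omitted. The sketch only shows the general idea that a model supplies extracted values and a formula, while the arithmetic itself is executed deterministically by Python.

```python
import ast
import operator

# Arithmetic operators the evaluator accepts; anything else is rejected.
_BINARY_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def evaluate_formula(expression, variables):
    """Evaluate a plain arithmetic expression over named variables."""

    def _eval(node):
        if isinstance(node, ast.Expression):
            return _eval(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.Name):
            return variables[node.id]
        if isinstance(node, ast.BinOp) and type(node.op) in _BINARY_OPS:
            return _BINARY_OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -_eval(node.operand)
        # Function calls, attribute access, etc. are not arithmetic: refuse them.
        raise ValueError(f"unsupported expression element: {ast.dump(node)}")

    return _eval(ast.parse(expression, mode="eval"))


# Phase 1 (hypothetical model output): extracted values and a formula.
extracted = {"revenue_2023": 1200.0, "revenue_2022": 1000.0}
formula = "(revenue_2023 - revenue_2022) / revenue_2022 * 100"

# Phase 2: the number is computed by the interpreter, not generated as text.
growth_pct = evaluate_formula(formula, extracted)
print(f"year-over-year growth: {growth_pct:.1f}%")
```

Restricting the evaluator to a small whitelist of arithmetic nodes is one simple way to keep the execution step predictable; a full sandbox, as the abstract describes, would presumably run more general Python code.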

Learning Dual-Pixel Alignment for Defocus Deblurring

Apr 26, 2022

Abstract:Recovering an all-in-focus image from a single defocus-blurred image is a challenging task in real-world applications. On many modern cameras, dual-pixel (DP) sensors create two image views, from which stereo information can be exploited to benefit defocus deblurring. Although existing DP defocus deblurring methods achieve impressive results, they directly take a naive concatenation of the DP views as input and neglect the disparity between the left and right views in regions outside the camera's depth of field (DoF). In this work, we propose a Dual-Pixel Alignment Network (DPANet) for defocus deblurring. DPANet is an encoder-decoder with skip connections, where two encoder branches with shared parameters extract and align deep features from the left and right views, and a single decoder fuses the aligned features to predict the all-in-focus image. Because the DP views suffer from different amounts of blur, aligning the left and right views is not trivial. To this end, we propose a novel encoder alignment module (EAM) and decoder alignment module (DAM). In particular, EAM uses a correlation layer to measure the disparity between the DP views, whose deep features can then be aligned accordingly using deformable convolutions. DAM further enhances the alignment between the skip-connected encoder features and the deep decoder features. By introducing several EAMs and DAMs, the DP views in DPANet can be well aligned to better predict the latent all-in-focus image. Experimental results on real-world datasets show that DPANet is notably superior to state-of-the-art deblurring methods in reducing defocus blur while recovering visually plausible sharp structures and textures.

Weakly Supervised Arrhythmia Detection Based on Deep Convolutional Neural Network

Dec 10, 2020

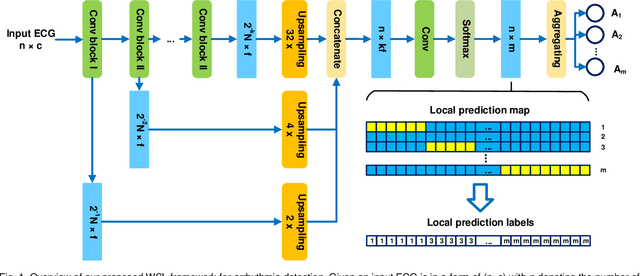

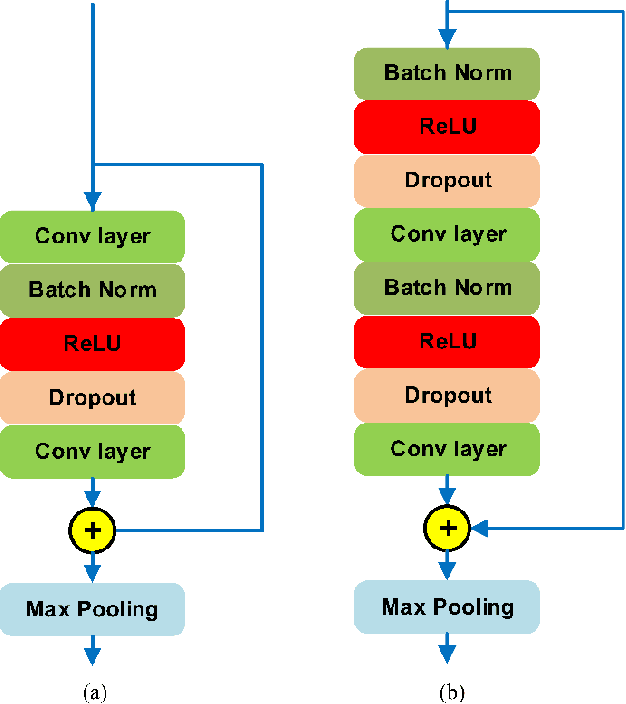

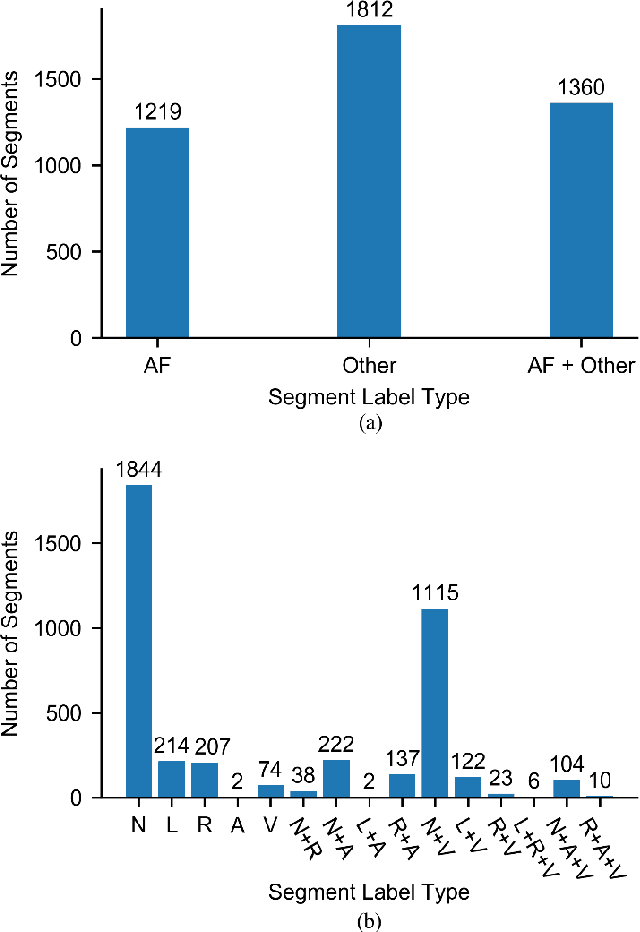

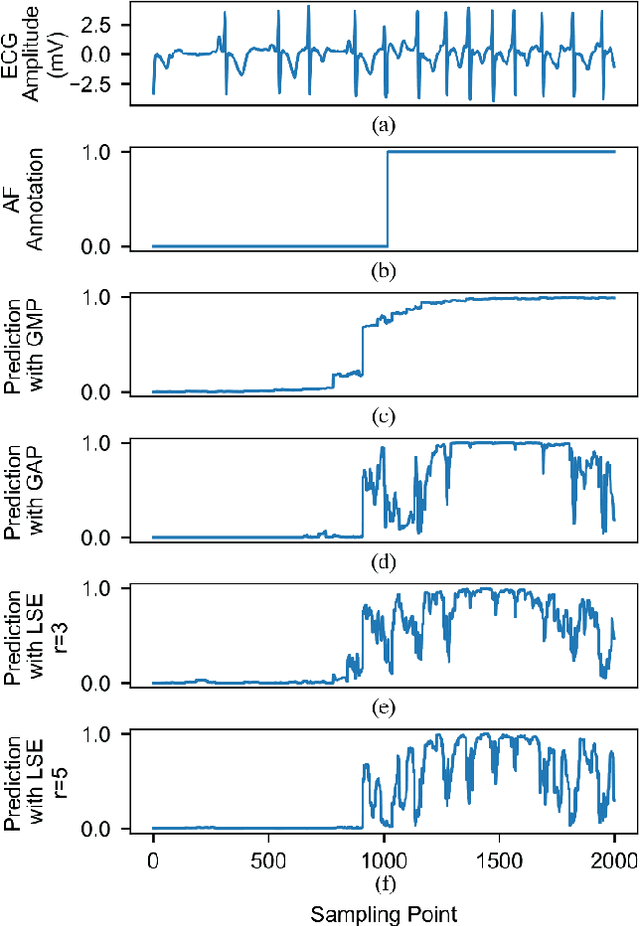

Abstract:Supervised deep learning has been widely used in studies of automatic ECG classification, which benefit largely from sufficient annotation of large datasets. However, most existing large ECG datasets are only coarsely annotated, so a classification model trained on them can detect the existence of abnormalities in a whole recording but cannot determine their exact time of occurrence. In addition, constructing a finely annotated ECG dataset can take considerable time and money. Therefore, this study proposes weakly supervised deep learning models for detecting abnormal ECG events and their occurrence times. The supervision available to the models is limited to the event types present in an ECG record and excludes the specific occurrence time of each event. By leveraging the feature locality of deep convolutional neural networks, the models first make predictions based on local features and then aggregate the local predictions to infer the existence of each event over the whole record. Through training, the local predictions are expected to reflect the specific occurrence time of each event. To test their potential, we apply the models to detecting cardiac rhythmic and morphological arrhythmias using the AFDB and MITDB datasets, respectively. The results show that the models achieve beat-level accuracies of 99.09% in detecting atrial fibrillation and 99.13% in detecting morphological arrhythmias, which are comparable to those of fully supervised learning models, demonstrating their effectiveness. The local prediction maps revealed by this method are also helpful for analyzing and diagnosing the decision logic of record-level classification models.
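The aggregation step in this abstract (local predictions combined into a record-level prediction) can be sketched as follows. The abstract does not state which aggregation function is used, so max pooling over time is an assumption here, as are the function names `aggregate_local_predictions` and `localize`, the 0.5 threshold, and the toy probabilities.

```python
def aggregate_local_predictions(local_probs):
    """Record-level probability per event type.

    local_probs[t][k] is the predicted probability of event k at time step t.
    Assumption: the record-level score is the maximum over time steps.
    """
    num_events = len(local_probs[0])
    return [max(step[k] for step in local_probs) for k in range(num_events)]


def localize(local_probs, event, threshold=0.5):
    """Time steps at which the local prediction for `event` reaches the threshold."""
    return [t for t, step in enumerate(local_probs) if step[event] >= threshold]


# Toy local prediction map: 4 time steps, 2 event types.
local_probs = [
    [0.1, 0.0],
    [0.9, 0.2],
    [0.8, 0.1],
    [0.2, 0.3],
]

record_probs = aggregate_local_predictions(local_probs)
event_0_steps = localize(local_probs, event=0)
print(record_probs, event_0_steps)
```

With an aggregation of this kind, only the record-level output needs a label during training, while the per-step values can be read off afterwards as a rough indication of when an event occurs.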

Two-Stage Single Image Reflection Removal with Reflection-Aware Guidance

Dec 02, 2020

Abstract:Removing undesired reflection from an image captured through a glass surface is a very challenging problem with many practical application scenarios. To improve reflection removal, cascaded deep models are commonly adopted to estimate the transmission progressively. However, most existing methods remain limited in how they exploit the result of a prior stage to guide transmission estimation. In this paper, we present a novel two-stage network with reflection-aware guidance (RAGNet) for single image reflection removal (SIRR). Specifically, the reflection layer is estimated first, because it is generally much simpler and relatively easier to estimate. A reflection-aware guidance (RAG) module is then designed to better exploit the estimated reflection when predicting the transmission layer. By incorporating feature maps from the estimated reflection and the observation, RAG can be used (i) to mitigate the effect of reflection in the observation, and (ii) to generate the mask in partial convolution, mitigating the effect of deviations from the linear combination hypothesis. A dedicated mask loss is further presented for reconciling the contributions of encoder and decoder features. Experiments on five commonly used datasets demonstrate the quantitative and qualitative superiority of our RAGNet in comparison to state-of-the-art SIRR methods. The source code and pre-trained model are available at https://github.com/liyucs/RAGNet.

Automatic Detection of ECG Abnormalities by using an Ensemble of Deep Residual Networks with Attention

Aug 27, 2019

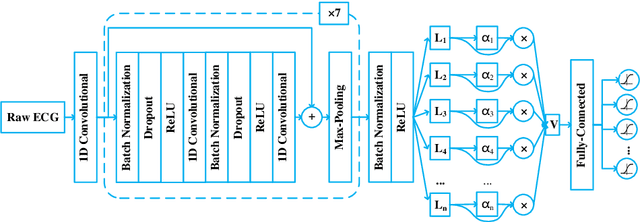

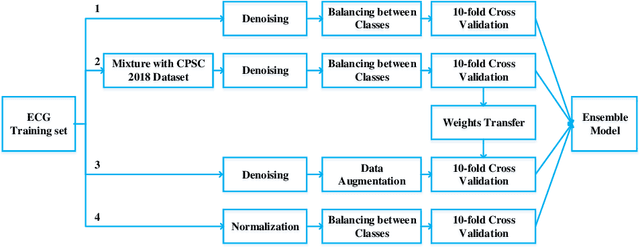

Abstract:Heart disease is one of the most common diseases causing morbidity and mortality. The electrocardiogram (ECG) has been widely used for diagnosing heart diseases because of its simplicity and non-invasive nature. Automatic ECG analysis technologies are expected to reduce human workload and increase diagnostic efficacy. However, some challenges remain to be addressed before this goal is achieved. In this study, we develop an algorithm to identify multiple abnormalities from 12-lead ECG recordings. In the algorithm pipeline, several preprocessing methods are first applied to the ECG data for denoising, augmentation, and balancing the number of recordings across classes. For efficiency and consistency of data length, the recordings are padded or truncated to a medium length, where the padding/truncating time windows are selected randomly to suppress overfitting. Then, the ECGs are used to train deep neural network (DNN) models with a novel structure that combines a deep residual network with an attention mechanism. Finally, an ensemble model is built from these trained models to make predictions on the test data set. Our method is evaluated on the test set of the First China ECG Intelligent Competition dataset using the F1 metric, defined as the harmonic mean of precision and recall. The resultant overall F1 score of the algorithm is 0.875, showing promising performance and potential for practical use.
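Two steps mentioned in this abstract lend themselves to a short sketch: fitting recordings to a fixed length with a randomly placed window, and scoring with F1 as the harmonic mean of precision and recall. The code below is a minimal single-channel illustration, not the authors' pipeline; the names `fit_to_length` and `f1_score`, the zero padding value, and the example lengths are assumptions.

```python
import random


def fit_to_length(samples, target_len, rng, pad_value=0.0):
    """Truncate or pad a 1-D recording to target_len using a random window."""
    n = len(samples)
    if n >= target_len:
        # Truncate: keep a contiguous window starting at a random offset.
        start = rng.randint(0, n - target_len)
        return list(samples[start:start + target_len])
    # Pad: place the recording at a random position inside the padded window.
    left = rng.randint(0, target_len - n)
    right = target_len - n - left
    return [pad_value] * left + list(samples) + [pad_value] * right


def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


rng = random.Random(0)  # seeded only to make the sketch reproducible
long_recording = [float(i) for i in range(10)]
short_recording = [1.0, 2.0, 3.0]

truncated = fit_to_length(long_recording, 6, rng)
padded = fit_to_length(short_recording, 6, rng)
print(truncated, padded, f1_score(0.5, 1.0))
```

Because the window position is drawn anew each time, the same recording can yield different fixed-length inputs across training epochs, which is the overfitting-suppression effect the abstract alludes to.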
