Qifeng Lin

Cyborg Insect Factory: Automatic Assembly System to Build up Insect-computer Hybrid Robot Based on Vision-guided Robotic Arm Manipulation of Custom Bipolar Electrodes

Nov 20, 2024

Abstract: The advancement of insect-computer hybrid robots holds significant promise for navigating complex terrains and enhancing robotics applications. This study introduces an automatic assembly method for insect-computer hybrid robots, accomplished by mounting a backpack and precisely implanting custom-designed bipolar electrodes. We developed a stimulation protocol for the intersegmental membrane between the pronotum and mesothorax of the Madagascar hissing cockroach, allowing automatic implantation of the bipolar electrodes using a robotic arm. The assembly process was integrated with a deep learning-based vision system to accurately identify the implantation site and with a dedicated structure to fix the insect in place (68 s for the whole assembly process). The automatically assembled hybrid robots demonstrated steering control (over 70 degrees for 0.4 s stimulation) and deceleration control (68.2% speed reduction for 0.4 s stimulation), matching the performance of manually assembled systems. Furthermore, a multi-agent system consisting of 4 hybrid robots successfully covered obstructed outdoor terrain (80.25% coverage in 10 minutes 31 seconds), highlighting the feasibility of mass-producing these systems for practical applications. The proposed automatic assembly strategy reduced preparation time for the insect-computer hybrid robots while maintaining their precise control, laying a foundation for scalable production and deployment in real-world applications.

Resilient conductive membrane synthesized by in-situ polymerisation for wearable non-invasive electronics on moving appendages of cyborg insect

Mar 20, 2023

Abstract: By leveraging their high mobility and small size, insects have been combined with microcontrollers to build cyborg insects for various practical applications. Unfortunately, all current cyborg insects rely on implanted electrodes to control their movement, which causes irreversible damage to their organs and muscles. Here, we develop a non-invasive method for cyborg insects to address these issues, using a conformal electrode with an in-situ polymerized ion-conducting layer and an electron-conducting layer. The neural and locomotion responses to electrical stimulation verify efficient communication between insects and controllers via the non-invasive method. The precise "S"-shaped line following of the cyborg insect further demonstrates its potential in practical navigation. The conformal non-invasive electrodes keep the insects intact while controlling their motion. With the antennae, important olfactory organs of insects, preserved, the cyborg insect may in the future be endowed with the ability to sense the surrounding environment.

TCTN: A 3D-Temporal Convolutional Transformer Network for Spatiotemporal Predictive Learning

Dec 02, 2021

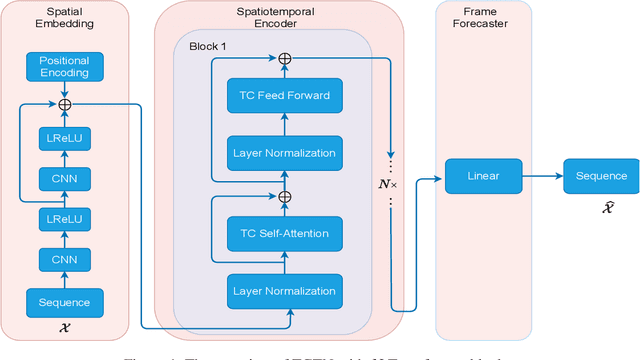

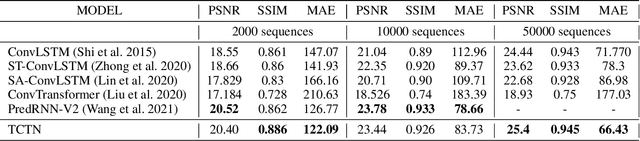

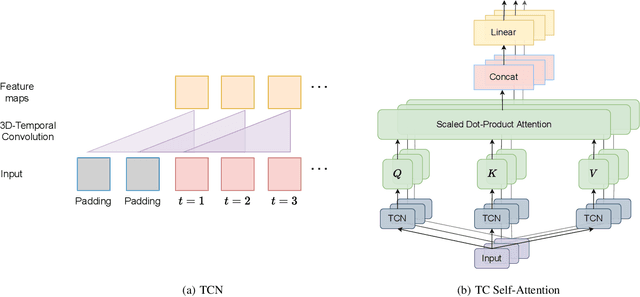

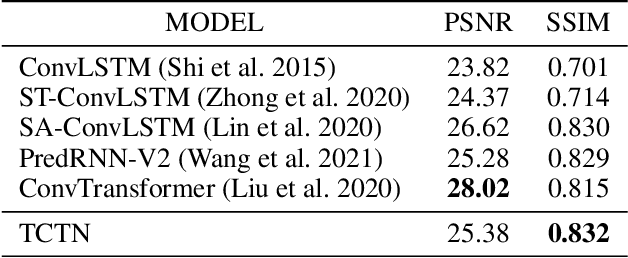

Abstract: Spatiotemporal predictive learning aims to generate future frames given a sequence of historical frames. Conventional algorithms are mostly based on recurrent neural networks (RNNs). However, RNNs suffer from a heavy computational burden, such as long training time and a long back-propagation process, due to the serial nature of the recurrent structure. Recently, Transformer-based methods have also been investigated in the form of an encoder-decoder or a plain encoder, but the encoder-decoder form requires overly deep networks and the plain encoder lacks short-term dependencies. To tackle these problems, we propose an algorithm named 3D-temporal convolutional transformer (TCTN), in which a Transformer-based encoder with temporal convolutional layers is employed to capture both short-term and long-term dependencies. Our proposed algorithm is easy to implement and trains much faster than RNN-based methods thanks to the parallel mechanism of the Transformer. To validate our algorithm, we conduct experiments on the MovingMNIST and KTH datasets, and show that TCTN outperforms state-of-the-art (SOTA) methods in both performance and training speed.
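The idea of a temporal convolution capturing short-term dependencies without recurrence can be illustrated with a minimal sketch. This is not the TCTN implementation: it assumes a causal 1D convolution over scalar per-frame features, and the function name `causal_temporal_conv` and the averaging kernel are hypothetical choices made for illustration only. The abstract does not state whether the paper's convolutions are causal or how they are parameterized.

```python
from typing import List


def causal_temporal_conv(frames: List[float], kernel: List[float]) -> List[float]:
    """Causal 1D convolution along the time axis (illustrative assumption).

    The output at step t is a weighted sum of frames[t-k+1 .. t], where k is
    the kernel length. Steps before the start of the sequence are zero padded.
    """
    k = len(kernel)
    # Left-pad with k-1 zeros so that step t never reads future frames.
    padded = [0.0] * (k - 1) + list(frames)
    # Every output step is an independent expression, so all steps could be
    # computed in parallel, unlike an RNN that must process steps in order.
    return [
        sum(kernel[j] * padded[t + j] for j in range(k))
        for t in range(len(frames))
    ]


if __name__ == "__main__":
    history = [1.0, 2.0, 3.0, 4.0]  # hypothetical per-frame features
    smoothing = [0.5, 0.5]          # hypothetical 2-step kernel
    print(causal_temporal_conv(history, smoothing))
```

Because each output step depends only on a short window of recent inputs, a layer like this supplies local temporal context, while the attention layers of a Transformer encoder would be responsible for longer-range dependencies.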
