Xiangrui Yang

Leveraging Orbital Information and Atomic Feature in Deep Learning Model

Oct 29, 2022

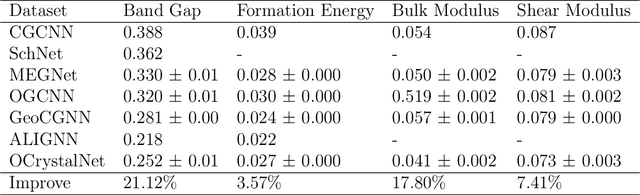

Abstract: Predicting material properties based on the microstructure of materials has long been a challenging problem. Recently, many deep learning methods have been developed for material property prediction. In this study, we propose a crystal representation learning framework, Orbital CrystalNet (OCrystalNet), which consists of two parts: atomic descriptor generation and graph representation learning. In OCrystalNet, we first combine the orbital field matrix (OFM) with atomic features to construct an OFM-feature atomic descriptor; this descriptor is then used as the atom embedding in an atom-bond message passing module, which exploits the topological structure of crystal graphs to learn a crystal representation. To demonstrate the capabilities of OCrystalNet, we performed a number of prediction tasks on the Materials Project and JARVIS datasets and compared our model with other baselines and state-of-the-art methods. To further demonstrate its effectiveness, we conducted an ablation study and a case study of our model. The results show that our model has various advantages over other state-of-the-art models.
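
The abstract describes the OFM-feature descriptor only at a high level. As a minimal sketch, assuming the OFM as it is usually described (each atom's matrix is a weighted sum of outer products between the center atom's one-hot valence-orbital vector and those of its neighbors, with weights derived from Voronoi solid angle and inverse distance), the code below builds that matrix, flattens it and appends a per-atom feature vector. The function names, the toy 3-slot orbital vectors, the precomputed `weights` argument and the use of plain concatenation are all hypothetical; the paper's exact encoding and feature set may differ.

```python
from typing import List, Sequence


def outer(u: Sequence[float], v: Sequence[float]) -> List[List[float]]:
    """Outer product u^T v of two vectors, returned as a nested list."""
    return [[a * b for b in v] for a in u]


def orbital_field_matrix(center: Sequence[float],
                         neighbors: Sequence[Sequence[float]],
                         weights: Sequence[float]) -> List[List[float]]:
    """Hypothetical OFM-style matrix for one atom: sum_k w_k * outer(center, neighbor_k).

    `weights` stands in for the (solid angle / max solid angle) * (1 / distance)
    factor; here it is assumed to be precomputed and passed in directly.
    """
    n, m = len(center), len(neighbors[0])
    ofm = [[0.0] * m for _ in range(n)]
    for nb, w in zip(neighbors, weights):
        prod = outer(center, nb)
        for i in range(n):
            for j in range(m):
                ofm[i][j] += w * prod[i][j]
    return ofm


def ofm_feature_descriptor(center: Sequence[float],
                           neighbors: Sequence[Sequence[float]],
                           weights: Sequence[float],
                           atomic_features: Sequence[float]) -> List[float]:
    """Hypothetical descriptor: flattened OFM followed by per-atom features."""
    ofm = orbital_field_matrix(center, neighbors, weights)
    flat = [x for row in ofm for x in row]  # row-major flattening
    return flat + list(atomic_features)
```

For example, a center atom with toy orbital vector `[1, 0, 1]`, two neighbors `[0, 1, 0]` and `[1, 0, 0]` with weights `0.5` and `0.25`, and illustrative features `[8.0, 3.44]` yields a 9-entry flattened matrix followed by the two feature values; a vector like this would then serve as the initial atom embedding for message passing.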

TCTN: A 3D-Temporal Convolutional Transformer Network for Spatiotemporal Predictive Learning

Dec 02, 2021

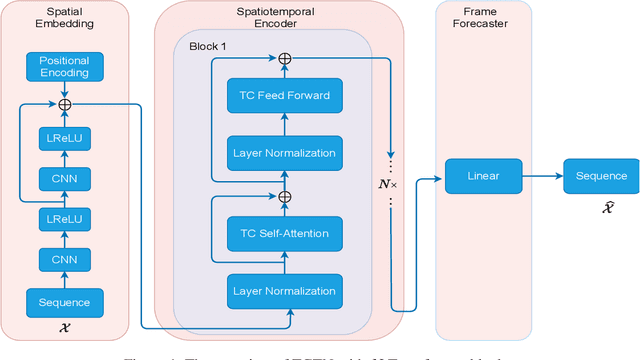

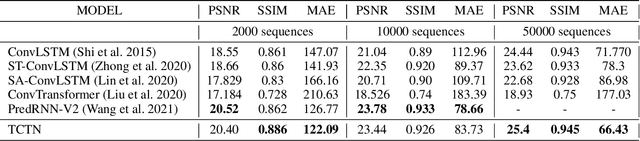

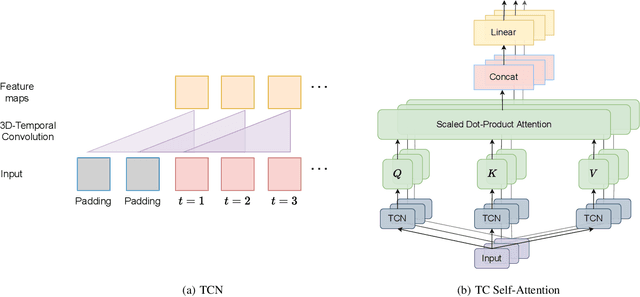

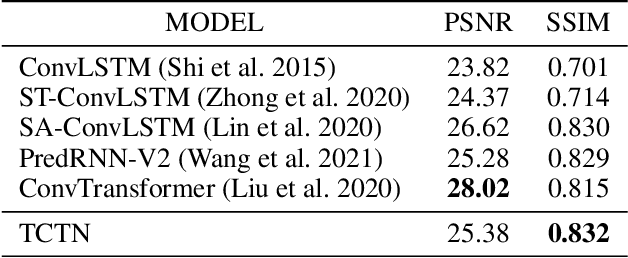

Abstract: Spatiotemporal predictive learning aims to generate future frames given a sequence of historical frames. Conventional algorithms are mostly based on recurrent neural networks (RNNs). However, RNNs suffer from a heavy computational burden, such as long training time and a lengthy back-propagation process, due to the sequential nature of the recurrent structure. Recently, Transformer-based methods have also been investigated in encoder-decoder or plain-encoder form, but the encoder-decoder form requires very deep networks and the plain encoder lacks short-term dependencies. To tackle these problems, we propose an algorithm named 3D-temporal convolutional transformer (TCTN), in which a transformer-based encoder with temporal convolutional layers is employed to capture both short-term and long-term dependencies. Our proposed algorithm is easy to implement and can be trained much faster than RNN-based methods thanks to the parallel mechanism of the Transformer. To validate our algorithm, we conduct experiments on the MovingMNIST and KTH datasets and show that TCTN outperforms state-of-the-art (SOTA) methods in both performance and training speed.
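
The abstract assigns short-term dependencies to temporal convolution and long-term dependencies to the Transformer encoder. A minimal sketch of that split, under stated assumptions: each frame is reduced to a small feature vector, the 3D convolution is simplified to a causal 1-D convolution along time, and attention is single-head with Q = K = V. The helper names and the conv-then-attention ordering inside `encoder_block` are hypothetical, not TCTN's published layer layout. The property shown is causality: the output at time t depends only on frames up to t, which is what allows a masked encoder to process all time steps in parallel during training (the loops here are written sequentially for readability).

```python
import math
from typing import List


def causal_temporal_conv(x: List[List[float]], kernel: List[float]) -> List[List[float]]:
    """Causal 1-D convolution along time: y[t] = sum_i kernel[i] * x[t - i].

    Frames before t = 0 are treated as zeros (left padding), so y[t]
    never depends on future frames. Captures short-range (local) context.
    """
    T, D = len(x), len(x[0])
    y = []
    for t in range(T):
        out = [0.0] * D
        for i, k in enumerate(kernel):
            if t - i >= 0:
                for d in range(D):
                    out[d] += k * x[t - i][d]
        y.append(out)
    return y


def causal_self_attention(x: List[List[float]]) -> List[List[float]]:
    """Single-head dot-product self-attention (Q = K = V = x) with a causal mask."""
    T, D = len(x), len(x[0])
    scale = 1.0 / math.sqrt(D)
    out = []
    for t in range(T):
        # Causal mask: position t may only attend to positions s <= t.
        scores = [scale * sum(a * b for a, b in zip(x[t], x[s])) for s in range(t + 1)]
        m = max(scores)  # subtract the max for a numerically stable softmax
        exps = [math.exp(s - m) for s in scores]
        z = sum(exps)
        out.append([sum(exps[s] / z * x[s][d] for s in range(t + 1)) for d in range(D)])
    return out


def encoder_block(x: List[List[float]], kernel: List[float]) -> List[List[float]]:
    """Hypothetical block: temporal conv, then causal attention, each with a residual."""
    conv = causal_temporal_conv(x, kernel)
    h = [[a + b for a, b in zip(xi, ci)] for xi, ci in zip(x, conv)]
    att = causal_self_attention(h)
    return [[a + b for a, b in zip(hi, ai)] for hi, ai in zip(h, att)]
```

Changing only the last input frame leaves every earlier output row unchanged, which is a quick check that no future information leaks backward through either the convolution or the attention.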