Qibin Wang

Kimi K2.5: Visual Agentic Intelligence

Feb 02, 2026Abstract:We introduce Kimi K2.5, an open-source multimodal agentic model designed to advance general agentic intelligence. K2.5 emphasizes the joint optimization of text and vision so that two modalities enhance each other. This includes a series of techniques such as joint text-vision pre-training, zero-vision SFT, and joint text-vision reinforcement learning. Building on this multimodal foundation, K2.5 introduces Agent Swarm, a self-directed parallel agent orchestration framework that dynamically decomposes complex tasks into heterogeneous sub-problems and executes them concurrently. Extensive evaluations show that Kimi K2.5 achieves state-of-the-art results across various domains including coding, vision, reasoning, and agentic tasks. Agent Swarm also reduces latency by up to $4.5\times$ over single-agent baselines. We release the post-trained Kimi K2.5 model checkpoint to facilitate future research and real-world applications of agentic intelligence.

PMSS: Pretrained Matrices Skeleton Selection for LLM Fine-tuning

Sep 25, 2024

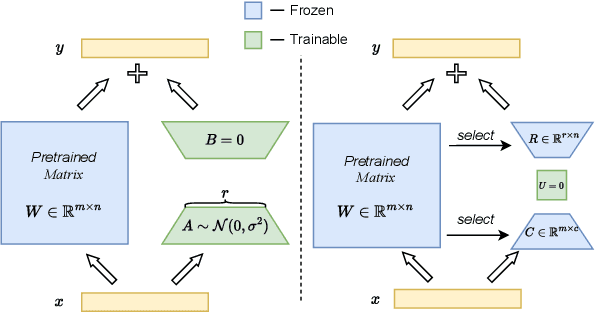

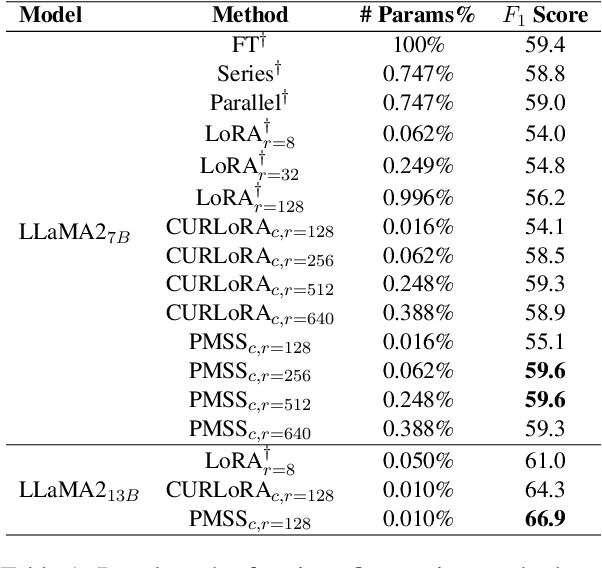

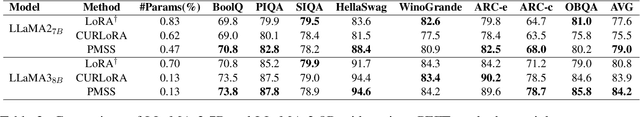

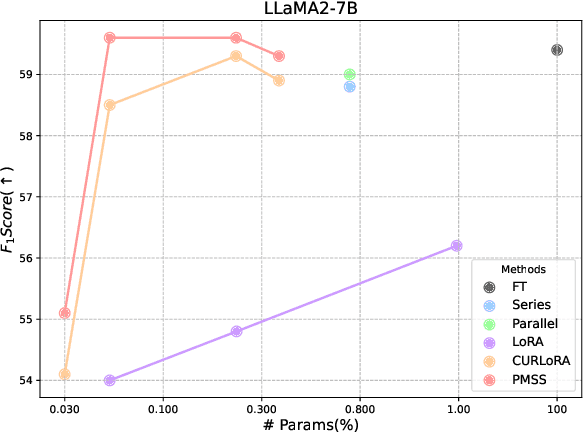

Abstract:Low-rank adaptation (LoRA) and its variants have recently gained much interest due to their ability to avoid excessive inference costs. However, LoRA still encounters the following challenges: (1) Limitation of low-rank assumption; and (2) Its initialization method may be suboptimal. To this end, we propose PMSS(Pre-trained Matrices Skeleton Selection), which enables high-rank updates with low costs while leveraging semantic and linguistic information inherent in pre-trained weight. It achieves this by selecting skeletons from the pre-trained weight matrix and only learning a small matrix instead. Experiments demonstrate that PMSS outperforms LoRA and other fine-tuning methods across tasks with much less trainable parameters. We demonstrate its effectiveness, especially in handling complex tasks such as DROP benchmark(+3.4%/+5.9% on LLaMA2-7B/13B) and math reasoning(+12.89%/+5.61%/+3.11% on LLaMA2-7B, Mistral-7B and Gemma-7B of GSM8K). The code and model will be released soon.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge