Qiaofei Li

Disentangle Your Dense Object Detector

Jul 27, 2021

Abstract: Deep learning-based dense object detectors have achieved great success in the past few years and have been applied to numerous multimedia applications such as video understanding. However, the current training pipeline for dense detectors is compromised to lots of conjunctions that may not hold. In this paper, we investigate three such important conjunctions: 1) only samples assigned as positive in classification head are used to train the regression head; 2) classification and regression share the same input feature and computational fields defined by the parallel head architecture; and 3) samples distributed in different feature pyramid layers are treated equally when computing the loss. We first carry out a series of pilot experiments to show disentangling such conjunctions can lead to persistent performance improvement. Then, based on these findings, we propose Disentangled Dense Object Detector (DDOD), in which simple and effective disentanglement mechanisms are designed and integrated into the current state-of-the-art dense object detectors. Extensive experiments on MS COCO benchmark show that our approach can lead to 2.0 mAP, 2.4 mAP and 2.2 mAP absolute improvements on RetinaNet, FCOS, and ATSS baselines with negligible extra overhead. Notably, our best model reaches 55.0 mAP on the COCO test-dev set and 93.5 AP on the hard subset of WIDER FACE, achieving new state-of-the-art performance on these two competitive benchmarks. Code is available at https://github.com/zehuichen123/DDOD.
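The third conjunction above (samples from every feature pyramid layer counting equally in the loss) can be illustrated with a small sketch. The abstract does not say how DDOD reweights pyramid levels, so the function `aggregate_loss`, the level names `P3`/`P7`, and the weight values below are all hypothetical and are not taken from the DDOD code.

```python
from typing import Dict, List


def aggregate_loss(
    per_level_losses: Dict[str, List[float]],
    level_weights: Dict[str, float],
) -> float:
    """Weighted mean of per-sample losses with one weight per pyramid level.

    Hypothetical helper for illustration only; levels missing from
    `level_weights` default to weight 1.0 (the "treated equally" case).
    """
    weighted_sum = 0.0
    weight_total = 0.0
    for level, losses in per_level_losses.items():
        w = level_weights.get(level, 1.0)
        weighted_sum += w * sum(losses)
        weight_total += w * len(losses)
    # Guard against an empty batch.
    return weighted_sum / weight_total if weight_total > 0 else 0.0


# Two samples on a fine level, one on a coarse level (made-up loss values).
losses = {"P3": [1.0, 1.0], "P7": [3.0]}

equal = aggregate_loss(losses, {})                          # all levels weighted 1.0
reweighted = aggregate_loss(losses, {"P3": 1.0, "P7": 2.0})  # coarse level counts double
```

With equal weights the single coarse-level sample is outvoted by the two fine-level samples. Raising the weight for `P7` shifts the aggregate toward that level, which is the kind of per-level treatment the abstract contrasts with the default.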

Towards Fine-grained Large Object Segmentation 1st Place Solution to 3D AI Challenge 2020 -- Instance Segmentation Track

Sep 10, 2020

Abstract: This technical report introduces our solutions of Team 'FineGrainedSeg' for Instance Segmentation track in 3D AI Challenge 2020. In order to handle extremely large objects in 3D-FUTURE, we adopt PointRend as our basic framework, which outputs more fine-grained masks compared to HTC and SOLOv2. Our final submission is an ensemble of 5 PointRend models, which achieves the 1st place on both validation and test leaderboards. The code is available at https://github.com/zehuichen123/3DFuture_ins_seg.

1st Place Solutions of Waymo Open Dataset Challenge 2020 -- 2D Object Detection Track

Aug 04, 2020

Abstract: In this technical report, we present our solutions of Waymo Open Dataset (WOD) Challenge 2020 - 2D Object Track. We adopt FPN as our basic framework. Cascade RCNN, stacked PAFPN Neck and Double-Head are used for performance improvements. In order to handle the small object detection problem in WOD, we use very large image scales for both training and testing. Using our methods, our team RW-TSDet achieved the 1st place in the 2D Object Detection Track.

Predicting Citywide Crowd Flows in Irregular Regions Using Multi-View Graph Convolutional Networks

Mar 19, 2019

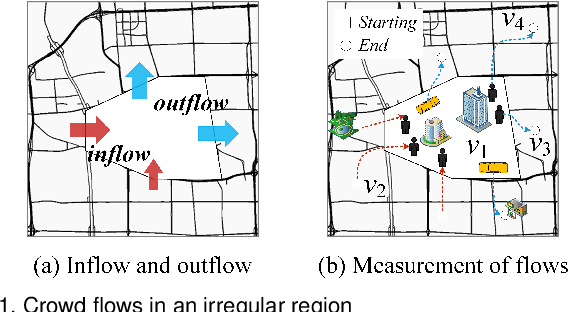

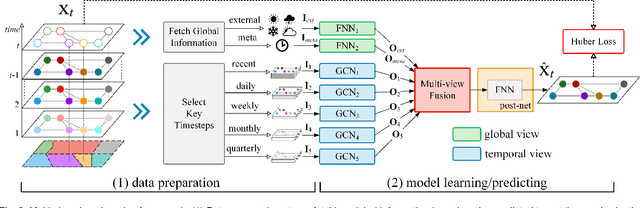

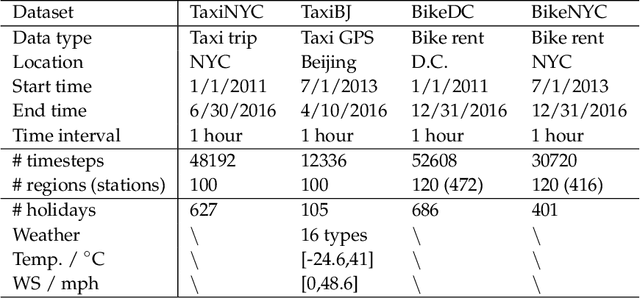

Abstract: Being able to predict the crowd flows in each and every part of a city, especially in irregular regions, is strategically important for traffic control, risk assessment, and public safety. However, it is very challenging because of interactions and spatial correlations between different regions. In addition, it is affected by many factors: i) multiple temporal correlations among different time intervals: closeness, period, trend; ii) complex external influential factors: weather, events; iii) meta features: time of the day, day of the week, and so on. In this paper, we formulate crowd flow forecasting in irregular regions as a spatio-temporal graph (STG) prediction problem in which each node represents a region with time-varying flows. By extending graph convolution to handle the spatial information, we propose using spatial graph convolution to build a multi-view graph convolutional network (MVGCN) for the crowd flow forecasting problem, where different views can capture different factors as mentioned above. We evaluate MVGCN using four real-world datasets (taxicabs and bikes) and extensive experimental results show that our approach outperforms the adaptations of state-of-the-art methods. And we have developed a crowd flow forecasting system for irregular regions that can now be used internally.
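To make the graph formulation concrete, here is a minimal sketch of one spatial graph convolution step over irregular regions, where each node is a region and each feature is a flow value. It assumes a generic mean-aggregation rule (average over a node and its neighbours, then a linear map). The abstract does not give MVGCN's exact formulation, so `graph_conv` and the toy three-region graph are illustrative assumptions rather than the authors' method.

```python
from typing import List

Matrix = List[List[float]]


def graph_conv(adj: Matrix, feats: Matrix, weight: Matrix) -> Matrix:
    """One mean-aggregation graph convolution step (illustrative only).

    adj:    n x n adjacency matrix (non-zero entry means the regions are linked)
    feats:  n x d node features, e.g. crowd flow per region
    weight: d x m linear map applied after aggregation
    """
    n = len(adj)
    d = len(feats[0])
    m = len(weight[0])
    out = []
    for i in range(n):
        # Neighbourhood of region i, including i itself (self-loop).
        neigh = [j for j in range(n) if j == i or adj[i][j]]
        # Average each feature over the neighbourhood.
        agg = [sum(feats[j][k] for j in neigh) / len(neigh) for k in range(d)]
        # Linear projection of the aggregated features.
        out.append([sum(agg[k] * weight[k][c] for k in range(d)) for c in range(m)])
    return out


# Toy example: three regions in a chain 0 - 1 - 2, one flow value per region.
adj = [[0, 1, 0],
       [1, 0, 1],
       [0, 1, 0]]
flows = [[1.0], [2.0], [3.0]]
identity = [[1.0]]

smoothed = graph_conv(adj, flows, identity)
```

Because the adjacency matrix can encode any neighbourhood structure, the same step applies to irregular regions that do not fit a regular grid, which is the motivation the abstract gives for the graph formulation.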
