Qianying Lin

Sparse Attentive Memory Network for Click-through Rate Prediction with Long Sequences

Aug 08, 2022

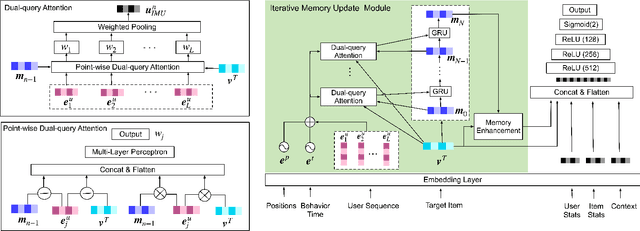

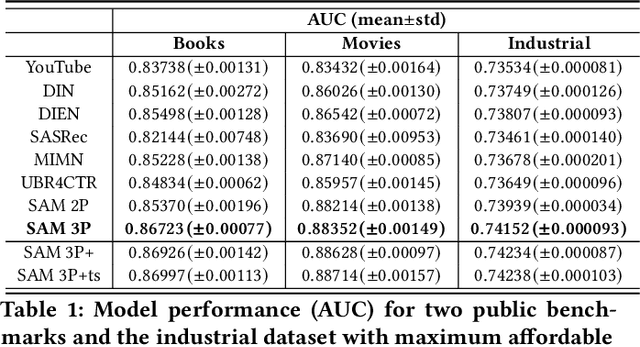

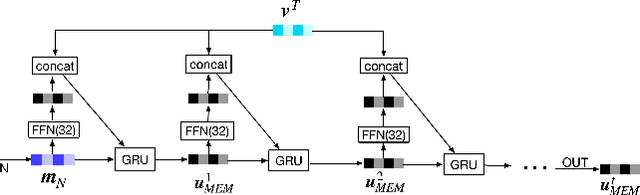

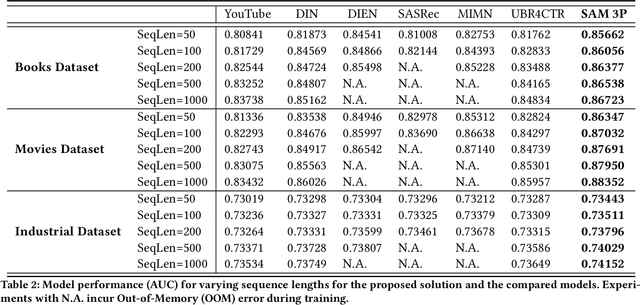

Abstract: Sequential recommendation predicts users' next behaviors from their historical interactions. Recommending with longer sequences improves accuracy and increases the degree of personalization. As sequences get longer, however, existing works have not yet addressed two main challenges. First, modeling long-range intra-sequence dependencies becomes harder as sequence length increases. Second, long sequences demand efficient memory usage and fast computation. In this paper, we propose a Sparse Attentive Memory (SAM) network for long sequential user behavior modeling. SAM supports efficient training and real-time inference for user behavior sequences with lengths on the scale of thousands. In SAM, we model the target item as the query and the long sequence as the knowledge database, where the former continuously elicits relevant information from the latter. SAM simultaneously models target-sequence dependencies and long-range intra-sequence dependencies with O(L) complexity and O(1) sequential updates, a combination that the self-attention mechanism achieves only at O(L^2) complexity. Extensive empirical results demonstrate that the proposed solution is effective not only for long user behavior modeling but also for short sequences. Implemented on sequences of length 1000, SAM is successfully deployed on one of the largest international E-commerce platforms. The inference time is within 30 ms, with a substantial 7.30% click-through rate improvement in the online A/B test. To the best of our knowledge, it is the first end-to-end long user sequence modeling framework that models intra-sequence and target-sequence dependencies with this degree of efficiency and has been successfully deployed on a large-scale real-time industrial recommender system.
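The O(L) versus O(L^2) contrast in the abstract can be illustrated with plain target attention: a single target-item query scores each of the L behaviors once, whereas self-attention scores every pair. The sketch below is only an illustration of that cost argument, not the SAM architecture; the function name `target_attention` and the scaled dot-product scoring are assumptions, not details taken from the paper.

```python
import math

def target_attention(query, sequence):
    """Attend from one target-item vector over L behavior vectors.

    Hypothetical illustration: uses L dot products (linear in L),
    unlike self-attention, which compares all L * L pairs.
    """
    scale = math.sqrt(len(query))
    # One score per behavior in the sequence.
    scores = [sum(q * k for q, k in zip(query, item)) / scale for item in sequence]
    # Numerically stable softmax over the L scores.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Weighted sum of behavior vectors gives the pooled user representation.
    pooled = [
        sum(w * item[d] for w, item in zip(weights, sequence))
        for d in range(len(query))
    ]
    return weights, pooled

# Usage: the behavior most aligned with the target item gets the largest weight.
weights, pooled = target_attention(
    [1.0, 0.0], [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
)
```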

Learning-To-Ensemble by Contextual Rank Aggregation in E-Commerce

Aug 10, 2021

Abstract: Ensemble models in E-commerce combine predictions from multiple sub-models to improve ranking and revenue. Industrial ensemble models are typically deep neural networks that follow the supervised learning paradigm to infer conversion rate from the outputs of sub-models. However, this process has two problems. First, the point-wise scoring approach disregards the relationships between items and leads to homogeneous displayed results, whereas a diversified display benefits user experience and revenue. Second, the learning paradigm focuses on ranking metrics and does not directly optimize revenue. In our work, we propose a new Learning-To-Ensemble (LTE) framework, RA-EGO, which replaces the ensemble model with a contextual Rank Aggregator (RA) and explores the best weights of sub-models through Evaluator-Generator Optimization (EGO). To achieve the best online performance, we propose a new rank aggregation algorithm, TournamentGreedy, as a refinement of classic rank aggregators; it also produces the best average weighted Kendall Tau Distance (KTD) among all the considered algorithms with quadratic time complexity. Under the assumption that the best output list should be Pareto optimal on the KTD metric for sub-models, we show that our RA algorithm has higher efficiency and coverage in exploring the optimal weights. Combining ideas from Bayesian Optimization and gradient descent, we solve the online contextual Black-Box Optimization task of finding the optimal weights for sub-models given a chosen RA model. RA-EGO has been deployed in our online system and has improved revenue significantly.
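For readers unfamiliar with the metric, the Kendall Tau Distance between two rankings is the number of item pairs that the rankings order differently. The sketch below computes it and a weighted average over sub-model rankings. It assumes that "average weighted KTD" means a weight-normalized mean of per-sub-model distances, which the abstract does not spell out; the function names are hypothetical, and this is not the TournamentGreedy algorithm itself.

```python
from itertools import combinations

def kendall_tau_distance(rank_a, rank_b):
    """Count item pairs ordered differently by two rankings of the same items."""
    pos_a = {item: i for i, item in enumerate(rank_a)}
    pos_b = {item: i for i, item in enumerate(rank_b)}
    return sum(
        1
        for x, y in combinations(rank_a, 2)
        # A pair is discordant when the position differences have opposite signs.
        if (pos_a[x] - pos_a[y]) * (pos_b[x] - pos_b[y]) < 0
    )

def average_weighted_ktd(candidate, sub_rankings, weights):
    """Weight-normalized mean KTD from a candidate list to each sub-model ranking.

    Assumption: one non-negative weight per sub-model, not all zero.
    """
    total_weight = sum(weights)
    weighted = sum(
        w * kendall_tau_distance(candidate, ranking)
        for ranking, w in zip(sub_rankings, weights)
    )
    return weighted / total_weight

# Usage: swapping one adjacent pair yields a distance of 1.
print(kendall_tau_distance(["a", "b", "c"], ["b", "a", "c"]))  # → 1
```

The pairwise loop is quadratic in the list length, which is adequate for short displayed lists.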

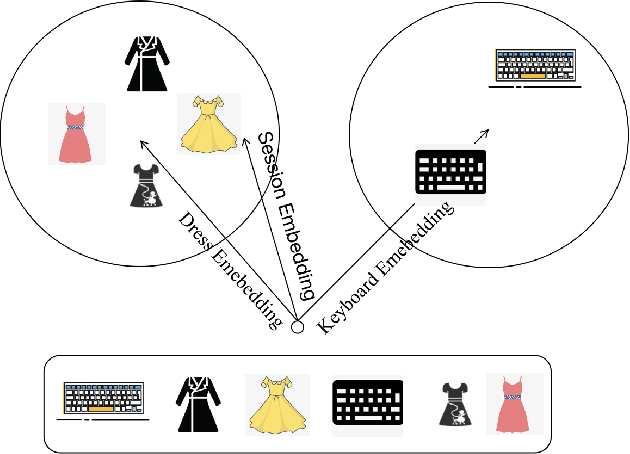

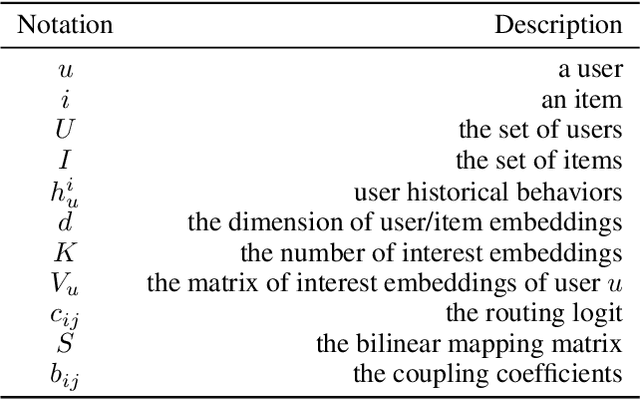

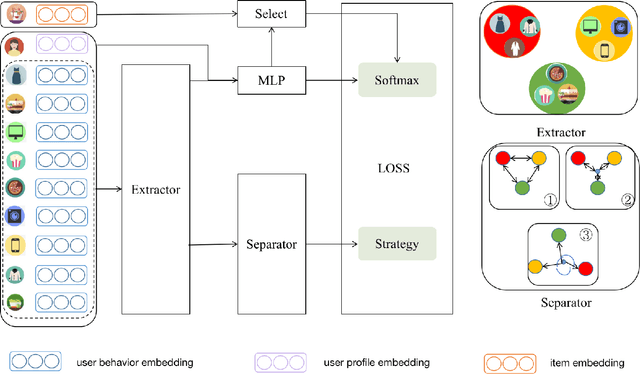

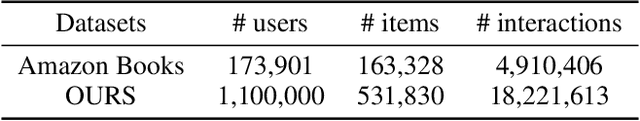

Diversity Regularized Interests Modeling for Recommender Systems

Mar 23, 2021

Abstract: With the rapid development of E-commerce and the growing number of items, users are presented with more items and their interests broaden. It is increasingly difficult to model user intentions with traditional methods, which model the user's preference for an item by combining a single user vector with an item vector. Recently, several methods have been proposed that generate multiple user interest vectors and achieve better performance than traditional methods. However, empirical studies demonstrate that the vectors generated by these multi-interest methods are sometimes homogeneous, which may lead to sub-optimal performance. In this paper, we propose a novel method, Diversity Regularized Interests Modeling (DRIM), for recommender systems. We apply a capsule network in a multi-interest extractor to generate multiple user interest vectors. Because each interest of a user should be distinct to a certain degree, we introduce three strategies as the diversity regularized separator to separate the user interest vectors. Experimental results on public and industrial data sets demonstrate the model's ability to capture different interests of a user and the superior performance of the proposed approach.
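The abstract does not name the three separation strategies, so the sketch below shows only one plausible form a diversity regularizer could take: penalizing the mean pairwise cosine similarity between a user's interest vectors. Both the choice of cosine similarity and the names `cosine` and `diversity_penalty` are assumptions made for illustration, not the DRIM formulation.

```python
import math
from itertools import combinations

def cosine(u, v):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def diversity_penalty(interests):
    """Mean pairwise cosine similarity among interest vectors.

    Hypothetical regularizer: a lower value means more distinct interests,
    so adding it to a training loss would discourage homogeneous vectors.
    """
    pairs = list(combinations(interests, 2))
    if not pairs:
        return 0.0  # A single interest vector has nothing to be separated from.
    return sum(cosine(u, v) for u, v in pairs) / len(pairs)

# Usage: orthogonal interests incur no penalty, identical ones the maximum.
orthogonal = diversity_penalty([[1.0, 0.0], [0.0, 1.0]])
identical = diversity_penalty([[1.0, 0.0], [1.0, 0.0]])
```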
