Pujan Kandel

Diagnosing Colorectal Polyps in the Wild with Capsule Networks

Jan 10, 2020

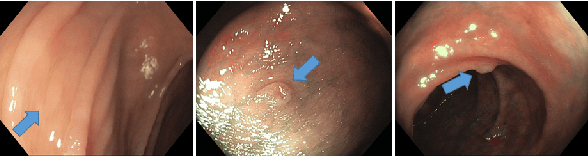

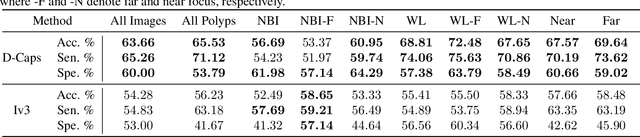

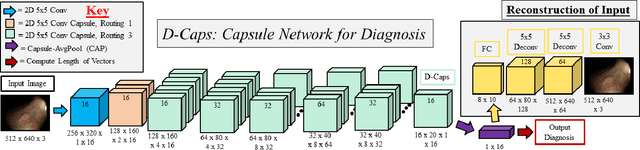

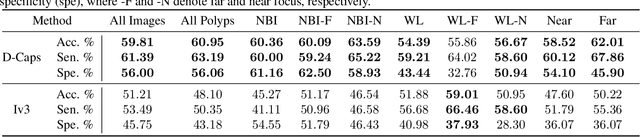

Abstract: Colorectal cancer, largely arising from precursor lesions called polyps, remains one of the leading causes of cancer-related death worldwide. Current clinical standards require the resection and histopathological analysis of polyps due to test accuracy and sensitivity of optical biopsy methods falling substantially below recommended levels. In this study, we design a novel capsule network architecture (D-Caps) to improve the viability of optical biopsy of colorectal polyps. Our proposed method introduces several technical novelties including a novel capsule architecture with a capsule-average pooling (CAP) method to improve efficiency in large-scale image classification. We demonstrate improved results over the previous state-of-the-art convolutional neural network (CNN) approach by as much as 43%. This work provides an important benchmark on the new Mayo Polyp dataset, a significantly more challenging and larger dataset than previous polyp studies, with results stratified across all available categories, imaging devices and modalities, and focus modes to promote future direction into AI-driven colorectal cancer screening systems. Code is publicly available at https://github.com/lalonderodney/D-Caps.
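As a rough illustration of the capsule-average pooling idea named in the abstract, the sketch below averages each capsule type's vector over all spatial positions, which shrinks a grid of capsules to one vector per type. The array layout `(height, width, n_types, dim)` and the function name `capsule_average_pool` are assumptions made for this example and are not taken from the D-Caps code.

```python
import numpy as np


def capsule_average_pool(capsules: np.ndarray) -> np.ndarray:
    """Average capsule vectors over the spatial grid.

    capsules: array of shape (height, width, n_types, dim) (assumed layout).
    Returns an array of shape (n_types, dim): one pooled vector per capsule type.
    """
    if capsules.ndim != 4:
        raise ValueError("expected shape (height, width, n_types, dim)")
    # Mean over the two spatial axes; capsule type and vector dimension are kept.
    return capsules.mean(axis=(0, 1))


# Toy input: a 5x5 grid with 2 capsule types of dimension 16.
grid = np.ones((5, 5, 2, 16))
grid[:, :, 1, :] *= 3.0
pooled = capsule_average_pool(grid)
```

Pooling this way removes the dependence of later layers on the spatial size of the grid, which is one plausible reading of the efficiency claim for large images.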

INN: Inflated Neural Networks for IPMN Diagnosis

Jun 30, 2019

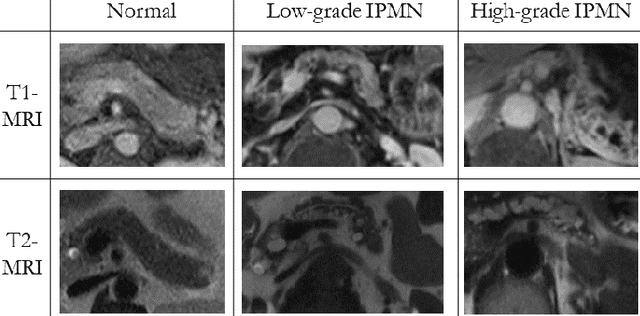

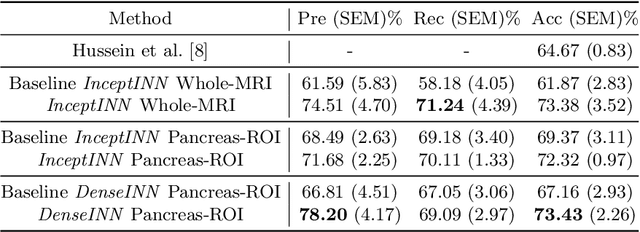

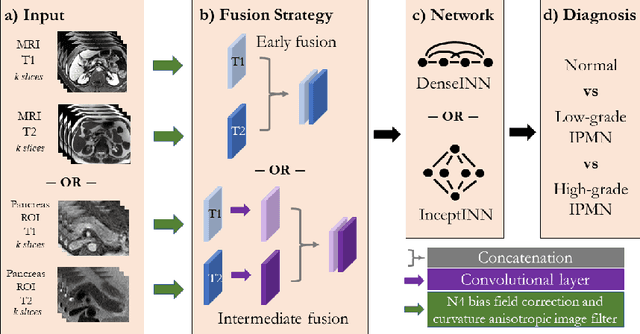

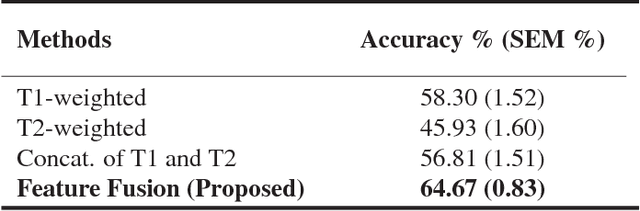

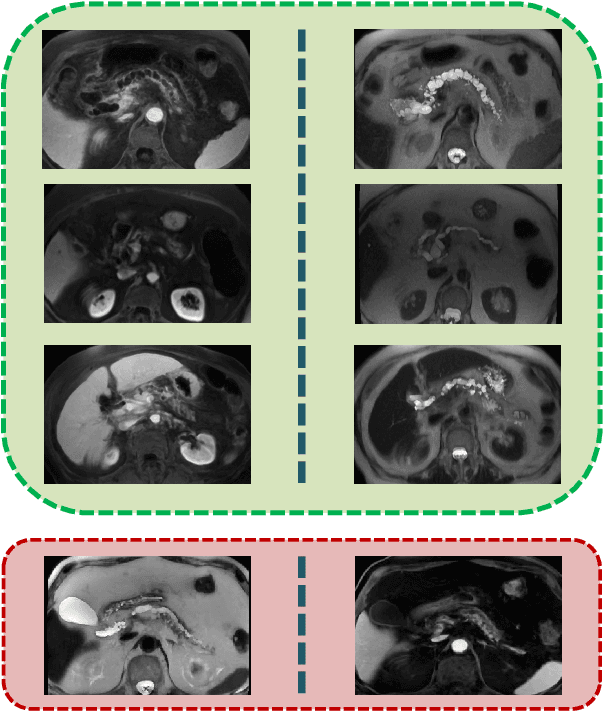

Abstract: Intraductal papillary mucinous neoplasm (IPMN) is a precursor to pancreatic ductal adenocarcinoma. While over half of patients are diagnosed with pancreatic cancer at a distant stage, patients who are diagnosed early enjoy a much higher 5-year survival rate of $34\%$ compared to $3\%$ in the former; hence, early diagnosis is key. Unique challenges in the medical imaging domain such as extremely limited annotated data sets and typically large 3D volumetric data have made it difficult for deep learning to secure a strong foothold. In this work, we construct two novel "inflated" deep network architectures, $\textit{InceptINN}$ and $\textit{DenseINN}$, for the task of diagnosing IPMN from multisequence (T1 and T2) MRI. These networks inflate their 2D layers to 3D and bootstrap weights from their 2D counterparts (Inceptionv3 and DenseNet121 respectively) trained on ImageNet to the new 3D kernels. We also extend the inflation process by further expanding the pre-trained kernels to handle any number of input modalities and different fusion strategies. This is one of the first studies to train an end-to-end deep network on multisequence MRI for IPMN diagnosis, and shows that our proposed novel inflated network architectures are able to handle the extremely limited training data (139 MRI scans), while providing an absolute improvement of $8.76\%$ in accuracy for diagnosing IPMN over the current state-of-the-art. Code is publicly available at https://github.com/lalonderodney/INN-Inflated-Neural-Nets.
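The inflation step described in the abstract can be sketched as copying a pretrained 2D kernel along a new depth axis. The example below is a minimal illustration, not the authors' implementation: the weight layout `(out_channels, in_channels, kh, kw)`, the function name `inflate_kernel`, and the division by `depth` (a common rescaling choice that keeps the response unchanged on a volume that is constant along depth) are all assumptions.

```python
import numpy as np


def inflate_kernel(kernel_2d: np.ndarray, depth: int) -> np.ndarray:
    """Inflate 2D conv weights to 3D by repeating them along a new depth axis.

    kernel_2d: weights of shape (out_channels, in_channels, kh, kw) (assumed layout).
    Returns weights of shape (out_channels, in_channels, depth, kh, kw).
    """
    if kernel_2d.ndim != 4:
        raise ValueError("expected shape (out_channels, in_channels, kh, kw)")
    if depth < 1:
        raise ValueError("depth must be a positive integer")
    # Insert the depth axis and tile the 2D weights across it.
    inflated = np.repeat(kernel_2d[:, :, np.newaxis, :, :], depth, axis=2)
    # Assumed rescaling: the depth slices sum back to the original 2D kernel.
    return inflated / depth


# Toy stand-in for pretrained weights: 4 filters over 3 input channels, 3x3 each.
rng = np.random.default_rng(0)
k2d = rng.normal(size=(4, 3, 3, 3))
k3d = inflate_kernel(k2d, depth=3)
```

With this rescaling, summing the inflated kernel over its depth axis recovers the original 2D weights, so the 3D layer starts from behavior close to its 2D counterpart.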

Supervised and Unsupervised Tumor Characterization in the Deep Learning Era

Jul 29, 2018

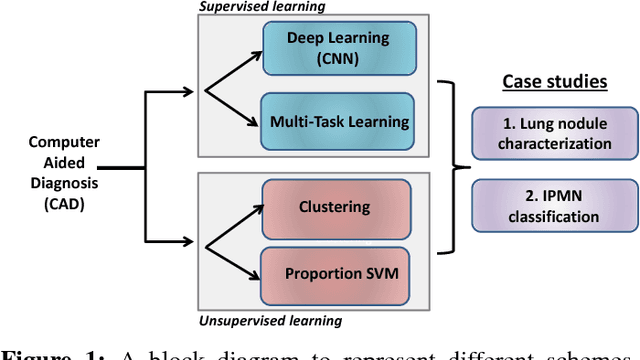

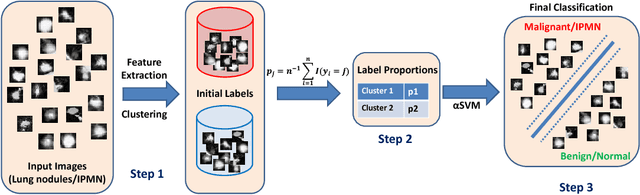

Abstract: Cancer is among the leading causes of death worldwide. Risk stratification of cancer tumors in radiology images can be improved with computer-aided diagnosis (CAD) tools which can be made faster and more accurate. Tumor characterization through CADs can enable non-invasive cancer staging and prognosis, and foster personalized treatment planning as a part of precision medicine. In this study, we propose both supervised and unsupervised machine learning strategies to improve tumor characterization. Our first approach is based on supervised learning for which we demonstrate significant gains in deep learning algorithms, particularly by utilizing a 3D Convolutional Neural Network along with transfer learning. Motivated by the radiologists' interpretations of the scans, we then show how to incorporate task dependent feature representations into a CAD system via a "graph-regularized sparse Multi-Task Learning (MTL)" framework. In the second approach, we explore an unsupervised scheme to address the limited availability of labeled training data, a common problem in medical imaging applications. Inspired by learning from label proportion (LLP) approaches, we propose a new algorithm, proportion-SVM, to characterize tumor types. We also seek the answer to the fundamental question about the goodness of "deep features" for unsupervised tumor classification. We evaluate our proposed approaches (both supervised and unsupervised) on two different tumor diagnosis challenges: lung and pancreas with 1018 CT and 171 MRI scans respectively.

Deep Multi-Modal Classification of Intraductal Papillary Mucinous Neoplasms (IPMN) with Canonical Correlation Analysis

Apr 27, 2018

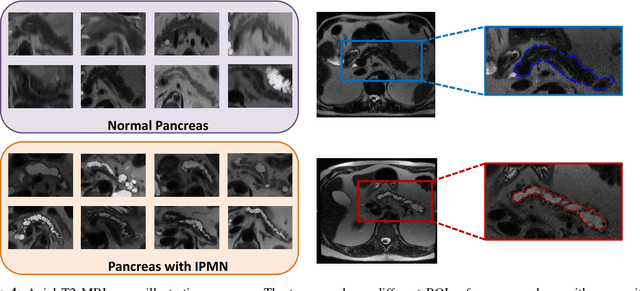

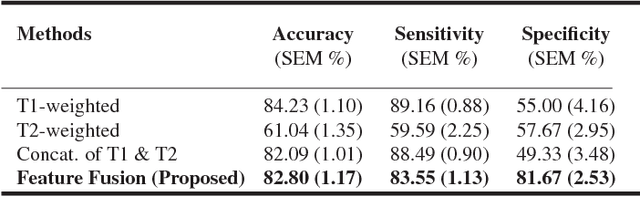

Abstract: Pancreatic cancer has the poorest prognosis among all cancer types. Intraductal Papillary Mucinous Neoplasms (IPMNs) are radiographically identifiable precursors to pancreatic cancer; hence, early detection and precise risk assessment of IPMN are vital. In this work, we propose a Convolutional Neural Network (CNN) based computer aided diagnosis (CAD) system to perform IPMN diagnosis and risk assessment by utilizing multi-modal MRI. In our proposed approach, we use minimum and maximum intensity projections to ease the annotation variations among different slices and types of MRI. Then, we present a CNN to obtain deep feature representation corresponding to each MRI modality (T1-weighted and T2-weighted). At the final step, we employ canonical correlation analysis (CCA) to perform a fusion operation at the feature level, leading to discriminative canonical correlation features. Extracted features are used for classification. Our results indicate significant improvements over other potential approaches to solve this important problem. The proposed approach doesn't require explicit sample balancing in cases of imbalance between positive and negative examples. To the best of our knowledge, our study is the first to automatically diagnose IPMN using multi-modal MRI.
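The minimum and maximum intensity projections mentioned in the abstract can be illustrated with a short sketch that collapses a stack of slices into two 2D images. The choice of the slice axis, the function name `intensity_projections`, and the toy volume are assumptions for this example; the abstract does not say which slices are projected.

```python
import numpy as np


def intensity_projections(volume: np.ndarray, axis: int = 0):
    """Return (minimum, maximum) intensity projections of a 3D volume.

    volume: array of shape (slices, height, width) when axis=0 (assumed layout).
    Each projection keeps, per pixel, the lowest or highest value across slices.
    """
    if volume.ndim != 3:
        raise ValueError("expected a 3D volume")
    return volume.min(axis=axis), volume.max(axis=axis)


# Toy volume: two 3x4 slices, the second uniformly brighter than the first.
vol = np.arange(24, dtype=float).reshape(2, 3, 4)
min_ip, max_ip = intensity_projections(vol, axis=0)
```

Each projection is a single 2D image, so it can be passed to a 2D CNN regardless of how many slices the original scan contained.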
