Prudence Wong

VMGNet: A Low Computational Complexity Robotic Grasping Network Based on VMamba with Multi-Scale Feature Fusion

Nov 19, 2024

Abstract: While deep learning-based robotic grasping technology has demonstrated strong adaptability, its computational complexity has also increased significantly, making it unsuitable for scenarios with high real-time requirements. Therefore, we propose VMGNet, a low computational complexity, high-accuracy model for robotic grasping. For the first time, we introduce the Visual State Space into the robotic grasping field to achieve linear computational complexity, thereby greatly reducing the model's computational cost. Meanwhile, to improve the accuracy of the model, we propose an efficient and lightweight multi-scale feature fusion module, named the Fusion Bridge Module, to extract and fuse information at different scales. We also present a new loss function calculation method that emphasizes the differences in importance between subtasks, improving the model's fitting ability. Experiments show that VMGNet requires only 8.7G floating-point operations (FLOPs) and has an inference time of 8.1 ms on our devices. VMGNet also achieved state-of-the-art performance on the Cornell and Jacquard public datasets. To validate VMGNet's effectiveness in practical applications, we conducted real grasping experiments in multi-object scenarios, where VMGNet achieved a 94.4% success rate. The video of the real-world robotic grasping experiments is available at https://youtu.be/S-QHBtbmLc4.

Continual Graph Learning: A Survey

Jan 28, 2023

Abstract: Research on continual learning (CL) mainly focuses on data represented in the Euclidean space, while research on graph-structured data is scarce. Furthermore, most graph learning models are tailored for static graphs. However, graphs usually evolve continually in the real world. Catastrophic forgetting also emerges in graph learning models when being trained incrementally. This leads to the need to develop robust, effective and efficient continual graph learning approaches. Continual graph learning (CGL) is an emerging area aiming to realize continual learning on graph-structured data. This survey is written to shed light on this emerging area. It introduces the basic concepts of CGL and highlights two unique challenges brought by graphs. Then it reviews and categorizes recent state-of-the-art approaches, analyzing their strategies to tackle the unique challenges in CGL. Besides, it discusses the main concerns in each family of CGL methods, offering potential solutions. Finally, it explores the open issues and potential applications of CGL.

Semi-Unsupervised Lifelong Learning for Sentiment Classification: Less Manual Data Annotation and More Self-Studying

May 30, 2019

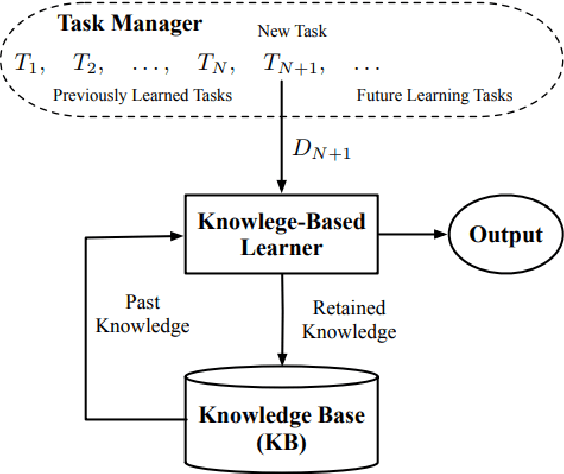

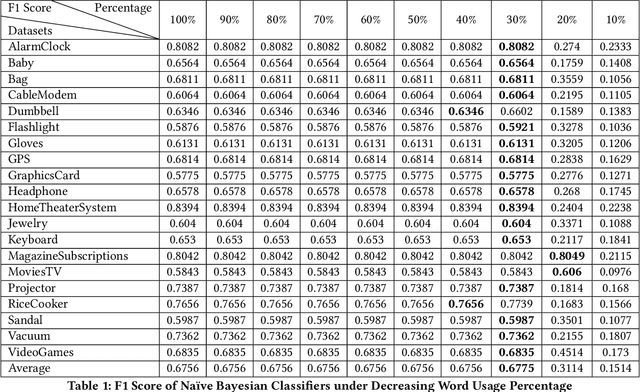

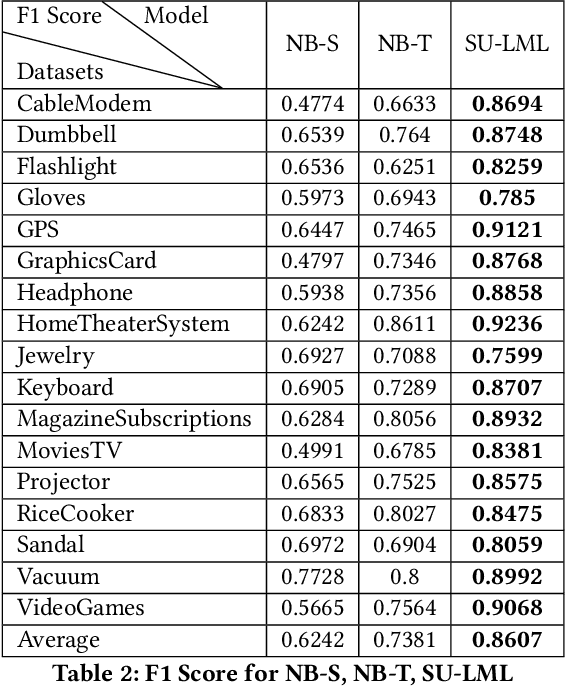

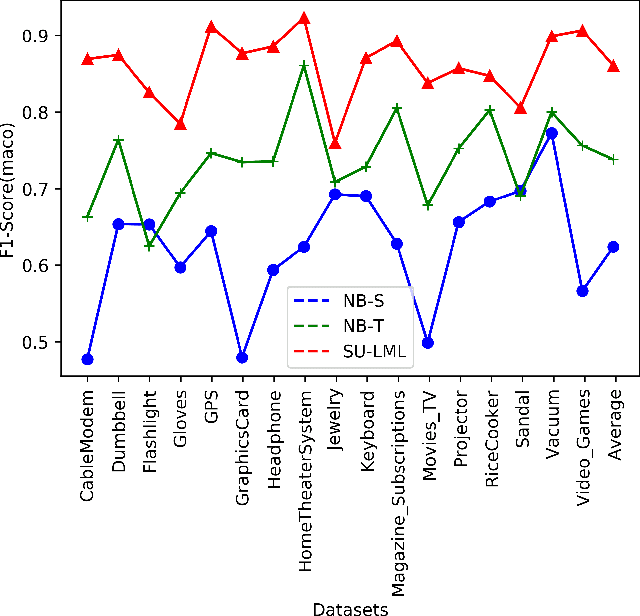

Abstract: Lifelong machine learning is a novel machine learning paradigm that continually accumulates knowledge during learning. Its ability to extract and reuse knowledge enables lifelong machine learning to solve related problems. Traditional approaches such as Naïve Bayes and some neural network-based approaches aim only to achieve the best performance on a single task. In contrast, the lifelong machine learning in this paper focuses on how to accumulate knowledge during learning and leverage it for subsequent tasks. Knowledge reuse also significantly reduces the demand for labelled training data. This paper suggests that the aim of lifelong learning is to use less labelled data and lower computational cost to achieve performance as good as, or even better than, that of supervised learning.
