Praveen Ravirathinam

Towards a Knowledge guided Multimodal Foundation Model for Spatio-Temporal Remote Sensing Applications

Jul 29, 2024

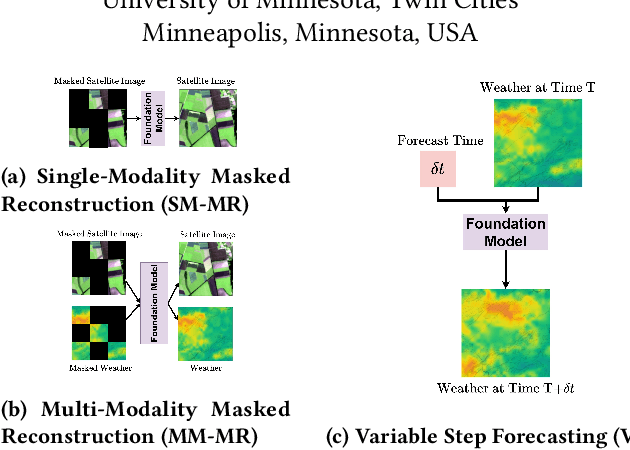

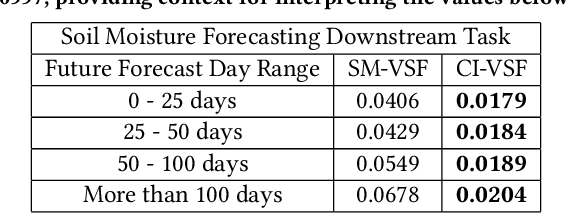

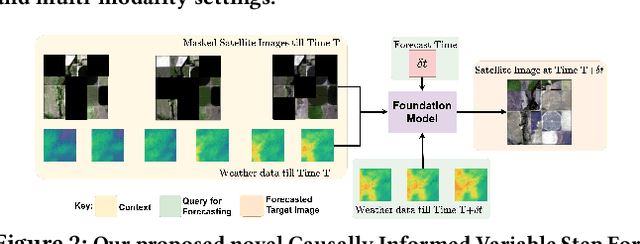

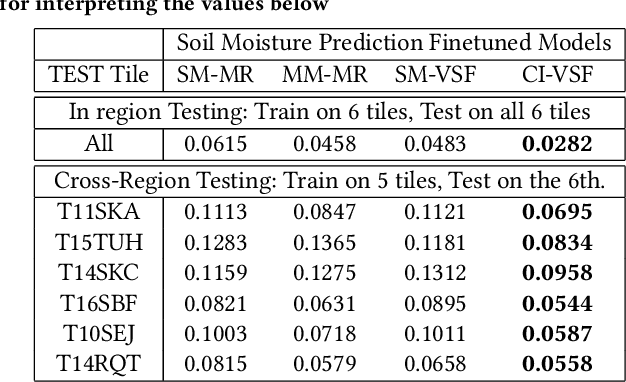

Abstract: In recent years, there has been increased interest in foundation models for geoscience due to the vast amount of earth-observing satellite imagery. Existing remote sensing foundation models make use of the various sources of spectral imagery to create large models pretrained on a masked reconstruction task. The embeddings from these foundation models are then used for various downstream remote sensing applications. In this paper, we propose a foundational modeling framework for remote sensing geoscience applications that goes beyond the traditional single-modality masked autoencoder family of foundation models. This framework leverages the knowledge-guided principles that the spectral imagery captures the impact of the physical drivers on the environmental system, and that the relationship between them is governed by the characteristics of the system. Specifically, our method, called MultiModal Variable Step Forecasting (MM-VSF), uses multimodal data (spectral imagery and weather) as its input and a variable step forecasting task as its pretraining objective. In our evaluation, we show that forecasting satellite imagery using weather can be used as an effective pretraining task for foundation models. We further show the effectiveness of the embeddings from MM-VSF on the downstream task of pixel-wise crop mapping, when compared with a model trained in the traditional setting of single-modality input and masked-reconstruction-based pretraining.

Combining Satellite and Weather Data for Crop Type Mapping: An Inverse Modelling Approach

Jan 29, 2024

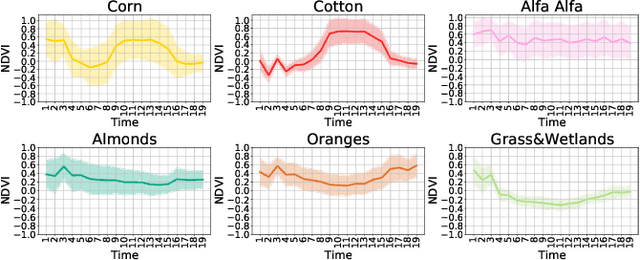

Abstract: Accurate and timely crop mapping is essential for yield estimation, insurance claims, and conservation efforts. Over the years, many successful machine learning models for crop mapping have been developed that use only the multi-spectral imagery from satellites to predict crop type over the area of interest. However, these traditional methods do not account for the physical processes that govern crop growth. At a high level, crop growth can be envisioned as physical parameters, such as weather and soil type, acting upon the plant, leading to crop growth that can be observed via satellites. In this paper, we propose Weather-based Spatio-Temporal segmentation network with ATTention (WSTATT), a deep learning model that leverages this understanding of crop growth by formulating it as an inverse model that combines weather (Daymet) and satellite imagery (Sentinel-2) to generate accurate crop maps. We show that our approach provides significant improvements over existing algorithms that rely solely on spectral imagery by comparing segmentation maps and F1 classification scores. Furthermore, effective use of attention in the WSTATT architecture enables detection of crop types earlier in the season (up to 5 months in advance), which is very useful for improving food supply projections. Finally, we discuss the impact of weather by correlating our results with crop phenology to show that WSTATT is able to capture physical properties of crop growth.
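The abstract above compares models by F1 classification scores. As a rough illustration of that metric only, and not of the authors' evaluation code, the sketch below computes per-class F1 over flattened pixel labels. The function name `per_class_f1` and the toy crop labels are assumptions made for this example.

```python
from collections import Counter


def per_class_f1(y_true, y_pred):
    """Return {class_label: F1} for two equal-length label sequences.

    F1 for a class is 2*TP / (2*TP + FP + FN), computed one-vs-rest.
    """
    if len(y_true) != len(y_pred):
        raise ValueError("label sequences must have the same length")

    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1   # predicted class gained a false positive
            fn[truth] += 1  # true class gained a false negative

    scores = {}
    for label in set(y_true) | set(y_pred):
        denom = 2 * tp[label] + fp[label] + fn[label]
        scores[label] = 2 * tp[label] / denom if denom else 0.0
    return scores


# Toy example: six pixels with hypothetical crop labels.
truth = ["corn", "corn", "rice", "rice", "rice", "almond"]
pred = ["corn", "rice", "rice", "rice", "corn", "almond"]
f1 = per_class_f1(truth, pred)
```

Per-class scores of this kind make it visible when a model does well on dominant crops but poorly on rare ones, which a single overall accuracy figure would hide.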

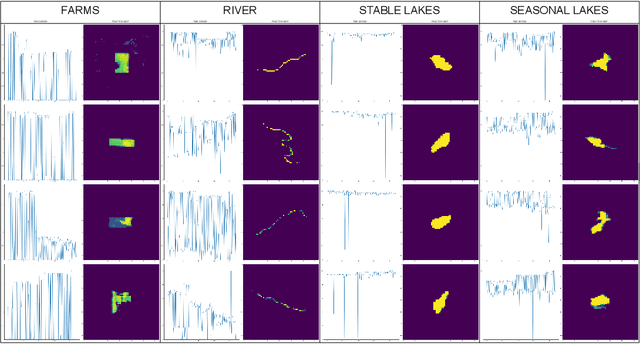

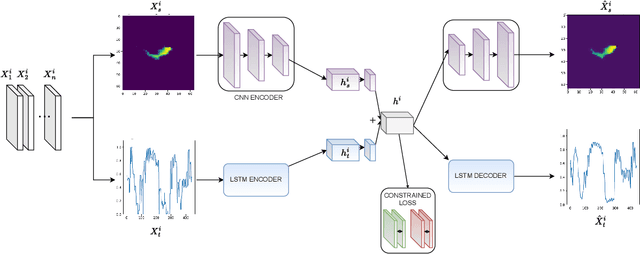

Spatiotemporal Classification with limited labels using Constrained Clustering for large datasets

Oct 14, 2022

Abstract: Creating separable representations via representation learning and clustering is critical in analyzing large unstructured datasets with only a few labels. Separable representations can lead to supervised models with better classification capabilities and additionally aid in generating new labeled samples. Most unsupervised and semi-supervised methods for analyzing large datasets do not leverage the existing small amounts of labels to get better representations. In this paper, we propose a spatiotemporal clustering paradigm that uses spatial and temporal features combined with a constrained loss to produce separable representations. We demonstrate this method on the newly published dataset ReaLSAT, a dataset of surface water dynamics for over 680,000 lakes across the world, making it an essential dataset in terms of ecology and sustainability. Using this large unlabeled dataset, we first show that a spatiotemporal representation is better than a purely spatial or purely temporal representation. We then show how we can learn an even better representation using a constrained loss with few labels. We conclude by showing how our method, using few labels, can pick out new labeled samples from the unlabeled data, which can be used to augment supervised methods, leading to better classification.

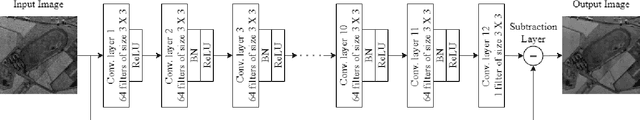

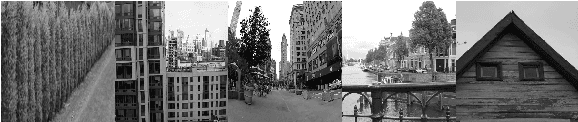

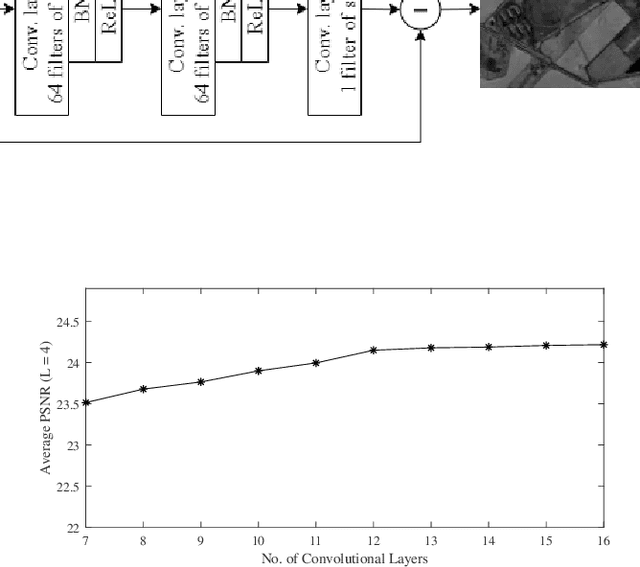

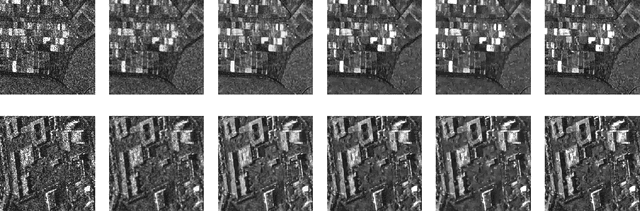

NeighCNN: A CNN based SAR Speckle Reduction using Feature preserving Loss Function

Aug 26, 2021

Abstract: Coherent imaging systems like synthetic aperture radar are susceptible to multiplicative noise that makes applications like automatic target recognition challenging. In this paper, NeighCNN, a deep learning-based speckle reduction algorithm that handles multiplicative noise with a relatively simple convolutional neural network architecture, is proposed. We have designed a loss function that is a unique weighted sum of Euclidean, neighbourhood, and perceptual losses for training the deep network. Euclidean and neighbourhood losses take pixel-level information into account, whereas perceptual loss considers high-level semantic features between two images. Various synthetic as well as real SAR images are used for testing the NeighCNN architecture, and the results verify the noise removal and edge preservation abilities of the proposed architecture. Performance metrics like peak signal-to-noise ratio, structural similarity index, and universal image quality index are used for evaluating the efficiency of the proposed architecture on synthetic images.
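To make the multiplicative noise model and one of the metrics named above concrete, here is a minimal sketch. It is not the NeighCNN pipeline. It assumes the common multi-look intensity speckle model, in which each pixel is multiplied by unit-mean gamma noise, and it computes peak signal-to-noise ratio for images scaled to [0, 1]. The names `add_speckle` and `psnr`, the number of looks, and the toy images are all illustrative assumptions.

```python
import math
import random


def add_speckle(image, looks=4, seed=0):
    """Multiply each pixel by gamma noise with shape=looks and mean 1.

    Assumed model: multi-look intensity speckle. Fewer looks means
    stronger noise.
    """
    rng = random.Random(seed)
    return [
        [px * rng.gammavariate(looks, 1.0 / looks) for px in row]
        for row in image
    ]


def psnr(reference, estimate, max_value=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(max_value^2 / MSE)."""
    squared_error = 0.0
    count = 0
    for ref_row, est_row in zip(reference, estimate):
        for ref_px, est_px in zip(ref_row, est_row):
            squared_error += (ref_px - est_px) ** 2
            count += 1
    mse = squared_error / count
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(max_value ** 2 / mse)


# Toy 8x8 constant image, a speckled copy, and a copy offset by 0.1.
clean = [[0.5] * 8 for _ in range(8)]
noisy = add_speckle(clean)
shifted = [[px + 0.1 for px in row] for row in clean]

psnr_noisy = psnr(clean, noisy)
psnr_shifted = psnr(clean, shifted)  # MSE is 0.01, so about 20 dB
```

Because the noise scales with the signal, brighter regions receive larger absolute perturbations, which is why additive-noise denoisers tend to transfer poorly to SAR imagery.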

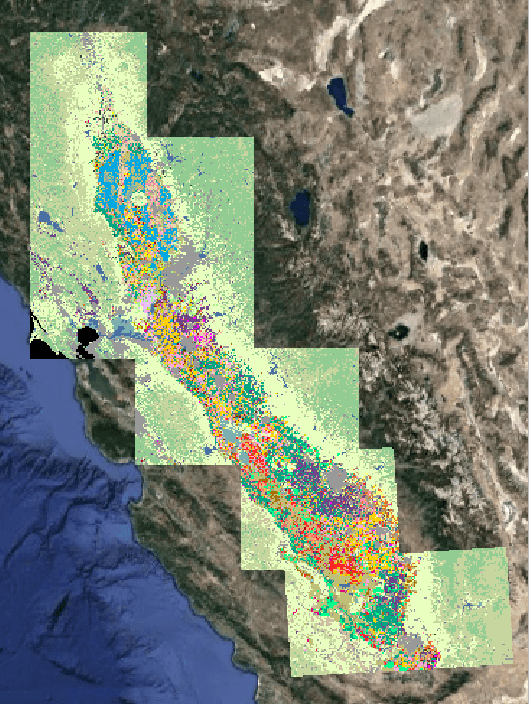

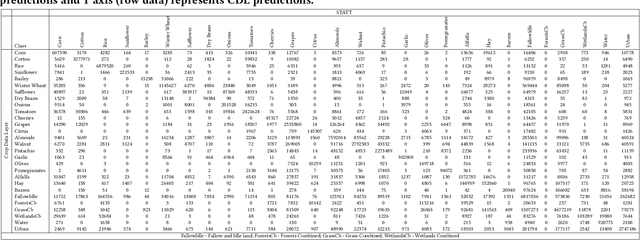

CalCROP21: A Georeferenced multi-spectral dataset of Satellite Imagery and Crop Labels

Jul 26, 2021

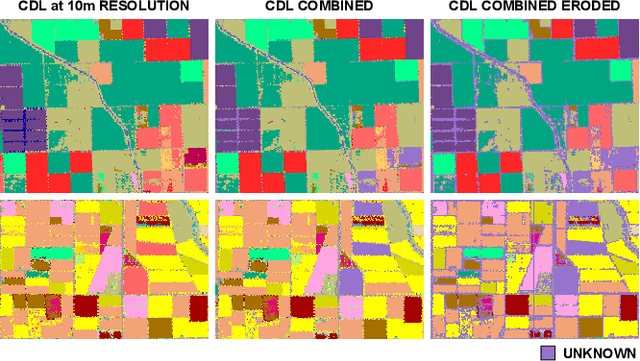

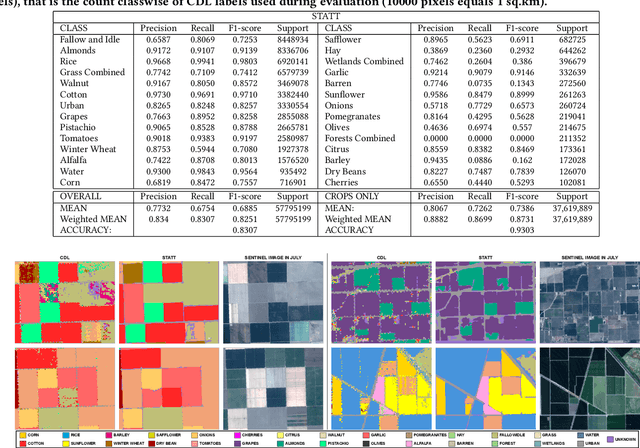

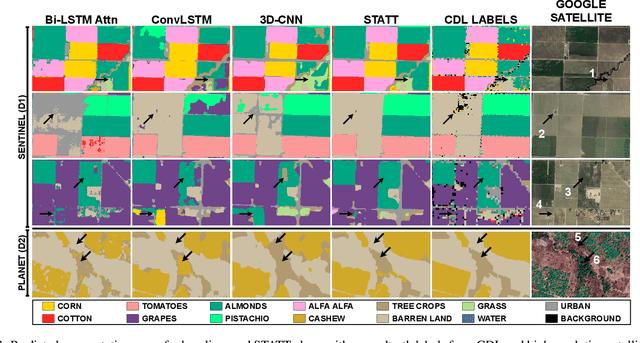

Abstract: Mapping and monitoring crops is a key step towards sustainable intensification of agriculture and addressing global food security. A dataset like ImageNet, which revolutionized computer vision applications, can accelerate the development of novel crop mapping techniques. Currently, the United States Department of Agriculture (USDA) annually releases the Cropland Data Layer (CDL), which contains crop labels at 30m resolution for the entire United States of America. While CDL is state of the art and is widely used for a number of agricultural applications, it has a number of limitations (e.g., pixelated errors, labels carried over from previous errors, and the absence of input imagery along with class labels). In this work, we create a new semantic segmentation benchmark dataset, which we call CalCROP21, for the diverse crops in the Central Valley region of California at 10m spatial resolution, using a robust Google Earth Engine-based image processing pipeline and a novel attention-based spatio-temporal semantic segmentation algorithm, STATT. STATT uses re-sampled (interpolated) CDL labels for training, but is able to generate a better prediction than CDL by leveraging spatial and temporal patterns in Sentinel-2 multi-spectral image series to effectively capture phenological differences among crops, and it uses attention to reduce the impact of clouds and other atmospheric disturbances. We also present a comprehensive evaluation to show that STATT has significantly better results when compared to the resampled CDL labels. We have released the dataset and the processing pipeline code for generating the benchmark dataset.

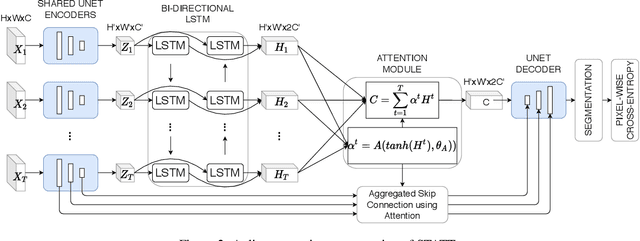

Attention-augmented Spatio-Temporal Segmentation for Land Cover Mapping

May 02, 2021

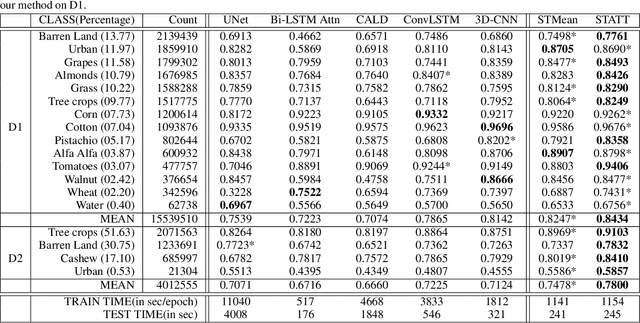

Abstract: The availability of massive earth-observing satellite data provides huge opportunities for land use and land cover mapping. However, such mapping efforts are challenging due to the existence of various land cover classes, noisy data, and the lack of proper labels. Also, each land cover class typically has its own unique temporal pattern and can be identified only during certain periods. In this article, we introduce a novel architecture that combines the UNet structure with a Bidirectional LSTM and an attention mechanism to jointly exploit the spatial and temporal nature of satellite data and to better identify the unique temporal patterns of each land cover class. We evaluate this method for mapping crops in multiple regions around the world. We compare our method with other state-of-the-art methods both quantitatively and qualitatively on two real-world datasets that involve multiple land cover classes. We also visualise the attention weights to study their effectiveness in mitigating noise and identifying discriminative time periods.
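The abstract does not specify how its attention scores are computed, so the following is only a generic sketch of attention pooling over time steps, assuming dot-product scoring against a single query vector that would normally be learned. It shows how softmax weights can down-weight some dates (for example, cloudy acquisitions) and emphasise discriminative ones. The names `softmax` and `attention_pool` and the toy feature vectors are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(features, query):
    """Pool T per-time-step feature vectors into one vector.

    features: list of T vectors (e.g. per-date encodings of one pixel).
    query:    scoring vector; assumed here to be given, learned in practice.
    Returns (pooled_vector, attention_weights).
    """
    scores = [sum(f * q for f, q in zip(step, query)) for step in features]
    weights = softmax(scores)
    dim = len(features[0])
    pooled = [
        sum(w * step[d] for w, step in zip(weights, features))
        for d in range(dim)
    ]
    return pooled, weights


# Toy example: three time steps with 2-dimensional features.
features = [[1.0, 0.0], [0.0, 1.0], [3.0, 0.0]]
query = [1.0, 0.0]
pooled, weights = attention_pool(features, query)
```

Plotting `weights` against acquisition dates is the kind of visualisation the abstract refers to when it studies which time periods the model relies on.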
