David Mulla

Towards Fine-Tuning-Based Site Calibration for Knowledge-Guided Machine Learning: A Summary of Results

Dec 17, 2025

Abstract:Accurate and cost-effective quantification of the agroecosystem carbon cycle at decision-relevant scales is essential for climate mitigation and sustainable agriculture. However, both transfer learning and the exploitation of spatial variability in this field are challenging, as they involve heterogeneous data and complex cross-scale dependencies. Conventional approaches often rely on location-independent parameterizations and independent training, underutilizing transfer learning and spatial heterogeneity in the inputs, and limiting their applicability in regions with substantial variability. We propose FTBSC-KGML (Fine-Tuning-Based Site Calibration-Knowledge-Guided Machine Learning), a pretraining- and fine-tuning-based, spatial-variability-aware, and knowledge-guided machine learning framework that augments KGML-ag with a pretraining-fine-tuning process and site-specific parameters. Using a pretraining-fine-tuning process with remote-sensing GPP, climate, and soil covariates collected across multiple Midwestern sites, FTBSC-KGML estimates land emissions while leveraging transfer learning and spatial heterogeneity. A key component is a spatial-heterogeneity-aware transfer-learning scheme, in which a globally pretrained model is fine-tuned at each state or site to learn place-aware representations, thereby improving local accuracy under limited data without sacrificing interpretability. Empirically, FTBSC-KGML achieves lower validation error and greater consistency in explanatory power than a purely global model, thereby better capturing spatial variability across states. This work extends the prior SDSA-KGML framework.

Combining Satellite and Weather Data for Crop Type Mapping: An Inverse Modelling Approach

Jan 29, 2024

Abstract:Accurate and timely crop mapping is essential for yield estimation, insurance claims, and conservation efforts. Over the years, many successful machine learning models for crop mapping have been developed that use just the multi-spectral imagery from satellites to predict crop type over the area of interest. However, these traditional methods do not account for the physical processes that govern crop growth. At a high level, crop growth can be envisioned as physical parameters, such as weather and soil type, acting upon the plant, leading to crop growth which can be observed via satellites. In this paper, we propose Weather-based Spatio-Temporal segmentation network with ATTention (WSTATT), a deep learning model that leverages this understanding of crop growth by formulating it as an inverse model that combines weather (Daymet) and satellite imagery (Sentinel-2) to generate accurate crop maps. We show that our approach provides significant improvements over existing algorithms that solely rely on spectral imagery by comparing segmentation maps and F1 classification scores. Furthermore, effective use of attention in the WSTATT architecture enables detection of crop types earlier in the season (up to 5 months in advance), which is very useful for improving food supply projections. Finally, we discuss the impact of weather by correlating our results with crop phenology to show that WSTATT is able to capture physical properties of crop growth.

CalCROP21: A Georeferenced multi-spectral dataset of Satellite Imagery and Crop Labels

Jul 26, 2021

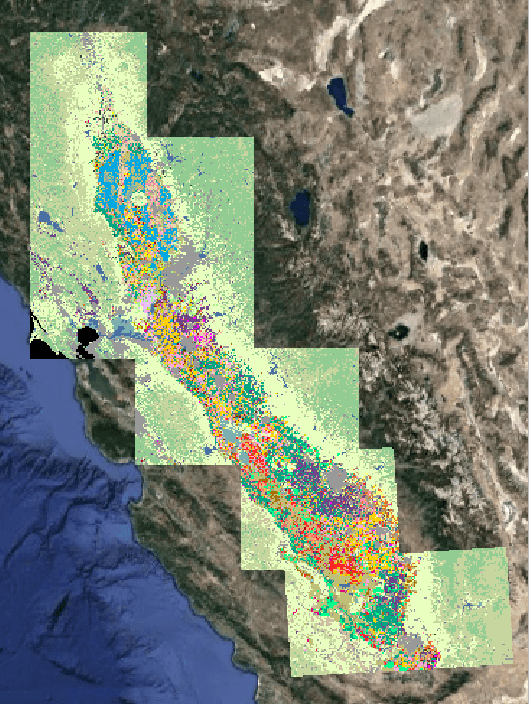

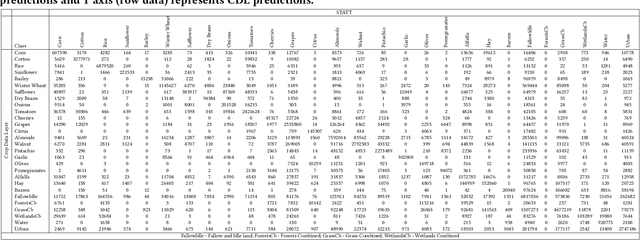

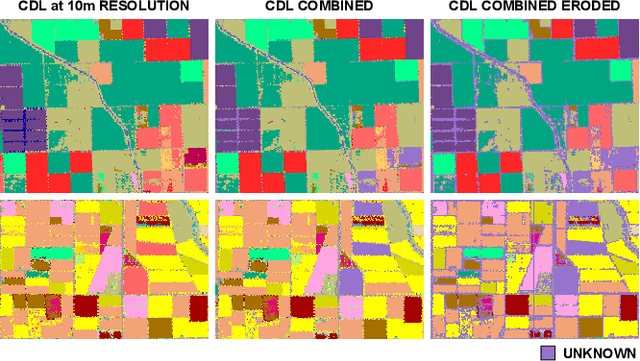

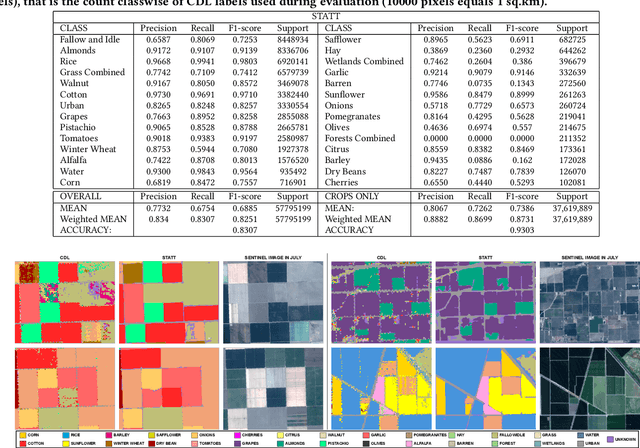

Abstract:Mapping and monitoring crops is a key step towards sustainable intensification of agriculture and addressing global food security. A dataset like ImageNet that revolutionized computer vision applications can accelerate development of novel crop mapping techniques. Currently, the United States Department of Agriculture (USDA) annually releases the Cropland Data Layer (CDL), which contains crop labels at 30 m resolution for the entire United States of America. While CDL is state of the art and is widely used for a number of agricultural applications, it has a number of limitations (e.g., pixelated errors, labels carried over from previous errors, and absence of input imagery along with class labels). In this work, we create a new semantic segmentation benchmark dataset, which we call CalCROP21, for the diverse crops in the Central Valley region of California at 10 m spatial resolution using a Google Earth Engine-based robust image processing pipeline and a novel attention-based spatio-temporal semantic segmentation algorithm, STATT. STATT uses re-sampled (interpolated) CDL labels for training, but is able to generate a better prediction than CDL by leveraging spatial and temporal patterns in Sentinel-2 multi-spectral image series to effectively capture phenologic differences amongst crops; it also uses attention to reduce the impact of clouds and other atmospheric disturbances. We also present a comprehensive evaluation to show that STATT has significantly better results when compared to the resampled CDL labels. We have released the dataset and the processing pipeline code for generating the benchmark dataset.
