Prateek Saxena

Attacking Byzantine Robust Aggregation in High Dimensions

Dec 22, 2023

Abstract:Training modern neural networks or models typically requires averaging over a sample of high-dimensional vectors. Poisoning attacks can skew or bias the average vectors used to train the model, forcing the model to learn specific patterns or avoid learning anything useful. Byzantine robust aggregation is a principled algorithmic defense against such biasing. Robust aggregators can bound the maximum bias in computing centrality statistics, such as mean, even when some fraction of inputs are arbitrarily corrupted. Designing such aggregators is challenging when dealing with high dimensions. However, the first polynomial-time algorithms with strong theoretical bounds on the bias have recently been proposed. Their bounds are independent of the number of dimensions, promising a conceptual limit on the power of poisoning attacks in their ongoing arms race against defenses. In this paper, we show a new attack called HIDRA on practical realization of strong defenses which subverts their claim of dimension-independent bias. HIDRA highlights a novel computational bottleneck that has not been a concern of prior information-theoretic analysis. Our experimental evaluation shows that our attacks almost completely destroy the model performance, whereas existing attacks with the same goal fail to have much effect. Our findings leave the arms race between poisoning attacks and provable defenses wide open.
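To make the notion of aggregation bias concrete, here is a minimal sketch, not taken from the paper, that compares a plain mean with a coordinate-wise median when 10% of the input vectors are corrupted. The coordinate-wise median is only a simple stand-in for a robust aggregator; it is not one of the dimension-independent aggregators the abstract refers to, and it is not the HIDRA attack. All names and parameter values (`dim`, `n_honest`, `n_bad`, the constant poison value) are illustrative assumptions.

```python
import random
import statistics


def mean_vector(vectors):
    """Plain coordinate-wise average of a list of equal-length vectors."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def coordinate_median(vectors):
    """Coordinate-wise median: a simple (assumed) robust stand-in."""
    dim = len(vectors[0])
    return [statistics.median(v[i] for v in vectors) for i in range(dim)]


def l2_norm(v):
    return sum(x * x for x in v) ** 0.5


rng = random.Random(0)
dim, n_honest, n_bad = 20, 90, 10  # hypothetical sizes: 10% corrupted inputs

# Honest inputs are drawn around the true mean, which is the zero vector.
honest = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_honest)]
# Corrupted inputs all push every coordinate in the same direction.
poisoned = [[100.0] * dim for _ in range(n_bad)]
inputs = honest + poisoned

# Bias = distance of the aggregate from the true mean (the origin here).
mean_bias = l2_norm(mean_vector(inputs))
median_bias = l2_norm(coordinate_median(inputs))

print(f"bias of plain mean:        {mean_bias:.2f}")
print(f"bias of coordinate median: {median_bias:.2f}")
```

In this toy setting the plain mean is dragged far from the origin while the median stays close to it. Note that the median's per-coordinate error still accumulates across coordinates, which is one reason dimension-independent bounds are the harder goal.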

RETEXO: Scalable Neural Network Training over Distributed Graphs

Mar 08, 2023

Abstract:Graph neural networks offer a promising approach to supervised learning over graph data. Graph data, especially when it is privacy-sensitive or too large to train on centrally, is often stored partitioned across disparate processing units (clients) which want to minimize the communication costs during collaborative training. The fully-distributed setup takes such partitioning to its extreme, wherein features of only a single node and its adjacent edges are kept locally with one client processor. Existing GNNs are not architected for training in such setups and incur prohibitive costs therein. We propose RETEXO, a novel transformation of existing GNNs that improves the communication efficiency during training in the fully-distributed setup. We experimentally confirm that RETEXO offers up to 6 orders of magnitude better communication efficiency even when training shallow GNNs, with a minimal trade-off in accuracy for supervised node classification tasks.

Membership Inference Attacks and Generalization: A Causal Perspective

Sep 18, 2022

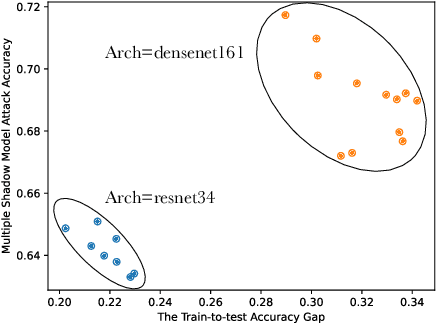

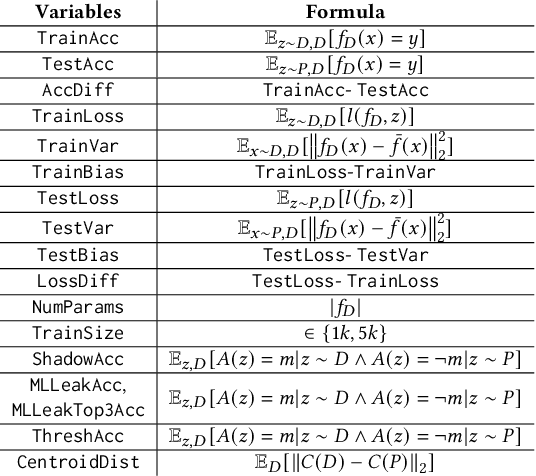

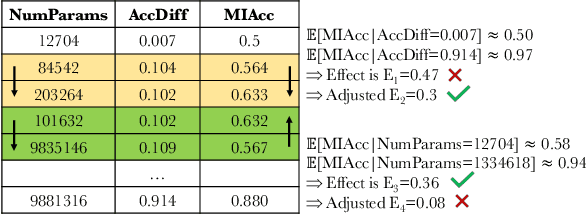

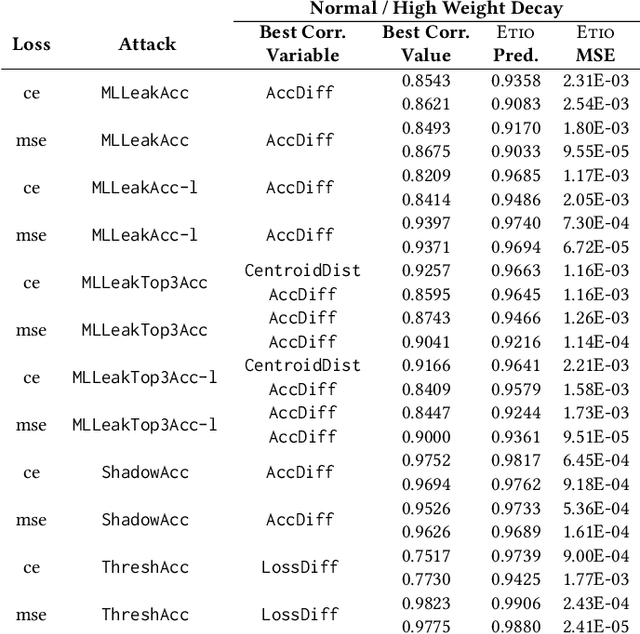

Abstract:Membership inference (MI) attacks highlight a privacy weakness in present stochastic training methods for neural networks. It is not well understood, however, why they arise. Are they a natural consequence of imperfect generalization only? Which underlying causes should we address during training to mitigate these attacks? Towards answering such questions, we propose the first approach to explain MI attacks and their connection to generalization based on principled causal reasoning. We offer causal graphs that quantitatively explain the observed MI attack performance achieved for $6$ attack variants. We refute several prior non-quantitative hypotheses that over-simplify or over-estimate the influence of underlying causes, thereby failing to capture the complex interplay between several factors. Our causal models also show a new connection between generalization and MI attacks via their shared causal factors. Our causal models have high predictive power ($0.90$), i.e., their analytical predictions often match observations in unseen experiments, which makes analysis via them a pragmatic alternative.

LPGNet: Link Private Graph Networks for Node Classification

May 06, 2022

Abstract:Classification tasks on labeled graph-structured data have many important applications ranging from social recommendation to financial modeling. Deep neural networks are increasingly being used for node classification on graphs, wherein nodes with similar features have to be given the same label. Graph convolutional networks (GCNs) are one such widely studied neural network architecture that perform well on this task. However, powerful link-stealing attacks on GCNs have recently shown that even with black-box access to the trained model, inferring which links (or edges) are present in the training graph is practical. In this paper, we present a new neural network architecture called LPGNet for training on graphs with privacy-sensitive edges. LPGNet provides differential privacy (DP) guarantees for edges using a novel design for how graph edge structure is used during training. We empirically show that LPGNet models often lie in the sweet spot between providing privacy and utility: They can offer better utility than "trivially" private architectures which use no edge information (e.g., vanilla MLPs) and better resilience against existing link-stealing attacks than vanilla GCNs which use the full edge structure. LPGNet also offers consistently better privacy-utility tradeoffs than DPGCN, which is the state-of-the-art mechanism for retrofitting differential privacy into conventional GCNs, in most of our evaluated datasets.

EPIE Dataset: A Corpus For Possible Idiomatic Expressions

Jun 16, 2020

Abstract:Idiomatic expressions have always been a bottleneck for language comprehension and natural language understanding, specifically for tasks like Machine Translation (MT). MT systems predominantly produce literal translations of idiomatic expressions as they do not exhibit generic and linguistically deterministic patterns which can be exploited for comprehension of the non-compositional meaning of the expressions. These expressions occur in parallel corpora used for training, but due to the comparatively high occurrences of the constituent words of idiomatic expressions in literal context, the idiomatic meaning gets overpowered by the compositional meaning of the expression. State-of-the-art Metaphor Detection Systems are able to detect non-compositional usage at word level but miss out on idiosyncratic phrasal idiomatic expressions. This creates a dire need for a dataset with a wider coverage and higher occurrence of commonly occurring idiomatic expressions, the spans of which can be used for Metaphor Detection. With this in mind, we present our English Possible Idiomatic Expressions (EPIE) corpus containing 25206 sentences labelled with lexical instances of 717 idiomatic expressions. These spans also cover literal usages for the given set of idiomatic expressions. We also present the utility of our dataset by using it to train a sequence labelling module and testing on three independent datasets with high accuracy, precision and recall scores.

Scalable Quantitative Verification For Deep Neural Networks

Feb 17, 2020

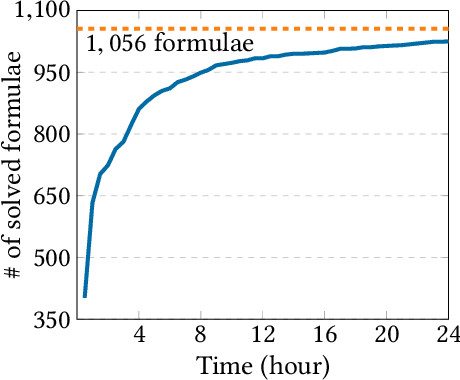

Abstract:Verifying security properties of deep neural networks (DNNs) is becoming increasingly important. This paper introduces a new quantitative verification framework for DNNs that can decide, with user-specified confidence, whether a given logical property $\psi$ defined over the space of inputs of the given DNN holds for less than a user-specified threshold, $\theta$. We present new algorithms that are scalable to large real-world models as well as proven to be sound. Our approach requires only black-box access to the models. Further, it certifies properties of both deterministic and non-deterministic DNNs. We implement our approach in a tool called PROVERO. We apply PROVERO to the problem of certifying adversarial robustness. In this context, PROVERO provides an attack-agnostic measure of robustness for a given DNN and a test input. First, we find that this metric has a strong statistical correlation with perturbation bounds reported by 2 of the most prominent white-box attack strategies today. Second, we show that PROVERO can quantitatively certify robustness with high confidence in cases where the state-of-the-art qualitative verification tool (ERAN) fails to produce conclusive results. Thus, quantitative verification scales easily to large DNNs.
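The idea of deciding, with a chosen confidence and only black-box access, whether a property holds on less than a threshold fraction of inputs can be illustrated with a textbook sampling scheme. The sketch below uses a fixed-sample Hoeffding bound; it is not PROVERO's algorithm, which the abstract does not describe. The toy model, the property, and the parameters `eps`, `delta`, and `theta` are all hypothetical.

```python
import math
import random


def samples_needed(eps, delta):
    """Hoeffding bound: samples so that |estimate - truth| <= eps
    holds with probability at least 1 - delta."""
    return math.ceil(math.log(2.0 / delta) / (2.0 * eps ** 2))


def estimate_fraction(model, prop, sample_input, eps, delta, rng):
    """Estimate the fraction of inputs whose model output satisfies prop,
    querying the model only as a black box."""
    n = samples_needed(eps, delta)
    hits = sum(1 for _ in range(n) if prop(model(sample_input(rng))))
    return hits / n, n


def holds_below_threshold(estimate, eps, theta):
    """Conservative decision: accept only if even the upper end of the
    confidence interval stays below theta."""
    return estimate + eps < theta


# Hypothetical black-box "model": outputs 1 on roughly 10% of uniform inputs.
def toy_model(x):
    return 1 if x > 0.9 else 0


rng = random.Random(0)
eps, delta, theta = 0.05, 0.01, 0.2  # illustrative tolerance, confidence, threshold

estimate, n = estimate_fraction(
    toy_model,
    lambda y: y == 1,          # property psi: "the output is 1"
    lambda r: r.random(),      # input distribution: uniform on [0, 1)
    eps,
    delta,
    rng,
)
decision = holds_below_threshold(estimate, eps, theta)
print(f"samples={n}, estimate={estimate:.3f}, below theta: {decision}")
```

A fixed sample size like this is simple but wasteful when the true fraction is far from the threshold; adaptive schemes can stop earlier, which is the kind of scalability concern the abstract alludes to.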

Quantitative Verification of Neural Networks And its Security Applications

Jun 25, 2019

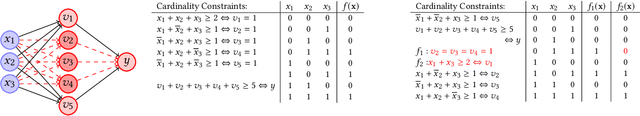

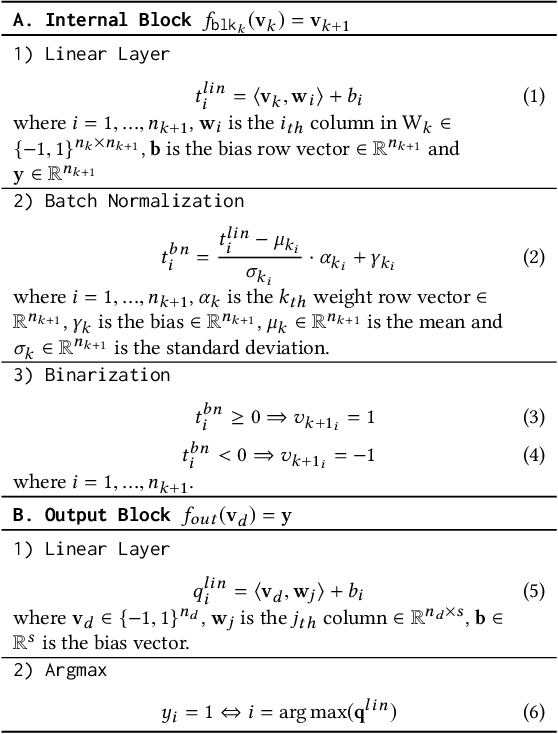

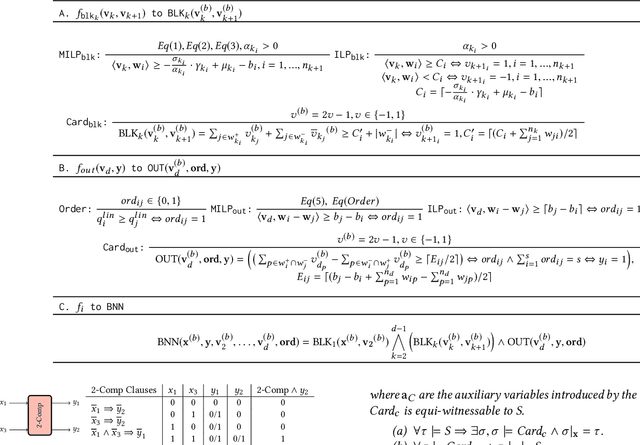

Abstract:Neural networks are increasingly employed in safety-critical domains. This has prompted interest in verifying or certifying logically encoded properties of neural networks. Prior work has largely focused on checking existential properties, wherein the goal is to check whether there exists any input that violates a given property of interest. However, neural network training is a stochastic process, and many questions arising in their analysis require probabilistic and quantitative reasoning, i.e., estimating how many inputs satisfy a given property. To this end, our paper proposes a novel and principled framework for quantitative verification of logical properties specified over neural networks. Our framework is the first to provide PAC-style soundness guarantees, in that its quantitative estimates are within a controllable and bounded error from the true count. We instantiate our algorithmic framework by building a prototype tool called NPAQ that enables checking rich properties over binarized neural networks. We show how emerging security analyses can utilize our framework in 3 concrete point applications: quantifying robustness to adversarial inputs, efficacy of trojan attacks, and fairness/bias of given neural networks.
