Philip Becker-Ehmck

Latent Action World Models for Control with Unlabeled Trajectories

Dec 10, 2025

Abstract:Inspired by how humans combine direct interaction with action-free experience (e.g., videos), we study world models that learn from heterogeneous data. Standard world models typically rely on action-conditioned trajectories, which limits effectiveness when action labels are scarce. We introduce a family of latent-action world models that jointly use action-conditioned and action-free data by learning a shared latent action representation. This latent space aligns observed control signals with actions inferred from passive observations, enabling a single dynamics model to train on large-scale unlabeled trajectories while requiring only a small set of action-labeled ones. We use the latent-action world model to learn a latent-action policy through offline reinforcement learning (RL), thereby bridging two traditionally separate domains: offline RL, which typically relies on action-conditioned data, and action-free training, which is rarely used with subsequent RL. On the DeepMind Control Suite, our approach achieves strong performance while using about an order of magnitude fewer action-labeled samples than purely action-conditioned baselines. These results show that latent actions enable training on both passive and interactive data, which makes world models learn more efficiently.

Constrained Latent Action Policies for Model-Based Offline Reinforcement Learning

Nov 07, 2024

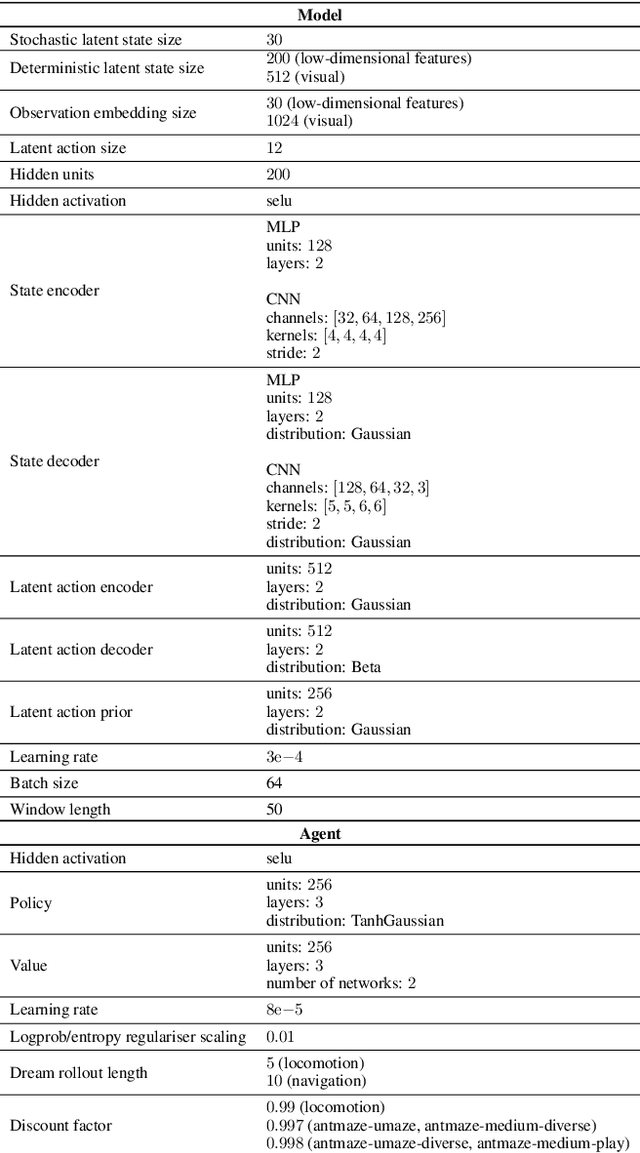

Abstract:In offline reinforcement learning, a policy is learned using a static dataset in the absence of costly feedback from the environment. In contrast to the online setting, only using static datasets poses additional challenges, such as policies generating out-of-distribution samples. Model-based offline reinforcement learning methods try to overcome these by learning a model of the underlying dynamics of the environment and using it to guide policy search. This is beneficial, but with limited datasets, errors in the model and the issue of value overestimation among out-of-distribution states can worsen performance. Current model-based methods apply some notion of conservatism to the Bellman update, often implemented using uncertainty estimation derived from model ensembles. In this paper, we propose Constrained Latent Action Policies (C-LAP), which learns a generative model of the joint distribution of observations and actions. We cast policy learning as a constrained objective to always stay within the support of the latent action distribution, and use the generative capabilities of the model to impose an implicit constraint on the generated actions. This eliminates the need to use additional uncertainty penalties on the Bellman update and significantly decreases the number of gradient steps required to learn a policy. We empirically evaluate C-LAP on the D4RL and V-D4RL benchmarks, and show that C-LAP is competitive with state-of-the-art methods, outperforming them especially on datasets with visual observations.

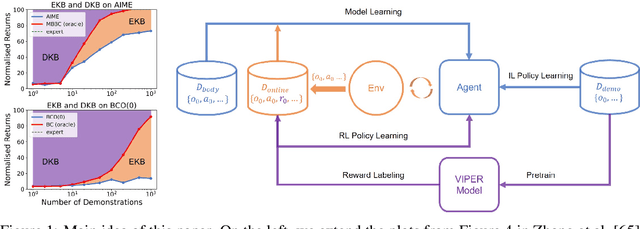

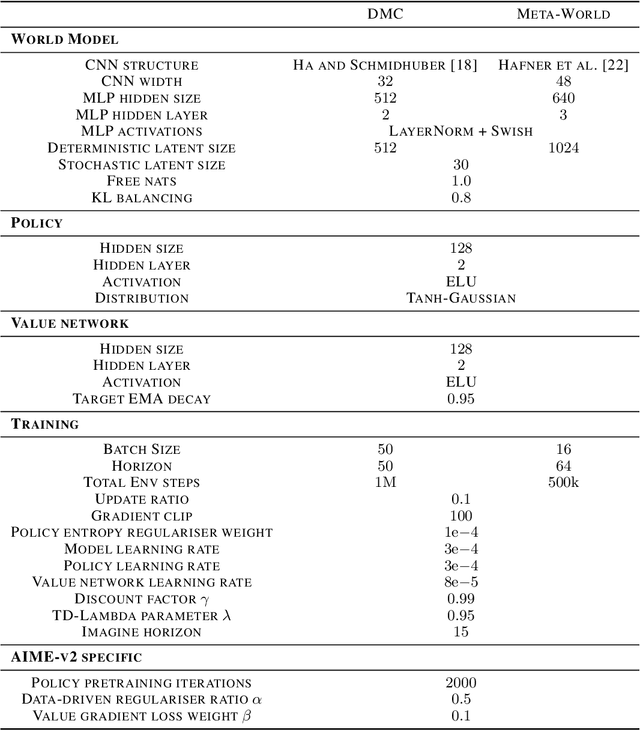

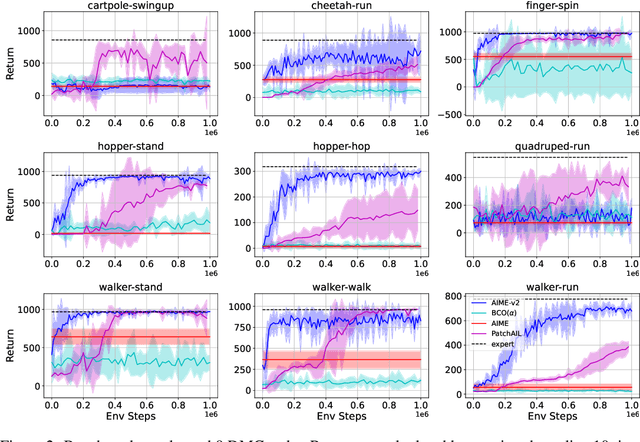

Overcoming Knowledge Barriers: Online Imitation Learning from Observation with Pretrained World Models

Apr 29, 2024

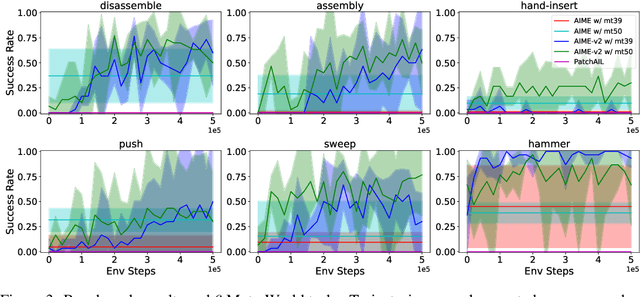

Abstract:Incorporating the successful paradigm of pretraining and finetuning from Computer Vision and Natural Language Processing into decision-making has become increasingly popular in recent years. In this paper, we study Imitation Learning from Observation with pretrained models and find that existing approaches such as BCO and AIME face knowledge barriers, specifically the Embodiment Knowledge Barrier (EKB) and the Demonstration Knowledge Barrier (DKB), which greatly limit their performance. The EKB arises when pretrained models lack knowledge about unseen observations, leading to errors in action inference. The DKB results from policies trained on limited demonstrations, hindering adaptability to diverse scenarios. We thoroughly analyse the underlying mechanism of these barriers and propose AIME-v2, which builds on AIME, as a solution. AIME-v2 uses online interactions with a data-driven regulariser to alleviate the EKB and mitigates the DKB by introducing a surrogate reward function to enhance policy training. Experimental results on tasks from the DeepMind Control Suite and Meta-World benchmarks demonstrate the effectiveness of these modifications in improving both sample-efficiency and converged performance. The study contributes valuable insights into resolving knowledge barriers for enhanced decision-making in pretraining-based approaches. Code will be available at https://github.com/argmax-ai/aime-v2.

Action Inference by Maximising Evidence: Zero-Shot Imitation from Observation with World Models

Dec 04, 2023

Abstract:Unlike most reinforcement learning agents, which require an unrealistic amount of environment interactions to learn a new behaviour, humans excel at learning quickly by merely observing and imitating others. This ability highly depends on the fact that humans have a model of their own embodiment that allows them to infer the most likely actions that led to the observed behaviour. In this paper, we propose Action Inference by Maximising Evidence (AIME) to replicate this behaviour using world models. AIME consists of two distinct phases. In the first phase, the agent learns a world model from its past experience to understand its own body by maximising the ELBO. In the second phase, the agent is given some observation-only demonstrations of an expert performing a novel task and tries to imitate the expert's behaviour. AIME achieves this by defining a policy as an inference model and maximising the evidence of the demonstration under the policy and world model. Our method is "zero-shot" in the sense that it does not require further training for the world model or online interactions with the environment after being given the demonstration. We empirically validate the zero-shot imitation performance of our method on the Walker and Cheetah embodiments of the DeepMind Control Suite and find it outperforms the state-of-the-art baselines. Code is available at: https://github.com/argmax-ai/aime.

Learning to Fly via Deep Model-Based Reinforcement Learning

Mar 19, 2020

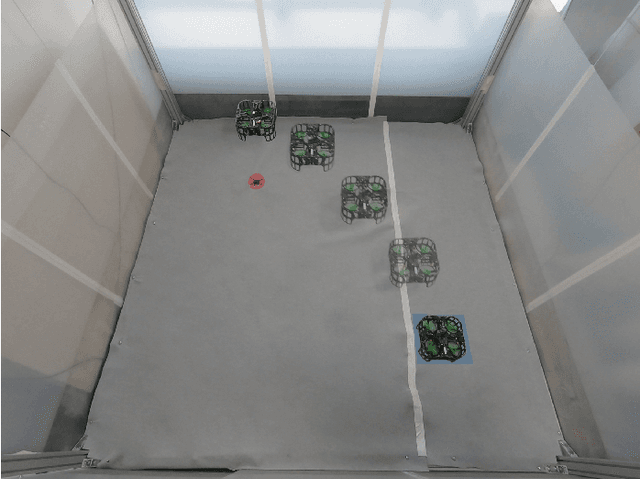

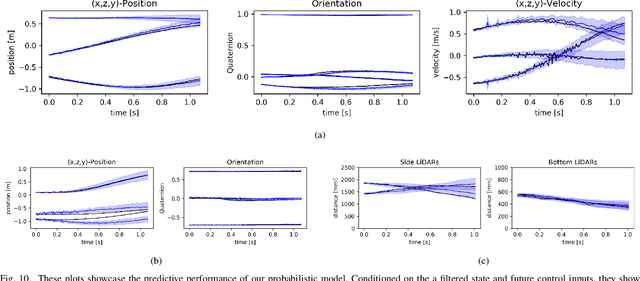

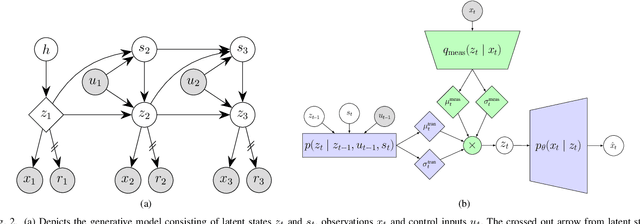

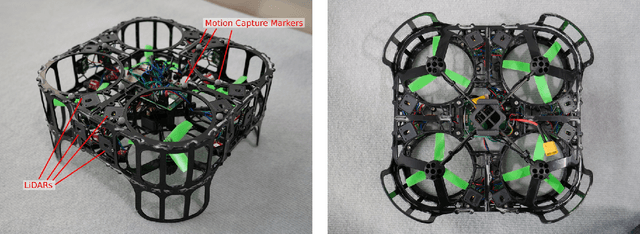

Abstract:Learning to control robots without requiring models has been a long-term goal, promising diverse and novel applications. Yet, reinforcement learning has only achieved limited impact on real-time robot control due to its high demand for real-world interactions. In this work, by leveraging a learnt probabilistic model of drone dynamics, we achieve human-like quadrotor control through model-based reinforcement learning. No prior knowledge of the flight dynamics is assumed; instead, a sequential latent variable model, used generatively and as an online filter, is learnt from raw sensory input. The controller and value function are optimised entirely by propagating stochastic analytic gradients through generated latent trajectories. We show that "learning to fly" can be achieved with less than 30 minutes of experience with a single drone, and can be deployed solely using onboard computational resources and sensors, on a self-built drone.

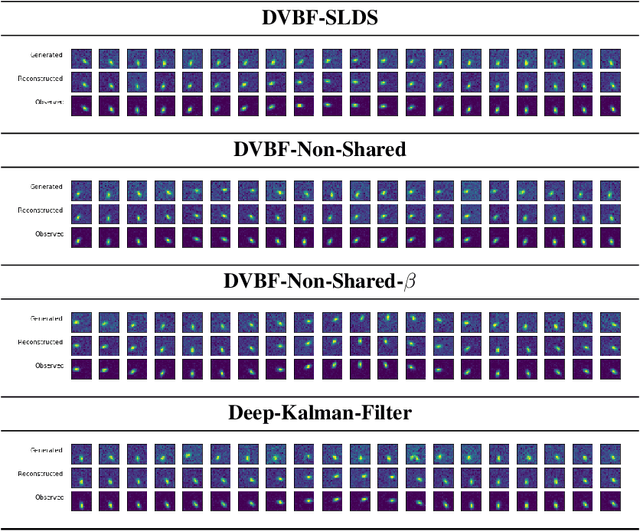

Beta DVBF: Learning State-Space Models for Control from High Dimensional Observations

Nov 02, 2019

Abstract:Learning a model of dynamics from high-dimensional images can be a core ingredient for success in many applications across different domains, especially in sequential decision making. However, currently prevailing methods based on latent-variable models are limited to working with low resolution images only. In this work, we show that some of the issues with using high-dimensional observations arise from the discrepancy between the dimensionality of the latent and observable space, and propose solutions to overcome them.

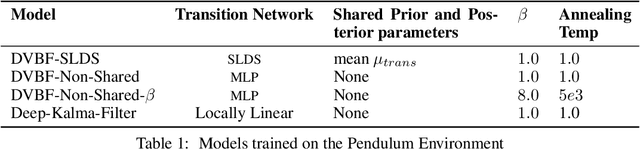

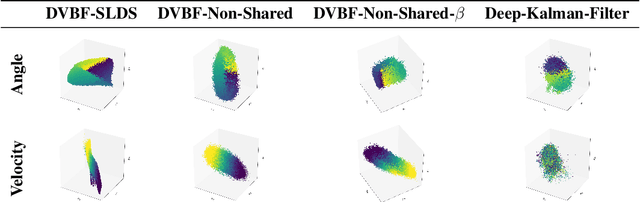

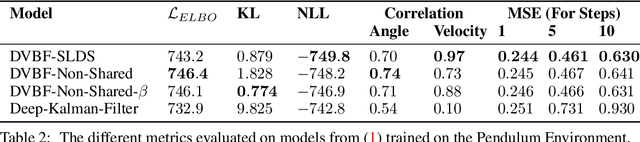

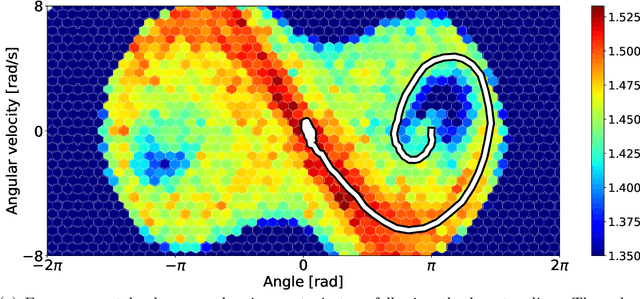

Switching Linear Dynamics for Variational Bayes Filtering

May 29, 2019

Abstract:System identification of complex and nonlinear systems is a central problem for model predictive control and model-based reinforcement learning. Despite their complexity, such systems can often be approximated well by a set of linear dynamical systems if broken into appropriate subsequences. This mechanism not only helps us find good approximations of dynamics, but also gives us deeper insight into the underlying system. Leveraging Bayesian inference, Variational Autoencoders and Concrete relaxations, we show how to learn a richer and more meaningful state space, e.g. encoding joint constraints and collisions with walls in a maze, from partial and high-dimensional observations. This representation translates into a gain of accuracy of learned dynamics showcased on various simulated tasks.

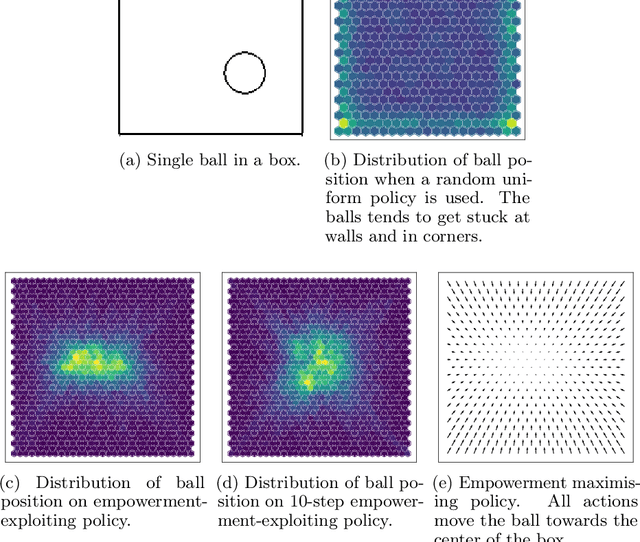

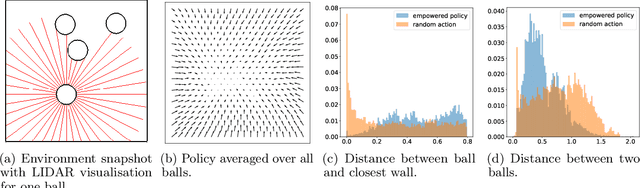

Unsupervised Real-Time Control through Variational Empowerment

Oct 13, 2017

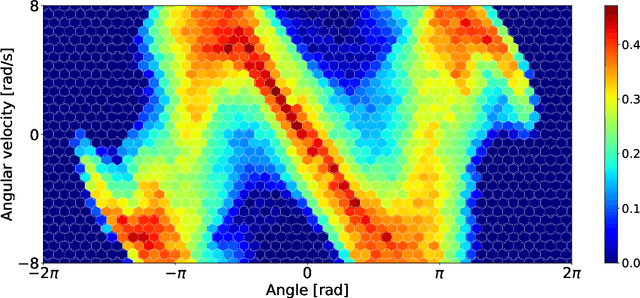

Abstract:We introduce a methodology for efficiently computing a lower bound to empowerment, allowing it to be used as an unsupervised cost function for policy learning in real-time control. Empowerment, being the channel capacity between actions and states, maximises the influence of an agent on its near future. It has been shown to be a good model of biological behaviour in the absence of an extrinsic goal. But empowerment is also prohibitively hard to compute, especially in nonlinear continuous spaces. We introduce an efficient, amortised method for learning empowerment-maximising policies. We demonstrate that our algorithm can reliably handle continuous dynamical systems using system dynamics learned from raw data. The resulting policies consistently drive the agents into states where they can use their full potential.
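
For orientation, the quantity described in this abstract can be written out as follows. This is a sketch of the standard textbook definition and of a commonly used variational bound, not necessarily the exact formulation of the paper; the symbols $\omega$ (the source distribution over actions), $q$ (a learned inverse model), and $p$ (the transition model) are notation assumed here for illustration.

$$
\mathcal{E}(s) \;=\; \max_{\omega}\; I(A; S' \mid s)
\;\geq\; \max_{\omega,\, q}\; \mathbb{E}_{\omega(a \mid s)\, p(s' \mid s, a)}\big[\ln q(a \mid s, s') - \ln \omega(a \mid s)\big]
$$

The inequality holds because replacing the true posterior over actions with any approximation $q$ can only lower the mutual information estimate, which is what makes the right-hand side usable as a training objective.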
