Peter Protzel

FETCH: A Memory-Efficient Replay Approach for Continual Learning in Image Classification

Jul 17, 2024

Abstract:Class-incremental continual learning is an important area of research, as static deep learning methods fail to adapt to changing tasks and data distributions. In previous works, promising results were achieved using replay and compressed replay techniques. In the field of regular replay, GDumb achieved outstanding results but requires a large amount of memory. This problem can be addressed by compressed replay techniques. The goal of this work is to evaluate compressed replay in the pipeline of GDumb. We propose FETCH, a two-stage compression approach. First, the samples from the continual datastream are encoded by the early layers of a pre-trained neural network. Second, the samples are compressed before being stored in the episodic memory. Following GDumb, the remaining classification head is trained from scratch using only the decompressed samples from the replay memory. We evaluate FETCH in different scenarios and show that this approach can increase accuracy on CIFAR10 and CIFAR100. In our experiments, simple compression methods (e.g., quantization of tensors) outperform deep autoencoders. In the future, FETCH could serve as a baseline for benchmarking compressed replay learning in constrained memory scenarios.

* Submitted Version for IDEAL 2023
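
The FETCH abstract names quantization of tensors as an example of a simple compression method. The following is a minimal sketch of that idea, assuming NumPy and uniform 8-bit quantization with a single per-tensor offset and scale. The function names, the feature shape, and the scaling scheme are illustrative assumptions and are not taken from the FETCH implementation.

```python
import numpy as np

def quantize_uint8(features):
    """Map a float tensor to uint8 codes plus the offset and scale needed to undo it."""
    lo = float(features.min())
    hi = float(features.max())
    # Guard against a constant tensor, where hi == lo.
    scale = (hi - lo) / 255.0 if hi > lo else 1.0
    codes = np.round((features - lo) / scale).astype(np.uint8)
    return codes, lo, scale

def dequantize_uint8(codes, lo, scale):
    """Approximate reconstruction of the original float tensor."""
    return codes.astype(np.float32) * scale + lo

# Stand-in for encoder outputs (hypothetical shape: batch, channels, height, width).
rng = np.random.default_rng(0)
features = rng.normal(size=(4, 16, 8, 8)).astype(np.float32)

codes, lo, scale = quantize_uint8(features)
restored = dequantize_uint8(codes, lo, scale)
max_error = float(np.abs(features - restored).max())

print("bytes before:", features.nbytes, "bytes after:", codes.nbytes)
print("max reconstruction error:", max_error)
```

In this sketch the stored codes need a quarter of the memory of the float32 features, and the reconstruction error is bounded by about half a quantization step.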

Towards Revisiting Visual Place Recognition for Joining Submaps in Multimap SLAM

Jul 17, 2024

Abstract:Visual SLAM is a key technology for many autonomous systems. However, tracking loss can lead to the creation of disjoint submaps in multimap SLAM systems like ORB-SLAM3. Because of that, these systems employ submap merging strategies. As we show, these strategies are not always successful. In this paper, we investigate the impact of using modern VPR approaches for submap merging in visual SLAM. We argue that classical evaluation metrics are not sufficient to estimate the impact of a modern VPR component on the overall system. We show that naively replacing the VPR component does not leverage its full potential without requiring substantial interference in the original system. Because of that, we present a post-processing pipeline along with a set of metrics that allow us to estimate the impact of modern VPR components. We evaluate our approach on the NCLT and Newer College datasets using ORB-SLAM3 with NetVLAD and HDC-DELF as VPR components. Additionally, we present a simple approach for combining VPR with temporal consistency for map merging. We show that the map merging performance of ORB-SLAM3 can be improved. Building on these results, researchers in VPR can assess the potential of their approaches for SLAM systems.

Local positional graphs and attentive local features for a data and runtime-efficient hierarchical place recognition pipeline

Mar 15, 2024

Abstract:Large-scale applications of Visual Place Recognition (VPR) require computationally efficient approaches. Further, a well-balanced combination of data-based and training-free approaches can decrease the required amount of training data and effort and can reduce the influence of distribution shifts between the training and application phases. This paper proposes a runtime and data-efficient hierarchical VPR pipeline that extends existing approaches and presents novel ideas. There are three main contributions: First, we propose Local Positional Graphs (LPG), a training-free and runtime-efficient approach to encode spatial context information of local image features. LPG can be combined with existing local feature detectors and descriptors and considerably improves the image-matching quality compared to existing techniques in our experiments. Second, we present Attentive Local SPED (ATLAS), an extension of our previous local features approach with an attention module that improves the feature quality while maintaining high data efficiency. The influence of the proposed modifications is evaluated in an extensive ablation study. Third, we present a hierarchical pipeline that exploits hyperdimensional computing to use the same local features as holistic HDC-descriptors for fast candidate selection and for candidate reranking. We combine all contributions in a runtime and data-efficient VPR pipeline that shows benefits over the state-of-the-art method Patch-NetVLAD on a large collection of standard place recognition datasets with 15$\%$ better performance in VPR accuracy, 54$\times$ faster feature comparison speed, and 55$\times$ less descriptor storage occupancy, making our method promising for real-world high-performance large-scale VPR in changing environments. Code will be made available with publication of this paper.

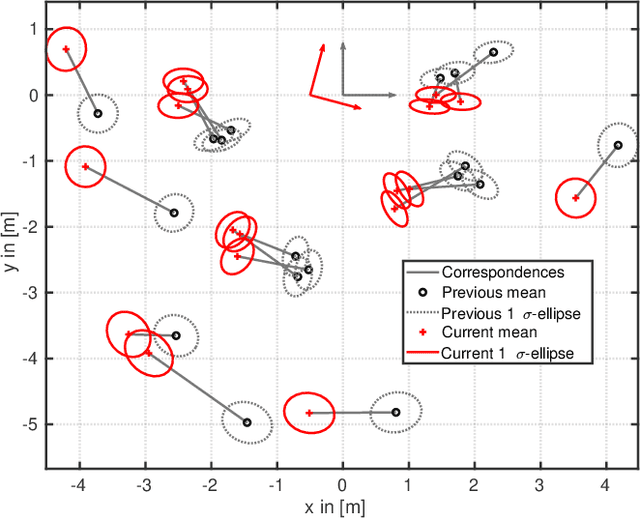

A Credible and Robust approach to Ego-Motion Estimation using an Automotive Radar

Apr 19, 2022

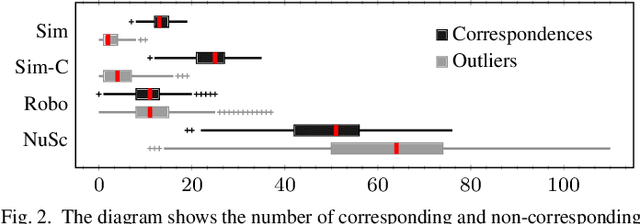

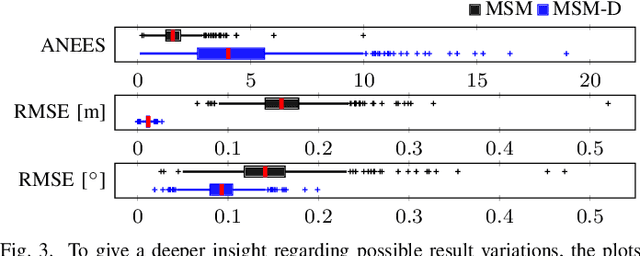

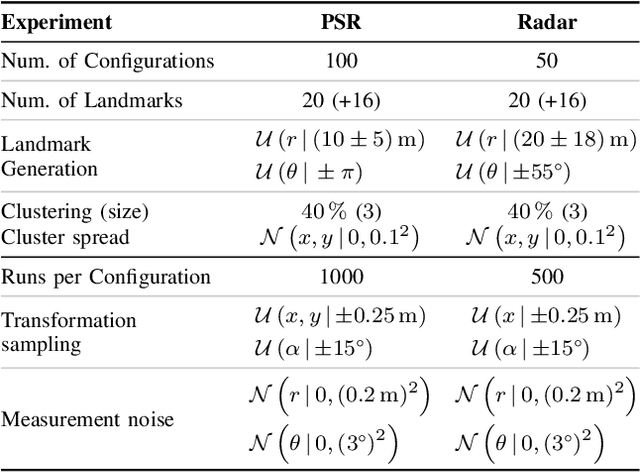

Abstract:Consistent motion estimation is fundamental for all mobile autonomous systems. While this sounds like an easy task, it often is not, because changing environmental conditions affect odometry obtained from vision, Lidar, or the wheels themselves. Unsusceptible to challenging lighting and weather conditions, radar sensors are an obvious alternative. Usually, automotive radars return a sparse point cloud representing the surroundings. Utilizing this information for motion estimation is challenging due to unstable and phantom measurements, which result in a high rate of outliers. We introduce a credible and robust probabilistic approach to estimate the ego-motion based on these challenging radar measurements, intended to be used within a loosely-coupled sensor fusion framework. Compared to existing solutions, evaluated on the popular nuScenes dataset and others, we show that our proposed algorithm is more credible while not depending on explicit correspondence calculation.

HDC-MiniROCKET: Explicit Time Encoding in Time Series Classification with Hyperdimensional Computing

Feb 16, 2022

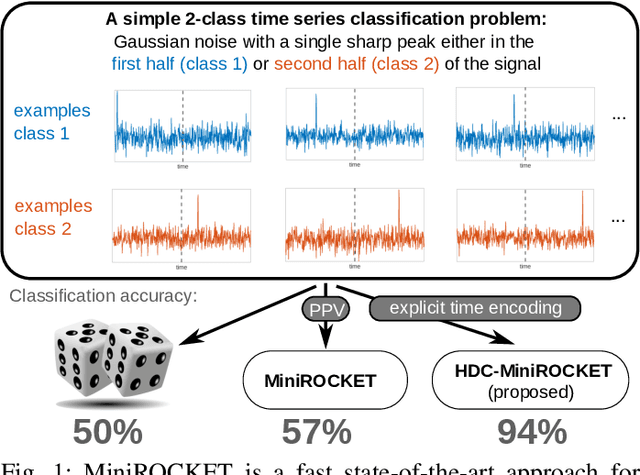

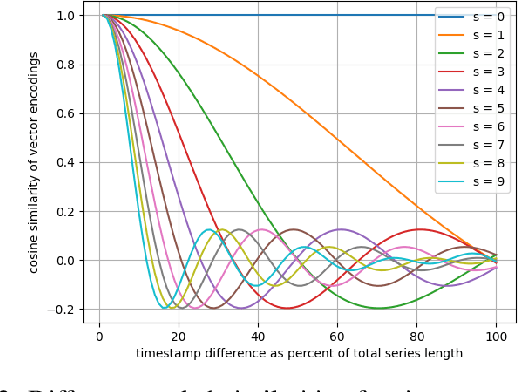

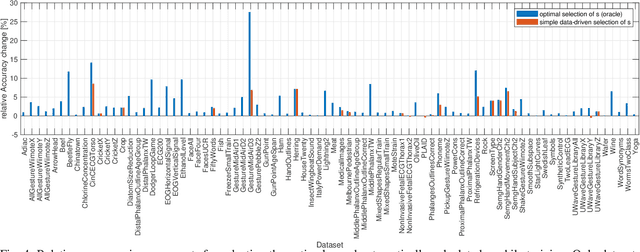

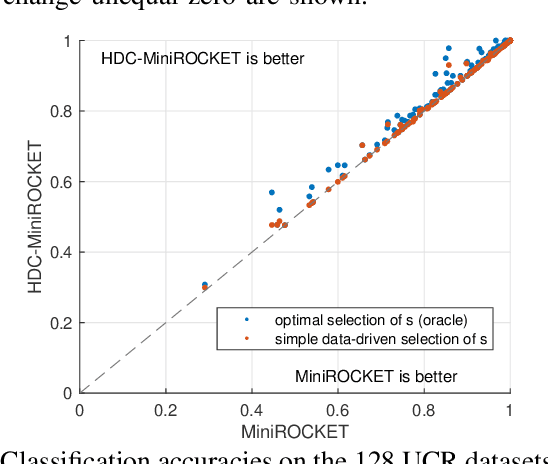

Abstract:Classification of time series data is an important task for many application domains. One of the best existing methods for this task, in terms of accuracy and computation time, is MiniROCKET. In this work, we extend this approach to provide better global temporal encodings using hyperdimensional computing (HDC) mechanisms. HDC (also known as Vector Symbolic Architectures, VSA) is a general method to explicitly represent and process information in high-dimensional vectors. It has previously been used successfully in combination with deep neural networks and other signal processing algorithms. We argue that the internal high-dimensional representation of MiniROCKET is well suited to be complemented by the algebra of HDC. This leads to a more general formulation, HDC-MiniROCKET, where the original algorithm is only a special case. We will discuss and demonstrate that HDC-MiniROCKET can systematically overcome catastrophic failures of MiniROCKET on simple synthetic datasets. These results are confirmed by experiments on the 128 datasets from the UCR time series classification benchmark. The extension with HDC can achieve considerably better results on datasets with high temporal dependence without increasing the computational effort for inference.

Advancing Mixture Models for Least Squares Optimization

Apr 01, 2021

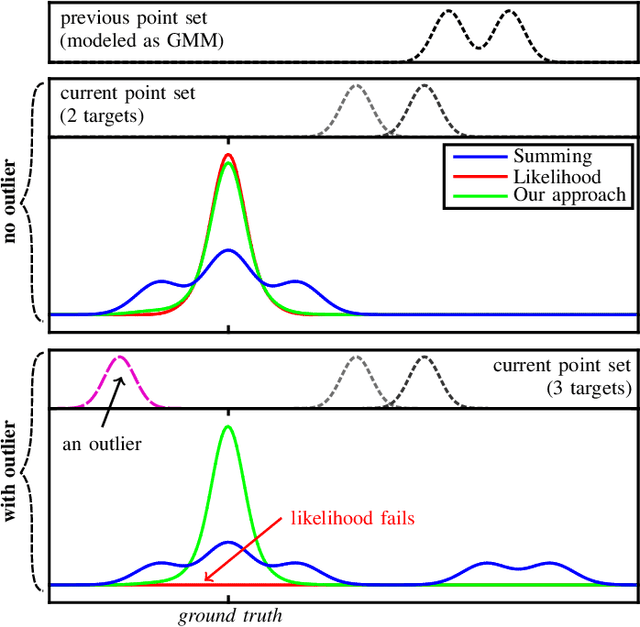

Abstract:Gaussian mixtures are a powerful and widely used tool to model non-Gaussian estimation problems. They are able to describe measurement errors that follow arbitrary distributions and can represent ambiguity in assignment tasks like point set registration or tracking. However, using them with common least squares solvers is still difficult. Existing approaches are either approximations of the true mixture or prone to convergence issues due to their strong nonlinearity. We propose a novel least squares representation of a Gaussian mixture, which is an exact and almost linear model of the corresponding log-likelihood. Our approach provides an efficient, accurate and flexible model for many probabilistic estimation problems and can be used as a cost function for least squares solvers. We demonstrate its superior performance in various Monte Carlo experiments, including different kinds of point set registration. Our implementation is available as open source code for the state-of-the-art solvers Ceres and GTSAM.
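
To illustrate why Gaussian mixtures are awkward for least squares solvers, the sketch below evaluates the exact negative log-likelihood of a one-dimensional mixture next to a common approximation that keeps only the dominant component. This is not the representation proposed in the paper. The function names and the example mixture parameters are assumptions chosen for illustration, and NumPy is assumed.

```python
import numpy as np

def component_log_terms(residual, weights, means, sigmas):
    """Log of each weighted Gaussian component evaluated at a scalar residual."""
    weights, means, sigmas = map(np.asarray, (weights, means, sigmas))
    z = (residual - means) / sigmas
    return np.log(weights) - np.log(sigmas) - 0.5 * np.log(2.0 * np.pi) - 0.5 * z**2

def exact_nll(residual, weights, means, sigmas):
    """Exact mixture cost: a negative log of a sum, not a sum of squares."""
    terms = component_log_terms(residual, weights, means, sigmas)
    peak = terms.max()
    # Log-sum-exp with the peak subtracted for numerical stability.
    return float(-(peak + np.log(np.sum(np.exp(terms - peak)))))

def dominant_component_nll(residual, weights, means, sigmas):
    """Approximation that keeps only the most likely component."""
    return float(-component_log_terms(residual, weights, means, sigmas).max())

# Hypothetical two-component error model: a narrow inlier mode and a broad shifted mode.
weights, means, sigmas = [0.7, 0.3], [0.0, 2.0], [0.5, 1.5]

exact = exact_nll(1.0, weights, means, sigmas)
approx = dominant_component_nll(1.0, weights, means, sigmas)
single = exact_nll(0.0, [1.0], [0.0], [1.0])

print("exact:", exact, "dominant component only:", approx)
```

With a single component the exact cost reduces to a squared residual plus a constant, which is the case least squares solvers handle directly. With several components the sum inside the logarithm prevents that reduction.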

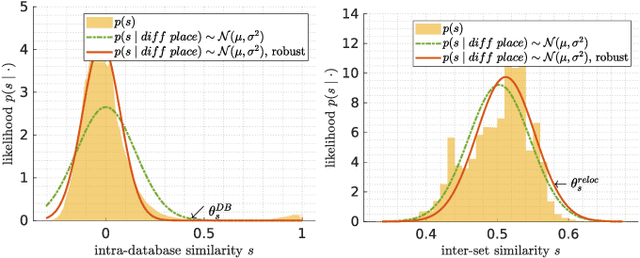

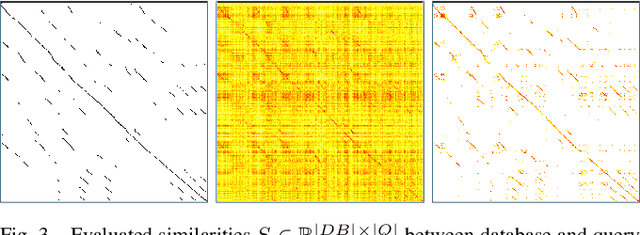

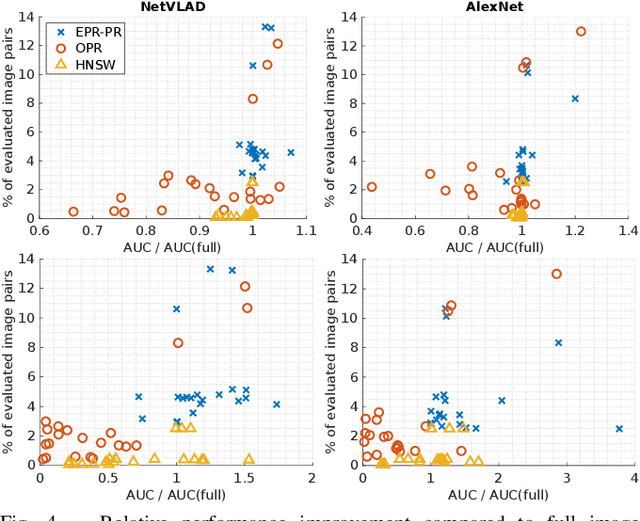

Beyond ANN: Exploiting Structural Knowledge for Efficient Place Recognition

Mar 15, 2021

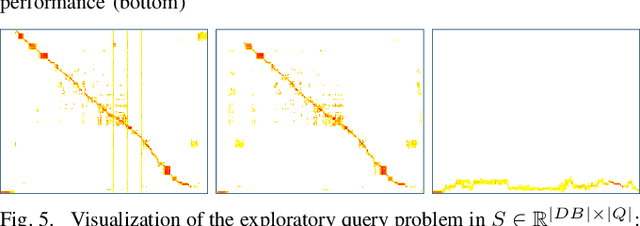

Abstract:Visual place recognition is the task of recognizing the same places of query images in a set of database images, despite potential condition changes due to time of day, weather or seasons. It is important for loop closure detection in SLAM and candidate selection for global localization. Many approaches in the literature perform computationally inefficient full image comparisons between queries and all database images. There is still a lack of suitable methods for efficient place recognition that allow a fast, sparse comparison of only the most promising image pairs without any loss in performance. While this is partially given by ANN-based methods, they trade speed for precision and additional memory consumption, and many cannot find arbitrary numbers of matching database images in case of loops in the database. In this paper, we propose a novel fast sequence-based method for efficient place recognition that can be applied online. It uses relocalization to recover from sequence losses, and exploits usually available but often unused intra-database similarities for a potential detection of all matching database images for each query in case of loops or stops in the database. We performed extensive experimental evaluations over five datasets and 21 sequence combinations, and show that our method outperforms two state-of-the-art approaches and even full image comparisons in many cases, while providing a good tradeoff between performance and percentage of evaluated image pairs. Source code for Matlab will be provided with publication of this paper.

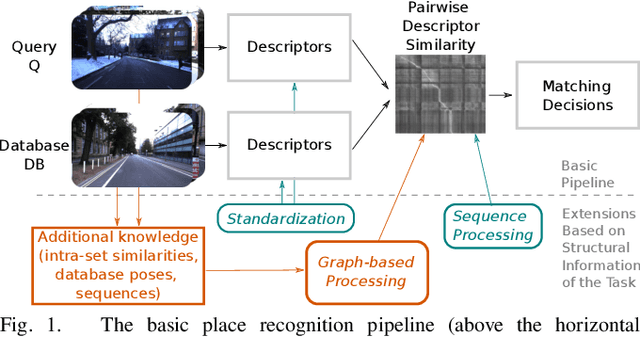

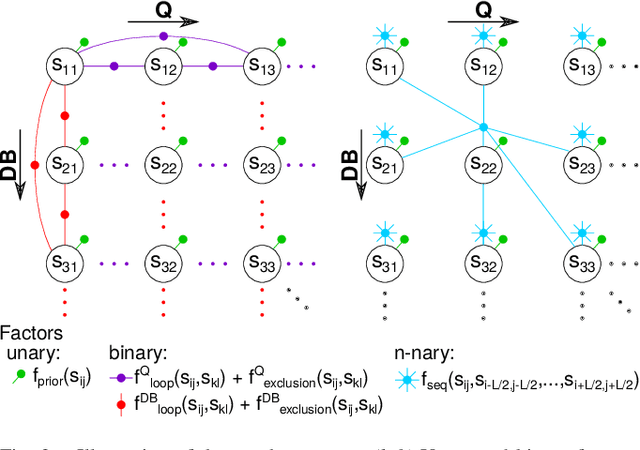

Graph-based non-linear least squares optimization for visual place recognition in changing environments

Dec 29, 2020

Abstract:Visual place recognition is an important subproblem of mobile robot localization. Since it is a special case of image retrieval, the basic source of information is the pairwise similarity of image descriptors. However, the embedding of the image retrieval problem in this robotic task provides additional structure that can be exploited, e.g., spatio-temporal consistency. Several algorithms exist to exploit this structure, e.g., sequence processing approaches or descriptor standardization approaches for changing environments. In this paper, we propose a graph-based framework to systematically exploit different types of additional structure and information. The graphical model is used to formulate a non-linear least squares problem that can be optimized with standard tools. Beyond sequences and standardization, we propose the usage of intra-set similarities within the database and/or the query image set as an additional source of information. If available, our approach also allows seamless integration of additional knowledge about poses of database images. We evaluate the system on a variety of standard place recognition datasets and demonstrate performance improvements for a large number of different configurations including different sources of information, different types of constraints, and online or offline place recognition setups.

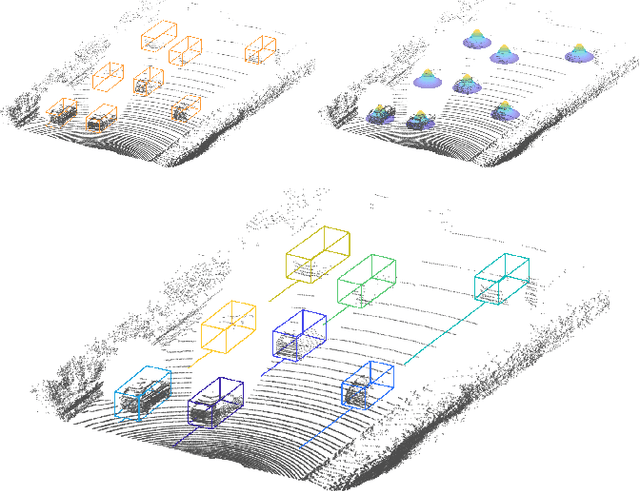

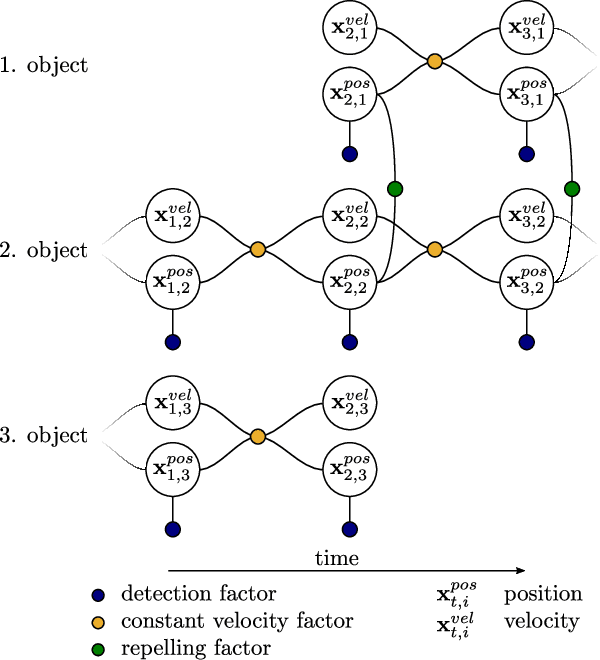

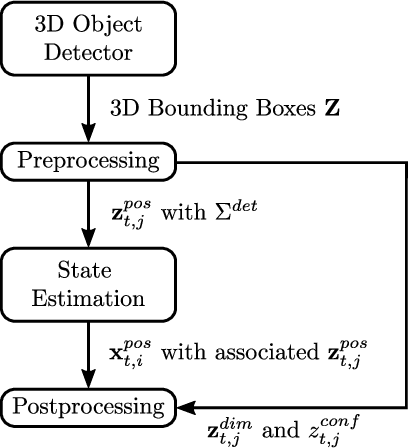

Factor Graph based 3D Multi-Object Tracking in Point Clouds

Aug 12, 2020

Abstract:Accurate and reliable tracking of multiple moving objects in 3D space is an essential component of urban scene understanding. This is a challenging task because it requires the assignment of detections in the current frame to the predicted objects from the previous one. Existing filter-based approaches tend to struggle if this initial assignment is not correct, which can happen easily. We propose a novel optimization-based approach that does not rely on explicit and fixed assignments. Instead, we represent the result of an off-the-shelf 3D object detector as a Gaussian mixture model, which is incorporated in a factor graph framework. This gives us the flexibility to assign all detections to all objects simultaneously. As a result, the assignment problem is solved implicitly and jointly with the 3D spatial multi-object state estimation using non-linear least squares optimization. Despite its simplicity, the proposed algorithm achieves robust and reliable tracking results and can be applied for offline as well as online tracking. We demonstrate its performance on the real-world KITTI tracking dataset and achieve better results than many state-of-the-art algorithms. In particular, the consistency of the estimated tracks is superior both offline and online.

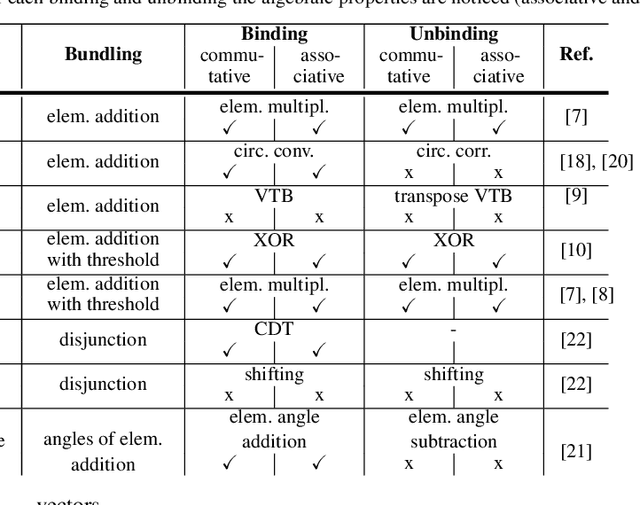

A comparison of Vector Symbolic Architectures

Feb 20, 2020

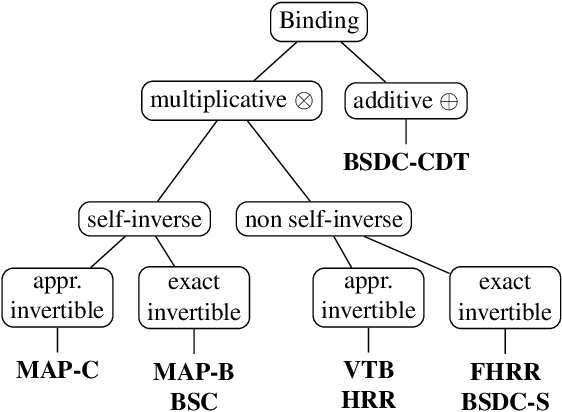

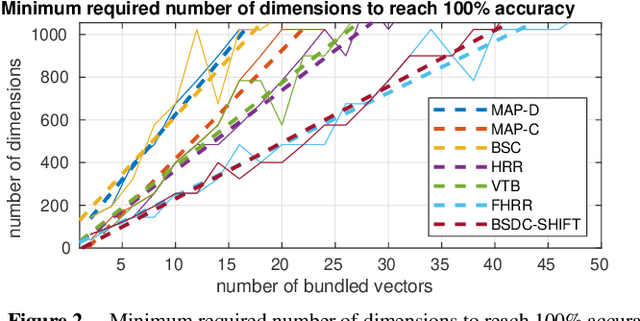

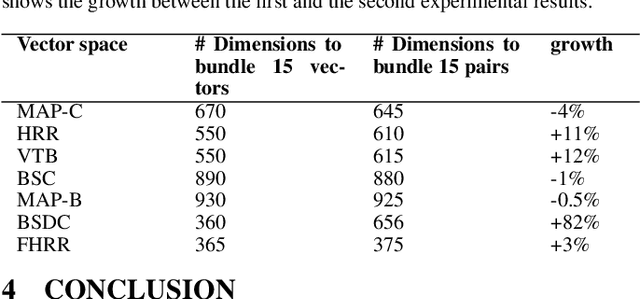

Abstract:Vector Symbolic Architectures (VSAs) combine a high-dimensional vector space with a set of carefully designed operators in order to perform symbolic computations with large numerical vectors. Major goals are the exploitation of their representational power and ability to deal with fuzziness and ambiguity. Over the past years, VSAs have been applied to a broad range of tasks and several VSA implementations have been proposed. The available implementations differ in the underlying vector space (e.g., binary vectors or complex-valued vectors) and the particular implementations of the required VSA operators, with important ramifications for the properties of these architectures. For example, not every VSA is equally well suited to address each task, up to complete incompatibility. In this paper, we give an overview of eight available VSA implementations and discuss their commonalities and differences in the underlying vector space, bundling, and binding/unbinding operations. We create a taxonomy of available binding/unbinding operations and show an important ramification for non-self-inverse binding operations using an example from analogical reasoning. A main contribution is the experimental comparison of the available implementations regarding (1) the capacity of bundles, (2) the approximation quality of non-exact unbinding operations, and (3) the influence of combined binding and bundling operations on the query answering performance. We expect this systematization and comparison to be relevant for development and evaluation of new VSAs, but most importantly, to support the selection of an appropriate VSA for a particular task.
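
The binding, bundling, and unbinding operations mentioned in the abstract can be sketched with one simple VSA variant: random bipolar vectors, elementwise multiplication as a self-inverse binding, and a majority vote as bundling. This is an illustrative sketch assuming NumPy. The dimensionality, the role and filler names, and the helper functions are assumptions, and the code does not reproduce any of the eight implementations compared in the paper.

```python
import numpy as np

rng = np.random.default_rng(42)
DIM = 10_000  # assumed dimensionality; high enough that random vectors are nearly orthogonal

def random_vector():
    """Random bipolar vector with entries in {-1, +1}."""
    return rng.choice([-1, 1], size=DIM)

def bind(a, b):
    """Elementwise multiplication; self-inverse for bipolar vectors."""
    return a * b

def bundle(*vectors):
    """Elementwise majority vote (use an odd number of inputs to avoid ties)."""
    return np.sign(np.sum(vectors, axis=0))

def similarity(a, b):
    """Cosine similarity."""
    return float(a @ b) / float(np.linalg.norm(a) * np.linalg.norm(b))

# Hypothetical role and filler symbols.
name, capital, currency = random_vector(), random_vector(), random_vector()
germany, berlin, euro = random_vector(), random_vector(), random_vector()

# One vector that stores three role-filler pairs.
record = bundle(bind(name, germany), bind(capital, berlin), bind(currency, euro))

# Unbinding with the role vector yields a noisy version of the stored filler.
recovered = bind(record, capital)
sim_match = similarity(recovered, berlin)
sim_other = similarity(recovered, euro)

print("similarity to stored filler:", sim_match)
print("similarity to another filler:", sim_other)
```

Because this binding is self-inverse, the same operation serves for binding and unbinding. With three bundled pairs the recovered vector is expected to have a cosine similarity of roughly 0.5 to the stored filler and close to zero to unrelated vectors.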
