Pengwei Xing

FedLogic: Interpretable Federated Multi-Domain Chain-of-Thought Prompt Selection for Large Language Models

Aug 29, 2023

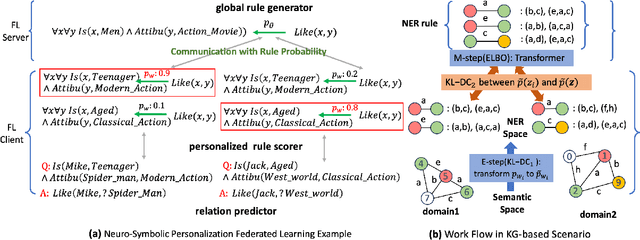

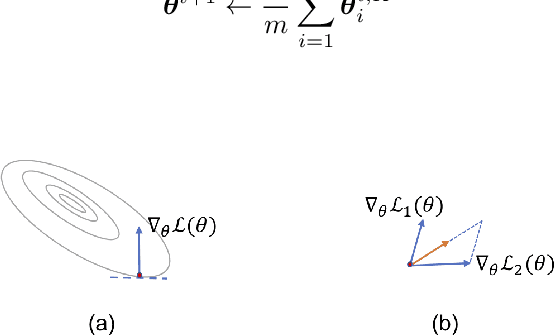

Abstract: Leveraging "chain-of-thought (CoT)" reasoning to elicit rapid and precise responses from large language models (LLMs) is rapidly attracting research interest. A notable challenge here is how to design or select optimal prompts. The process of prompt selection relies on trial and error, with users continuously adjusting and combining input prompts based on the new responses the LLMs generate. Furthermore, minimal research has been conducted to explore how LLMs employ the mathematical problem-solving capabilities learned from user interactions to address issues in narrative writing. To improve interpretability and explore the balance principle between generality and personalization under a multi-domain CoT prompt selection scenario, we propose the Federated Logic rule learning approach (FedLogic). We introduce a theoretical formalization and interactive emulation of the multi-domain CoT prompt selection dilemma in the context of federated LLMs. We cast the problem of joint probability modeling as a bilevel program, where CoT prompt selection can be likened to fuzzy score-based rule selection, with the LLMs functioning as rule generators. FedLogic solves this problem through variational expectation maximization (V-EM). In addition, we incorporate two KL-divergence constraints within this probabilistic modeling framework to handle the difficulties of managing extensive search spaces and accomplishing cross-domain personalization of CoTs. To the best of our knowledge, FedLogic is the first interpretable and principled federated multi-domain CoT prompt selection approach for LLMs.

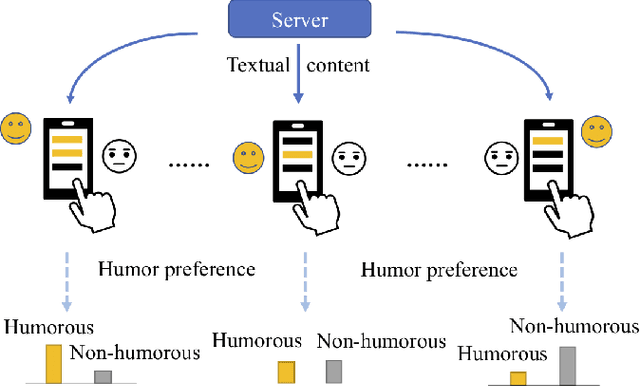

Federated Learning with Diversified Preference for Humor Recognition

Dec 03, 2020

Abstract: Understanding humor is critical to creative language modeling, with many applications in human-AI interaction. However, due to differences in the cognitive systems of the audience, the perception of humor can be highly subjective. Thus, a given passage can be regarded as funny to different degrees by different readers. This makes it highly challenging to train humorous text recognition models that can adapt to diverse humor preferences. In this paper, we propose the FedHumor approach to recognize humorous text content in a personalized manner through federated learning (FL). It is a federated BERT model capable of jointly considering the overall distribution of humor scores and the humor labels assigned by individuals to given texts. Extensive experiments demonstrate significant advantages of FedHumor in accurately recognizing humorous content for people with diverse humor preferences compared to 9 state-of-the-art humor recognition approaches.
