Penghong Lin

Interpretable Motion Planner for Urban Driving via Hierarchical Imitation Learning

Mar 24, 2023

Abstract: Learning-based approaches have achieved impressive performance in autonomous driving, and a growing number of data-driven methods are being studied for the decision-making and planning module. However, the reliability and stability of neural networks remain challenging. In this paper, we introduce a hierarchical imitation method comprising a high-level grid-based behavior planner and a low-level trajectory planner. The method can serve as a standalone data-driven driving policy and can also be easily embedded into a rule-based architecture. We evaluate our method in both closed-loop simulation and real-world driving, and demonstrate that the neural network planner achieves outstanding performance in complex urban autonomous driving scenarios.

Novel Video Prediction for Large-scale Scene using Optical Flow

May 30, 2018

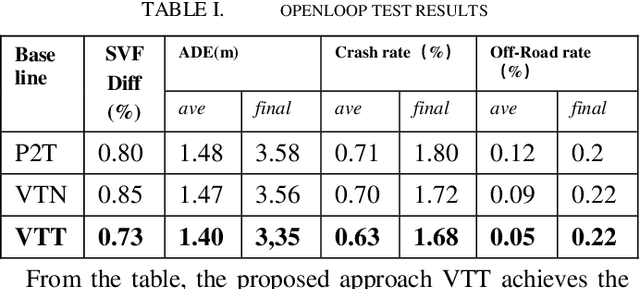

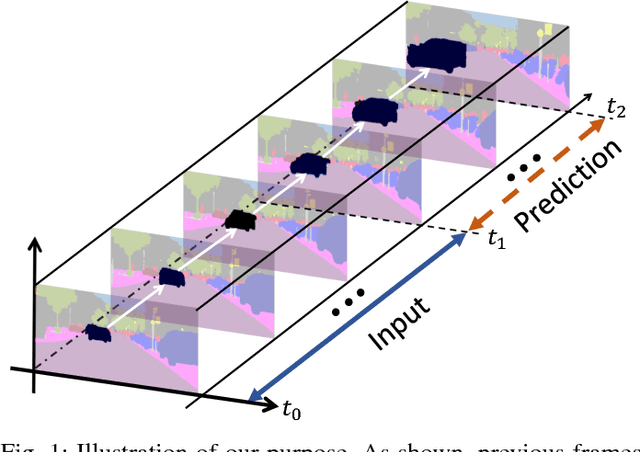

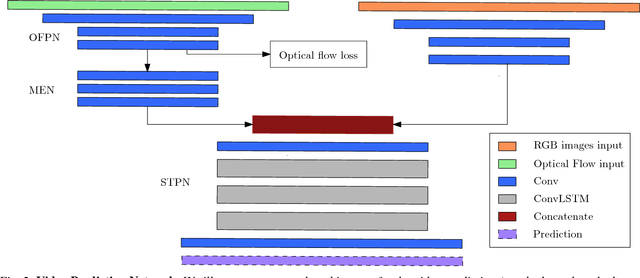

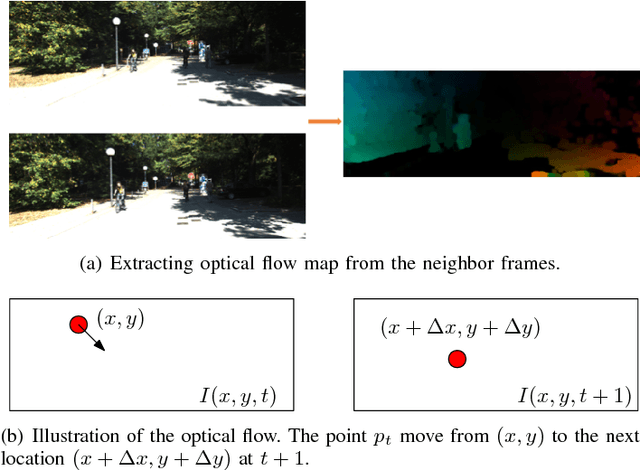

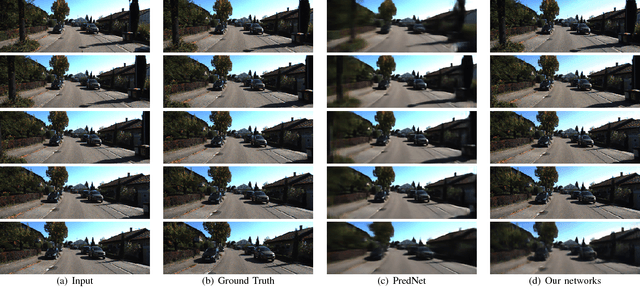

Abstract: Predicting future frames is a critical challenge in autonomous driving research. Most existing video prediction methods attempt to generate future frames in simple, fixed scenes. In this paper, we propose a novel and effective optical-flow-conditioned method for video prediction, with an application to complex urban scenes. In contrast with previous work, the prediction model requires only video sequences and optical flow sequences for training and testing. Our method uses the rich spatio-temporal features in video sequences and takes advantage of the motion information extracted from optical flow maps between neighboring frames, as well as from the previous frames themselves. Empirical evaluations on the KITTI and Cityscapes datasets demonstrate the effectiveness of our method.
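
To illustrate why optical flow between neighboring frames carries useful motion information, here is a minimal sketch that warps the latest frame along a dense flow field to get a crude guess at the next frame. This is not the paper's model, which the abstract does not specify. The function name `warp_with_flow`, the flow convention (each output pixel samples from `(x + dx, y + dy)` in the input) and the nearest-neighbor sampling are all assumptions made for illustration.

```python
import numpy as np

def warp_with_flow(frame, flow):
    """Backward-warp `frame` with a dense flow field (hypothetical helper).

    frame: array of shape (H, W) or (H, W, C).
    flow:  array of shape (H, W, 2); flow[y, x] = (dx, dy) means output
           pixel (x, y) is sampled from (x + dx, y + dy) in `frame`.
           This convention is an assumption, not taken from the paper.
    """
    h, w = frame.shape[:2]
    ys, xs = np.mgrid[0:h, 0:w]  # pixel coordinate grids
    # Nearest-neighbor sampling; coordinates are clamped to the image border.
    src_x = np.clip(np.rint(xs + flow[..., 0]), 0, w - 1).astype(int)
    src_y = np.clip(np.rint(ys + flow[..., 1]), 0, h - 1).astype(int)
    return frame[src_y, src_x]

# Toy example: every output pixel samples from one column to its right.
frame = np.arange(12).reshape(3, 4)
flow = np.zeros((3, 4, 2))
flow[..., 0] = 1.0
predicted = warp_with_flow(frame, flow)
```

A learned predictor would typically go beyond such a fixed warp, for instance by handling occlusions and newly visible regions, which is where the previous frames themselves become useful in addition to the flow maps.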
