Pengcheng Han

CurriculumLoc: Enhancing Cross-Domain Geolocalization through Multi-Stage Refinement

Nov 20, 2023

Abstract: Visual geolocalization is a cost-effective and scalable task that involves matching one or more query images, taken at some unknown location, to a set of geo-tagged reference images. Existing methods, devoted to semantic feature representation, are evolving towards robustness to a wide variety of differences between query and reference images, including illumination and viewpoint changes as well as scale and seasonal variations. However, practical visual geolocalization approaches need to be robust to appearance changes and extreme viewpoint variations while providing accurate global location estimates. Therefore, inspired by curriculum design, in which humans learn general knowledge first and then delve into professional expertise, we first recognize the semantic scene and then measure its geometric structure. Our approach, termed CurriculumLoc, involves a carefully designed multi-stage refinement pipeline and a novel keypoint detection and description method with global semantic awareness and local geometric verification. We rerank candidates and solve a particular cross-domain perspective-n-point (PnP) problem based on these keypoints and their corresponding descriptors, so that the position is refined incrementally. Extensive experimental results on our collected dataset, TerraTrack, and a benchmark dataset, ALTO, demonstrate that our approach achieves the aforementioned desirable characteristics of a practical visual geolocalization solution. Additionally, we achieve new high recall@1 scores of 62.6% and 94.5% on ALTO with two different distance metrics, respectively. Dataset, code and trained models are publicly available at https://github.com/npupilab/CurriculumLoc.
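
To make the reported metric concrete, here is a minimal sketch of one common way to compute recall@K under a distance threshold. The abstract does not define its two distance metrics, so the function name `recall_at_k`, the planar coordinates, and the 25 m default threshold are illustrative assumptions rather than the authors' evaluation code.

```python
import math


def recall_at_k(true_positions, ranked_candidates, k=1, threshold=25.0):
    """Fraction of queries with a top-k candidate within `threshold` of the truth.

    true_positions: list of (x, y) ground-truth query positions
        (assumed planar coordinates in metres).
    ranked_candidates: for each query, a list of (x, y) reference positions,
        ordered best match first.
    """
    if not true_positions:
        raise ValueError("need at least one query")
    if len(true_positions) != len(ranked_candidates):
        raise ValueError("one candidate list per query is required")

    hits = 0
    for truth, candidates in zip(true_positions, ranked_candidates):
        # A query counts as localized if any of its k best candidates is close enough.
        if any(math.dist(truth, c) <= threshold for c in candidates[:k]):
            hits += 1
    return hits / len(true_positions)
```

Under this definition, reranking can only raise recall@1 by moving a candidate that is already within the threshold to the top of the list, while refining the estimated position can also help by pulling an estimate inside the threshold.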

ClusterFusion: Real-time Relative Positioning and Dense Reconstruction for UAV Cluster

Apr 11, 2023

Abstract: As robotics technology advances, dense point cloud maps are increasingly in demand. However, dense reconstruction using a single unmanned aerial vehicle (UAV) suffers from limitations in flight speed and battery power, resulting in slow reconstruction and low coverage. Cluster UAV systems offer greater flexibility and wider coverage for map building. Existing methods of cluster UAVs face challenges with accurate relative positioning, scale drift, and high-speed dense point cloud map generation. To address these issues, we propose a cluster framework for large-scale dense reconstruction and real-time collaborative localization. The front-end of the framework is an improved visual odometry which can effectively handle large-scale scenes. Collaborative localization between UAVs is enabled through a two-stage joint optimization algorithm and a relative pose optimization algorithm, effectively achieving accurate relative positioning of UAVs and mitigating scale drift. Estimated poses are used to achieve real-time dense reconstruction and fusion of point cloud maps. To evaluate the performance of our proposed method, we conduct qualitative and quantitative experiments on real-world data. The results demonstrate that our framework can effectively suppress scale drift and generate large-scale dense point cloud maps in real-time, with the reconstruction speed increasing as more UAVs are added to the system.
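
The final step mentioned in the abstract, using estimated poses to fuse point cloud maps, can be illustrated with a minimal sketch. It assumes each UAV's pose is already available as a 4x4 homogeneous transform from its local frame to a shared frame; the function names `transform_points` and `fuse_point_clouds` are hypothetical, and the sketch covers none of the paper's optimization or its dense reconstruction.

```python
def transform_points(pose, points):
    """Apply a 4x4 homogeneous rigid transform (nested lists) to 3D points."""
    transformed = []
    for x, y, z in points:
        # Rows 0..2 of the pose hold the rotation (first three columns)
        # and the translation (last column).
        transformed.append(tuple(
            pose[r][0] * x + pose[r][1] * y + pose[r][2] * z + pose[r][3]
            for r in range(3)
        ))
    return transformed


def fuse_point_clouds(poses, local_clouds):
    """Map each UAV's local cloud into the shared frame and concatenate them."""
    if len(poses) != len(local_clouds):
        raise ValueError("one pose per point cloud is required")
    fused = []
    for pose, cloud in zip(poses, local_clouds):
        fused.extend(transform_points(pose, cloud))
    return fused
```

Because every local point is multiplied by its UAV's pose, an error in the relative pose or scale shifts that UAV's entire contribution to the fused map, which suggests why accurate relative positioning and drift suppression matter before fusion.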

GSLAM: A General SLAM Framework and Benchmark

Feb 21, 2019

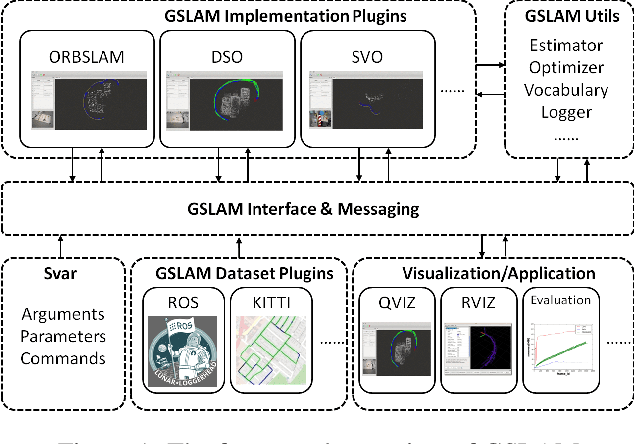

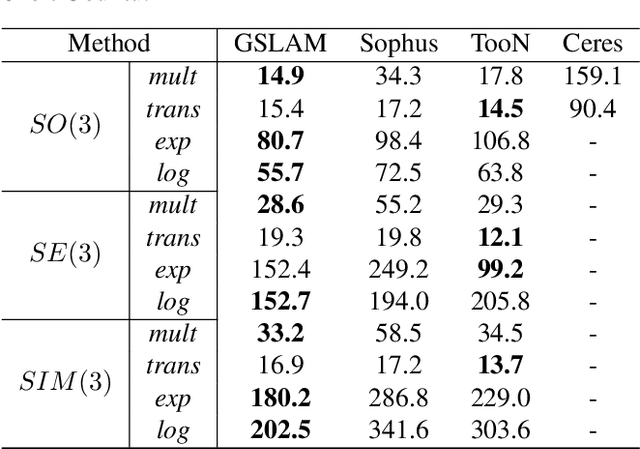

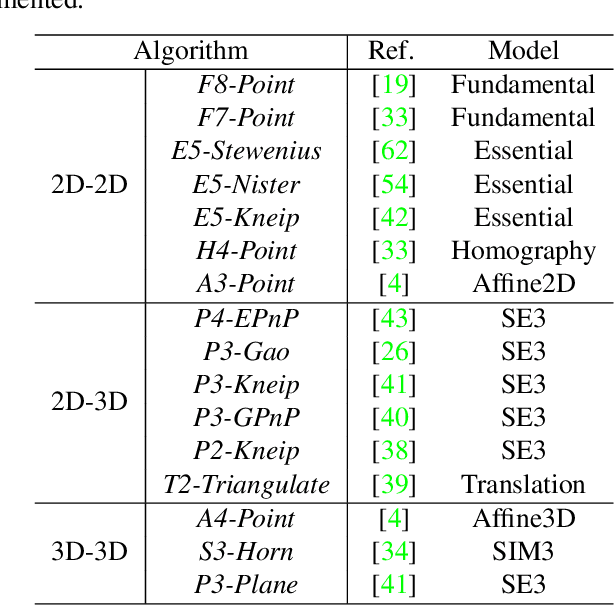

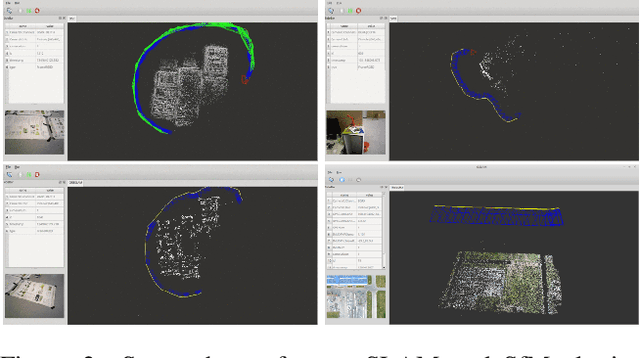

Abstract: SLAM technology has recently seen many successes and attracted the attention of high-tech companies. However, how to unify the interfaces of existing or emerging algorithms, and how to effectively benchmark their speed, robustness and portability, remain open problems. In this paper, we propose a novel SLAM platform named GSLAM, which not only provides evaluation functionality but also supplies a useful toolkit for researchers to quickly develop their own SLAM systems. The core contribution of GSLAM is a universal, cross-platform and fully open-source SLAM interface for both research and commercial usage, which aims to handle interactions with input datasets, SLAM implementations, visualization and applications in a unified framework. Through this platform, users can implement their own functions as plugins for better performance and further bring SLAM applications into practical use.
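
The idea of a unified interface with plugin implementations can be sketched generically as follows. This is only an illustration of the pattern and does not reproduce GSLAM's actual API; every name here (`SlamSystem`, `register`, `create`, `run_benchmark`, and the toy plugin) is hypothetical.

```python
from abc import ABC, abstractmethod


class SlamSystem(ABC):
    """Hypothetical common interface that every SLAM plugin implements."""

    @abstractmethod
    def track(self, frame):
        """Consume one input frame and return the estimated (x, y, z) position."""


_REGISTRY = {}


def register(name):
    """Class decorator that makes a SLAM implementation available by name."""
    def decorator(cls):
        if not issubclass(cls, SlamSystem):
            raise TypeError("plugins must implement SlamSystem")
        _REGISTRY[name] = cls
        return cls
    return decorator


def create(name):
    """Instantiate a registered plugin, or fail with a clear error."""
    if name not in _REGISTRY:
        raise ValueError(f"unknown SLAM plugin: {name}")
    return _REGISTRY[name]()


@register("constant_velocity")
class ConstantVelocitySlam(SlamSystem):
    """Toy plugin: advances one unit along x per frame and ignores the image."""

    def __init__(self):
        self.x = 0.0

    def track(self, frame):
        self.x += 1.0
        return (self.x, 0.0, 0.0)


def run_benchmark(name, frames):
    """Drive any registered plugin through the same frame sequence."""
    system = create(name)
    return [system.track(frame) for frame in frames]
```

Because `run_benchmark` depends only on the shared interface, the same dataset loop can evaluate any registered implementation, which is the kind of decoupling the abstract describes.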
