Peng Gang

A Pushing-Grasping Collaborative Method Based on Deep Q-Network Algorithm in Dual Perspectives

Jan 04, 2021

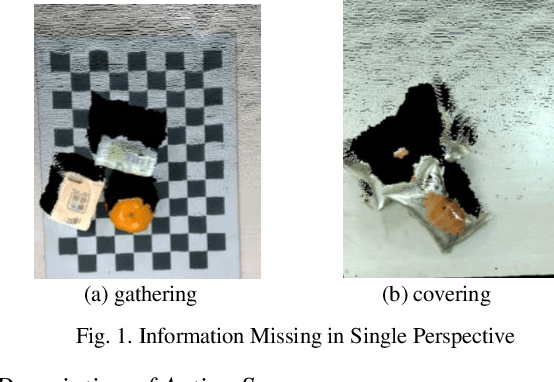

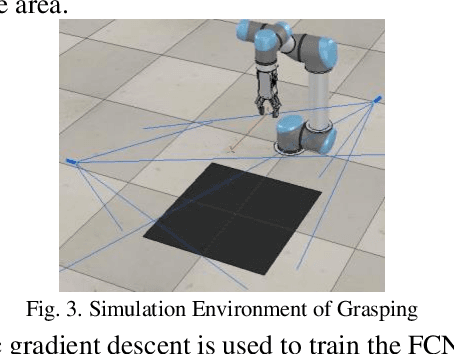

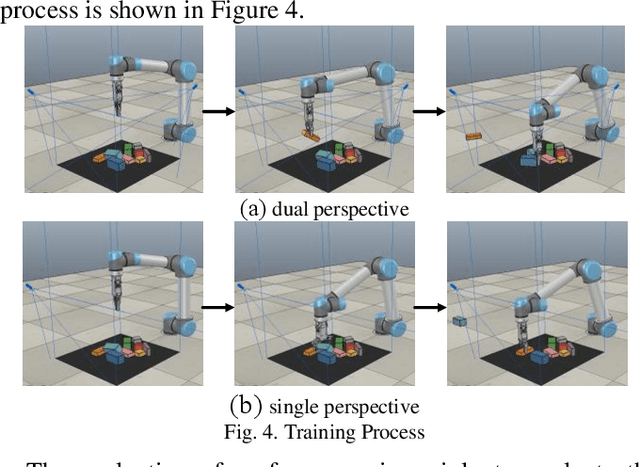

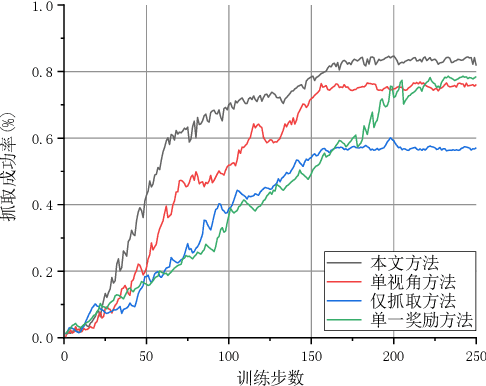

Abstract:Traditional manipulator grasping methods based on a 2D camera perform poorly in unstructured scenes where objects are clustered or cover one another, because objects cannot be recognized accurately from a single perspective and the manipulator cannot rearrange the scene to make it easier to grasp. To address this, this paper proposes a novel pushing-grasping collaborative method based on a deep Q-network in dual perspectives. The method adopts an improved deep Q-network algorithm, uses an RGB-D camera to obtain RGB images and point clouds of the objects from two perspectives, and combines pushing and grasping actions so that the trained manipulator can rearrange the scene to make it more favorable for grasping and thus perform well in more complicated grasping scenes. In addition, we improve the reward function of the deep Q-network and propose a piecewise reward function to speed up its convergence. We trained different models and compared different methods in the V-REP simulation environment, and found that the proposed method converges quickly and that the success rate of grasping objects in unstructured scenes reaches 83.5%. The method also generalizes well to novel objects that the manipulator has never grasped before.
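The abstract does not give the form of the piecewise reward function, so the following is only a minimal sketch of how a stepwise reward could feed the standard one-step Q-learning target. The function names, the discount factor, the reward values, and the scene-change thresholds are all illustrative assumptions, not values from the paper.

```python
from typing import Sequence

GAMMA = 0.5  # discount factor; an assumed value, not taken from the paper


def piecewise_reward(action: str, grasp_succeeded: bool, scene_change: float) -> float:
    """Hypothetical piecewise reward; values and thresholds are illustrative only."""
    if action == "grasp":
        return 1.0 if grasp_succeeded else 0.0
    # A push is rewarded in steps according to how much it changed the scene
    # (scene_change is an assumed, normalized measure in [0, 1]).
    if scene_change >= 0.3:
        return 0.5
    if scene_change >= 0.1:
        return 0.25
    return 0.0


def dqn_target(reward: float, next_q_values: Sequence[float], done: bool) -> float:
    """Standard one-step Q-learning target: r + gamma * max_a' Q(s', a')."""
    if done:
        return reward
    return reward + GAMMA * max(next_q_values)


# A moderately useful push followed by a state whose best Q-value is 0.8.
r = piecewise_reward("push", grasp_succeeded=False, scene_change=0.15)
y = dqn_target(r, next_q_values=[0.2, 0.8], done=False)
```

Graded rewards of this kind give the network a learning signal for pushes that only partly improve the scene, which is one plausible reason a piecewise reward could speed up convergence.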

A Visual Kinematics Calibration Method for Manipulator Based on Nonlinear Optimization

May 18, 2020

Abstract:The traditional kinematic calibration method for manipulators requires precise three-dimensional measuring instruments to measure the end pose, which is not only expensive due to the high cost of the measuring instruments but also not applicable to all manipulators. Another calibration method uses a camera, but the system error caused by the camera's parameters affects the calibration accuracy of the kinematics of the robot arm. Therefore, this paper proposes a method for calibrating the geometric parameters of a kinematic model of a manipulator based on monocular vision. Firstly, the classic Denavit-Hartenberg (D-H) modeling method is used to establish the kinematic parameters of the manipulator. Secondly, nonlinear optimization and parameter compensation are performed. The three-dimensional positions of the feature points of the calibration plate under each manipulator attitude, as given by the actual kinematic model and by the classic D-H kinematic model, are mapped into the pixel coordinate system, and the sum of the Euclidean distances between the two sets of pixel coordinates is used as the objective function to be optimized. The experimental results show that the pixel deviation between the end pose given by the optimized D-H kinematic model proposed in this paper and the end pose given by the actual kinematic model is 0.99 pixels. Compared with the 7.9-pixel deviation between the pixel coordinates calculated by the classic D-H kinematic model and the actual pixel coordinates, the deviation is reduced by nearly 7 pixels, an 87% reduction in error. Therefore, the proposed method can effectively avoid system errors caused by camera parameters in visual calibration, can improve the absolute positioning accuracy of the end of the robotic arm, and is economical and widely applicable.
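The objective described above can be sketched as follows, assuming a simple pinhole camera and feature points already expressed in the camera frame. The intrinsics `fx`, `fy`, `cx`, `cy`, the function names, and the sample points are assumptions for illustration; the abstract does not specify the camera model used.

```python
import math
from typing import Sequence, Tuple

Point3D = Tuple[float, float, float]
Pixel = Tuple[float, float]


def project(point: Point3D, fx: float, fy: float, cx: float, cy: float) -> Pixel:
    """Pinhole projection of a camera-frame 3D point (z > 0) to pixel coordinates."""
    x, y, z = point
    return (fx * x / z + cx, fy * y / z + cy)


def pixel_error_sum(actual: Sequence[Point3D], model: Sequence[Point3D],
                    fx: float, fy: float, cx: float, cy: float) -> float:
    """Sum of Euclidean pixel distances between two sets of projected points."""
    total = 0.0
    for p_actual, p_model in zip(actual, model):
        u1, v1 = project(p_actual, fx, fy, cx, cy)
        u2, v2 = project(p_model, fx, fy, cx, cy)
        total += math.hypot(u1 - u2, v1 - v2)
    return total


def relative_reduction(before: float, after: float) -> float:
    """Fractional reduction of an error from `before` to `after`."""
    return (before - after) / before


# One feature point, offset by 3 mm and 4 mm at 1 m depth (assumed values).
error = pixel_error_sum([(0.0, 0.0, 1.0)], [(0.003, 0.004, 1.0)],
                        fx=1000.0, fy=1000.0, cx=320.0, cy=240.0)
reduction = relative_reduction(7.9, 0.99)  # figures reported in the abstract
```

A nonlinear optimizer would adjust the D-H parameters that generate `model` so as to minimize `pixel_error_sum`. With the reported figures, `relative_reduction(7.9, 0.99)` is about 0.875, in line with the stated reduction of about 87%.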

Monocular visual-inertial SLAM algorithm combined with wheel speed anomaly detection

Mar 22, 2020

Abstract:To address the weak observability of monocular visual-inertial odometers on ground-based mobile robots, this paper proposes a monocular inertial SLAM algorithm combined with wheel speed anomaly detection. The algorithm uses a wheel speed odometer pre-integration method to add the wheel speed measurement to the least-squares problem in a tightly coupled manner. For abnormal motion situations, such as skidding and abduction, this paper adopts the Mecanum mobile chassis control method, based on torque control. This method uses the motion constraint error to estimate the reliability of the wheel speed measurement. At the same time, in order to prevent incorrect chassis speed measurements from negatively influencing robot pose estimation, this paper uses three methods to detect abnormal chassis movement and analyze chassis movement status in real time. When the chassis movement is determined to be abnormal, the wheel odometer pre-integration measurement of the current frame is removed from the state estimation equation, thereby ensuring the accuracy and robustness of the state estimation. Experimental results show that the accuracy and robustness of the method in this paper are better than those of a monocular visual-inertial odometer.
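The idea of using a motion constraint error to judge wheel speed reliability can be illustrated with a four-wheel Mecanum chassis. Four wheel speeds determine only three chassis velocities, so consistent measurements must satisfy one linear constraint. The sketch below assumes one common Mecanum sign convention, and the wheel radius, geometry constant, and threshold are hypothetical; the abstract does not give the paper's actual reliability estimate or its three detection methods.

```python
from typing import Tuple

WheelSpeeds = Tuple[float, float, float, float]  # front-left, front-right, rear-left, rear-right


def mecanum_wheel_speeds(vx: float, vy: float, wz: float,
                         r: float = 0.05, k: float = 0.3) -> WheelSpeeds:
    """Inverse kinematics for one common Mecanum convention (wheel speeds in rad/s).

    r is the wheel radius and k the sum of the half wheelbase and half track
    width, both in metres (assumed values).
    """
    fl = (vx - vy - k * wz) / r
    fr = (vx + vy + k * wz) / r
    rl = (vx + vy - k * wz) / r
    rr = (vx - vy + k * wz) / r
    return fl, fr, rl, rr


def constraint_error(fl: float, fr: float, rl: float, rr: float) -> float:
    """Residual of the constraint fl + fr == rl + rr implied by the kinematics above."""
    return (fl + fr) - (rl + rr)


def wheel_odometry_is_reliable(speeds: WheelSpeeds, threshold: float = 0.5) -> bool:
    """Accept the wheel measurement only if the constraint residual is small."""
    return abs(constraint_error(*speeds)) <= threshold


consistent = mecanum_wheel_speeds(vx=0.4, vy=-0.1, wz=0.2)
# Simulate one wheel spinning freely, as it might when skidding.
slipping = (consistent[0] + 5.0, consistent[1], consistent[2], consistent[3])
```

Here `wheel_odometry_is_reliable(consistent)` holds while `wheel_odometry_is_reliable(slipping)` does not. A check of this kind cannot see disturbances that keep all four wheels mutually consistent, such as the robot being carried, which may be why several detection methods are combined.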

Robust tightly coupled pose estimation based on monocular vision, inertia, and wheel speed

Mar 05, 2020

Abstract:The visual SLAM method is widely used for self-localization and mapping in complex environments. Visual-inertial SLAM, which combines a camera with an IMU, can significantly improve robustness and makes scale weakly observable, whereas scale is unobservable in monocular visual SLAM. For ground mobile robots, the introduction of a wheel speed sensor can solve the weak scale observability problem and improve robustness under abnormal conditions. In this thesis, a multi-sensor fusion SLAM algorithm using monocular vision, inertia, and wheel speed measurements is proposed. The sensor measurements are combined in a tightly coupled manner, and a nonlinear optimization method is used to maximize the posterior probability to solve for the optimal state estimate. Loop detection and back-end optimization are added to help reduce or even eliminate the cumulative error of the estimated poses, thus ensuring global consistency of the trajectory and map. The wheel odometer pre-integration algorithm, which combines the chassis speed and IMU angular speed, avoids the repeated integration caused by linearization point changes during iterative optimization; state initialization based on the wheel odometer and IMU enables a quick and reliable calculation of the initial state values required by the state estimator in both stationary and moving states. Comparative experiments were carried out in room-scale scenes, building-scale scenes, and visual loss scenarios. The results showed that the proposed algorithm has high accuracy, with 2.2 m of cumulative error after moving 812 m (0.28%, loopback optimization disabled), strong robustness, and effective localization capability even in the event of sensor loss such as visual loss. The accuracy and robustness of the proposed method are superior to those of monocular visual-inertial SLAM and traditional wheel odometers.
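The benefit of pre-integration can be seen in a simplified planar sketch: chassis velocity and gyro yaw rate are accumulated into a relative motion expressed in the frame of the first keyframe, so the result does not depend on the current global pose estimate. The function names and the planar simplification are assumptions; the paper's formulation is three-dimensional and would also handle sensor biases and noise covariance, which are omitted here.

```python
import math
from typing import Sequence, Tuple

Sample = Tuple[float, float, float]  # body-frame vx, vy (m/s) and gyro yaw rate wz (rad/s)
Pose2D = Tuple[float, float, float]  # x, y (m), heading (rad)


def preintegrate(samples: Sequence[Sample], dt: float) -> Pose2D:
    """Accumulate relative motion between two keyframes in the first keyframe's frame."""
    dx = dy = dtheta = 0.0
    for vx, vy, wz in samples:
        # Rotate the body-frame velocity by the heading accumulated so far.
        dx += (math.cos(dtheta) * vx - math.sin(dtheta) * vy) * dt
        dy += (math.sin(dtheta) * vx + math.cos(dtheta) * vy) * dt
        dtheta += wz * dt
    return dx, dy, dtheta


def compose(pose: Pose2D, delta: Pose2D) -> Pose2D:
    """Apply a pre-integrated relative motion to a global keyframe pose."""
    x, y, theta = pose
    dx, dy, dtheta = delta
    return (x + math.cos(theta) * dx - math.sin(theta) * dy,
            y + math.sin(theta) * dx + math.cos(theta) * dy,
            theta + dtheta)


# One second of straight driving at 1 m/s, sampled at 10 Hz (assumed data).
delta = preintegrate([(1.0, 0.0, 0.0)] * 10, dt=0.1)
# If the optimizer revises the first keyframe's pose, only compose() is re-run.
pose_a = compose((0.0, 0.0, 0.0), delta)
pose_b = compose((2.0, 1.0, math.pi / 2), delta)
```

Because `delta` is computed once per keyframe pair, each optimizer iteration that changes the keyframe pose only re-evaluates `compose`, rather than re-integrating every raw sample.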

Prediction of Physical Load Level by Machine Learning Analysis of Heart Activity after Exercises

Dec 20, 2019

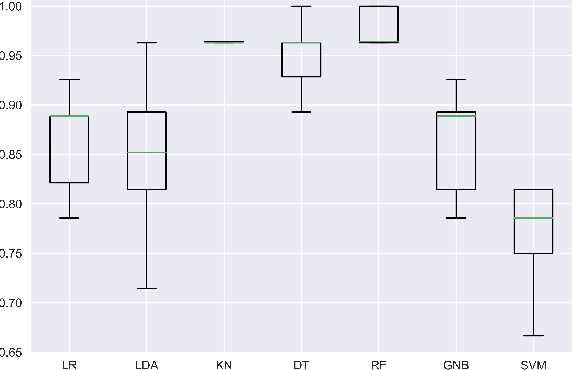

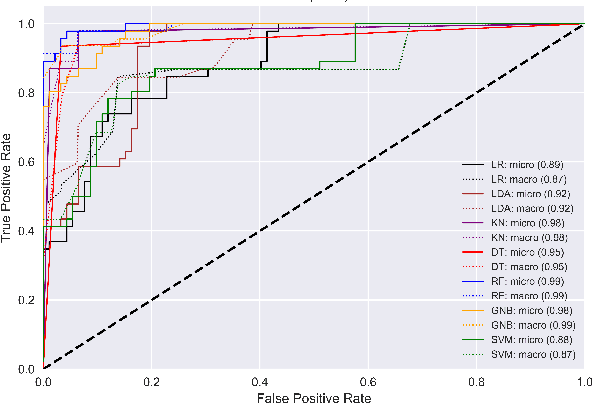

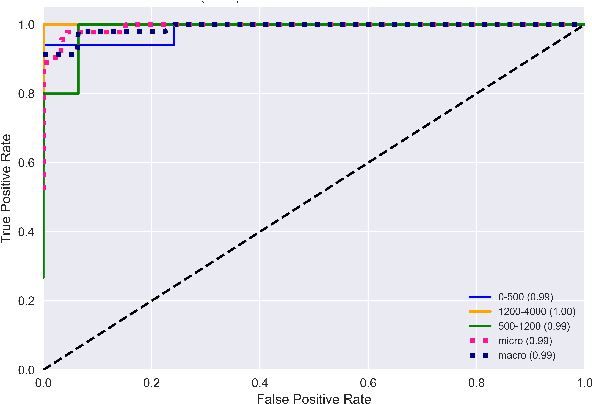

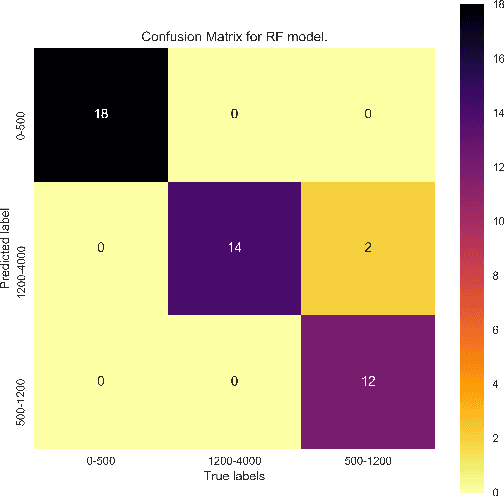

Abstract:The assessment of energy expenditure in real life is of great importance for monitoring people's current physical state, especially in work, sport, elderly care, health care, and even everyday life. This work reports on the application of several machine learning methods (linear regression, linear discriminant analysis, k-nearest neighbors, decision tree, random forest, Gaussian naive Bayes, support-vector machine) to monitoring energy expenditure in athletes. The classification problem was to predict the known level of the in-exercise load (in three categories by calories) from heart rate activity features measured during a short period (only 1 minute) after training, i.e. from features of the post-exercise load. The results showed that post-exercise heart activity features preserve information about the in-exercise training loads and allow their actual in-exercise levels to be predicted. The best performance was obtained by the random forest classifier with all 8 heart rate features (micro-averaged area under the curve AUCmicro = 0.87 and macro-averaged AUCmacro = 0.88) and the k-nearest neighbors classifier with the 4 most important heart rate features (AUCmicro = 0.91 and AUCmacro = 0.89). The limitations and prospects of the ML methods used are outlined, and some practical advice is offered on improving and implementing them for better prediction of in-exercise energy expenditure.
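The difference between the micro-averaged and macro-averaged AUC values quoted above can be shown with a small self-contained sketch for a three-class problem. The labels and scores are made-up illustrative data, not results from the paper, and the function names are hypothetical.

```python
from typing import List, Sequence


def binary_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as the probability that a positive outscores a negative (ties count 0.5)."""
    pos = [s for l, s in zip(labels, scores) if l == 1]
    neg = [s for l, s in zip(labels, scores) if l == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def macro_auc(y_true: Sequence[int], y_score: Sequence[Sequence[float]], n_classes: int) -> float:
    """Average of the one-vs-rest AUCs, giving every class equal weight."""
    aucs: List[float] = []
    for c in range(n_classes):
        labels = [1 if y == c else 0 for y in y_true]
        scores = [row[c] for row in y_score]
        aucs.append(binary_auc(labels, scores))
    return sum(aucs) / n_classes


def micro_auc(y_true: Sequence[int], y_score: Sequence[Sequence[float]], n_classes: int) -> float:
    """AUC over all (sample, class) pairs pooled into one binary problem."""
    labels = [1 if y == c else 0 for y in y_true for c in range(n_classes)]
    scores = [row[c] for row in y_score for c in range(n_classes)]
    return binary_auc(labels, scores)


# Four samples, three load levels; each row holds per-class scores (illustrative).
y_true = [0, 1, 2, 0]
y_score = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1], [0.2, 0.2, 0.6], [0.3, 0.4, 0.3]]
macro = macro_auc(y_true, y_score, 3)
micro = micro_auc(y_true, y_score, 3)
```

In this example each class is ranked perfectly on its own, so the macro average is 1.0, while pooling all scores exposes the weakly scored last sample and gives a lower micro average. This is why the two figures can differ for the same classifier.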

Open Source Dataset and Machine Learning Techniques for Automatic Recognition of Historical Graffiti

Aug 31, 2018

Abstract:Machine learning techniques are presented for automatic recognition of historical letters (XI-XVIII centuries) carved on the stone walls of St. Sophia Cathedral in Kyiv (Ukraine). A new image dataset of these carved Glagolitic and Cyrillic letters (CGCL) was assembled and pre-processed for recognition and prediction by machine learning methods. The dataset consists of more than 4000 images for 34 types of letters. Exploratory data analysis of the CGCL and notMNIST datasets showed that the carved letters can hardly be differentiated by dimensionality reduction methods such as t-distributed stochastic neighbor embedding (t-SNE), because stone carving represents letters less clearly than handwriting. Multinomial logistic regression (MLR) and 2D convolutional neural network (CNN) models were applied. The MLR model gave area under the curve (AUC) values for the receiver operating characteristic (ROC) of not lower than 0.92 and 0.60 for notMNIST and CGCL, respectively. The CNN model gave AUC values close to 0.99 for both notMNIST and CGCL (despite the much smaller size and lower quality of CGCL in comparison to notMNIST) under the condition of heavy lossy data augmentation. The CGCL dataset has been published as an open source resource for the data science community.

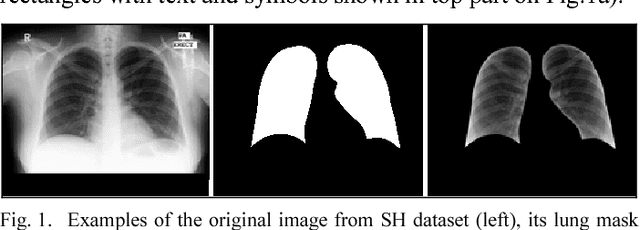

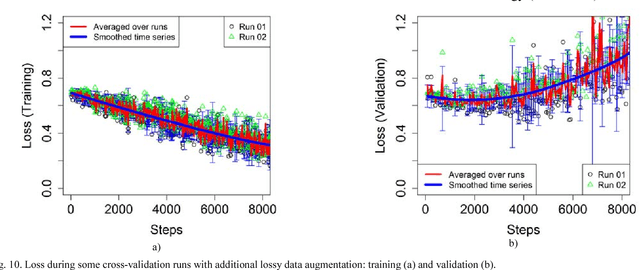

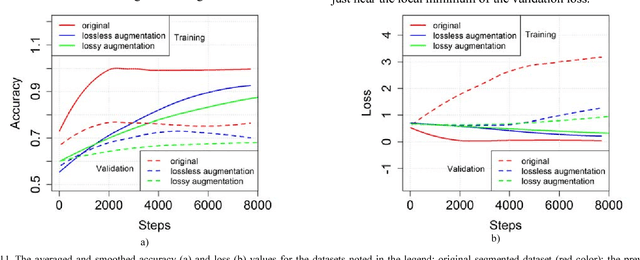

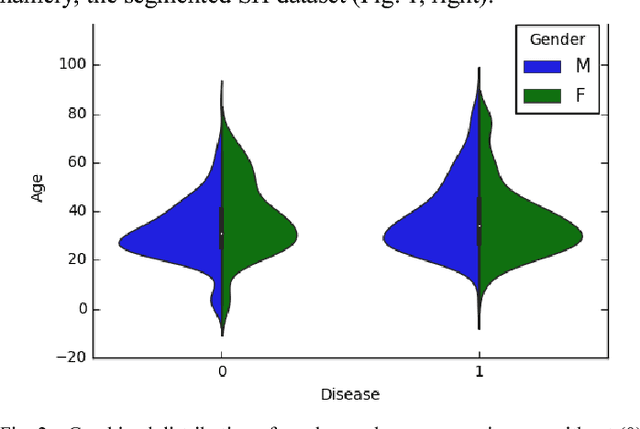

Chest X-Ray Analysis of Tuberculosis by Deep Learning with Segmentation and Augmentation

Mar 03, 2018

Abstract:The results of chest X-ray (CXR) analysis of 2D images to obtain statistically reliable predictions (of the presence of tuberculosis) by computer-aided diagnosis (CADx) based on deep learning are presented. They demonstrate the efficiency of lung segmentation and of lossless and lossy data augmentation for CADx of tuberculosis by a deep convolutional neural network (CNN), even when applied to a small and not well-balanced dataset. The CNN is able to train (despite overfitting) on the pre-processed dataset obtained after lung segmentation, in contrast to the original non-segmented dataset. Lossless data augmentation of the segmented dataset leads to the lowest validation loss (without overfitting) and nearly the same accuracy (within the limits of standard deviation) in comparison to the original and other pre-processed datasets after lossy data augmentation. Additional limited lossy data augmentation results in a lower validation loss, but with a decrease in validation accuracy. In conclusion, besides more complex deep CNNs and bigger datasets, better progress of CADx even for small and not well-balanced datasets could be obtained by better segmentation, data augmentation, dataset stratification, and exclusion of non-evident outliers.
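The abstract does not define lossless augmentation. The sketch below assumes it means transformations that only rearrange pixels, such as mirror reflections and rotations by multiples of 90 degrees, in contrast to lossy transformations such as rotation by an arbitrary angle, which requires interpolation. The function names and the tiny test image are hypothetical.

```python
from typing import List

Image = List[List[int]]  # grayscale image as rows of pixel values


def flip_horizontal(img: Image) -> Image:
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def rotate_90(img: Image) -> Image:
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def lossless_variants(img: Image) -> List[Image]:
    """Return the 8 variants from 4 rotations, each with and without a mirror flip."""
    variants: List[Image] = []
    current = img
    for _ in range(4):
        variants.append(current)
        variants.append(flip_horizontal(current))
        current = rotate_90(current)
    return variants


image = [[1, 2], [3, 4]]
augmented = lossless_variants(image)
```

Every variant contains exactly the original pixel values, and each transformation can be undone, so no image information is lost while the training set grows eightfold.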

Dimensionality Reduction in Deep Learning for Chest X-Ray Analysis of Lung Cancer

Jan 19, 2018

Abstract:The efficiency of several dimensionality reduction techniques, such as lung segmentation, bone shadow exclusion, and t-distributed stochastic neighbor embedding (t-SNE) for exclusion of outliers, is estimated for the analysis of 2D chest X-ray (CXR) images by a deep learning approach to help radiologists identify marks of lung cancer in CXRs. Training and validation of a simple convolutional neural network (CNN) were performed on the open JSRT dataset (dataset #01), the JSRT after bone shadow exclusion, BSE-JSRT (dataset #02), JSRT after lung segmentation (dataset #03), BSE-JSRT after lung segmentation (dataset #04), and segmented BSE-JSRT after exclusion of outliers by the t-SNE method (dataset #05). The results show that the pre-processed dataset obtained after lung segmentation, bone shadow exclusion, and filtering out the outliers by t-SNE (dataset #05) achieves the highest training rate and best accuracy in comparison to the other pre-processed datasets.

Deep Learning with Lung Segmentation and Bone Shadow Exclusion Techniques for Chest X-Ray Analysis of Lung Cancer

Dec 20, 2017

Abstract:Recent progress in computing, machine learning, and especially deep learning for image recognition has had a meaningful effect on the automatic detection of various diseases from chest X-ray images (CXRs). Here, the efficiency of lung segmentation and bone shadow exclusion techniques is demonstrated for the analysis of 2D CXRs by a deep learning approach to help radiologists identify suspicious lesions and nodules in lung cancer patients. Training and validation were performed on the original JSRT dataset (dataset #01), the BSE-JSRT dataset, i.e. the same JSRT dataset but without clavicle and rib shadows (dataset #02), the original JSRT dataset after segmentation (dataset #03), and the BSE-JSRT dataset after segmentation (dataset #04). The results demonstrate the high efficiency and usefulness of the considered pre-processing techniques even in a simplified configuration. The pre-processed dataset without bones (dataset #02) demonstrates much better accuracy and loss results than the other pre-processed datasets after lung segmentation (datasets #03 and #04).
