Yuriy Kochura

Batch Size Influence on Performance of Graphic and Tensor Processing Units during Training and Inference Phases

Dec 31, 2018

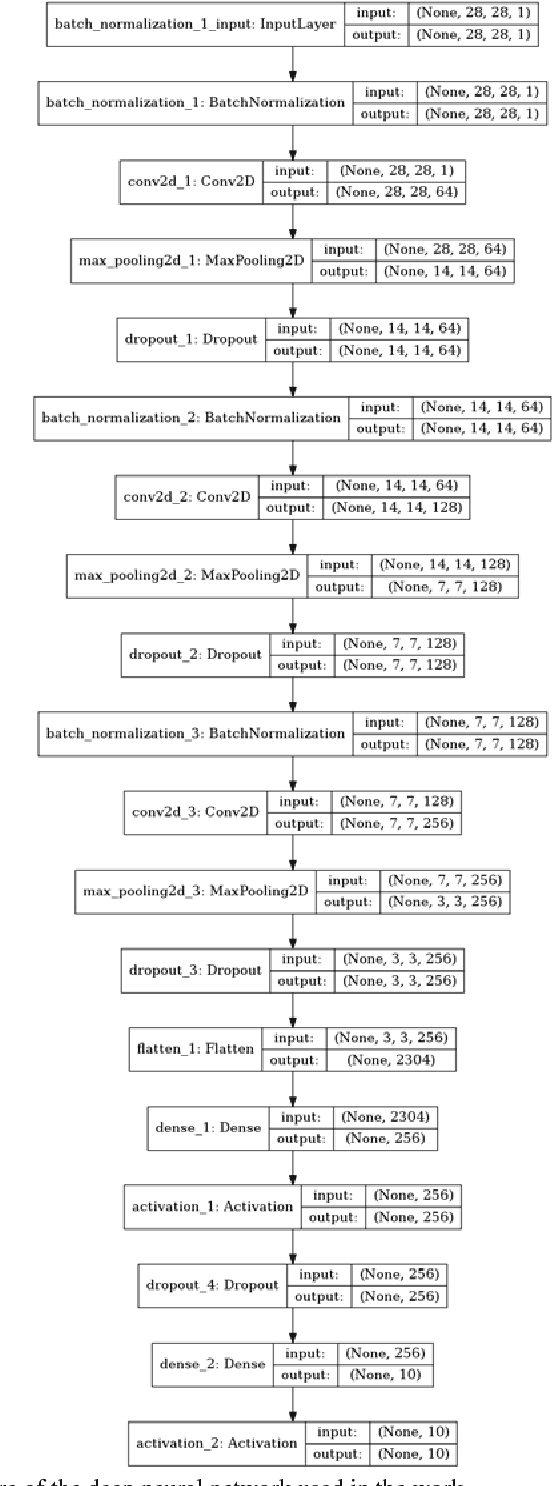

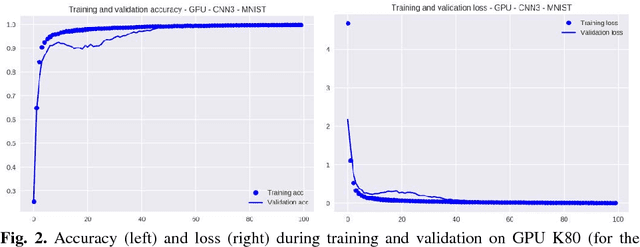

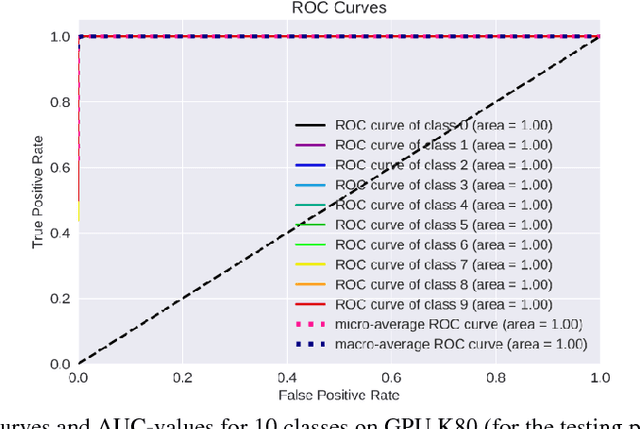

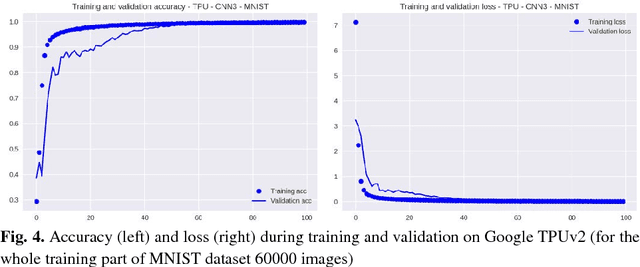

Abstract: The impact of the maximum possible batch size (chosen for better runtime) on the performance of graphics processing units (GPU) and tensor processing units (TPU) during the training and inference phases is investigated. Numerous runs of the selected deep neural network (DNN) were performed on the standard MNIST and Fashion-MNIST datasets. A significant speedup was obtained even for extremely low-scale usage of Google TPUv2 units (8 cores only) in comparison to the quite powerful NVIDIA Tesla K80 GPU card: up to 10x for the training stage (without taking overheads into account) and up to 2x for the prediction stage (with and without taking overheads into account). The precise speedup values depend on the utilization level of the TPUv2 units and increase with the volume of data being processed; for the datasets used in this work (MNIST and Fashion-MNIST, with 28x28 images) the speedup was observed for batch sizes >512 images in the training phase and >40 000 images in the prediction phase. These results were obtained without detriment to the prediction accuracy and loss, which were equal for the GPU and TPU runs up to the 3rd significant digit for the MNIST dataset and up to the 2nd significant digit for the Fashion-MNIST dataset.
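The speedup figures in this abstract are ratios of runtimes on the two devices at a given batch size. A minimal sketch of that arithmetic follows; the function names `speedup` and `crossover_batch_size` and the shape of the `timings` mapping are hypothetical, introduced only for illustration, and are not taken from the paper.

```python
def speedup(baseline_seconds, candidate_seconds):
    """Runtime ratio: values above 1.0 mean the candidate device is faster."""
    if baseline_seconds <= 0 or candidate_seconds <= 0:
        raise ValueError("runtimes must be positive")
    return baseline_seconds / candidate_seconds


def crossover_batch_size(timings):
    """Return the smallest batch size at which the candidate beats the baseline.

    `timings` maps batch size -> (baseline_seconds, candidate_seconds).
    Returns None if the candidate is never faster.
    """
    for batch_size in sorted(timings):
        baseline_seconds, candidate_seconds = timings[batch_size]
        if speedup(baseline_seconds, candidate_seconds) > 1.0:
            return batch_size
    return None
```

With measured GPU runtimes as the baseline and TPU runtimes as the candidate, `crossover_batch_size` would report the batch size above which a speedup is observed. Whether overheads are included depends on how the runtimes were measured.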

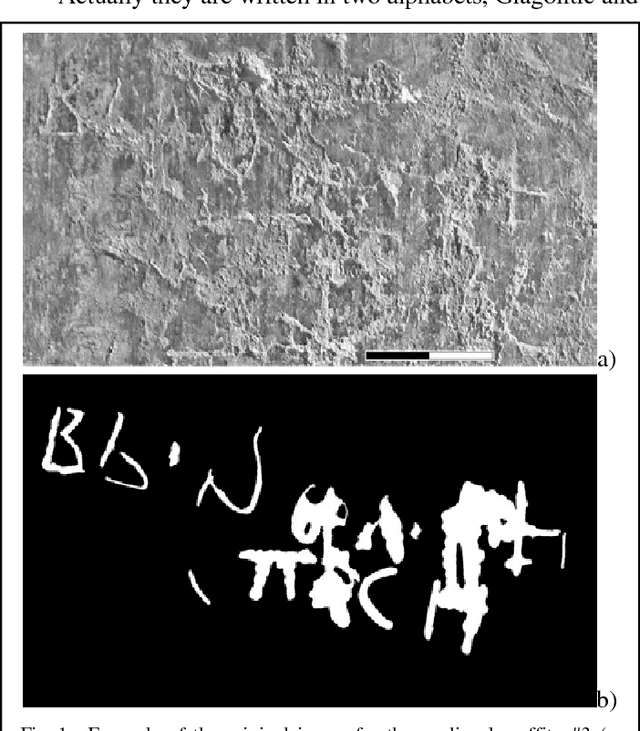

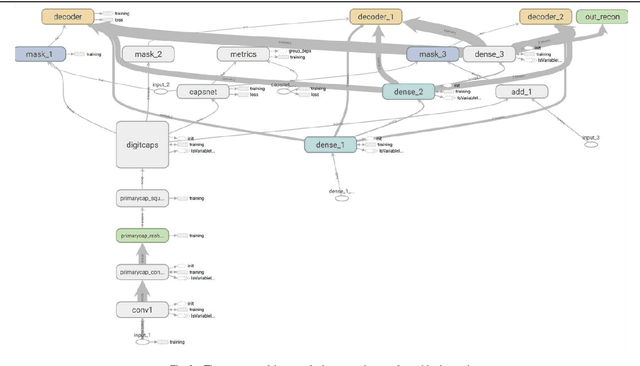

Capsule Deep Neural Network for Recognition of Historical Graffiti Handwriting

Sep 11, 2018

Abstract: Automatic recognition of historical letters (XI-XVIII centuries) carved on the stone walls of St. Sophia cathedral in Kyiv (Ukraine) was demonstrated by means of a capsule deep learning neural network. It was applied to the image dataset of carved Glagolitic and Cyrillic letters (CGCL), which was recently assembled and pre-processed for recognition and prediction by machine learning methods (https://www.kaggle.com/yoctoman/graffiti-st-sophia-cathedral-kyiv). The CGCL dataset contains >4000 images of glyphs of 34 letters, which are hard to recognize even for experts, in contrast to the notMNIST dataset with its better-quality images of 10 letters taken from different fonts. Despite the much worse quality of the CGCL dataset and its extremely low number of samples (in comparison to the notMNIST dataset), the capsule network model demonstrated much better results than the previously used convolutional neural network (CNN). Even without data augmentation, the validation accuracy was higher and the validation loss lower for the capsule network model than for the CNN. The area under curve (AUC) values for the receiver operating characteristic (ROC) were also higher for the capsule network model than for the CNN model: 0.88-0.93 (capsule network) and 0.50 (CNN) without data augmentation, 0.91-0.95 (capsule network) and 0.51 (CNN) with lossless data augmentation, and similar results of 0.91-0.93 (capsule network) and 0.9 (CNN) in the regime of lossless data augmentation only. The confusion matrices were much better for the capsule network than for the CNN model and gave much lower type I (false positive) and type II (false negative) values in all three regimes of data augmentation. These results support the previous claims that capsule-like networks make it possible to reduce error rates not only on the MNIST digit dataset, but also on the notMNIST letter dataset and the more complex CGCL handwriting graffiti letter dataset.
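For readers unfamiliar with the AUC metric quoted above, the following is a minimal sketch of how the area under the ROC curve can be computed for a single binary (for example, one-letter-versus-rest) problem. It uses the pairwise-ranking formulation rather than whatever tooling the authors used, which the abstract does not state; the function name `roc_auc` is hypothetical.

```python
import numpy as np


def roc_auc(labels, scores):
    """AUC as the probability that a random positive outscores a random negative.

    Ties between a positive and a negative score count as half a win.
    """
    labels = np.asarray(labels, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    positives = scores[labels]
    negatives = scores[~labels]
    if positives.size == 0 or negatives.size == 0:
        raise ValueError("need at least one positive and one negative sample")
    # Compare every positive score with every negative score.
    wins = (positives[:, None] > negatives[None, :]).sum()
    ties = (positives[:, None] == negatives[None, :]).sum()
    return float((wins + 0.5 * ties) / (positives.size * negatives.size))
```

An AUC of 0.5 corresponds to a classifier that ranks positives and negatives no better than chance, which is how the 0.50 and 0.51 values reported for the CNN can be read.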

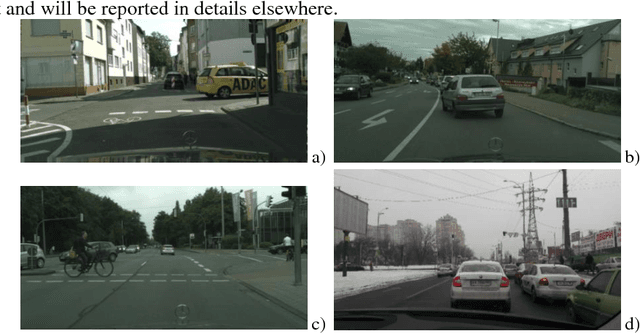

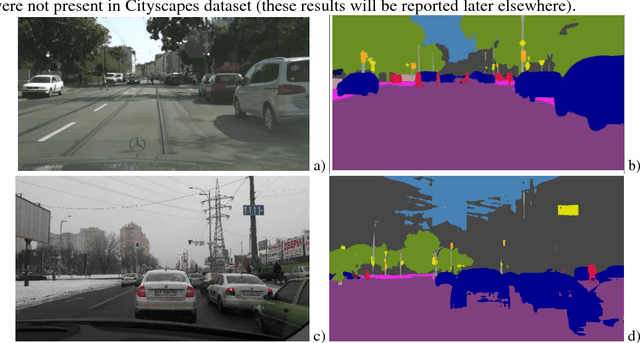

Performance Evaluation of Deep Learning Networks for Semantic Segmentation of Traffic Stereo-Pair Images

Jun 05, 2018

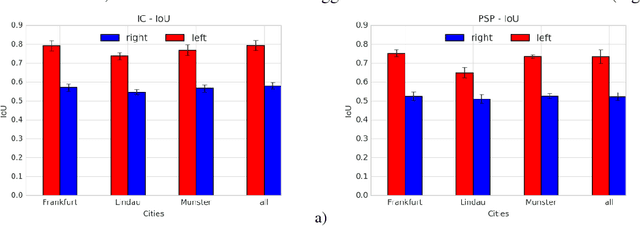

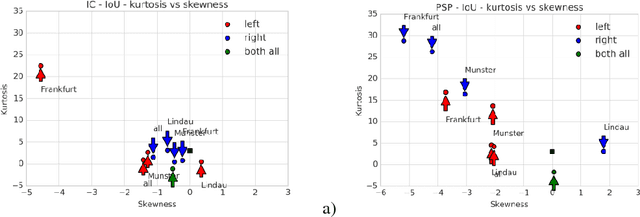

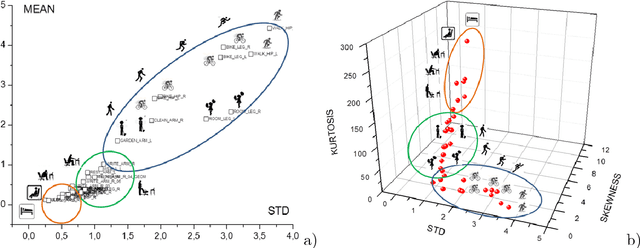

Abstract: Semantic image segmentation is one of the most demanding tasks, especially for the analysis of traffic conditions for self-driving cars. Here the results of applying several deep learning architectures (PSPNet and ICNet) to semantic segmentation of traffic stereo-pair images are presented. Images from the Cityscapes dataset and custom urban images were analyzed with respect to segmentation accuracy and image inference time. For the models pre-trained on the Cityscapes dataset, the inference time was equal within the limits of standard deviation, but the segmentation accuracy differed between cities and even between stereo channels. The distributions of accuracy (mean intersection over union, mIoU) values for each city and channel are asymmetric, long-tailed, and have many extreme outliers, especially for PSPNet in comparison to ICNet. Some statistical properties of these distributions (skewness, kurtosis) allow us to distinguish these two networks and raise the question of how the architecture of a deep learning network relates to the statistical distribution of its predicted results (mIoU here). The results demonstrate the different sensitivity of these networks to: (1) local street-view peculiarities in different cities, which should be taken into account during targeted fine-tuning of the models before their practical application, and (2) the right and left data channels in stereo pairs. For both networks, the difference in the predicted results (mIoU here) between the right and left data channels in stereo pairs exceeds the limits of statistical error relative to the mIoU values. This means that traffic stereo pairs can be used effectively not only for depth calculations (their usual use), but also as an additional data channel that can provide much more information about scene objects than simple duplication of the same street-view images.
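The quantities named in this abstract (mIoU, skewness, kurtosis) can be sketched as follows. This is an illustrative NumPy version under stated assumptions, not the authors' evaluation code: the function names are hypothetical, classes absent from both prediction and ground truth are skipped, and the moments are the plain population (biased) estimates.

```python
import numpy as np


def mean_iou(pred, target, num_classes):
    """Mean intersection over union over classes present in pred or target."""
    pred = np.asarray(pred).ravel()
    target = np.asarray(target).ravel()
    ious = []
    for cls in range(num_classes):
        pred_mask = pred == cls
        target_mask = target == cls
        union = np.logical_or(pred_mask, target_mask).sum()
        if union == 0:
            continue  # class absent from both maps: no IoU defined, skip it
        intersection = np.logical_and(pred_mask, target_mask).sum()
        ious.append(intersection / union)
    return float(np.mean(ious)) if ious else float("nan")


def skewness_and_excess_kurtosis(values):
    """Population skewness and excess kurtosis of a sample of mIoU values."""
    x = np.asarray(values, dtype=float)
    z = (x - x.mean()) / x.std()  # standardize with the population std
    return float(np.mean(z ** 3)), float(np.mean(z ** 4) - 3.0)
```

Computing `mean_iou` per image and then passing the per-city or per-channel values to `skewness_and_excess_kurtosis` gives the kind of summary the abstract refers to: a strongly negative skewness, for instance, would indicate a long tail of poorly segmented images.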

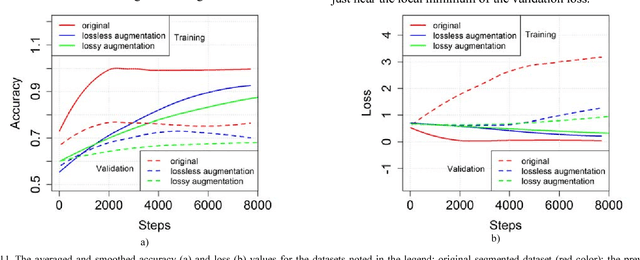

Chest X-Ray Analysis of Tuberculosis by Deep Learning with Segmentation and Augmentation

Mar 03, 2018

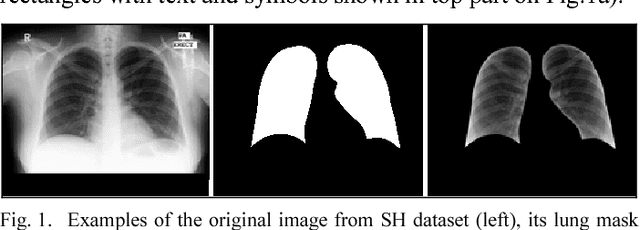

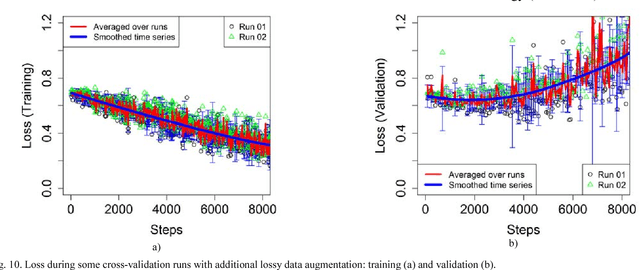

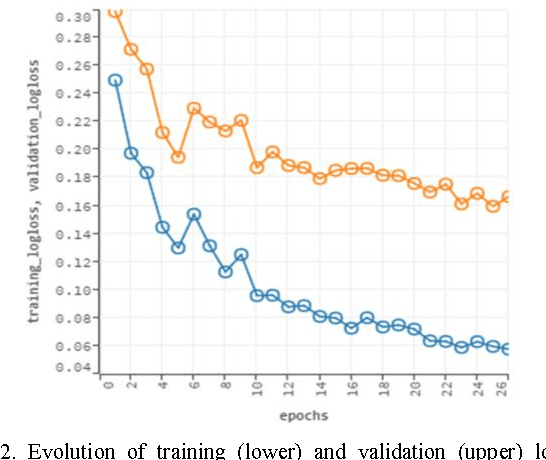

Abstract: The results of chest X-ray (CXR) analysis of 2D images to obtain statistically reliable predictions (presence of tuberculosis) by computer-aided diagnosis (CADx) on the basis of deep learning are presented. They demonstrate the efficiency of lung segmentation and of lossless and lossy data augmentation for CADx of tuberculosis by a deep convolutional neural network (CNN), even when applied to a small and not well-balanced dataset. The CNN is able to train (despite overfitting) on the pre-processed dataset obtained after lung segmentation, in contrast to the original non-segmented dataset. Lossless data augmentation of the segmented dataset leads to the lowest validation loss (without overfitting) and nearly the same accuracy (within the limits of standard deviation) in comparison to the original dataset and the other pre-processed datasets after lossy data augmentation. Additional limited lossy data augmentation results in a lower validation loss, but with a decrease in validation accuracy. In conclusion, besides more complex deep CNNs and bigger datasets, better progress of CADx even for small and not well-balanced datasets could be obtained by better segmentation, data augmentation, dataset stratification, and exclusion of non-evident outliers.

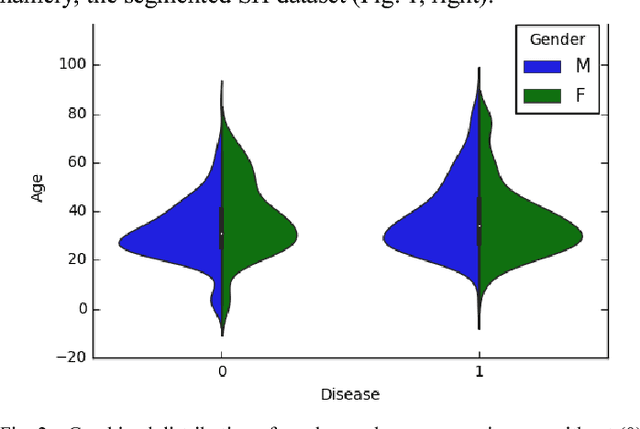

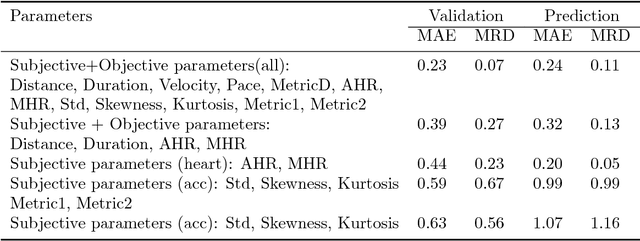

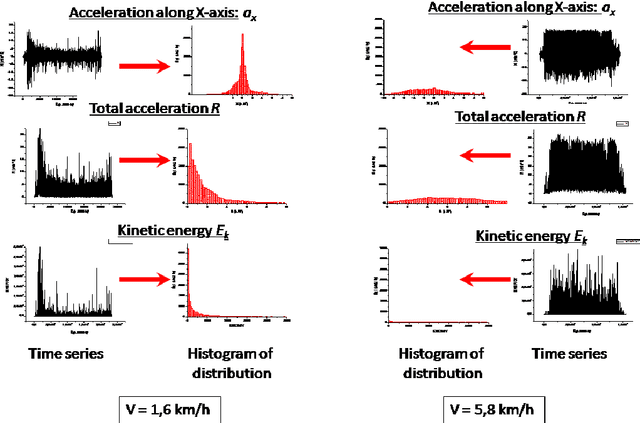

Deep Learning for Fatigue Estimation on the Basis of Multimodal Human-Machine Interactions

Dec 30, 2017

Abstract: A new method is proposed to monitor the level of current physical load and accumulated fatigue by several objective and subjective characteristics. It was applied to a dataset intended for estimating physical load and fatigue. The data from peripheral sensors (accelerometer, GPS, gyroscope, magnetometer) and a brain-computer interface (electroencephalography) were collected, integrated, and analyzed by several statistical and machine learning methods (moment analysis, cluster analysis, principal component analysis, etc.). Hypothesis 1 was presented and proved: physical activity can be classified not only by objective parameters, but also by subjective parameters. Hypothesis 2 (experienced physical load and subsequent restoration as fatigue level can be estimated quantitatively, and distinctive patterns can be recognized) was presented, and some ways to prove it were demonstrated. Several "physical load" and "fatigue" metrics were proposed. The results presented make it possible to extend the application of machine learning methods to the characterization of complex human activity patterns (for example, to estimate actual physical load and fatigue, and to give cautions and advice).

Performance Analysis of Open Source Machine Learning Frameworks for Various Parameters in Single-Threaded and Multi-Threaded Modes

Aug 29, 2017

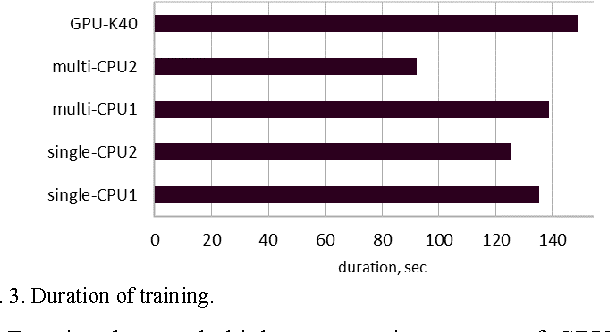

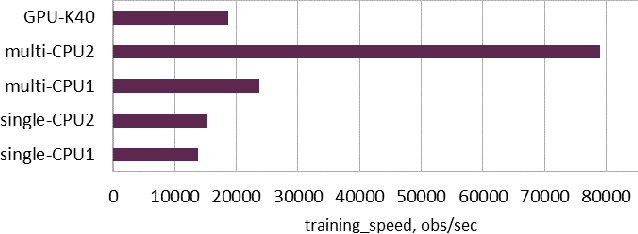

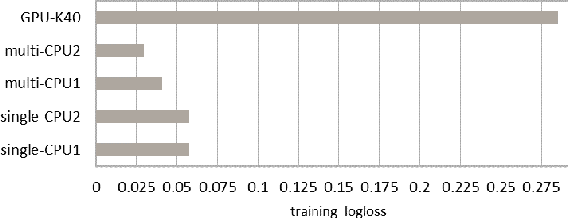

Abstract: The basic features of some of the most versatile and popular open source frameworks for machine learning (TensorFlow, Deep Learning4j, and H2O) are considered and compared. Their comparative analysis was performed and conclusions were made as to the advantages and disadvantages of these platforms. The performance tests for the de facto standard MNIST data set were carried out on the H2O framework for deep learning algorithms designed for CPU and GPU platforms in single-threaded and multithreaded modes of operation. Also, we present the results of testing neural network architectures on the H2O platform for various activation functions, stopping metrics, and other parameters of the machine learning algorithm. It was demonstrated for the use case of the MNIST database of handwritten digits in single-threaded mode that blind selection of these parameters can hugely increase the runtime (by 2-3 orders of magnitude) without a significant increase in precision. This result can have a crucial influence on the optimization of available and new machine learning methods, especially for image recognition problems.

* 15 pages, 11 figures, 4 tables; this paper summarizes the activities which were started recently and briefly described in the previous conference presentations arXiv:1706.02248 and arXiv:1707.04940; it is accepted for the Springer book series "Advances in Intelligent Systems and Computing"

Comparative Performance Analysis of Neural Networks Architectures on H2O Platform for Various Activation Functions

Jul 16, 2017

Abstract: Deep learning (deep structured learning, hierarchical learning or deep machine learning) is a branch of machine learning based on a set of algorithms that attempt to model high-level abstractions in data by using multiple processing layers with complex structures or otherwise composed of multiple non-linear transformations. In this paper, we present the results of testing neural network architectures on the H2O platform for various activation functions, stopping metrics, and other parameters of the machine learning algorithm. It was demonstrated for the use case of the MNIST database of handwritten digits in single-threaded mode that blind selection of these parameters can hugely increase the runtime (by 2-3 orders of magnitude) without a significant increase in precision. This result can have a crucial influence on the optimization of available and new machine learning methods, especially for image recognition problems.

Comparative Analysis of Open Source Frameworks for Machine Learning with Use Case in Single-Threaded and Multi-Threaded Modes

Jun 07, 2017

Abstract: The basic features of some of the most versatile and popular open source frameworks for machine learning (TensorFlow, Deep Learning4j, and H2O) are considered and compared. Their comparative analysis was performed and conclusions were made as to the advantages and disadvantages of these platforms. The performance tests for the de facto standard MNIST data set were carried out on the H2O framework for deep learning algorithms designed for CPU and GPU platforms in single-threaded and multithreaded modes of operation.

* 4 pages, 6 figures, 4 tables; XIIth International Scientific and Technical Conference on Computer Sciences and Information Technologies (CSIT 2017), Lviv, Ukraine
