Peiyu Zeng

Multi-robot workspace design and motion planning for package sorting

Dec 15, 2024

Abstract: Robotic systems are routinely used in the logistics industry to enhance operational efficiency, but the design of robot workspaces remains a complex and manual task, which limits a system's ability to adapt to changing demands. This paper aims to automate robot workspace design by proposing a computational framework that generates a budget-minimizing layout by selectively placing stationary robots, including robotic arms and conveyor belts, on a floor grid, and plans their cooperative motions to sort packages from given input and output locations. We propose a hierarchical solving strategy that first optimizes the layout to minimize the hardware budget with a subgraph optimization subject to network flow constraints, followed by task allocation and motion planning based on the generated layout. In addition, we demonstrate how to model conveyor belts as manipulators with multiple end effectors to integrate them into our design and planning framework. We evaluated our framework on a set of simulated scenarios and showed that it can generate optimal layouts and collision-free motion trajectories, adapting to different available robots, cost assignments, and box payloads.

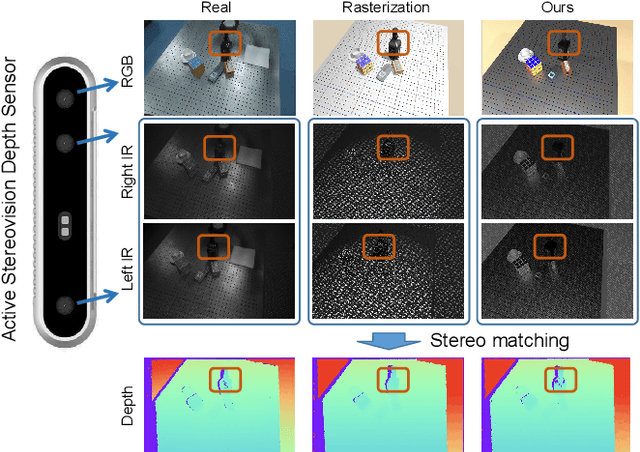

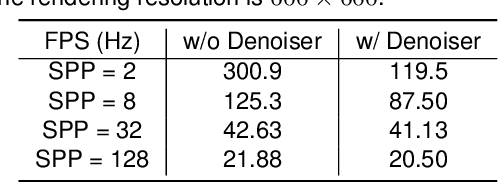

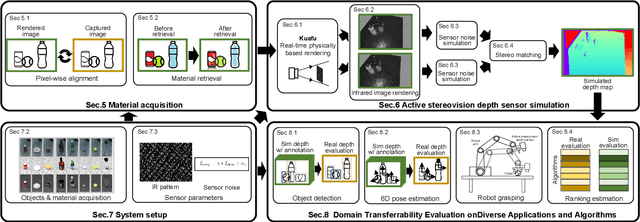

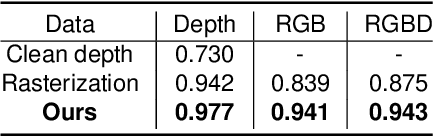

Close the Visual Domain Gap by Physics-Grounded Active Stereovision Depth Sensor Simulation

Feb 07, 2022

Abstract: In this paper, we focus on the simulation of active stereovision depth sensors, which are popular in both academic and industry communities. Inspired by the underlying mechanism of the sensors, we designed a fully physics-grounded simulation pipeline, which includes material acquisition, ray-tracing-based infrared (IR) image rendering, IR noise simulation, and depth estimation. The pipeline is able to generate depth maps with material-dependent error patterns similar to those of a real depth sensor. We conduct extensive experiments to show that perception algorithms and reinforcement learning policies trained in our simulation platform transfer well to real-world test cases without any fine-tuning. Furthermore, due to the high degree of realism of this simulation, our depth sensor simulator can be used as a convenient testbed to evaluate algorithm performance in the real world, which can substantially reduce the human effort in developing robotic algorithms. The entire pipeline has been integrated into the SAPIEN simulator and is open-sourced to promote research in the vision and robotics communities.
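
The final pipeline stage named above, depth estimation, can be illustrated with the standard triangulation relation for a rectified stereo pair, z = f · b / d. The sketch below is not taken from the paper or from SAPIEN. It assumes the disparity map has already been computed by some stereo matcher, and the function name `disparity_to_depth` and its parameters are hypothetical.

```python
def disparity_to_depth(disparity_px, focal_px, baseline_m):
    """Convert a disparity map (pixels) to depth (meters) with z = f * b / d.

    Assumes a rectified stereo pair. All names here are illustrative,
    not part of any simulator API.
    """
    depth = []
    for row in disparity_px:
        # A non-positive disparity means no valid match; mark depth as 0.0.
        depth.append([focal_px * baseline_m / d if d > 0 else 0.0 for d in row])
    return depth


# Example: 400 px focal length and a 5 cm baseline between the two IR cameras.
depth = disparity_to_depth([[20.0, 10.0], [0.0, 5.0]], focal_px=400.0, baseline_m=0.05)
```

Because depth is inversely proportional to disparity, a fixed matching error in pixels produces a larger depth error for distant surfaces. This is one reason noise in the rendered IR images shows up as depth-dependent error in the final depth map.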
