Paul Schmitt

nuReality: A VR environment for research of pedestrian and autonomous vehicle interactions

Jan 12, 2022

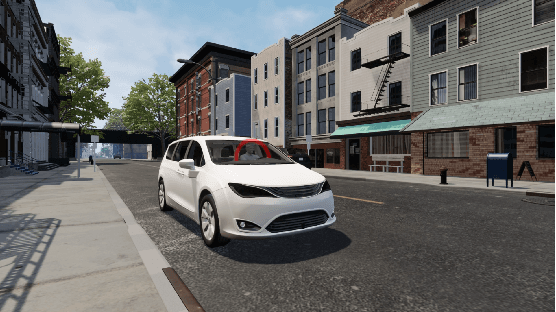

Abstract: We present nuReality, a virtual reality (VR) environment designed to test how effectively vehicular behaviors communicate intent during interactions between autonomous vehicles (AVs) and pedestrians at urban intersections. In this project we focus on expressive behaviors as a means for pedestrians to readily recognize the underlying intent of the AV's movements. VR is well suited to testing these situations because it is immersive and places subjects in potentially dangerous scenarios without risk. nuReality provides a novel and immersive virtual reality environment that includes numerous visual details (road and building texturing, parked cars, swaying tree limbs) as well as auditory details (birds chirping, cars honking in the distance, people talking). In these files we present the nuReality environment, its 10 unique vehicle behavior scenarios, and the Unreal Engine and Autodesk Maya source files for each scenario. The files are publicly released as open source at www.nuReality.org to support the academic community studying the critical AV-pedestrian interaction.

Understanding Model Drift in a Large Cellular Network

Sep 07, 2021

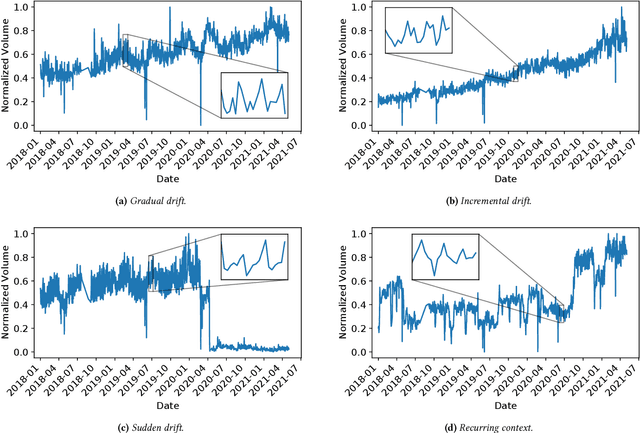

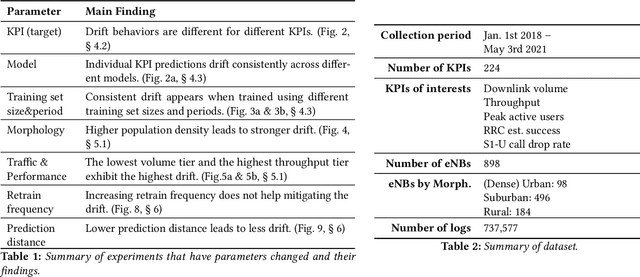

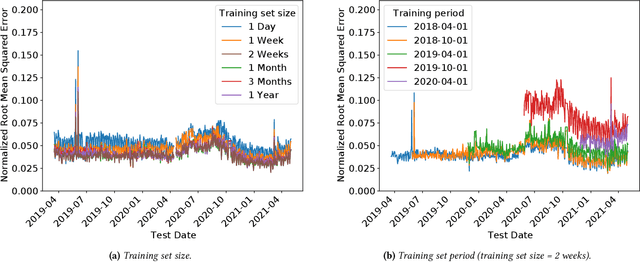

Abstract: Operational networks are increasingly using machine learning models for a variety of tasks, including detecting anomalies, inferring application performance, and forecasting demand. Accurate models are important, yet accuracy can degrade over time due to concept drift, whereby either the characteristics of the data change over time (data drift) or the relationship between the features and the target predictor changes over time (model drift). Drift is important to detect because changes in properties of the underlying data or relationships to the target prediction can require model retraining, which can be time-consuming and expensive. Concept drift occurs in operational networks for a variety of reasons, ranging from software upgrades to seasonality to changes in user behavior. Yet, despite the prevalence of drift in networks, its extent and effects on prediction accuracy have not been extensively studied. This paper presents an initial exploration into concept drift in a large cellular network in the United States for a major metropolitan area in the context of demand forecasting. We find that concept drift arises largely due to data drift, and it appears across different key performance indicators (KPIs), models, training set sizes, and time intervals. We identify the sources of concept drift for the particular problem of forecasting downlink volume. Weekly and seasonal patterns introduce both high- and low-frequency model drift, while disasters and upgrades result in sudden drift due to exogenous shocks. Regions with high population density, lower traffic volumes, and higher speeds also tend to correlate with more concept drift. The features that contribute most significantly to concept drift are User Equipment (UE) downlink packets, UE uplink packets, and Real-time Transport Protocol (RTP) total received packets.
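
To make the notion of data drift concrete, the following is a minimal sketch of one common way to flag a change in a feature's distribution: the two-sample Kolmogorov-Smirnov statistic, which measures the largest gap between the empirical distributions of a reference window and a later window. This is not the method used in the paper, which the abstract does not specify. The function names, the threshold of 0.3, and the sample values are all hypothetical and chosen only for illustration.

```python
from bisect import bisect_right


def ks_statistic(reference, current):
    """Two-sample Kolmogorov-Smirnov statistic.

    Returns the largest absolute gap between the empirical CDFs of the
    two samples. Both inputs must be non-empty sequences of numbers.
    """
    ref = sorted(reference)
    cur = sorted(current)
    max_gap = 0.0
    # Both empirical CDFs are step functions that only change at sample
    # points, so checking every sample point finds the largest gap.
    for x in ref + cur:
        cdf_ref = bisect_right(ref, x) / len(ref)
        cdf_cur = bisect_right(cur, x) / len(cur)
        max_gap = max(max_gap, abs(cdf_ref - cdf_cur))
    return max_gap


def has_data_drift(reference, current, threshold=0.3):
    """Flag drift when the KS statistic exceeds a threshold.

    The default threshold is an arbitrary illustrative value, not a
    recommendation; in practice it would be tuned or replaced by a
    significance test.
    """
    return ks_statistic(reference, current) > threshold


if __name__ == "__main__":
    # Made-up values standing in for one feature (for example, a packet
    # count) observed in a training window and in a later window.
    training_window = [10, 11, 12, 13, 14]
    later_window = [20, 21, 22, 23, 24]
    print(ks_statistic(training_window, later_window))
    print(has_data_drift(training_window, later_window))
```

A check like this only looks at the inputs, so it can indicate data drift but says nothing by itself about whether the relationship between features and target has changed; detecting that requires comparing predictions against observed outcomes.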

Beyond Accuracy: Cost-Aware Data Representation Exploration for Network Traffic Model Performance

Oct 27, 2020

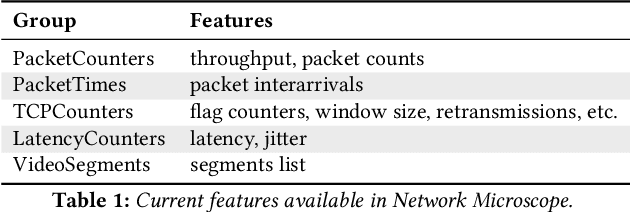

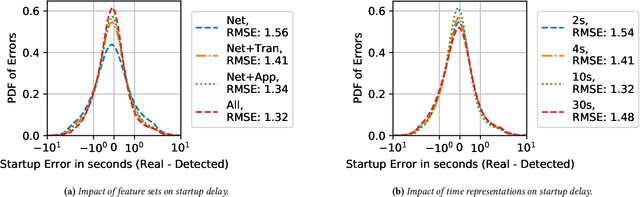

Abstract: In this paper, we explore how different representations of network traffic affect the performance of machine learning models for a range of network management tasks, including application performance diagnosis and attack detection. We study the relationship between the systems-level costs of different representations of network traffic and the ultimate target performance metric (e.g., accuracy) of the models trained from these representations. We demonstrate the benefit of exploring a range of representations of network traffic and present Network Microscope, a proof-of-concept reference implementation that both monitors network traffic at high speed and transforms the traffic in real time to produce a variety of representations for input to machine learning models. Systems like Network Microscope can ultimately help network operators better explore the design space of data representation for learning, balancing systems costs related to feature extraction and model training against resulting model performance.