Renata Teixeira

Predicting IPv4 Services Across All Ports

Mar 02, 2023

Abstract: Internet-wide scanning is commonly used to understand the topology and security of the Internet. However, IPv4 Internet scans have been limited to scanning only a subset of services -- exhaustively scanning all IPv4 services is too costly and no existing bandwidth-saving frameworks are designed to scan IPv4 addresses across all ports. In this work we introduce GPS, a system that efficiently discovers Internet services across all ports. GPS runs a predictive framework that learns from extremely small sample sizes and is highly parallelizable, allowing it to quickly find patterns between services across all 65K ports and a myriad of features. GPS computes service predictions in 13 minutes (four orders of magnitude faster than prior work) and finds 92.5% of services across all ports with 131x less bandwidth, and 204x more precision, compared to exhaustive scanning. GPS is the first work to show that, given at least two responsive IP addresses on a port to train from, predicting the majority of services across all ports is possible and practical.

Beyond Accuracy: Cost-Aware Data Representation Exploration for Network Traffic Model Performance

Oct 27, 2020

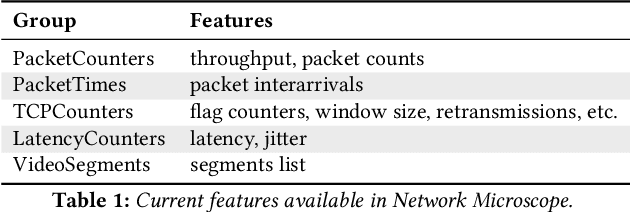

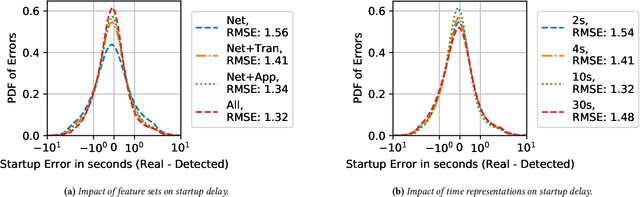

Abstract: In this paper, we explore how different representations of network traffic affect the performance of machine learning models for a range of network management tasks, including application performance diagnosis and attack detection. We study the relationship between the systems-level costs of different representations of network traffic and the ultimate target performance metric -- e.g., accuracy -- of the models trained from these representations. We demonstrate the benefit of exploring a range of representations of network traffic and present Network Microscope, a proof-of-concept reference implementation that both monitors network traffic at high speed and transforms the traffic in real time to produce a variety of representations for input to machine learning models. Systems like Network Microscope can ultimately help network operators better explore the design space of data representation for learning, balancing systems costs related to feature extraction and model training against resulting model performance.
