Francesco Bronzino

Ironing the Graphs: Toward a Correct Geometric Analysis of Large-Scale Graphs

Jul 31, 2024

Abstract: Graph embedding approaches attempt to project graphs into geometric entities, i.e., manifolds. The idea is that the geometric properties of the projected manifolds are helpful in the inference of graph properties. However, an incorrect choice of embedding manifold can lead to incorrect geometric inference. In this paper, we argue that classical embedding techniques cannot lead to correct geometric interpretation because they miss the curvature at each point of the manifold. We advocate that, for correct geometric interpretation, the graph should be embedded over regular constant curvature manifolds. To this end, we present an embedding approach, the discrete Ricci flow graph embedding (dRfge), based on the discrete Ricci flow, which adapts the distances between nodes in a graph so that the graph can be embedded onto a constant curvature manifold that is homogeneous and isotropic, i.e., all directions are equivalent and distances are comparable, resulting in correct geometric interpretations. A major contribution of this paper is that, for the first time, we prove the convergence of discrete Ricci flow to a constant curvature and stable distance metrics over the edges. A drawback of the discrete Ricci flow is its high computational complexity, which has prevented its use in large-scale graph analysis. Another contribution of this paper is a new algorithmic solution that makes it feasible to calculate the Ricci flow for graphs of up to 50k nodes and beyond. The intuitions behind the discrete Ricci flow make it possible to obtain new insights into the structure of large-scale graphs. We demonstrate this through a case study analyzing the internet connectivity structure between countries at the BGP level.

Understanding Model Drift in a Large Cellular Network

Sep 07, 2021

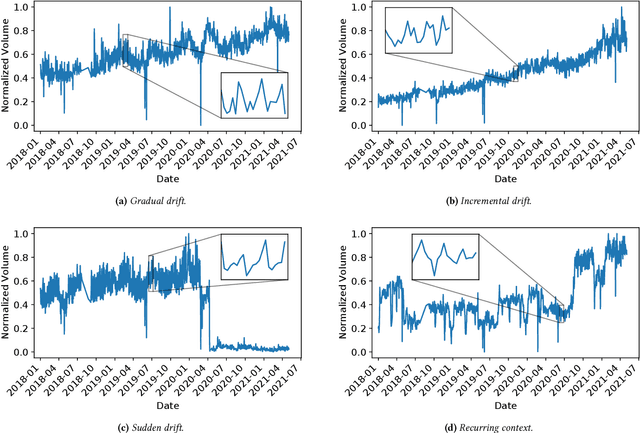

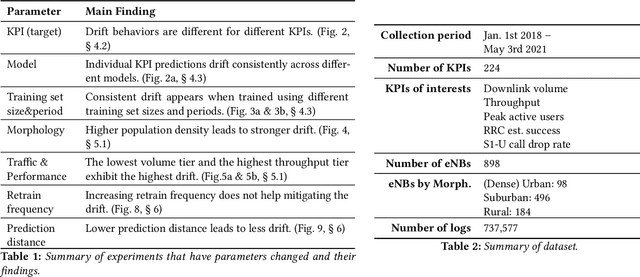

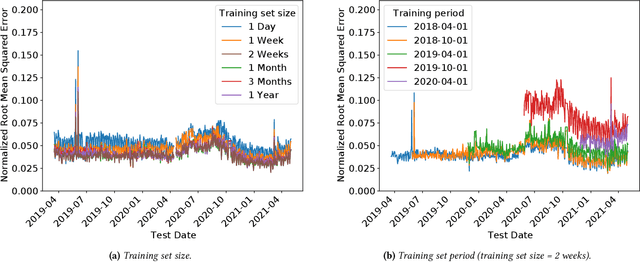

Abstract: Operational networks are increasingly using machine learning models for a variety of tasks, including detecting anomalies, inferring application performance, and forecasting demand. Accurate models are important, yet accuracy can degrade over time due to concept drift, whereby either the characteristics of the data change over time (data drift) or the relationship between the features and the target predictor changes over time (model drift). Drift is important to detect because changes in properties of the underlying data or relationships to the target prediction can require model retraining, which can be time-consuming and expensive. Concept drift occurs in operational networks for a variety of reasons, ranging from software upgrades to seasonality to changes in user behavior. Yet, despite the prevalence of drift in networks, its extent and effects on prediction accuracy have not been extensively studied. This paper presents an initial exploration into concept drift in a large cellular network in the United States for a major metropolitan area in the context of demand forecasting. We find that concept drift arises largely due to data drift, and it appears across different key performance indicators (KPIs), models, training set sizes, and time intervals. We identify the sources of concept drift for the particular problem of forecasting downlink volume. Weekly and seasonal patterns introduce both high- and low-frequency model drift, while disasters and upgrades result in sudden drift due to exogenous shocks. Regions with high population density, lower traffic volumes, and higher speeds also tend to correlate with more concept drift. The features that contribute most significantly to concept drift are User Equipment (UE) downlink packets, UE uplink packets, and Real-time Transport Protocol (RTP) total received packets.

Beyond Accuracy: Cost-Aware Data Representation Exploration for Network Traffic Model Performance

Oct 27, 2020

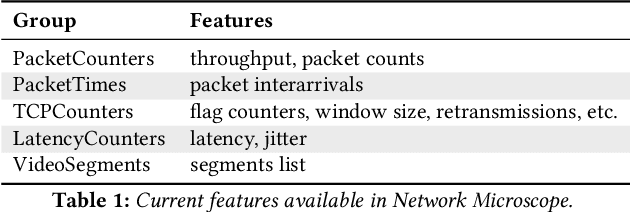

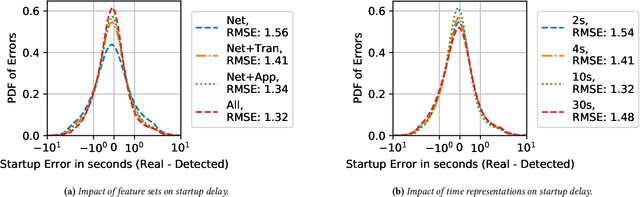

Abstract: In this paper, we explore how different representations of network traffic affect the performance of machine learning models for a range of network management tasks, including application performance diagnosis and attack detection. We study the relationship between the systems-level costs of different representations of network traffic and the ultimate target performance metric (e.g., accuracy) of the models trained on these representations. We demonstrate the benefit of exploring a range of representations of network traffic and present Network Microscope, a proof-of-concept reference implementation that both monitors network traffic at high speed and transforms the traffic in real time to produce a variety of representations for input to machine learning models. Systems like Network Microscope can ultimately help network operators better explore the design space of data representation for learning, balancing systems costs related to feature extraction and model training against resulting model performance.
