Paul J. Goulart

A neural network-based approach to hybrid systems identification for control

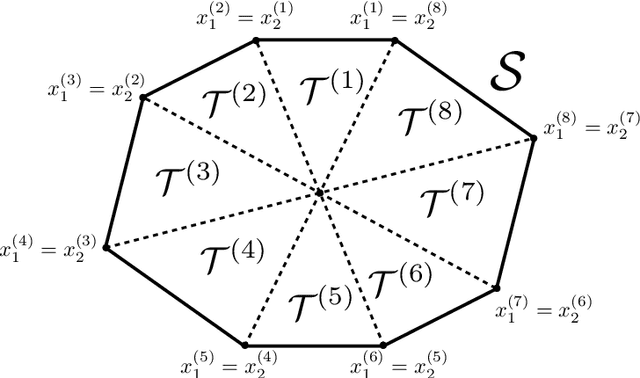

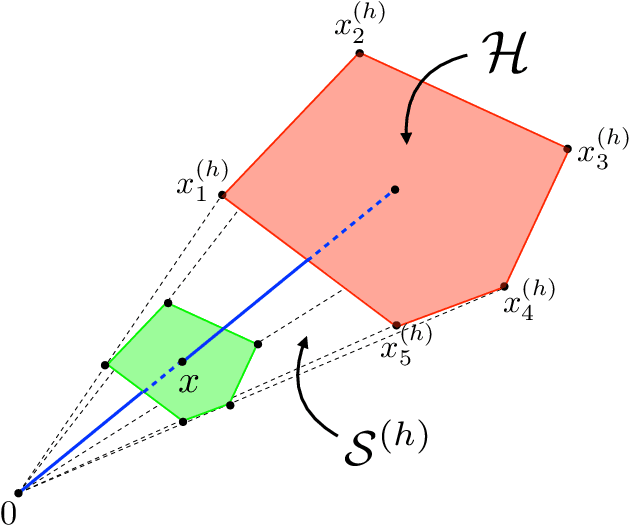

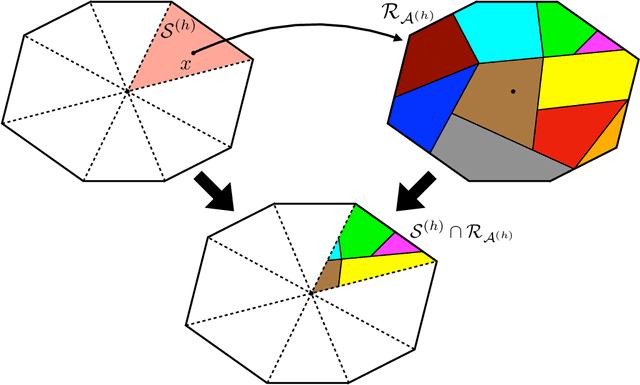

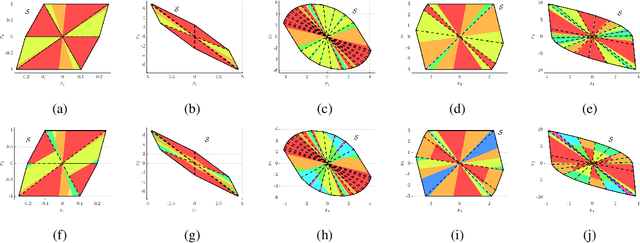

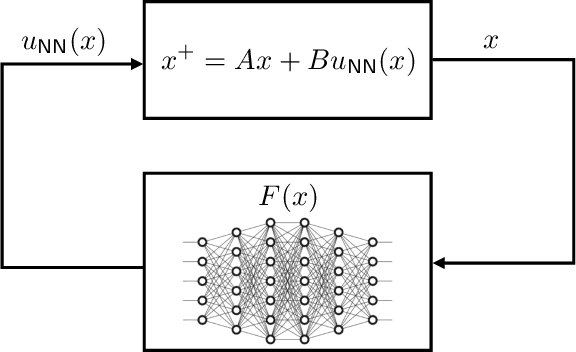

Apr 02, 2024

Abstract: We consider the problem of designing a machine learning-based model of an unknown dynamical system from a finite number of (state-input)-successor state data points, such that the model obtained is also suitable for optimal control design. We propose a specific neural network (NN) architecture that yields a hybrid system with piecewise-affine dynamics that is differentiable with respect to the network's parameters, thereby enabling the use of derivative-based training procedures. We show that a careful choice of our NN's weights produces a hybrid system model with structural properties that are highly favourable when used as part of a finite horizon optimal control problem (OCP). Specifically, we show that optimal solutions with strong local optimality guarantees can be computed via nonlinear programming, in contrast to classical OCPs for general hybrid systems which typically require mixed-integer optimization. In addition to being well-suited for optimal control design, numerical simulations illustrate that our NN-based technique enjoys very similar performance to state-of-the-art system identification methodologies for hybrid systems and it is competitive on nonlinear benchmarks.
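As a rough illustration of the piecewise-affine structure this abstract refers to, the sketch below evaluates a generic one-hidden-layer ReLU model and extracts the affine piece that is active at a given (state, input) point. It is not the specific architecture proposed in the paper; the function names `pwa_step` and `local_affine_piece` and all weights are hypothetical, chosen only for illustration.

```python
def _preactivations(z, W, b):
    """Hidden-layer pre-activations W z + b for a point z = (state, input)."""
    return [sum(w * zi for w, zi in zip(row, z)) + bi for row, bi in zip(W, b)]


def pwa_step(z, W, b, v, c):
    """Generic ReLU model: predicted successor state v^T relu(W z + b) + c."""
    return sum(vj * max(0.0, p) for vj, p in zip(v, _preactivations(z, W, b))) + c


def local_affine_piece(z, W, b, v, c):
    """Return (gain, offset) of the affine piece active at z.

    Inside the region where the set of active ReLUs does not change,
    the model equals gain . z + offset exactly.
    """
    active = [p > 0.0 for p in _preactivations(z, W, b)]
    gain = [
        sum(vj * row[k] for vj, row, a in zip(v, W, active) if a)
        for k in range(len(z))
    ]
    offset = c + sum(vj * bj for vj, bj, a in zip(v, b, active) if a)
    return gain, offset


# Hypothetical weights: two hidden units, z = (state, input).
W = [[1.0, 0.5], [-1.0, 0.25]]
b = [0.0, 0.5]
v = [0.8, -0.3]
c = 0.1

z = [1.0, 0.0]
gain, offset = local_affine_piece(z, W, b, v, c)
affine_prediction = sum(g * zi for g, zi in zip(gain, z)) + offset
```

Different points can activate different hidden units and therefore land on different affine pieces, which is what makes the overall model a hybrid (piecewise-affine) system.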

Neural network controllers for uncertain linear systems

Apr 27, 2022

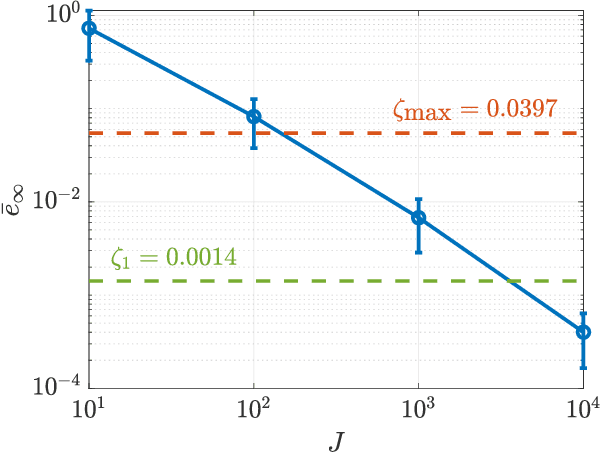

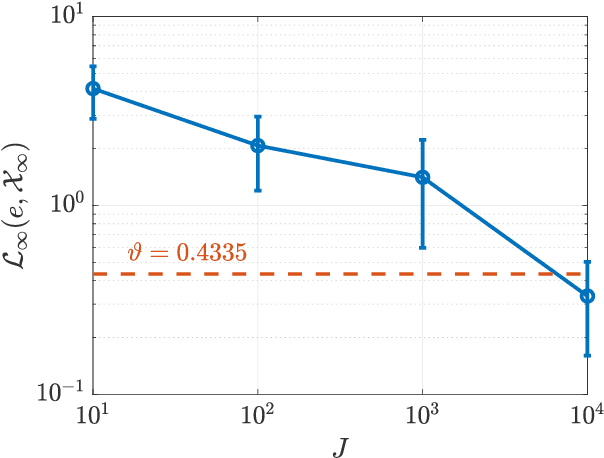

Abstract: We consider the design of reliable neural network (NN)-based approximations of traditional stabilizing controllers for linear systems affected by polytopic uncertainty, including controllers with variable structure and those based on a minimal selection policy. We develop a systematic procedure to certify the closed-loop stability and performance of a polytopic system when a rectified linear unit (ReLU)-based approximation replaces such traditional controllers. We provide sufficient conditions to ensure stability involving the worst-case approximation error and the Lipschitz constant characterizing the error function between ReLU-based and traditional controller-based state-to-input mappings, and further provide offline, mixed-integer optimization-based methods that allow us to compute those quantities exactly.

Personalized incentives as feedback design in generalized Nash equilibrium problems

Mar 24, 2022

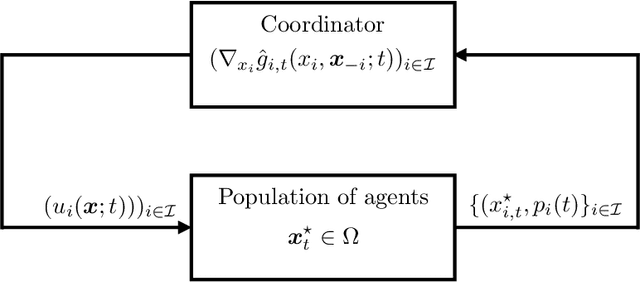

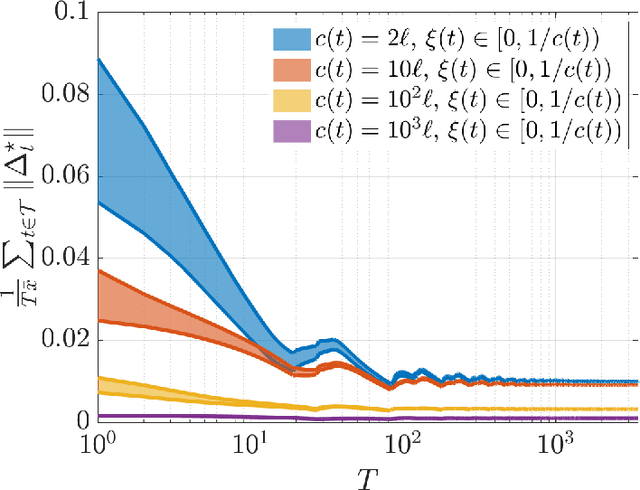

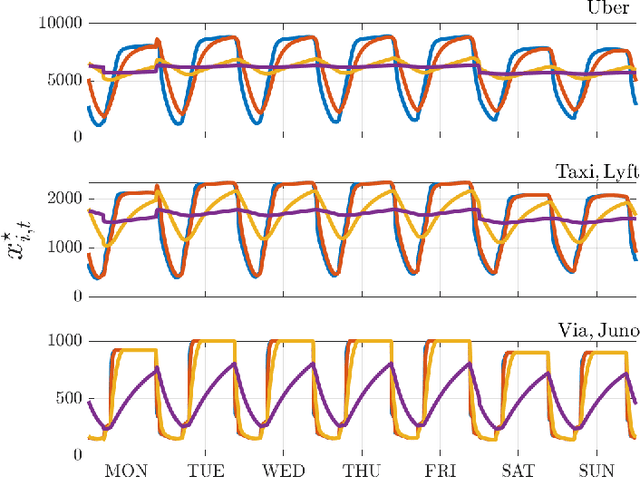

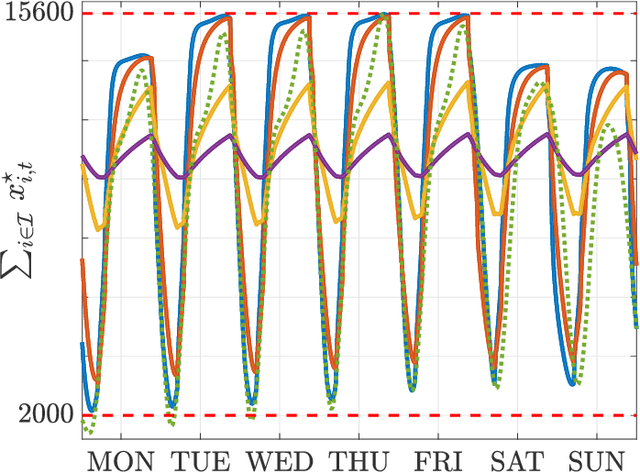

Abstract: We investigate both stationary and time-varying, nonmonotone generalized Nash equilibrium problems that exhibit symmetric interactions among the agents, which are known to be potential. As may happen in practical cases, however, we envision a scenario in which the formal expression of the underlying potential function is not available, and we design a semi-decentralized Nash equilibrium seeking algorithm. In the proposed two-layer scheme, a coordinator iteratively integrates the (possibly noisy and sporadic) agents' feedback to learn the pseudo-gradients of the agents, and then designs personalized incentives for them. On their side, the agents receive those personalized incentives, compute a solution to an extended game, and then return feedback measurements to the coordinator. In the stationary setting, our algorithm returns a Nash equilibrium in case the coordinator is endowed with standard learning policies, while it returns a Nash equilibrium up to a constant, yet adjustable, error in the time-varying case. As a motivating application, we consider the ride-hailing service provided by several companies with mobility-as-a-service orchestration, necessary to both handle competition among firms and avoid traffic congestion, which is also adopted to run numerical experiments verifying our results.

Reliably-stabilizing piecewise-affine neural network controllers

Nov 22, 2021

Abstract: A common problem affecting neural network (NN) approximations of model predictive control (MPC) policies is the lack of analytical tools to assess the stability of the closed-loop system under the action of the NN-based controller. We present a general procedure to quantify the performance of such a controller, or to design minimum complexity NNs with rectified linear units (ReLUs) that preserve the desirable properties of a given MPC scheme. By quantifying the approximation error between NN-based and MPC-based state-to-input mappings, we first establish suitable conditions involving two key quantities, the worst-case error and the Lipschitz constant, guaranteeing the stability of the closed-loop system. We then develop an offline, mixed-integer optimization-based method to compute those quantities exactly. Together these techniques provide conditions sufficient to certify the stability and performance of a ReLU-based approximation of an MPC control law.
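To make the two key quantities in this abstract concrete, the sketch below estimates the worst-case error and the Lipschitz constant of the error function on a grid of sampled states. This is only a sampling-based illustration that yields lower bounds; the abstract describes computing both quantities exactly via mixed-integer optimization, which is not reproduced here. The saturated linear law standing in for an MPC policy, the gain `K`, and the deliberately imperfect ReLU approximation are all assumptions made for the example.

```python
def relu(s):
    return max(0.0, s)


K = 2.0  # hypothetical feedback gain


def baseline_controller(x):
    """Saturated linear law u = sat(K x), a toy stand-in for an MPC policy."""
    return max(-1.0, min(1.0, K * x))


def relu_controller(x):
    """Imperfect ReLU approximation: it saturates at 0.8 instead of 1.0."""
    return relu(K * x + 1.0) - relu(K * x - 0.8) - 1.0


def approximation_error(x):
    """Error function between the two state-to-input mappings."""
    return relu_controller(x) - baseline_controller(x)


def sampled_bounds(lo=-2.0, hi=2.0, steps=400):
    """Grid-based lower bounds on the worst-case error and Lipschitz constant."""
    grid = [lo + (hi - lo) * i / steps for i in range(steps + 1)]
    errs = [approximation_error(x) for x in grid]
    worst = max(abs(e) for e in errs)
    lipschitz = max(
        abs(errs[i + 1] - errs[i]) / (grid[i + 1] - grid[i])
        for i in range(steps)
    )
    return worst, lipschitz


worst_case_error, lipschitz_estimate = sampled_bounds()
```

For this toy pair the error is zero until `K * x` reaches 0.8, then decreases with slope `-K` down to -0.2, so the estimates come out close to 0.2 and 2.0 respectively.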

Learning equilibria with personalized incentives in a class of nonmonotone games

Nov 06, 2021

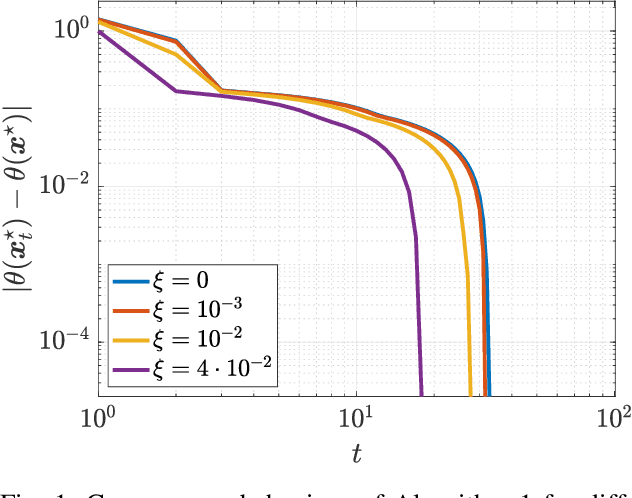

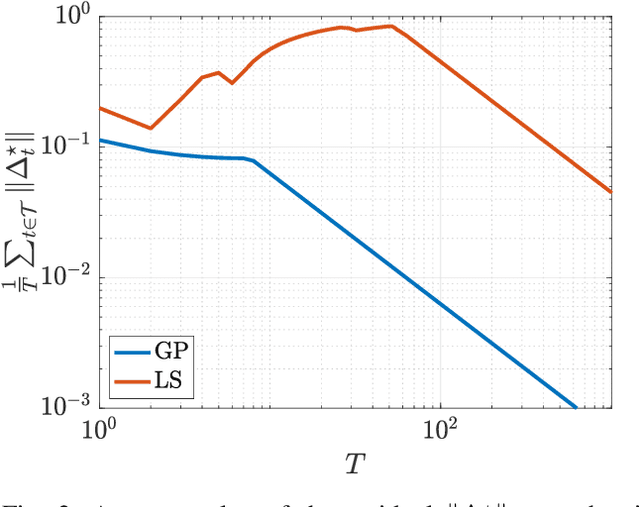

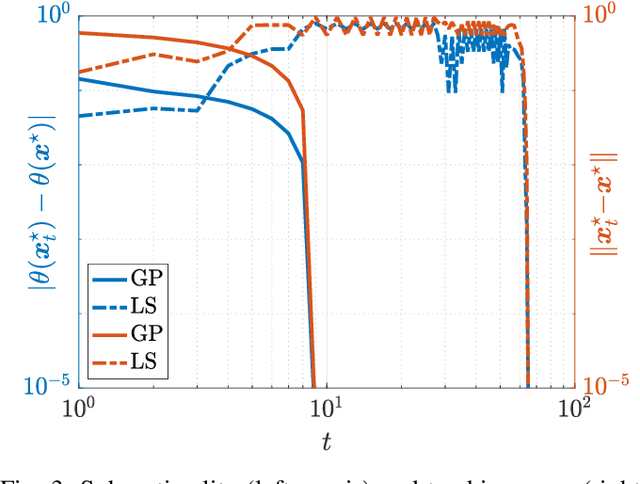

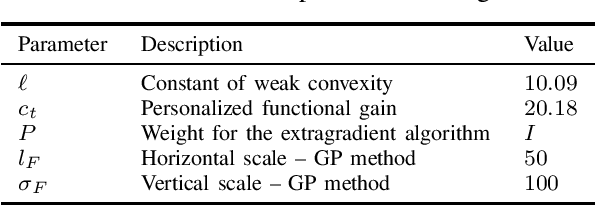

Abstract: We consider quadratic, nonmonotone generalized Nash equilibrium problems with symmetric interactions among the agents, which are known to be potential. As may happen in practical cases, we envision a scenario in which an explicit expression of the underlying potential function is not available, and we design a two-layer Nash equilibrium seeking algorithm. In the proposed scheme, a coordinator iteratively integrates the noisy agents' feedback to learn the pseudo-gradients of the agents, and then designs personalized incentives for them. On their side, the agents receive those personalized incentives, compute a solution to an extended game, and then return feedback measures to the coordinator. We show that our algorithm returns an equilibrium in case the coordinator is endowed with standard learning policies, and corroborate our results on a numerical instance of a hypomonotone game.

An optimal transport approach to data compression in distributionally robust control

May 19, 2020

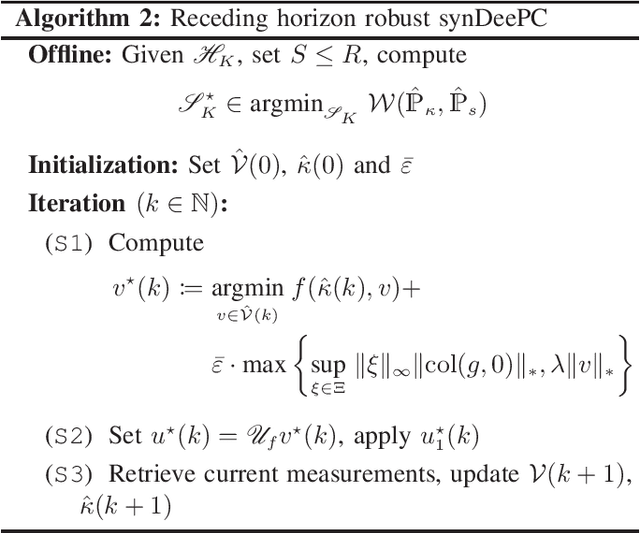

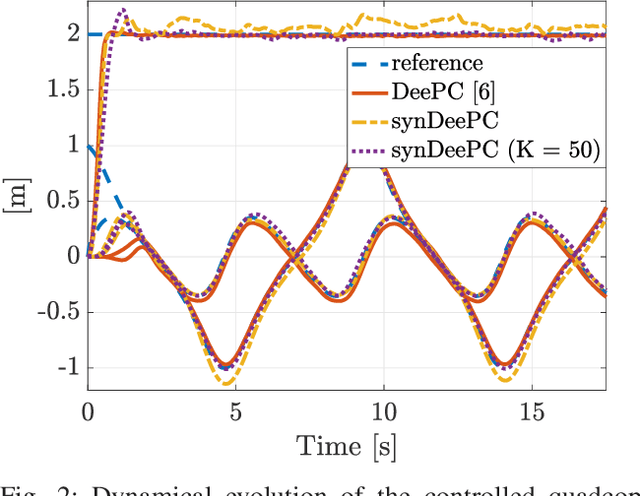

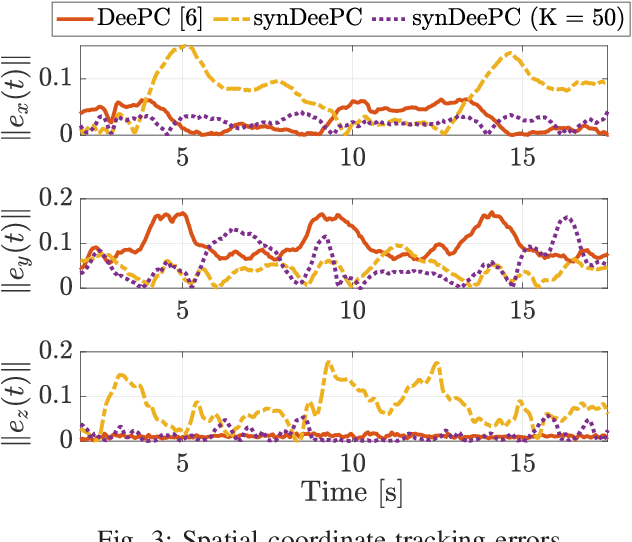

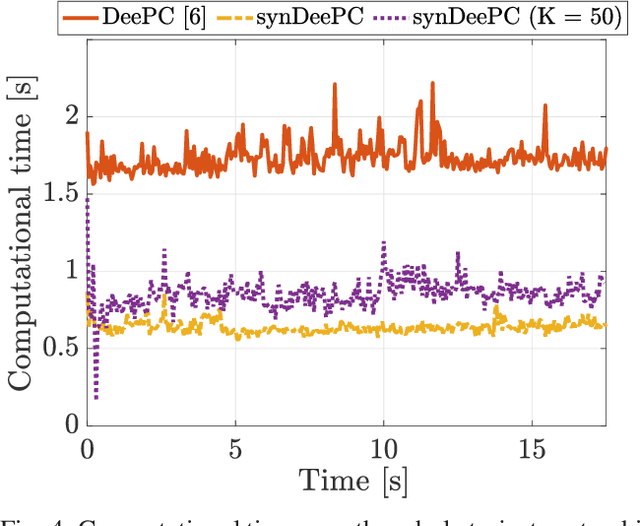

Abstract: We consider the problem of controlling a stochastic linear time-invariant system using a behavioural approach based on the direct optimization of controllers over input-output pairs drawn from a large dataset. In order to improve the computational efficiency of controllers implemented online, we propose a method for compressing this large dataset to a smaller synthetic set of representative behaviours using techniques based on optimal transport. Specifically, we choose our synthetic data by minimizing the Wasserstein distance between atomic distributions supported on both the original dataset and our synthetic one. We show that a distributionally robust optimal control computed using our synthetic dataset enjoys the same performance guarantees over an arbitrarily larger ambiguity set relative to the original one. Finally, we illustrate the robustness and control performance over the original and compressed datasets through numerical simulations.
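The Wasserstein distance between two atomic distributions can be illustrated in the simplest possible setting: scalar atoms with uniform weights, where the 1-Wasserstein distance is the integral of the absolute difference between the two quantile functions. The sketch below computes that quantity for a dataset and a smaller candidate synthetic set. The scalar setting, the uniform weights, and the function name `wasserstein1_1d` are assumptions for the example; the paper works with input-output behaviours and its compression procedure is not reproduced here.

```python
from fractions import Fraction


def wasserstein1_1d(xs, ys):
    """1-Wasserstein distance between two uniformly weighted scalar atom sets.

    Walks through the probability levels in (0, 1] at which either quantile
    function jumps and accumulates |F^{-1}(t) - G^{-1}(t)| over each interval.
    """
    xs, ys = sorted(xs), sorted(ys)
    n, m = len(xs), len(ys)
    i = j = 0
    level = Fraction(0)
    total = 0.0
    while i < n and j < m:
        x_level = Fraction(i + 1, n)  # level at which the xs quantile jumps next
        y_level = Fraction(j + 1, m)  # level at which the ys quantile jumps next
        next_level = min(x_level, y_level)
        total += float(next_level - level) * abs(xs[i] - ys[j])
        if next_level == x_level:
            i += 1
        if next_level == y_level:
            j += 1
        level = next_level
    return total


data = [0.0, 1.0, 2.0, 3.0]   # original (toy) dataset
spread = [0.5, 2.5]           # candidate synthetic set covering the data
collapsed = [1.5, 1.5]        # candidate synthetic set piled at the mean

d_spread = wasserstein1_1d(data, spread)
d_collapsed = wasserstein1_1d(data, collapsed)
```

In this toy case the spread-out candidate is closer to the data than the collapsed one, which is the kind of comparison a Wasserstein-based compression criterion relies on.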

Distributionally Ambiguous Optimization Techniques for Batch Bayesian Optimization

Apr 16, 2018

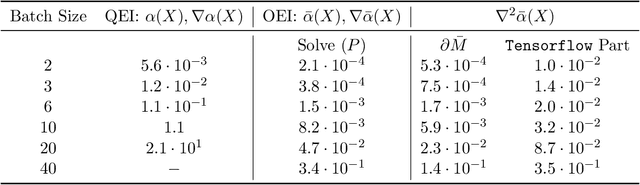

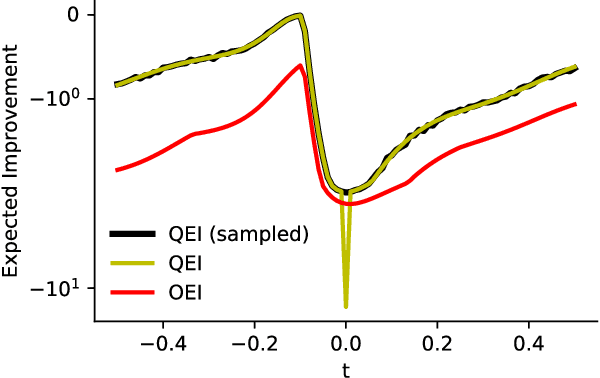

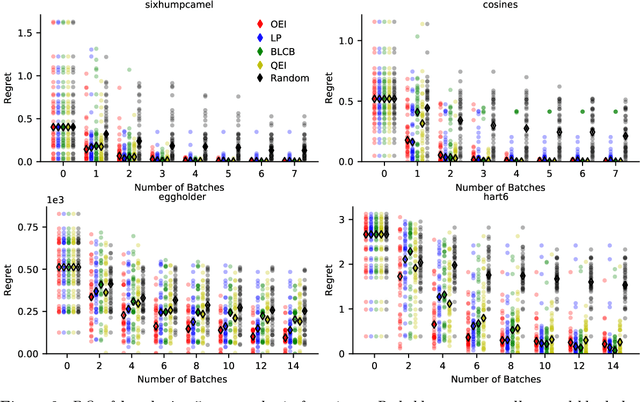

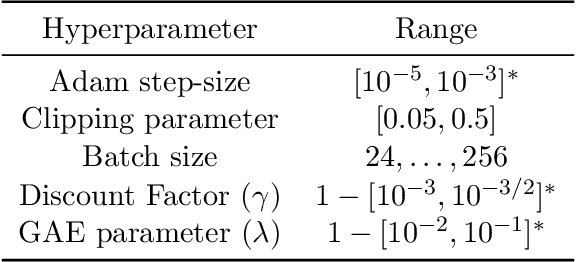

Abstract: We propose a novel, theoretically-grounded, acquisition function for Batch Bayesian optimization informed by insights from distributionally ambiguous optimization. Our acquisition function is a lower bound on the well-known Expected Improvement function, which requires evaluation of a Gaussian Expectation over a multivariate piecewise affine function. Our bound is computed instead by evaluating the best-case expectation over all probability distributions consistent with the same mean and variance as the original Gaussian distribution. Unlike alternative approaches, including Expected Improvement, our proposed acquisition function avoids multi-dimensional integrations entirely, and can be computed exactly, even on large batch sizes, as the solution of a tractable convex optimization problem. Our suggested acquisition function can also be optimized efficiently, since first and second derivative information can be calculated inexpensively as by-products of the acquisition function calculation itself. We derive various novel theorems that ground our work theoretically and we demonstrate superior performance via simple motivating examples, benchmark functions and real-world problems.
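For context on the Expected Improvement function mentioned in this abstract, the sketch below evaluates the standard closed form for a single query point with a Gaussian posterior. It is written for minimisation, which is an assumed sign convention, and it covers only batch size one; in the batch case the expectation becomes multi-dimensional, which is the integration the abstract says its bound avoids. The paper's own acquisition function is not reproduced here, and the name `expected_improvement` is illustrative.

```python
import math


def expected_improvement(mu, sigma, f_best):
    """Single-point Expected Improvement E[max(f_best - Y, 0)], Y ~ N(mu, sigma^2).

    Minimisation convention (assumed): improvement means falling below f_best.
    """
    if sigma <= 0.0:
        # Degenerate posterior: the outcome is known to be mu.
        return max(0.0, f_best - mu)
    z = (f_best - mu) / sigma
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    return (f_best - mu) * cdf + sigma * pdf


# Posterior mean equal to the incumbent value, unit standard deviation.
ei_at_incumbent = expected_improvement(mu=0.0, sigma=1.0, f_best=0.0)
```

When the posterior mean equals the incumbent, the first term vanishes and the value reduces to `sigma` times the standard normal density at zero.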
