Patrick Siarry

Learning K-U-Net with constant complexity: An Application to time series forecasting

Oct 03, 2024

Abstract:Training deep models for time series forecasting is a critical task with an inherent challenge of time complexity. While current methods generally ensure linear time complexity, our observations on temporal redundancy show that high-level features are learned 98.44% slower than low-level features. To address this issue, we introduce a new exponentially weighted stochastic gradient descent algorithm designed to achieve constant time complexity in deep learning models. We prove that the theoretical complexity of this learning method is constant. Evaluation of this method with Kernel U-Net (K-U-Net) on synthetic datasets shows a significant reduction in complexity while improving accuracy on the test set.

Evidential Deep Partial Multi-View Classification With Discount Fusion

Aug 23, 2024

Abstract:Incomplete multi-view data classification poses significant challenges due to the common issue of missing views in real-world scenarios. Despite advancements, existing methods often fail to provide reliable predictions, largely due to the uncertainty of missing views and the inconsistent quality of imputed data. To tackle these problems, we propose a novel framework called Evidential Deep Partial Multi-View Classification (EDP-MVC). Initially, we use K-means imputation to address missing views, creating a complete set of multi-view data. However, the potential conflicts and uncertainties within this imputed data can affect the reliability of downstream inferences. To manage this, we introduce a Conflict-Aware Evidential Fusion Network (CAEFN), which dynamically adjusts based on the reliability of the evidence, ensuring trustworthy discount fusion and producing reliable inference outcomes. Comprehensive experiments on various benchmark datasets reveal EDP-MVC not only matches but often surpasses the performance of state-of-the-art methods.

Anomaly Prediction: A Novel Approach with Explicit Delay and Horizon

Aug 09, 2024

Abstract:Anomaly detection in time series data is a critical challenge across various domains. Traditional methods typically focus on identifying anomalies in immediate subsequent steps, often underestimating the significance of temporal dynamics such as delay time and horizons of anomalies, which generally require extensive post-analysis. This paper introduces a novel approach for detecting time series anomalies called Anomaly Prediction, incorporating temporal information directly into the prediction results. We propose a new dataset specifically designed to evaluate this approach and conduct comprehensive experiments using several state-of-the-art time series forecasting methods. The results demonstrate the efficacy of our approach in providing timely and accurate anomaly predictions, setting a new benchmark for future research in this field.

Robust Time Series Forecasting with Non-Heavy-Tailed Gaussian Loss-Weighted Sampler

Jun 19, 2024

Abstract:Forecasting multivariate time series is a computationally intensive task challenged by extreme or redundant samples. Recent resampling methods aim to increase training efficiency by reweighting samples based on their running losses. However, these methods do not solve the problems caused by heavy-tailed loss distributions, such as overfitting to outliers. To tackle these issues, we introduce a novel approach: a Gaussian loss-weighted sampler that multiplies the running losses by a Gaussian distribution weight. It reduces the probability of selecting samples with very low or very high losses while favoring those close to the average loss. Because it creates a weighted loss distribution that is theoretically not heavy-tailed, it has several advantages over existing methods: 1) it relieves the inefficiency of learning redundant easy samples and the overfitting to outliers, 2) it improves training efficiency by preferentially learning samples close to the average loss. Application to real-world time series forecasting datasets demonstrates improvements in prediction quality of 1%-4%, measured by mean squared error in channel-independent settings. The code will be available online after the review.
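The reweighting idea described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the choice to center the Gaussian on the mean running loss, and the use of the standard deviation of the losses as its width are all assumptions made for illustration.

```python
import math
import random


def gaussian_loss_weights(running_losses):
    """Return sampling probabilities: each loss times a Gaussian weight.

    Assumption (not from the abstract): the Gaussian is centred on the
    mean running loss and uses the standard deviation of the losses.
    """
    n = len(running_losses)
    mean = sum(running_losses) / n
    var = sum((loss - mean) ** 2 for loss in running_losses) / n
    std = math.sqrt(var) or 1.0  # guard against identical losses
    weights = [
        loss * math.exp(-((loss - mean) ** 2) / (2.0 * std ** 2))
        for loss in running_losses
    ]
    total = sum(weights)
    if total == 0.0:
        return [1.0 / n] * n  # fall back to uniform sampling
    return [w / total for w in weights]


def sample_indices(running_losses, k, seed=0):
    """Draw k sample indices (with replacement) using the weights above."""
    rng = random.Random(seed)
    probs = gaussian_loss_weights(running_losses)
    return rng.choices(range(len(probs)), weights=probs, k=k)


# One very easy sample, eighteen average samples, one extreme outlier.
losses = [0.01] + [1.0] * 18 + [20.0]
probs = gaussian_loss_weights(losses)
batch = sample_indices(losses, k=5)
```

In this toy example the average-loss samples receive a higher probability than both the near-zero-loss sample and the outlier, which matches the behavior the abstract describes. With very few samples, a single outlier can dominate the mean and standard deviation, so a practical sampler would likely need more robust statistics.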

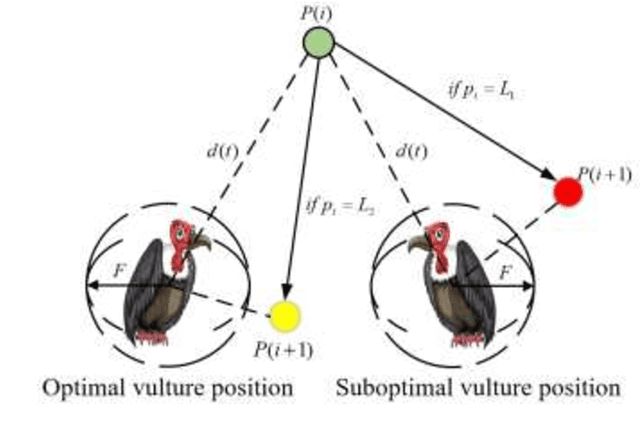

A Nonlinear African Vulture Optimization Algorithm Combining Henon Chaotic Mapping Theory and Reverse Learning Competition Strategy

Mar 26, 2024

Abstract:To alleviate the main shortcomings of the AVOA, a nonlinear African vulture optimization algorithm combining Henon chaotic mapping theory and a reverse learning competition strategy (HWEAVOA) is proposed. First, Henon chaotic mapping theory and an elite population strategy are used to improve the randomness and diversity of the vultures' initial population. Furthermore, a nonlinear adaptive incremental inertial weight factor is introduced in the location update phase to balance the exploration and exploitation abilities and to keep individuals from falling into a local optimum. The reverse learning competition strategy is designed to expand the search area for the optimal solution and strengthen the ability to jump out of local optima. HWEAVOA and other advanced comparison algorithms are used to solve classical and CEC2022 test functions. Compared with the other algorithms, the convergence curves of HWEAVOA drop faster and are smoother. These experimental results show that the proposed HWEAVOA ranks first on all test functions and is superior to the comparison algorithms in convergence speed, optimization ability, and solution stability. Meanwhile, the algorithmic complexity of HWEAVOA is at a general level, and its overall performance is competitive among swarm intelligence algorithms.
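As a rough illustration of the chaotic initialization step only, the sketch below fills an initial population from the classical Henon map, $x_{n+1} = 1 - a x_n^2 + y_n$, $y_{n+1} = b x_n$, with the standard parameters $a = 1.4$ and $b = 0.3$. The function names, the burn-in length, the starting point, and the min-max rescaling into the search bounds are assumptions; the abstract does not specify how HWEAVOA maps the sequence to positions, and the elite population strategy is not modeled here.

```python
def henon_sequence(n, a=1.4, b=0.3, x0=0.0, y0=0.0, burn_in=100):
    """Return n successive x-values of the Henon map after a burn-in."""
    x, y = x0, y0
    xs = []
    for i in range(burn_in + n):
        # Both updates use the previous x, as the map requires.
        x, y = 1.0 - a * x * x + y, b * x
        if i >= burn_in:
            xs.append(x)
    return xs


def init_population(pop_size, dim, lower, upper):
    """Build pop_size positions of length dim inside [lower, upper].

    Assumption: the chaotic values are min-max rescaled to [0, 1]
    before being mapped onto the search interval.
    """
    seq = henon_sequence(pop_size * dim)
    lo, hi = min(seq), max(seq)
    span = (hi - lo) or 1.0  # guard against a degenerate sequence
    unit = [(v - lo) / span for v in seq]
    return [
        [lower + (upper - lower) * unit[i * dim + j] for j in range(dim)]
        for i in range(pop_size)
    ]


population = init_population(pop_size=10, dim=3, lower=-5.0, upper=5.0)
```

The sequence is deterministic for a given starting point, so repeated runs yield the same population; varying `x0` and `y0` within the map's basin of attraction would give different initial populations.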

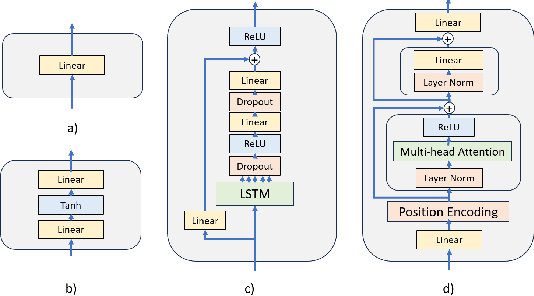

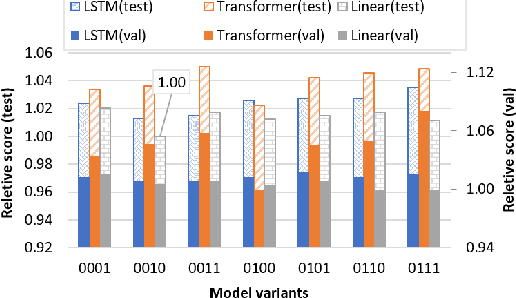

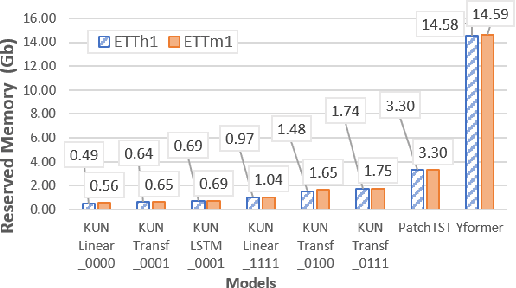

Kernel-U-Net: Hierarchical and Symmetrical Framework for Multivariate Time Series Forecasting

Jan 03, 2024

Abstract:The time series forecasting task predicts future trends based on historical information. Recent U-Net-based methods have demonstrated superior performance in predicting real-world datasets. However, the performance of these models is lower than that of patch-based models or linear models. In this work, we propose a symmetric and hierarchical framework, Kernel-U-Net, which cuts the input sequence into slices at each layer of the network and then computes them using kernels. Furthermore, it generalizes the concept of convolutional kernels in classic U-Net to accept custom kernels that follow the same design pattern. Compared to the existing linear or transformer-based solutions, our model has three advantages: 1) A small number of parameters: the parameter size is $O(\log(L)^2)$, where $L$ is the look-back window size, 2) Flexibility: its kernels can be customized and fitted to the datasets, 3) Computation efficiency: the computation complexity of transformer modules is reduced to $O(\log(L)^2)$ if they are placed close to the latent vector. Kernel-U-Net accuracy was greater than or equal to that of the state-of-the-art model on six (out of seven) real-world datasets.

DASPS: A Database for Anxious States based on a Psychological Stimulation

Jan 09, 2019

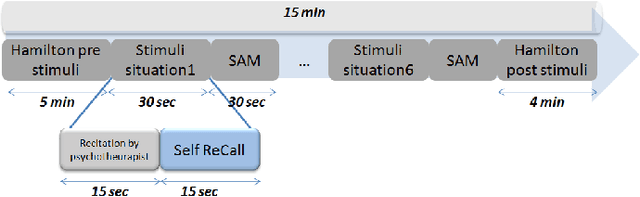

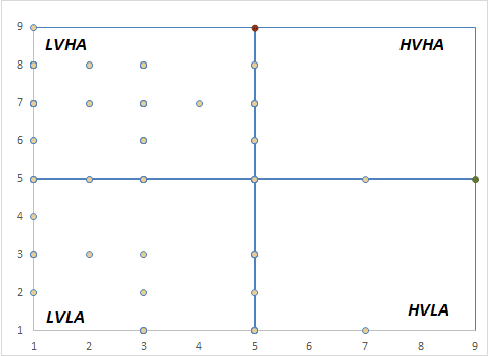

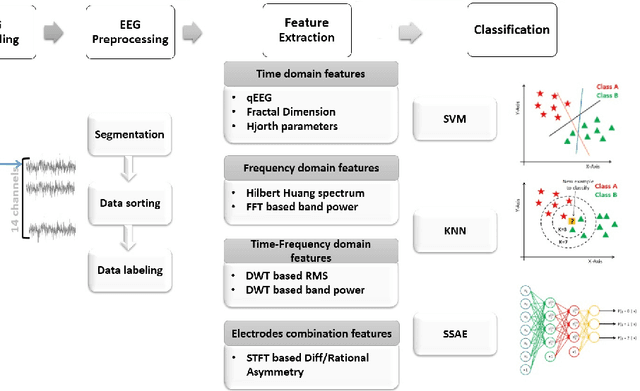

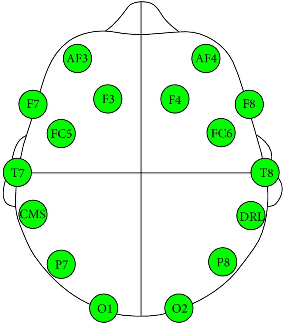

Abstract:Anxiety affects human capabilities and behavior as much as it affects productivity and quality of life. It can be considered as the main cause of depression and suicide. Anxious states are easily detectable by humans due to their acquired cognition: humans interpret the interlocutor's tone of speech, gestures, and facial expressions and recognize their mental state. There is a need for non-invasive, reliable techniques that perform the complex task of anxiety detection. In this paper, we present the DASPS database, containing recorded Electroencephalogram (EEG) signals of 23 participants during anxiety elicitation by means of face-to-face psychological stimuli. EEG signals were captured with the Emotiv Epoc headset, as it is a wireless, wearable, low-cost device. In our study, we investigate the impact of different parameters, notably: trial duration, feature type, feature combination, and number of anxiety levels. Our findings showed that anxiety is well elicited in 1 second. For instance, a stacked sparse autoencoder with different types of features achieves 83.50% and 74.60% for 2 and 4 anxiety levels detection, respectively. The presented results prove the benefits of using a low-cost EEG headset instead of medical non-wireless devices and create a starting point for new research in the field of anxiety detection.
