Pantelis Georgiou

Privacy Preserved Blood Glucose Level Cross-Prediction: An Asynchronous Decentralized Federated Learning Approach

Jun 21, 2024

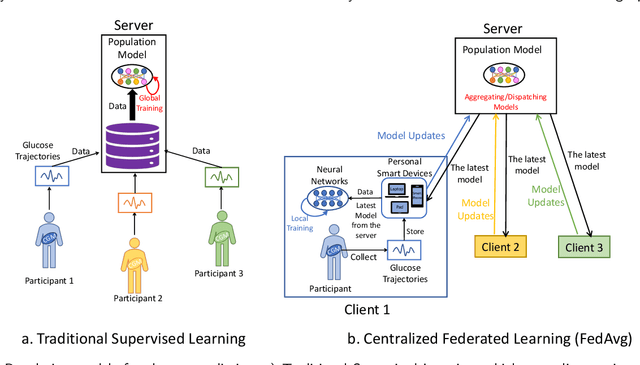

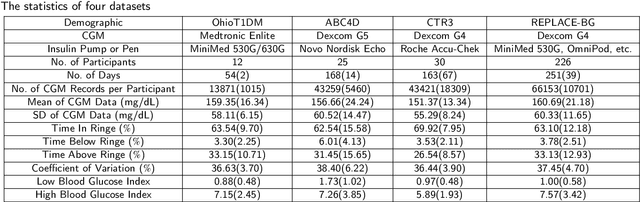

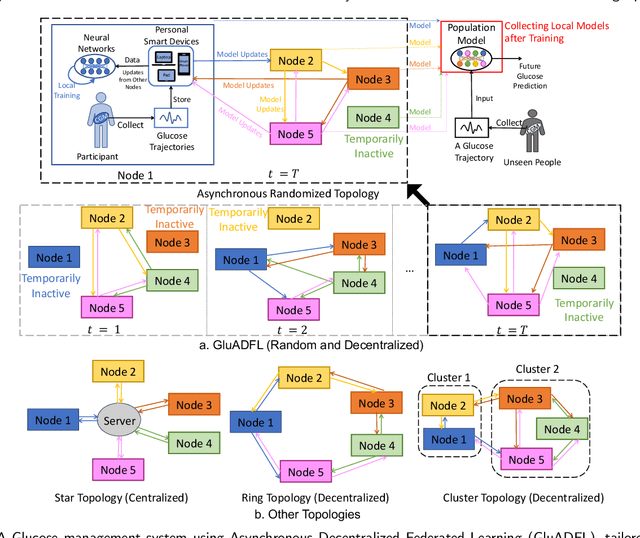

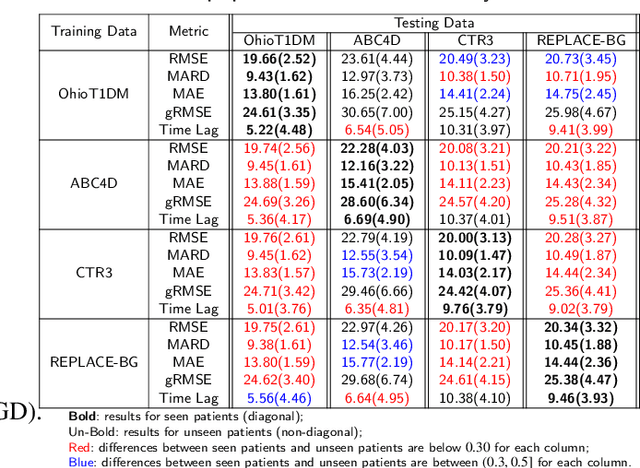

Abstract: Newly diagnosed Type 1 Diabetes (T1D) patients often struggle to obtain effective Blood Glucose (BG) prediction models because they lack sufficient BG data from Continuous Glucose Monitoring (CGM), which presents a significant "cold start" problem in patient care. Population models are a potential solution, but collecting patient data to train them in a privacy-conscious manner is challenging, especially since such data is often stored on personal devices. To protect privacy while addressing the "cold start" problem in diabetes care, we propose "GluADFL", blood Glucose prediction by Asynchronous Decentralized Federated Learning. We compared GluADFL with eight baseline methods on four distinct T1D datasets comprising 298 participants, and it demonstrated superior performance in predicting BG levels for cross-patient analysis. Furthermore, in GluADFL patients' data can be stored and shared across various communication networks, ranging from highly interconnected topologies (e.g., random, which performs best) to more structured ones (e.g., cluster and ring), making it suitable for various social networks. The asynchronous training framework supports flexible participation: varying the ratio of inactive participants, we found that it remains stable as long as fewer than 70% are inactive. Our results confirm that GluADFL offers a practical, privacy-preserving solution for BG prediction in T1D, significantly enhancing the quality of diabetes management.
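
To make the idea of asynchronous, decentralized training more concrete, here is a minimal sketch of gossip-style parameter averaging over a ring topology in which a fraction of participants sits out each round. It is not the GluADFL algorithm itself: the function names, the single scalar standing in for each patient's model weights, and the 30% inactive ratio are all assumptions made for illustration.

```python
import random


def ring_neighbors(n):
    """Ring topology: each node is linked to its two adjacent nodes."""
    return {i: [(i - 1) % n, (i + 1) % n] for i in range(n)}


def gossip_round(params, neighbors, inactive_ratio, rng):
    """One round: every active node averages with its active neighbors.

    Inactive nodes skip the round and keep their parameters unchanged,
    which mimics flexible (asynchronous) participation.
    """
    n = len(params)
    active = {i for i in range(n) if rng.random() >= inactive_ratio}
    new_params = list(params)
    for i in active:
        peers = [j for j in neighbors[i] if j in active]
        group = [params[i]] + [params[j] for j in peers]
        new_params[i] = sum(group) / len(group)
    return new_params


rng = random.Random(0)
# Hypothetical stand-in: one scalar "weight" per patient device.
params = [float(i) for i in range(8)]
neighbors = ring_neighbors(8)
for _ in range(200):
    params = gossip_round(params, neighbors, inactive_ratio=0.3, rng=rng)

# Spread between the most different nodes after training.
spread = max(params) - min(params)
print(f"spread after 200 rounds: {spread:.6f}")
```

No raw data leaves a node in this sketch; only parameters are exchanged with direct neighbors. A real system would exchange full model weights and interleave local training steps between averaging rounds.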

GARNN: An Interpretable Graph Attentive Recurrent Neural Network for Predicting Blood Glucose Levels via Multivariate Time Series

Feb 26, 2024

Abstract: Accurate prediction of future blood glucose (BG) levels can effectively improve BG management for people living with diabetes, thereby reducing complications and improving quality of life. The state of the art of BG prediction has been achieved by leveraging advanced deep learning methods to model multi-modal data, i.e., sensor data and self-reported event data, organised as multi-variate time series (MTS). However, these methods are mostly regarded as "black boxes" and not entirely trusted by clinicians and patients. In this paper, we propose interpretable graph attentive recurrent neural networks (GARNNs) to model MTS, explaining variable contributions via summarizing variable importance and generating feature maps by graph attention mechanisms instead of post-hoc analysis. We evaluate GARNNs on four datasets, representing diverse clinical scenarios. Upon comparison with twelve well-established baseline methods, GARNNs not only achieve the best prediction accuracy but also provide high-quality temporal interpretability, in particular for postprandial glucose levels as a result of corresponding meal intake and insulin injection. These findings underline the potential of GARNN as a robust tool for improving diabetes care, bridging the gap between deep learning technology and real-world healthcare solutions.

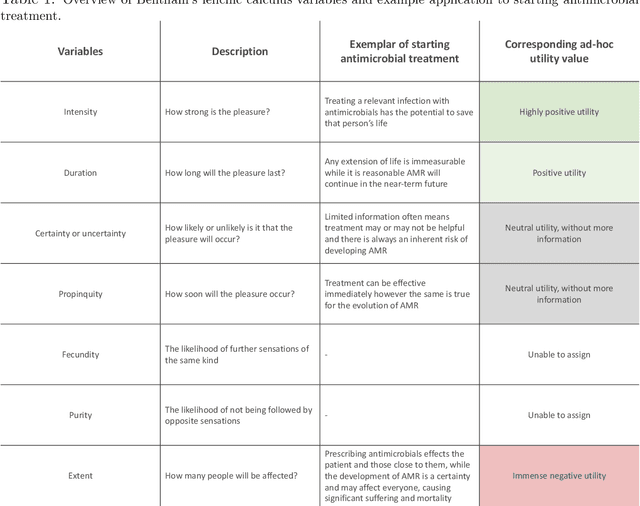

Developing moral AI to support antimicrobial decision making

Aug 12, 2022

Abstract: Artificial intelligence (AI) assisting with antimicrobial prescribing raises significant moral questions. Utilising ethical frameworks alongside AI-driven systems, while considering infection specific complexities, can support moral decision making to tackle antimicrobial resistance.

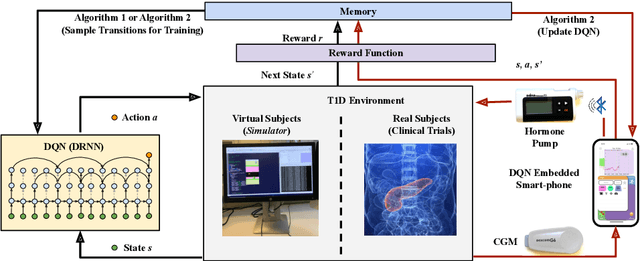

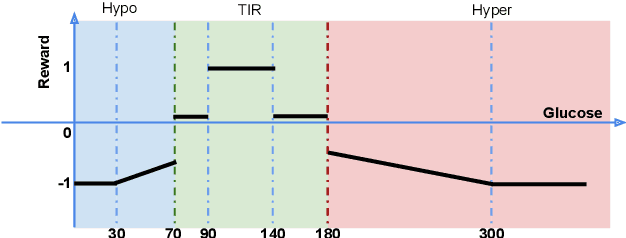

Basal Glucose Control in Type 1 Diabetes using Deep Reinforcement Learning: An In Silico Validation

May 18, 2020

Abstract: People with Type 1 diabetes (T1D) require regular exogenous infusion of insulin to maintain their blood glucose concentration in a therapeutically adequate target range. Although the artificial pancreas and continuous glucose monitoring have been proven to be effective in achieving closed-loop control, significant challenges still remain due to the high complexity of glucose dynamics and limitations in the technology. In this work, we propose a novel deep reinforcement learning model for single-hormone (insulin) and dual-hormone (insulin and glucagon) delivery. In particular, the delivery strategies are developed by double Q-learning with dilated recurrent neural networks. For designing and testing purposes, the FDA-accepted UVA/Padova Type 1 simulator was employed. First, we performed long-term generalized training to obtain a population model. Then, this model was personalized with a small data-set of subject-specific data. In silico results show that the single and dual-hormone delivery strategies achieve good glucose control when compared to a standard basal-bolus therapy with low-glucose insulin suspension. Specifically, in the adult cohort (n=10), percentage time in target range [70, 180] mg/dL improved from 77.6% to 80.9% with single-hormone control, and to 85.6% with dual-hormone control. In the adolescent cohort (n=10), percentage time in target range improved from 55.5% to 65.9% with single-hormone control, and to 78.8% with dual-hormone control. In all scenarios, a significant decrease in hypoglycemia was observed. These results show that the use of deep reinforcement learning is a viable approach for closed-loop glucose control in T1D.
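
The abstract names double Q-learning as the training rule. The paper pairs it with dilated recurrent neural networks; the sketch below shows only the underlying update in its simplest tabular form, so the dictionary-based tables, the action names, the learning rate, and the discount factor are all illustrative assumptions rather than the authors' configuration.

```python
import random


def double_q_update(q_a, q_b, state, action, reward, next_state, actions,
                    alpha=0.1, gamma=0.9, rng=random):
    """Apply one tabular double Q-learning update in place."""
    # Randomly pick which table learns; the other one evaluates.
    if rng.random() < 0.5:
        learner, evaluator = q_a, q_b
    else:
        learner, evaluator = q_b, q_a
    # Select the greedy next action with the learner table...
    best_next = max(actions, key=lambda a: learner.get((next_state, a), 0.0))
    # ...but value it with the other table to reduce overestimation bias.
    target = reward + gamma * evaluator.get((next_state, best_next), 0.0)
    old = learner.get((state, action), 0.0)
    learner[(state, action)] = old + alpha * (target - old)


# Hypothetical discrete actions and coarse glucose states.
actions = ["hold", "insulin", "glucagon"]
q_a, q_b = {}, {}
double_q_update(q_a, q_b, state="high", action="insulin", reward=1.0,
                next_state="in_range", actions=actions,
                rng=random.Random(0))
print(q_a, q_b)
```

Decoupling action selection from action evaluation is what separates this from standard Q-learning, where a single table does both and tends to overestimate values.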

A Dual-Hormone Closed-Loop Delivery System for Type 1 Diabetes Using Deep Reinforcement Learning

Oct 09, 2019

Abstract: We propose a dual-hormone delivery strategy by exploiting deep reinforcement learning (RL) for people with Type 1 Diabetes (T1D). Specifically, double dilated recurrent neural networks (RNN) are used to learn the hormone delivery strategy, trained by a variant of Q-learning, whose inputs are raw data of glucose and meal carbohydrate and whose outputs are dual-hormone (insulin and glucagon) delivery. Without prior knowledge of the glucose-insulin metabolism, we run the method on the UVA/Padova simulator. Hundreds of days of self-play are performed to obtain a generalized model, then importance sampling is adopted to customize the model for personal use. In silico, the proposed strategy achieves a glucose time in target range (TIR) of 93% for adults and 83% for adolescents given standard bolus, outperforming previous approaches significantly. The results indicate that deep RL is effective in building personalized hormone delivery strategies for people with T1D.
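
Time in target range (TIR) is the percentage of glucose readings that fall inside a target band. This abstract does not state the band, so the sketch below assumes the commonly used 70 to 180 mg/dL range; the function name and the sample readings are made up for illustration.

```python
def time_in_range(glucose_mg_dl, low=70.0, high=180.0):
    """Return the percentage of readings within [low, high] mg/dL."""
    if not glucose_mg_dl:
        raise ValueError("at least one glucose reading is required")
    in_range = sum(1 for g in glucose_mg_dl if low <= g <= high)
    return 100.0 * in_range / len(glucose_mg_dl)


# Hypothetical CGM readings in mg/dL, evenly spaced in time.
readings = [65, 90, 110, 150, 185, 200, 120, 75, 170, 180]
tir = time_in_range(readings)
print(f"TIR: {tir:.1f}%")  # 7 of the 10 readings are in range
```

Counting readings equals measuring time only when samples are evenly spaced, as they are for a CGM reporting at a fixed interval.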

Convolutional Recurrent Neural Networks for Glucose Prediction

Aug 16, 2018

Abstract: Control of blood glucose is essential for diabetes management. Current digital therapeutic approaches for subjects with Type 1 diabetes mellitus (T1DM) such as the artificial pancreas and insulin bolus calculators leverage machine learning techniques for predicting subcutaneous glucose for improved control. Deep learning has recently been applied in healthcare and medical research to achieve state-of-the-art results in a range of tasks including disease diagnosis, and patient state prediction among others. In this work, we present a deep learning model that is capable of predicting glucose levels over a 30-minute horizon with leading accuracy for simulated patient cases (RMSE = 9.38 ± 0.71 mg/dL and MARD = 5.50 ± 0.62%) and real patient cases (RMSE = 21.13 ± 1.23 mg/dL and MARD = 10.08 ± 0.83%). In addition, the model also provides competitive performance in forecasting adverse glycaemic events with minimal time lag both in a simulated patient dataset (MCC$_{hyper}$ = 0.83 ± 0.05 and MCC$_{hypo}$ = 0.80 ± 0.10) and in a real patient dataset (MCC$_{hyper}$ = 0.79 ± 0.04 and MCC$_{hypo}$ = 0.38 ± 0.10). This approach is evaluated on a dataset of 10 simulated cases generated from the UVa/Padova simulator and a clinical dataset of 5 real cases each containing glucose readings, insulin bolus, and meal (carbohydrate) data. Performance of the recurrent convolutional neural network is benchmarked against four algorithms. The prediction algorithm is implemented on an Android mobile phone, with an execution time of 6 ms on a phone compared to an execution time of 780 ms on a laptop in Python.
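
The three metrics quoted above have standard definitions: root mean squared error (RMSE), mean absolute relative difference (MARD), and the Matthews correlation coefficient (MCC). The sketch below follows those textbook definitions; the function names and sample values are illustrative, and the paper's exact evaluation protocol (for example, how hypo- and hyperglycaemic events are labelled) is not reproduced here.

```python
import math


def rmse(actual, predicted):
    """Root mean squared error, in the units of the inputs (mg/dL)."""
    squared = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared) / len(squared))


def mard(actual, predicted):
    """Mean absolute relative difference, as a percentage of the reference."""
    relative = [abs(a - p) / a for a, p in zip(actual, predicted)]
    return 100.0 * sum(relative) / len(relative)


def mcc(tp, tn, fp, fn):
    """Matthews correlation coefficient from confusion-matrix counts."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # By convention, return 0 when any marginal count is zero.
    return (tp * tn - fp * fn) / denom if denom else 0.0


# Hypothetical reference and predicted glucose values in mg/dL.
actual = [100.0, 200.0]
predicted = [110.0, 180.0]
print(f"RMSE = {rmse(actual, predicted):.2f} mg/dL")
print(f"MARD = {mard(actual, predicted):.2f}%")
print(f"MCC  = {mcc(tp=5, tn=5, fp=0, fn=0):.2f}")
```

MCC ranges from -1 to 1 and stays informative when events are rare, which makes it a reasonable choice for hypoglycaemia detection, where positive cases are a small share of all readings.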
